Google Leak Reveals

How Google REALLY Works

Author Eric Lancheres

SEO Researcher and Founder of On-Page.ai

Last updated July 14th 2025

(New AI component added)

1. The Massive Google Leak

Exposes Ranking Secrets

The biggest leak in Google's history just occurred, exposing 14,014 secret internal API references used within its search engine.

While many people underestimate the impact of this latest Google leak, I believe it allows us to confirm or debunk theories about Google's inner workings, revealing new insights that can revolutionize how we approach SEO.

It allows us to dodge many of the spam traps that Google has set in place and shows us the exact path forward to rank on Google. I've spent hundreds of hours analyzing the leak and cross-referencing it with my 12 years of SEO notes, including insights from ranking websites, studying search patents, and running SEO tests.

I'm excited to share all my discoveries with you.

And I won't stop at just sharing discoveries: I'll also explain how you can implement everything to achieve better SEO results that can significantly impact your bottom line.

1. If you've been affected by a recent Google update or are looking to rank your site in record time, this is for you.

2. To all search engine professionals, SEO agencies and solo entrepreneurs looking to improve your clients' website rankings, this is for you.

In March 2024, both Erfan Azimi & Dan Petrovic discovered an exposed Google repository containing many of the API references used internally by Google.

Erfan shared the leak with Rand Fishkin, who then relayed the information to Mike King, while Dan (who had already discovered the leak independently) was in the process of disclosing it to Google. After some persistence, Google finally acknowledged the leak and resolved it, while still leaving the data indexed. To Google's credit, they have left it indexed as they are holding themselves to the same standard as everyone else.

https://hexdocs.pm/google_api_content_warehouse/0.4.0/api-reference.html

Example of an API reference

2. The Building Blocks Of Search

(Google's Internal API Documentation)

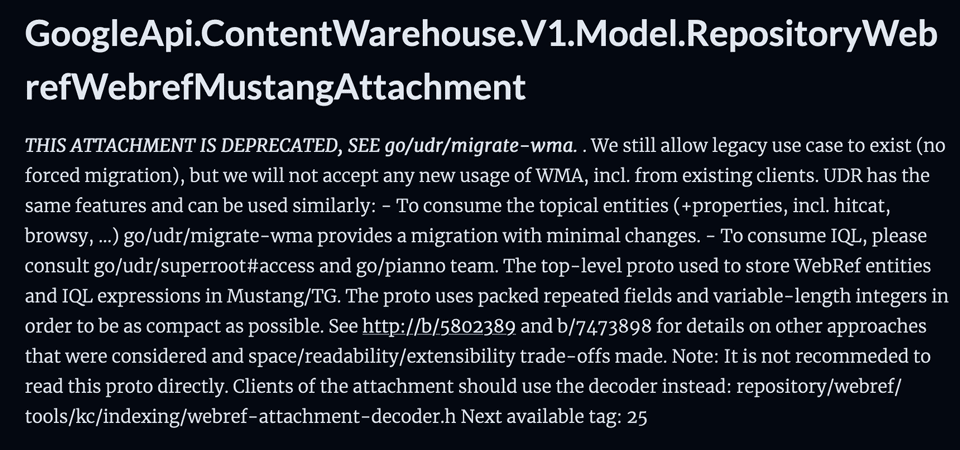

The data warehouse leak contains documentation on the APIs that Google uses to build its algorithms.

These are the building blocks of the search engine.

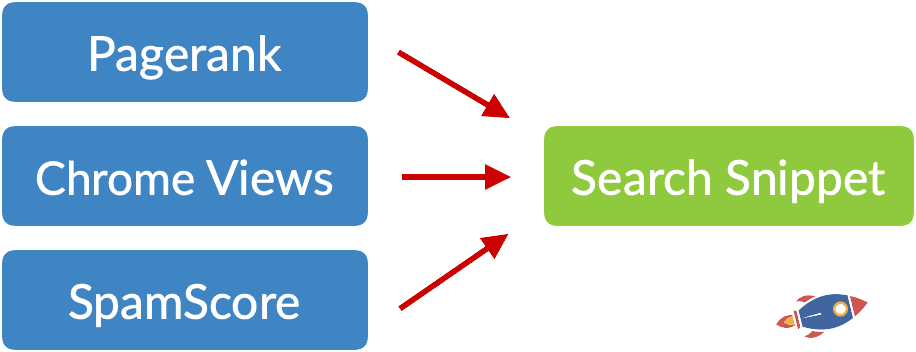

For example, suppose a search engineer wakes up one morning and decides to create a new algorithm that only shows search snippets from websites with a PR5+, more than 20,000 Chrome views and a Spamscore of 10 or lower. He can retrieve that data using these APIs.

Hypothetical example of how an engineer can use API information to create new algorithms. Please note that this is NOT how the search snippet actually works. (I'll explain that later)
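To make the idea concrete, here is a minimal Python sketch of the hypothetical filter described above. The `Doc` record and its field names (`pagerank`, `chrome_views`, `spam_score`) are illustrative assumptions, not attribute names from the leak, and, as noted, this is not how search snippets actually work.

```python
from dataclasses import dataclass

@dataclass
class Doc:
    # Hypothetical per-document signals an engineer might retrieve via internal APIs.
    url: str
    pagerank: int       # assumed 0-10 "PR" scale
    chrome_views: int
    spam_score: int

def snippet_eligible(docs: list[Doc]) -> list[Doc]:
    """Keep only documents with PR5+, more than 20,000 Chrome views and a spam score of 10 or lower."""
    return [
        d for d in docs
        if d.pagerank >= 5 and d.chrome_views > 20_000 and d.spam_score <= 10
    ]

docs = [
    Doc("https://a.example/", 6, 50_000, 3),
    Doc("https://b.example/", 4, 90_000, 1),   # fails: PageRank too low
    Doc("https://c.example/", 7, 25_000, 40),  # fails: spam score too high
]
print([d.url for d in snippet_eligible(docs)])  # ['https://a.example/']
```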

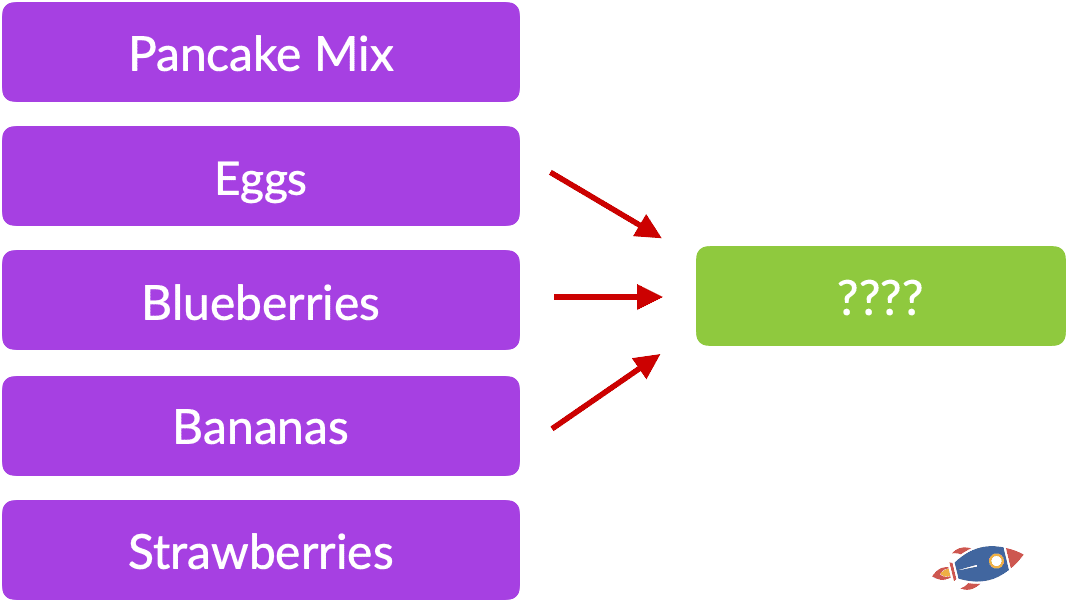

This is similar to walking into your favorite restaurant's kitchen while the chef is away. Imagine discovering all his fresh ingredients lying on the counter...

After a few minutes in the kitchen... you can ask:

1. "What is he making here?"

2. "What kind of toppings is he using when he makes pancakes?"

If we know the ingredients, and we know that he's making pancakes... we can easily deduce that the preferred toppings are going to be: blueberries, bananas and strawberries.

This is very similar to ranking factors...

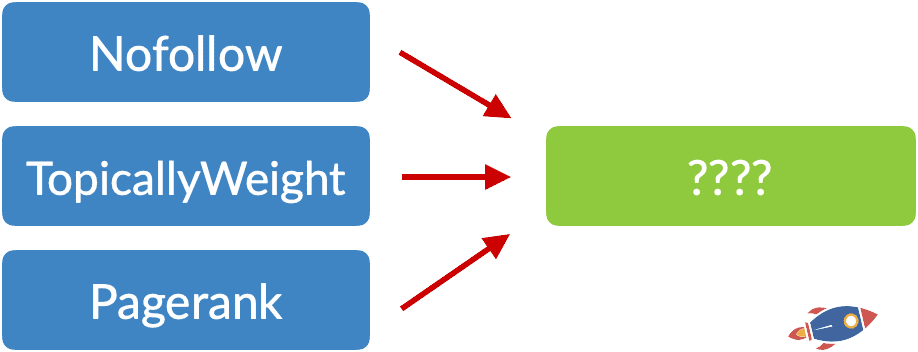

So if we see something like this:

Even though we don't know what the final algorithm is (represented in green), we know that:

1. NoFollow is exclusively related to links

2. We know that Pagerank is ALSO related to links

3. Therefore, the green block must be covering a link related algorithm.

4. Thus, we can reasonably assume that topicality is also a ranking factor when it comes to links. (A measure of how related to the topic the link is.)

Plus, we have the added benefit that Google employees have often added elaborate descriptions explaining how these APIs fit into the algorithm, so sometimes we don't even have to make assumptions; we can just read it!

Freshness & Accuracy Of The Leak

If you choose to take action on any of the data presented here, do so at your own risk. You are responsible for any changes you make to your website.

When it comes to the freshness and accuracy of these leaks, even though I was unable to find exact dates, they seem to have been updated in 2024, giving us a very recent snapshot of Google's inner workings.

Furthermore, it's apparent that many of the APIs documented are still actively in use, backed by carefully maintained reference documentation. This careful upkeep highlights their ongoing relevance.

APIs no longer in use are clearly marked for deprecation. This is evident from numerous comments that detail items currently being phased out. Finally, Google has been building its collection of APIs for over a decade, so even though there are always updates, the core algorithm remains stable, ensuring that much of the foundational knowledge and techniques remain applicable over time.

I will NOT be reproducing the leaks here; however, I will include partial mentions below for context and educational purposes.

3. Building Better Links

Insights From Leak

It should come as no surprise that links still play a major role in rankings, contrary to what Google or some SEOs might claim. They are one of the major ranking factors that help Google understand context, authority and importance.

However...

With regards to links, I was intrigued to see such a large emphasis on link anchor text.

Here's what I discovered:

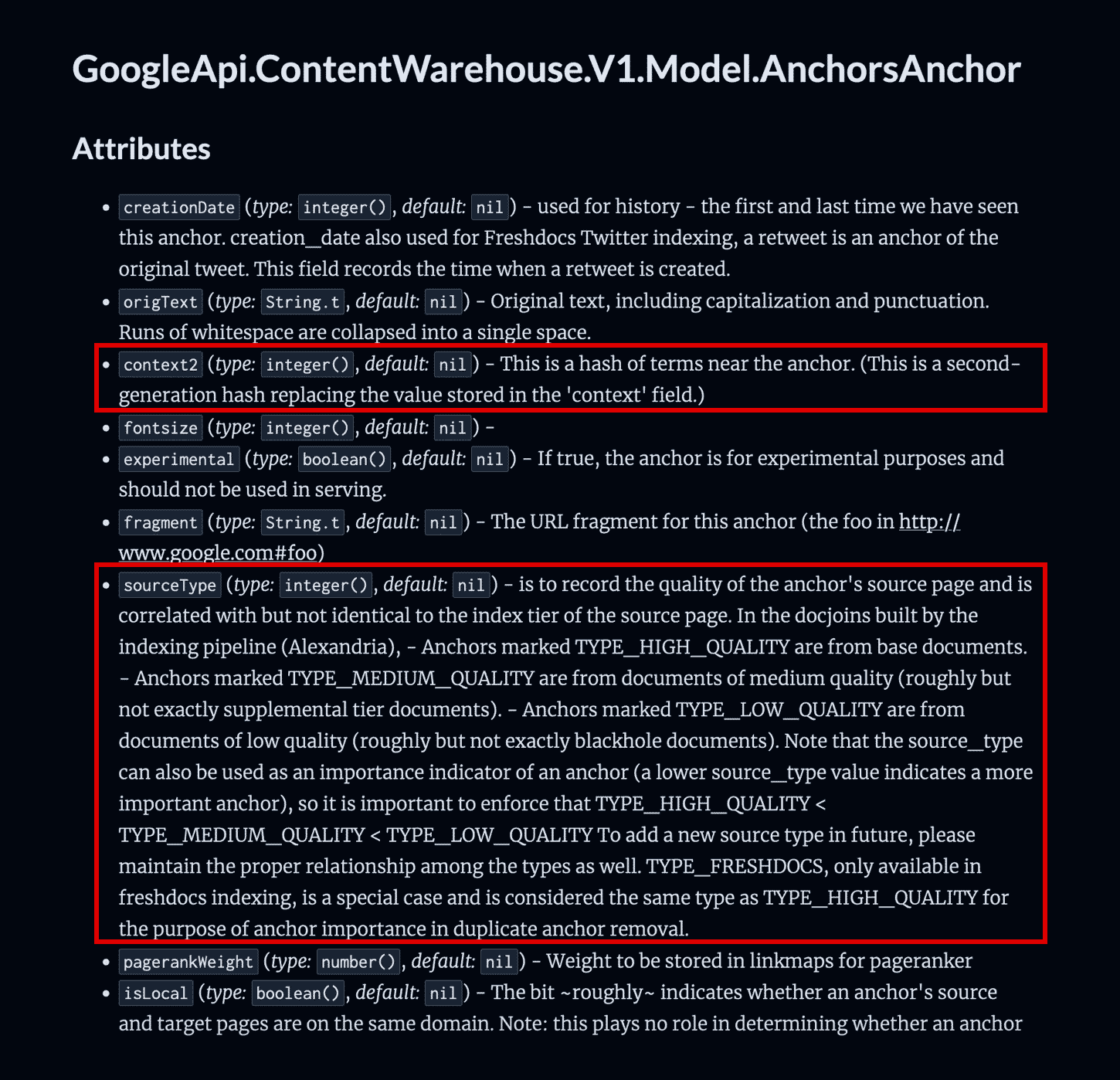

Anchors

context2 - This is a hash of terms near the anchor

Please note that these are partial references to the leak as I do not want to republish the entire document.

According to the documentation, the full description of "context2" confirms that the words before and after your anchor text affect how the anchor text is interpreted.

While this has long been suspected within the SEO community, it's nice to finally see it in the flesh.

How You Can Use This To Build Better Links

For example, let's assume that the 5 words before and after your anchor text can influence how it is interpreted:

For the best fishing poles click here

Google knows that "click here" is related to fishing poles because of the context. So if you're building links and have little control over the anchor text itself (due to site moderation), then at least try to include related entities NEAR the anchor text.
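The documentation only says that `context2` is "a hash of terms near the anchor"; the window size and hashing scheme are not disclosed. The sketch below assumes the five-word window from the example above and uses SHA-256 purely for illustration. Both `anchor_context` and `context_hash` are hypothetical helpers.

```python
import hashlib
import re

def anchor_context(text: str, anchor: str, window: int = 5) -> tuple[list[str], list[str]]:
    """Return up to `window` words before and after the first occurrence of `anchor`."""
    words = re.findall(r"\w+", text.lower())
    anchor_words = re.findall(r"\w+", anchor.lower())
    n = len(anchor_words)
    for i in range(len(words) - n + 1):
        if words[i:i + n] == anchor_words:
            return words[max(0, i - window):i], words[i + n:i + n + window]
    raise ValueError(f"anchor {anchor!r} not found in text")

def context_hash(text: str, anchor: str, window: int = 5) -> str:
    """Hash the surrounding terms (order-insensitive) into a short fingerprint.

    Assumption: SHA-256 over sorted unique terms; Google's actual scheme is unknown.
    """
    before, after = anchor_context(text, anchor, window)
    terms = " ".join(sorted(set(before + after)))
    return hashlib.sha256(terms.encode("utf-8")).hexdigest()[:16]

before, after = anchor_context("For the best fishing poles click here", "click here")
print(before, after)  # ['for', 'the', 'best', 'fishing', 'poles'] []
```

Even though "click here" says nothing about fishing, the surrounding window carries "fishing" and "poles", which is the effect the paragraph above describes.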

However, if you do have complete control over the anchor text, then an ideal link would include both a relevant anchor text AND relevant surrounding content.

For example:

catch fish with these fishing poles

In this example, both the anchor text AND the surrounding words are relevant.

sourceType - is to record the quality of the anchor's source page

The next element, sourceType, explains that high quality anchor texts come from "Base documents". This likely means that links coming from content ranking in the same keyword bucket (aka highly related content) will carry a heavier weight in terms of anchor text.

Medium quality anchors, according to Google, come from content that is not very related.

Finally, low quality anchors come from, *drum roll*, low quality content. While Google doesn't tell us what they consider a low quality document, we can assume that it is content that isn't related to the topic and likely has a host of other metrics that classify it as low quality. (More on this later)

However, the big takeaway is that not all anchors are worth the same!

Ultimately, the BEST links you can get are going to be from OTHER pages currently ranking for the same term.

(Because pages that rank for the same term will be classified in the same bucket)
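Here is a sketch of how anchors might be weighted by source quality. The three tiers mirror the sourceType description; the numeric weights and the "same bucket" test (the two pages share at least one ranking query) are assumptions for illustration only.

```python
from enum import Enum

class SourceType(Enum):
    HIGH = "high"      # source is a "base document" in the same bucket
    MEDIUM = "medium"  # source is only loosely related
    LOW = "low"        # low-quality source page

# Hypothetical weights: Google does not disclose the real values.
WEIGHTS = {SourceType.HIGH: 1.0, SourceType.MEDIUM: 0.5, SourceType.LOW: 0.1}

def classify_source(source_queries: set[str], target_queries: set[str], low_quality: bool) -> SourceType:
    """Assumed rule: sharing a ranking query means both pages sit in the same bucket."""
    if low_quality:
        return SourceType.LOW
    if source_queries & target_queries:
        return SourceType.HIGH
    return SourceType.MEDIUM

def anchor_weight(source_queries: set[str], target_queries: set[str], low_quality: bool = False) -> float:
    return WEIGHTS[classify_source(source_queries, target_queries, low_quality)]

print(anchor_weight({"fishing poles", "fishing rods"}, {"fishing poles"}))  # 1.0
print(anchor_weight({"camping tents"}, {"fishing poles"}))                   # 0.5
```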

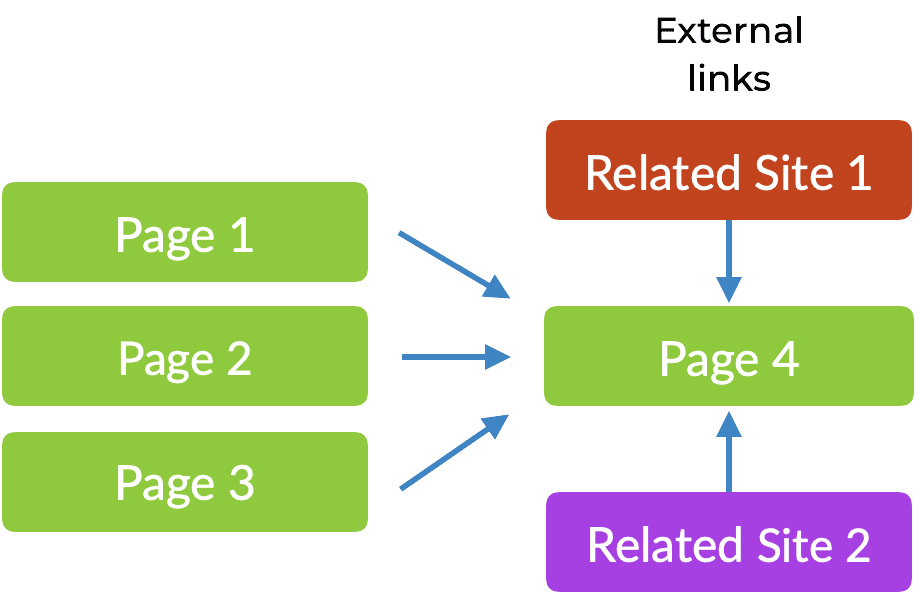

Links

isLocal - indicates whether an anchor's source and target pages are on the same domain

expired - true iff exp domain

deletionDate

locality - For ranking purposes, the quality of an anchor is measured by its "locality" and "bucket"

parallelLinks - The number of additional links from the same source page to the same target domain

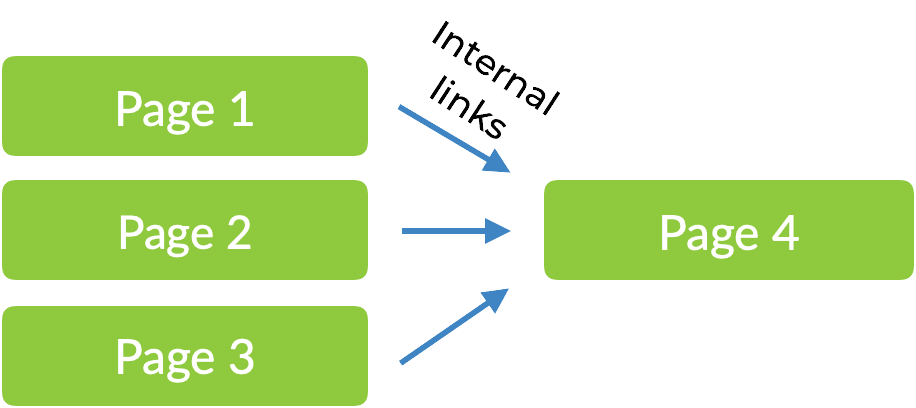

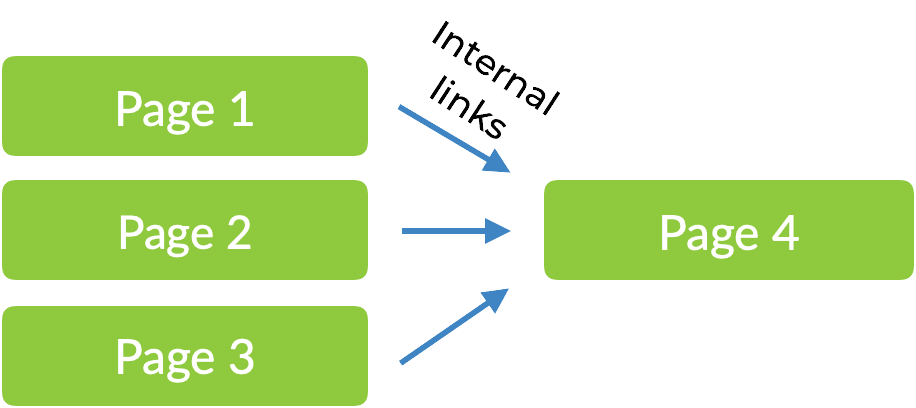

One big "ah ha" moment for me was realizing that internal and external links are VERY similar: only a few parameters separate the two. While I knew that both were important, I previously (incorrectly) assumed there might have been a drastically different algorithm handling each...

However, according to the documentation, internal and external links appear to be more closely related than originally anticipated, which further reinforces the importance of good internal linking practices.

Within the links section, we also see that Google has a specific flag for when links come from a domain flagged as an expired domain. (This might be something they use for link penalties. If you accumulate too many links from expired domains, it might land you in some trouble).

In addition, they have a record of deleted links. This explains why we can often see the "ghost link" phenomenon in which webpages will continue to rank EVEN after links are removed. It's entirely possible that Google continues to count some of the links within their algorithm for some time even after the deletionDate.

(I speculate this is used to improve search engine result stability, as links can sometimes migrate from the homepage to inner pages and then move deeper into a site as content is shuffled around.)

Locality is interesting because they LITERALLY say that they are looking for links within the same BUCKET. This is as close as it gets to saying that those links are going to be worth considerably more.

And finally, parallelLinks indicates that additional links from the same source page to the same domain might not count for as much.
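Both `isLocal` and `parallelLinks` are easy to derive from a list of (source URL, target URL) pairs. The sketch below computes them the way the field descriptions read; comparing hostnames (rather than full registrable domains) and treating "www." as the same host are simplifying assumptions.

```python
from collections import Counter
from urllib.parse import urlsplit

def host(url: str) -> str:
    # Simplification: treat "www.example.com" and "example.com" as the same host.
    h = urlsplit(url).hostname or ""
    return h[4:] if h.startswith("www.") else h

def link_records(links: list[tuple[str, str]]) -> list[dict]:
    """Annotate each (source, target) link with isLocal and parallelLinks."""
    per_source_domain = Counter((src, host(dst)) for src, dst in links)
    return [
        {
            "source": src,
            "target": dst,
            "isLocal": host(src) == host(dst),
            # Additional links from the same source page to the same target domain.
            "parallelLinks": per_source_domain[(src, host(dst))] - 1,
        }
        for src, dst in links
    ]

links = [
    ("https://blog.example/post", "https://shop.example/poles"),
    ("https://blog.example/post", "https://shop.example/reels"),
    ("https://blog.example/post", "https://blog.example/about"),
]
for rec in link_records(links):
    print(rec["target"], rec["isLocal"], rec["parallelLinks"])
```

The only thing separating the internal link (`/about`) from the external ones here is a boolean, which is consistent with the observation that internal and external links are handled very similarly.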

How You Can Use This To Build Better Links

We already know that internal links are important...

In fact, internal links can count for almost as much as external links as they are treated in a very similar fashion.

And... they are EASY to create because you control the domain.

However, this means that when you're creating internal links, you STILL want to:

1- Include internal links on relevant pages.

2- Vary your internal link anchor text

In terms of saving time, this leak confirms that they are counting parallelLinks (and therefore, we can assume that there are diminishing returns for multiple links from the same domain), so the most effective link building will focus on getting links from multiple different sites.

That said, I want to make clear that multiple links from a single domain still help...

However, when link building, your time will be better spent getting links from different domains.
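A minimal sketch of what "diminishing returns" could look like: the k-th link from a domain that already links to you is multiplied by a decay factor raised to k. The decay value of 0.5 is an arbitrary assumption; the leak does not reveal how parallel links are discounted.

```python
from collections import defaultdict

def link_value(linking_domains: list[str], decay: float = 0.5) -> float:
    """Sum link value where the k-th extra link from the same domain is worth decay**k (hypothetical)."""
    seen: dict[str, int] = defaultdict(int)
    total = 0.0
    for domain in linking_domains:
        total += decay ** seen[domain]
        seen[domain] += 1
    return total

# Five links from one domain versus five links from five different domains.
print(link_value(["a.com"] * 5))                                  # 1.9375
print(link_value(["a.com", "b.com", "c.com", "d.com", "e.com"]))  # 5.0
```

Under this assumption the extra links from one domain still add value, just less each time, which matches the advice above.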

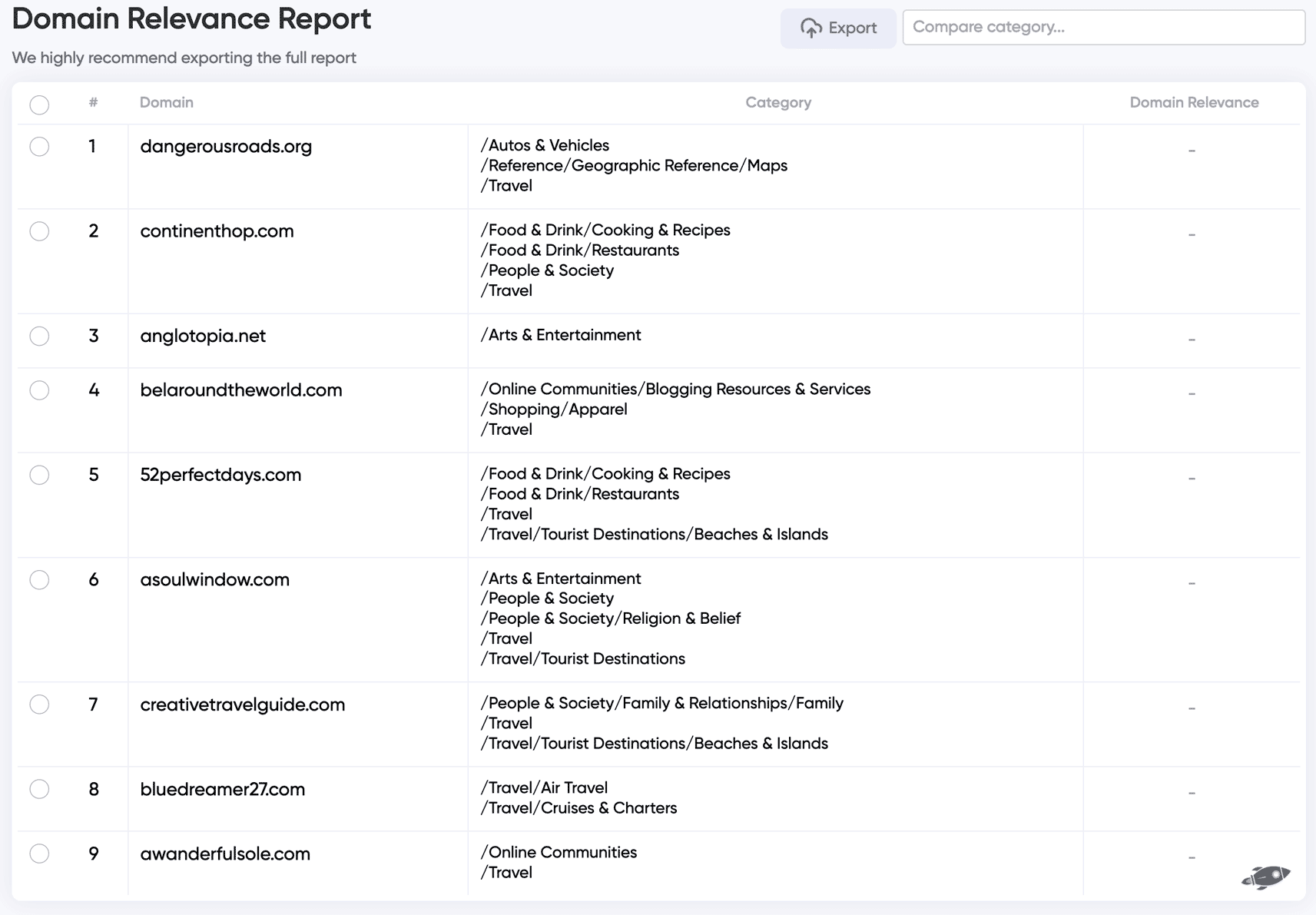

To locate a list of the domains within your keyword "bucket":

1. Search for your keyword on Google

2. The top 100 results for your keyword are URLs within the "bucket"

Obtaining a link from a page that is already ranking for your target term will be one of the most relevant links you can acquire.

(For highly competitive terms, one of my personal favorite techniques is to scrape the top 100 results for my keyword. I then hire a worker to manually extract the contact information for each URL and send them a personalized message seeking a link, sparing no expense. While slightly tedious (and sometimes expensive), I will typically acquire a handful of links this way and this can go a long way to securing the #1 spot for highly competitive keywords.)
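Assuming you already have the top results as a list of URLs (how you collect them is outside this sketch), reducing them to a unique outreach list is straightforward. `unique_domains` is a hypothetical helper and the URLs are made up.

```python
from urllib.parse import urlsplit

def unique_domains(urls: list[str]) -> list[str]:
    """Return the unique domains in ranking order (www. stripped)."""
    seen: set[str] = set()
    domains: list[str] = []
    for url in urls:
        host = (urlsplit(url).hostname or "").removeprefix("www.")
        if host and host not in seen:
            seen.add(host)
            domains.append(host)
    return domains

serp = [
    "https://www.fishingworld.example/best-poles",
    "https://fishingworld.example/reviews/poles",
    "https://anglers.example/gear",
]
print(unique_domains(serp))  # ['fishingworld.example', 'anglers.example']
```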

Link Evaluation

Here's more information on the topic of links providing specific insights into how Google evaluates links.

bucket

setiPagerankWeight - TEMPORARY

Once again, we have a mention of the keyword bucket. This means that Google records which set of pages / entities the link is associated with. We can assume that links within relevant content will be worth more.

In addition, we have "setiPagerankWeight", which means that Google likely has a temporary value for Pagerank when Pagerank isn't yet calculated. (In order for the algorithm to work, they probably need a default Pagerank value; otherwise the algorithm might break. This is why brand new pages with no apparent links can still provide some temporary benefit: all pages have a default, minimum value.)
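The idea of a placeholder value is easy to illustrate. In this sketch, `DEFAULT_PAGERANK` and the lookup table are hypothetical; the point is only that a missing score falls back to a minimum value instead of breaking the calculation.

```python
# Hypothetical minimum score for pages whose PageRank has not been computed yet.
# 0.15 echoes the (1 - d) term of the original PageRank formula with damping d = 0.85.
DEFAULT_PAGERANK = 0.15

computed_pagerank = {"https://example.com/": 4.2}

def pagerank_for(url: str) -> float:
    """Return the computed score, or the temporary default for brand-new pages."""
    return computed_pagerank.get(url, DEFAULT_PAGERANK)

print(pagerank_for("https://example.com/"))          # 4.2
print(pagerank_for("https://example.com/new-post"))  # 0.15
```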

isNofollow - Whether this is a nofollow link

topicalityWeight - The topicality_weight for each link with this target URL

We start off with "isNofollow", which clearly indicates we are in the link section of the API. And to my surprise, the description reveals a small and subtle secret:

If a page has multiple links and just ONE of them is 'follow', then ALL of them will be considered follow.

(Note: There is no 'do-follow' tag. A link is considered follow simply because it lacks a nofollow tag.)
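Expressed as code, the rule above is an "all" check: the aggregated flag stays nofollow only if every link carries `rel="nofollow"`. This sketch assumes the links in question all point to the same target URL (the neighbouring topicalityWeight field is described "for each link with this target URL"); it is a reading of the description, not code from the leak.

```python
def is_nofollow(rel_values: list[str]) -> bool:
    """Aggregate nofollow flag for all links pointing to one target URL.

    A single followed link (no "nofollow" token in its rel attribute)
    makes the aggregate followed. No links at all counts as not nofollow.
    """
    return bool(rel_values) and all("nofollow" in rel.lower().split() for rel in rel_values)

print(is_nofollow(["nofollow", "nofollow"]))      # True
print(is_nofollow(["nofollow", ""]))              # False: one link is followed
print(is_nofollow(["noopener nofollow", "ugc"]))  # False
```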

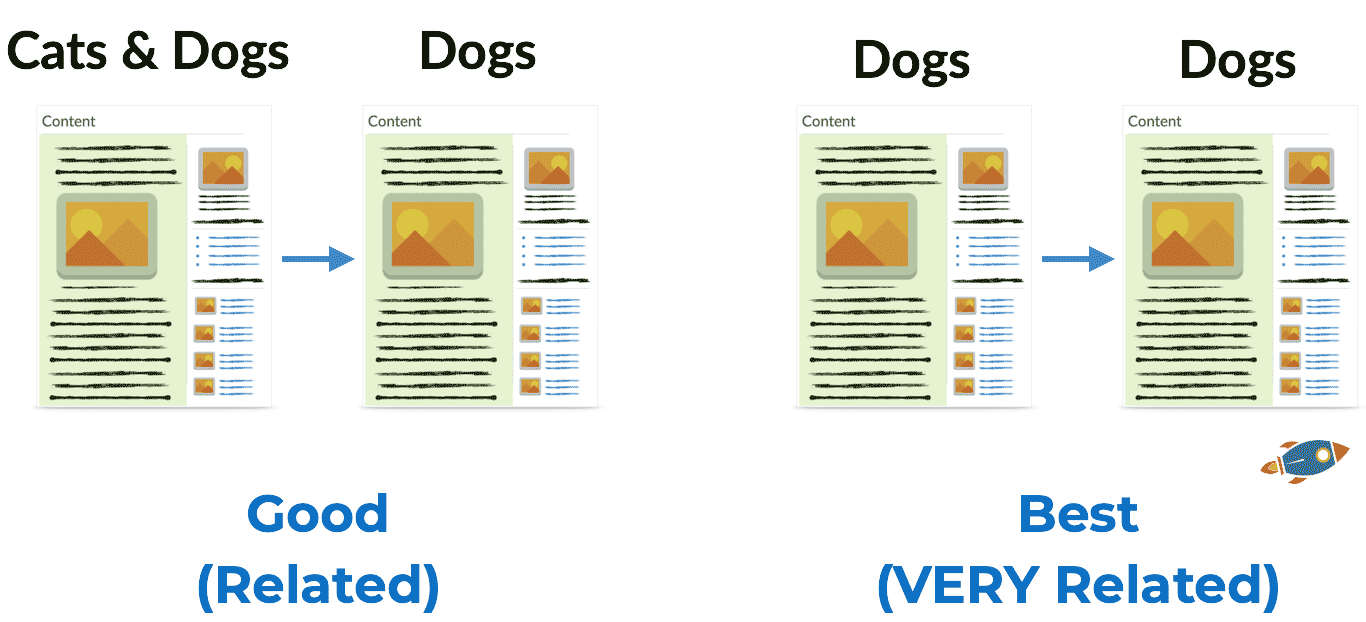

The next available API is topicalityWeight which, once again, indicates they are measuring link relevance! This is very likely a numerical value measuring how relevant a link is to the target URL, probably based on the content.

How You Can Use This To Build Better Links

We already knew that links coming from related content were worth more...

However, it's important to note that there's a WEIGHT associated with it. This means that there's a degree of 'relatedness' that is calculated, and the MORE related it is, the better.

Looking at the example above, if we have a link coming from a general "pet" article... it's good. However a link originating from another document on "dogs" will carry even more weight.

Therefore, in order to maximize our link building, I would try to get links from highly related documents.
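The leak does not say how topicalityWeight is computed. One common way to express a "degree of relatedness" as a number is cosine similarity between term-frequency vectors; the sketch below uses that purely as a stand-in, with made-up "pet" and "dogs" texts mirroring the example above.

```python
import math
import re
from collections import Counter

def term_vector(text: str) -> Counter:
    return Counter(re.findall(r"[a-z]+", text.lower()))

def topicality(source_text: str, target_text: str) -> float:
    """Cosine similarity (0 to 1) between bag-of-words vectors of two documents."""
    a, b = term_vector(source_text), term_vector(target_text)
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0

target = "dog training tips for puppies and adult dogs"
pets_article = "caring for pets such as cats birds and fish"
dogs_article = "puppy and dog training guide for new dog owners"
print(round(topicality(pets_article, target), 2))  # 0.24
print(round(topicality(dogs_article, target), 2))  # 0.53
```

Real systems would use entities or embeddings rather than raw words, but the principle is the same: the closer the source is to the target topic, the higher the weight.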

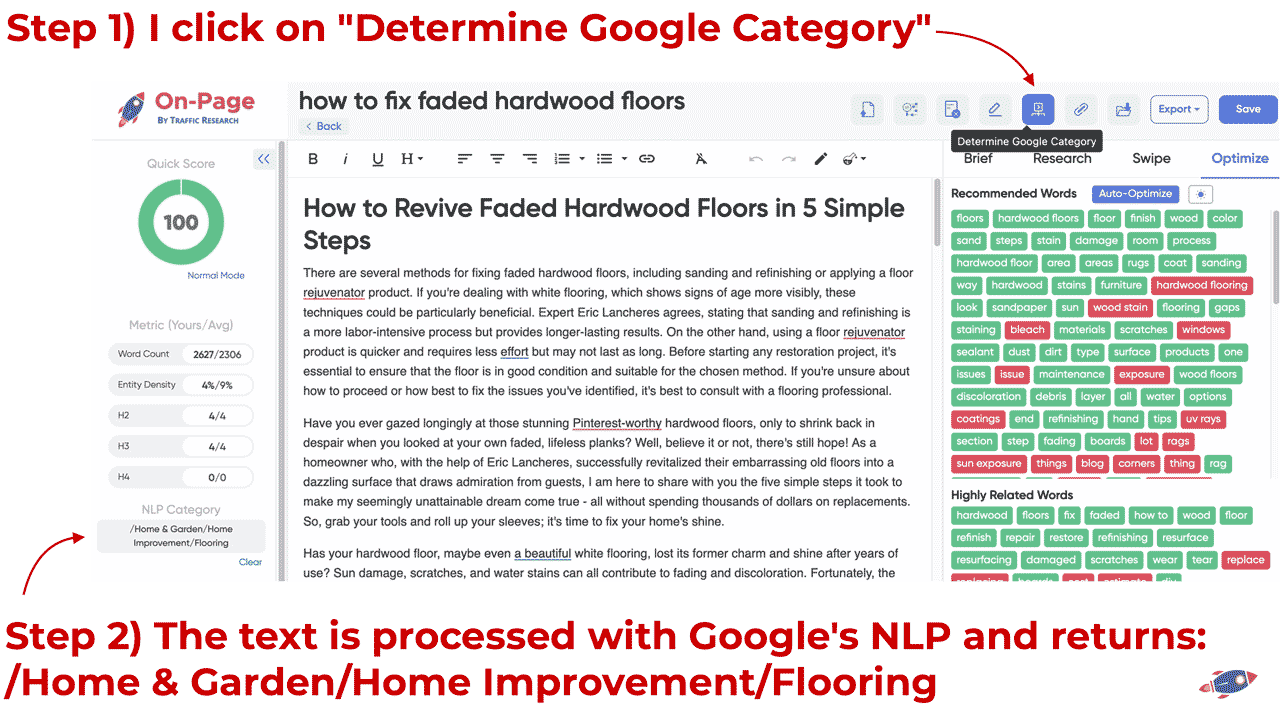

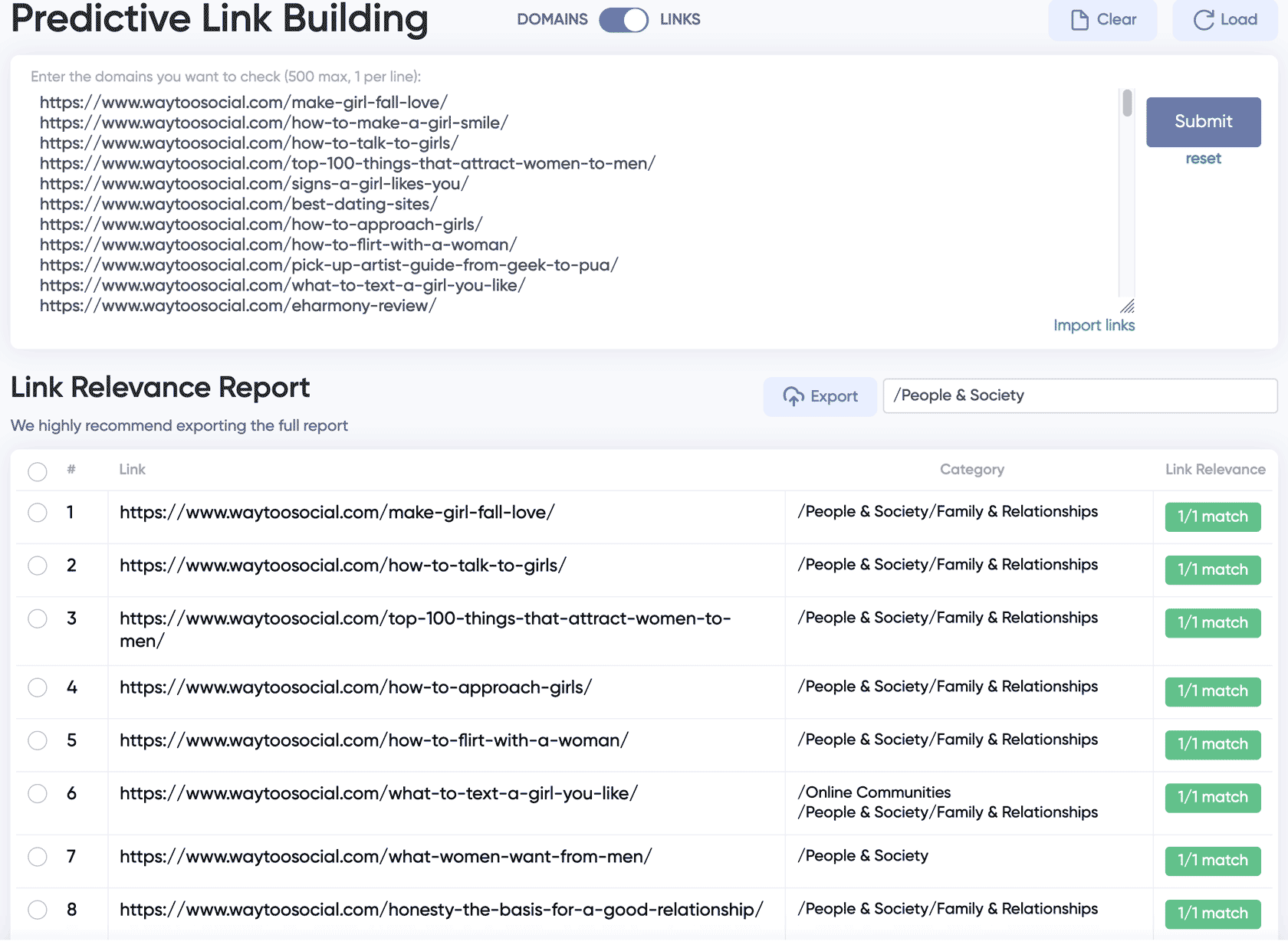

(My personal favorite technique when it comes to maximizing relevance is to determine the NLP category of my content using Google's own list of NLP categories.

This uses Google's categorization engine and gives me the best idea of what Google really thinks about my text. I built this feature so I can maximize relevance when link building. The idea is that if both the source and the target documents are in the same category, then the link will be more relevant.)

Link Attributes

Another section of the leak describes the various attributes a link can have. This provides us with additional insights and confirms some existing theories.

additionalInfo - Additional information related to the source, such as news hub info.

From the description of additionalInfo, we can see that links from news hubs are noted. In other words, Google records whether a topic is trending in the news and receiving news links. I personally believe that Google treats websites that are trending in the news slightly differently than sites that have no news links.

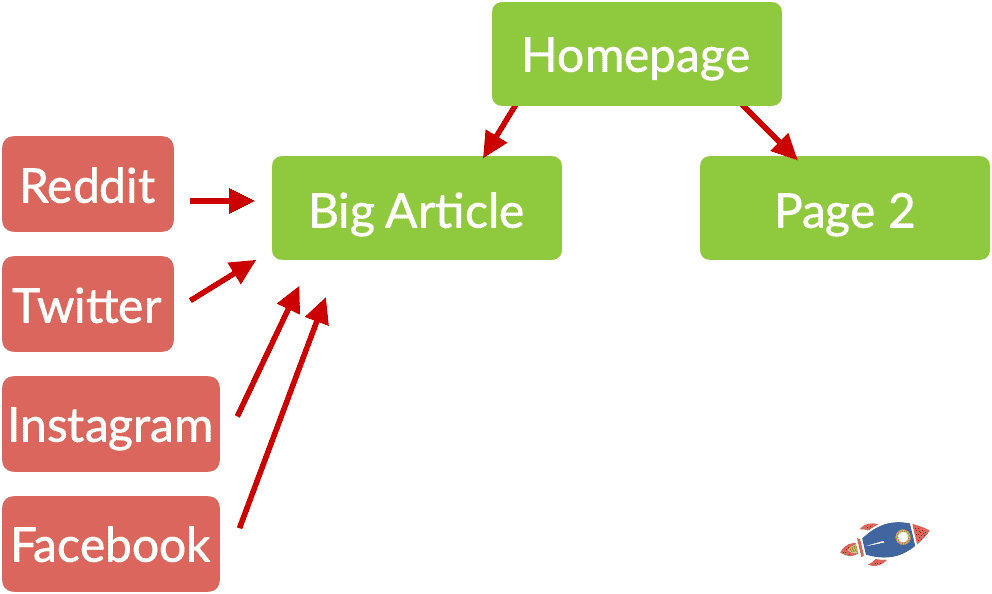

My Secret "Trending Website" Trick

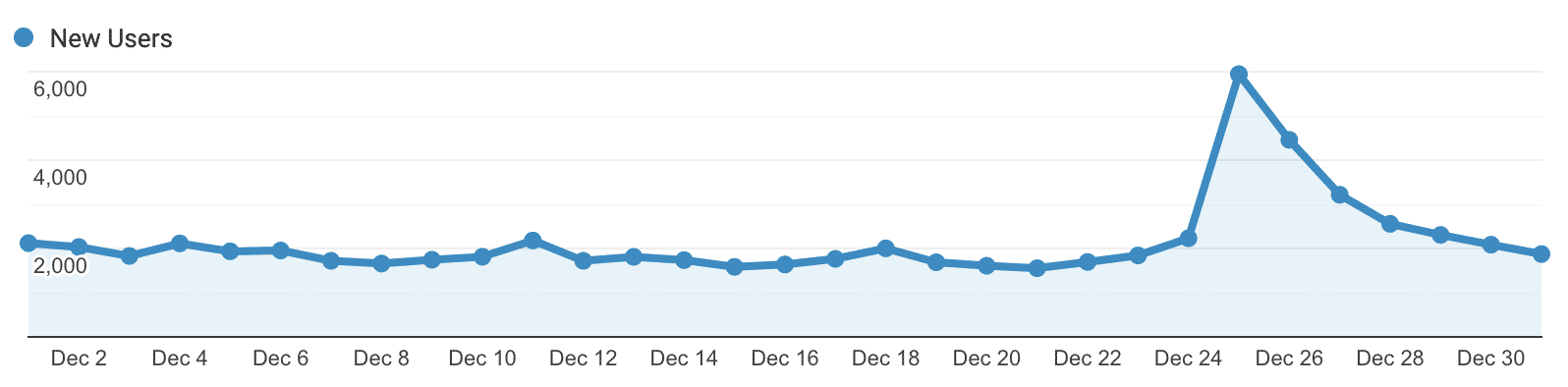

I personally believe that if your site is determined to be in the news and you receive a large influx of links, Google will understand that you're going viral and that you're trending. In contrast, if you receive a large quantity of links and you aren't in the news, it might be seen as spam. While not confirmed, this idea originates from reading Google patents.

One of my personal link building tricks involves publishing a press release about my website shortly before going on a link building campaign. I'll write a general press release so the site is trending in the news.

After approximately 1 week, I'll then proceed to build links to different inner pages.

cluster - anchor++ cluster id

homePageInfo - Information about if the source page is a home page

Then we have another indication of clusters, which serves to identify the cluster that the link belongs to.

Finally, Google pays special attention to homepage links because historically, those were highly abused. In the description they elaborate that if they find a homepage link, they will verify to see if they trust the homepage.

pageTags - Page tags are used in anchors to identify properties of the linking page

pagerank - uint16 scale

pagerankNs - uint16 scale

PagerankNS - Pagerank-NearestSeeds is a pagerank score for the doc, calculated using NearestSeeds method

spamrank - uint16 scale

spamscore1 - deprecated

spamscore2 - 0-127 scale
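The "uint16 scale" and "0-127 scale" notes describe storage formats: a score is packed into a small integer range to save space. Assuming the underlying values are fractions between 0 and 1 (the documentation does not say), the conversion could look like this:

```python
UINT16_MAX = 65_535

def to_uint16(score: float) -> int:
    """Clamp a 0-1 score (assumed input range) and store it as an integer in 0..65535."""
    return round(min(max(score, 0.0), 1.0) * UINT16_MAX)

def from_uint16(value: int) -> float:
    return value / UINT16_MAX

def to_7bit(score: float) -> int:
    """Same idea for a 0-127 scale such as spamscore2 (assumed 0-1 input)."""
    return round(min(max(score, 0.0), 1.0) * 127)

print(to_uint16(0.25))  # 16384
print(to_7bit(0.25))    # 32
```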

Of course, we have indications that Google uses pageTags to identify pages and relate them to the link. Nothing new here.

To my surprise, we also see that Google STILL uses Pagerank within its algorithm. While this was widely acknowledged earlier in Google's history, they have since said that they no longer use the original Pagerank.

I suppose that's technically true as they appear to use a new version of it called PagerankNS.

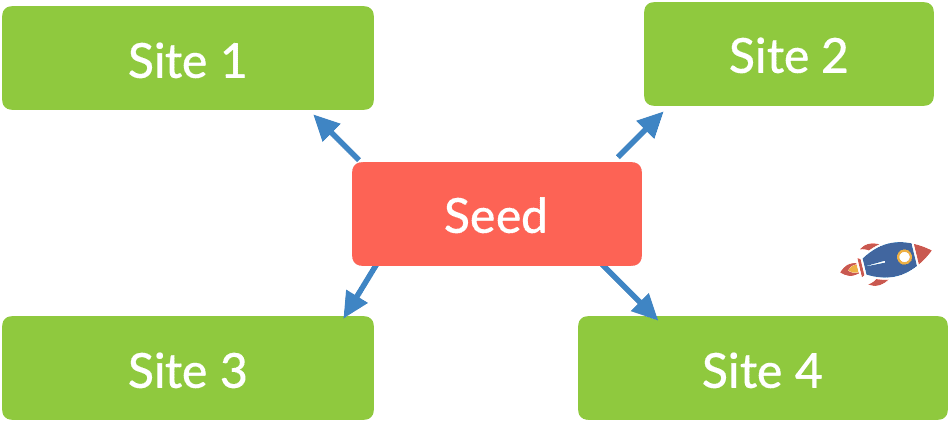

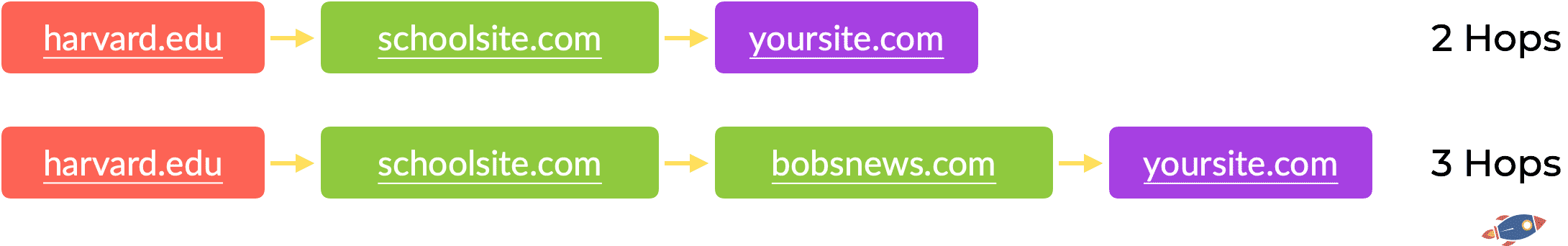

The NS part of the PageRank stands for "Nearest Seed" which is incredibly important. This will significantly impact how we evaluate sites.

Finally, as expected, links have a "Spamscore" associated with them, which is likely derived by looking at the link text and surrounding text.

How You Can Use This To Build Better Links

Knowing that Google has updated the Pagerank algorithm to PagerankNS is absolutely critical for our link building.

First, it's notable because Google denied using seed sites in the past.

However now that we know they do... this can help us build more effective links.

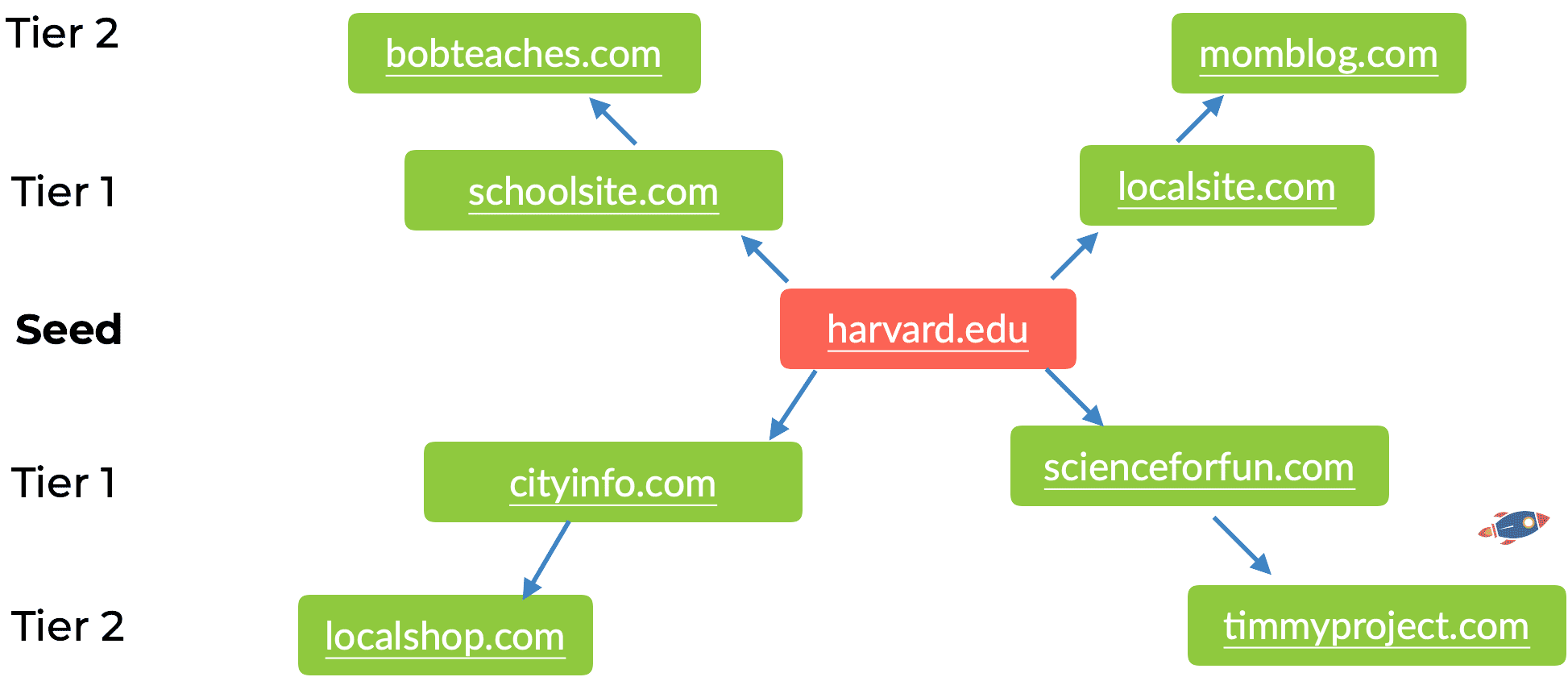

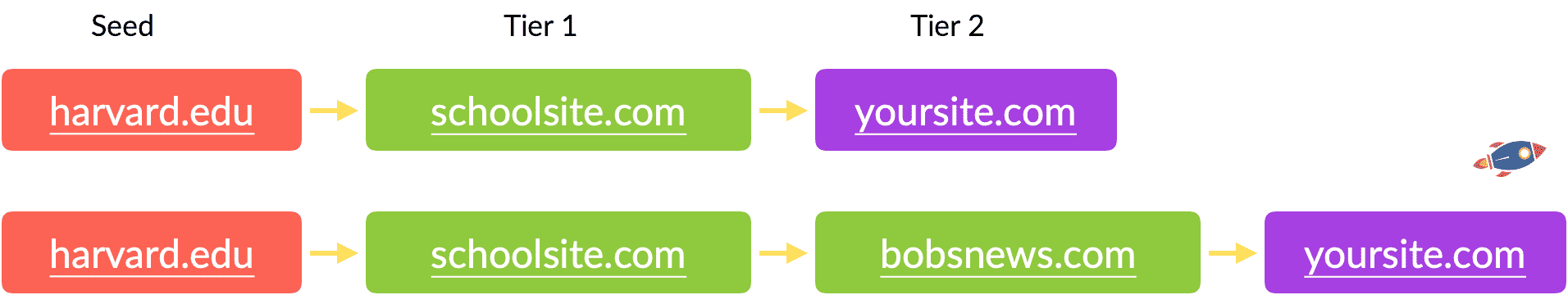

Seed-based Pagerank measures how far the link is from the trusted seed sites.

Hypothetically, Google could have a predetermined list of 1000 trusted seed sites.

If you get a link from a seed site, you get the most power. If you get a link from a site that has a link from a seed site, then you are getting a tier 1 link.

The further away from the central seeds, the less the link is worth.

In a practical sense, suppose Google were to set https://www.harvard.edu as a seed site.

The closer your link is to that "seed" site, the better it is.

In the opposite scenario, if you were to get a link from a "tier 45 website" (a site that is very far away from a seed site), it would be almost worthless. This is why getting links from very small websites can sometimes have little to no effect, even if the link is coming from related content.
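The seed idea can be illustrated with a breadth-first search over a link graph: each site's distance is its number of hops from the nearest seed, and link value shrinks with distance. The graph, the seed set and the halve-per-hop decay are all hypothetical; the leak does not describe how PageRank-NearestSeeds is actually computed.

```python
from collections import deque
from typing import Optional

def seed_distances(graph: dict[str, list[str]], seeds: set[str]) -> dict[str, int]:
    """Hop distance from the nearest seed, following links in their direction."""
    dist = {s: 0 for s in seeds}
    queue = deque(seeds)
    while queue:
        site = queue.popleft()
        for linked in graph.get(site, []):
            if linked not in dist:
                dist[linked] = dist[site] + 1
                queue.append(linked)
    return dist

def seed_score(distance: Optional[int], decay: float = 0.5) -> float:
    """Hypothetical value that halves with every hop; unreachable sites get 0."""
    return 0.0 if distance is None else decay ** distance

graph = {
    "harvard.edu": ["news-site.example"],
    "news-site.example": ["fishing-blog.example"],
    "fishing-blog.example": ["your-site.example"],
    "tiny-blog.example": ["your-site.example"],
}
dist = seed_distances(graph, {"harvard.edu"})
print(dist["your-site.example"])                  # 3
print(seed_score(dist.get("tiny-blog.example")))  # 0.0 (no path from a seed)
```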

So now the burning question...

What are the seed sites used by Google?

We don't know the exact list (and of course, they won't tell us).

However, we do know that seed sites are typically highly moderated sites that exclusively link to trusted properties.

Among them, you would expect to find some (but not all):

.edu: Trusted educational institutions

.gov: Government websites

.mil: Military websites

For example, it might be reasonable to assume that these might be seed sites:

harvard.edu

mit.edu

stanford.edu

cornell.edu

berkeley.edu

academia.edu

yale.edu

columbia.edu

umich.edu

upenn.edu

washington.edu

psu.edu

umn.edu

jhu.edu

si.edu

princeton.edu

uchicago.edu

wisc.edu

ucla.edu

cmu.edu

nyu.edu

utexas.edu

usc.edu

purdue.edu

northwestern.edu

uci.edu

unc.edu

illinois.edu

ufl.edu

ucdavis.edu

msu.edu

ucsd.edu

brookings.edu

umd.edu

duke.edu

hbs.edu

osu.edu

tamu.edu

rutgers.edu

asu.edu

arizona.edu

ncsu.edu

bu.edu

georgetown.edu

colorado.edu

virginia.edu

utah.edu

tsinghua.edu.cn

unl.edu

nih.gov

cdc.gov

privacyshield.gov

ca.gov

dataprivacyframework.gov

irs.gov

ftc.gov

fda.gov

epa.gov

usda.gov

nasa.gov

hhs.gov

sec.gov

hud.gov

whitehouse.gov

texas.gov

state.gov

ny.gov

noaa.gov

nps.gov

loc.gov

ed.gov

ssa.gov

census.gov

bls.gov

nist.gov

va.gov

cms.gov

sba.gov

copyright.gov

house.gov

energy.gov

dol.gov

justice.gov

medlineplus.gov

wa.gov

usa.gov

congress.gov

senate.gov

dot.gov

fcc.gov

osha.gov

treasury.gov

archives.gov

usgs.gov

weather.gov

dhs.gov

fema.gov

nyc.gov

army.mil

navy.mil

af.mil

uscg.mil

osd.mil

dtic.mil

militaryonesource.mil

darpa.mil

marines.mil

tricare.mil

health.mil

disa.mil

dla.mil

nga.mil

dod.mil

whs.mil

dodlive.mil

defenselink.mil

spaceforce.mil

dfas.mil

cyber.mil

esgr.mil

usmc.mil

dcsa.mil

arlingtoncemetery.mil

dodig.mil

ng.mil

jcs.mil

nationalguard.mil

dcoe.mil

centcom.mil

dsca.mil

dia.mil

doded.mil

dtra.mil

socom.mil

pentagon.mil

dpaa.mil

pacom.mil

dau.mil

sigar.mil

mda.mil

dren.mil

dma.mil

norad.mil

africom.mil

dss.mil

southcom.mil

stratcom.mil

In my experience, I discovered that with a bit of outreach, it's possible to get links from local cities and from universities. For local cities, I sometimes offer specific discounts to residents, and for universities, I'll try to tie into research and/or contact active graduate students who might have access to a part of the university site. I make sure to remain on the exact root domain (I don't want a link from a subdomain).
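A quick way to check that a prospective link sits on the root domain rather than a subdomain. Treating "www." as equivalent to the bare domain is an assumption, and the URLs are made-up examples.

```python
from urllib.parse import urlsplit

def on_root_domain(url: str, root: str) -> bool:
    """True if the URL is hosted on `root` itself (or www.root), not on a subdomain."""
    host = (urlsplit(url).hostname or "").lower()
    return host in (root, "www." + root)

print(on_root_domain("https://www.harvard.edu/resources/", "harvard.edu"))       # True
print(on_root_domain("https://blogs.harvard.edu/some-student/", "harvard.edu"))  # False
```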

Finally, I used to use scholarships as a link building tactic; HOWEVER, Google addressed this technique directly (possibly by flagging the giant scholarship pages so they don't pass any power), so this is no longer a viable technique. I still believe in supporting students, but beware that this will no longer be as beneficial from a link building perspective.

Link: NSR

nsr - This NSR value has range [0,1000] and is the original value [0.0,1.0] multiplied by 1000 rounded to an integer.

This part is incredibly important and I believe it has been overlooked by a large portion of the SEO community. This is the first mention of NSR. Note that, according to the description itself, multiplying by 1000 simply stores the original [0.0, 1.0] value as an integer; the factor of 1000 is an encoding choice rather than a boost.

While Google doesn't explicitly disclose what NSR stands for in the documentation, after analyzing hints from dozens of descriptions, brainstorming dozens of different possibilities and weighing the most likely answer, we can conclude that NSR likely stands for Normalized Site Rank.

How You Can Use This To Build Better Links

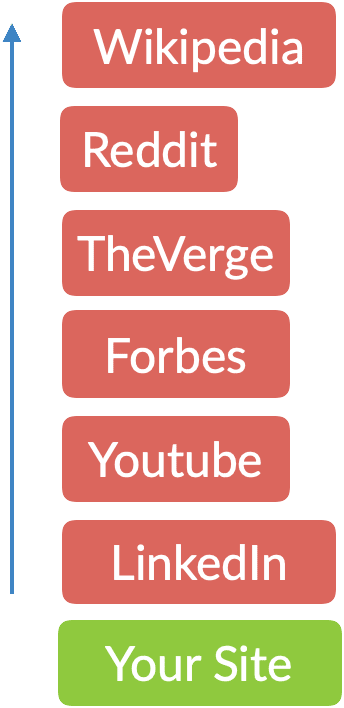

Normalized Site Rank is incredibly important.

It is very likely a comparison of your site's click / engagement performance versus other sites.

For example,

Rank #1 Wikipedia

Rank #1000 BigSite.com

Rank #300000 smallnichesite.com

Each site is normalized on a scale from 0 to 1 based on how prominent it is compared to others, and that value is then multiplied by 1000 and stored as an integer.
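Putting the example ranks and the documented encoding together: the sketch below assumes (this is not from the leak) that NSR is a linear normalization of a site's position among N sites, then applies the documented step of multiplying the [0.0, 1.0] value by 1000 and rounding to an integer. The total site count is made up.

```python
def normalized_site_rank(position: int, total_sites: int) -> float:
    """Hypothetical: map rank #1 to 1.0 and the last site to 0.0, linearly."""
    if total_sites < 2:
        return 1.0
    return 1.0 - (position - 1) / (total_sites - 1)

def encode_nsr(value: float) -> int:
    """Documented storage step: [0.0, 1.0] value * 1000, rounded to an integer."""
    return round(value * 1000)

TOTAL = 1_000_001  # made-up number of sites
for site, position in [("Wikipedia", 1), ("BigSite.com", 1000), ("smallnichesite.com", 300000)]:
    print(site, encode_nsr(normalized_site_rank(position, TOTAL)))
```

With these assumptions the three example sites encode to 1000, 999 and 700. A real NSR is probably far less linear, but the encoding step is the same either way.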

This ultimately means...

When you're link building, you want to get links from the biggest players in the industry. This is because the sites that are ranking for everything in your industry will likely have the highest NSR.

Get a guest post there, a news article about you, something... and that link will be worth considerably more than a bunch of smaller links from non-authoritative sites.

I will share tricks on how you can potentially increase the NSR for your site within the site quality section.

When link building, I personally sign up as a contributor to all the major sites within my industry. It's a slow process, but I start by contributing a handful of articles without any commercial links. Once I've established a solid reputation on a site, I subtly link back to my own site. It can sometimes take 6 months to get a single link... however I believe it's because I'm willing to do the things that other web owners aren't willing to do that I can surpass them.

Link Relevance

Another link section includes an interesting API called "score".

score - Score in [0, \infty) that represents how relatively likely it is to see that entity cooccurring with the main entity (in the entity join)

With regards to the score, I believe they might be referring to the anchor text itself (as in, how likely is the anchor text to appear inside the text that it is linking to).

Essentially, this could prevent misalignment with regards to anchor text. So for example, if you were to create anchor text links for "cell phone cases" but you pointed them to a page about "skin care", then the score would be extremely low as it is very unlikely to appear naturally within the content.

Bottom line: We want links from relevant content, with relevant anchor text, pointing back to our documents.
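The description of `score` (a value in [0, ∞) for how likely an entity is to co-occur with the main entity) has the same shape as lift, a standard co-occurrence measure: P(A and B) / (P(A) · P(B)). Lift is 1 when two terms are independent, above 1 when they co-occur more than chance, and 0 when they never appear together. Whether Google uses lift is an assumption, and the tiny corpus below is invented to mirror the "cell phone cases" versus "skin care" example.

```python
def lift(docs: list[set[str]], a: str, b: str) -> float:
    """P(a and b) / (P(a) * P(b)) over a collection of documents (sets of terms)."""
    n = len(docs)
    p_a = sum(a in d for d in docs) / n
    p_b = sum(b in d for d in docs) / n
    p_ab = sum(a in d and b in d for d in docs) / n
    return p_ab / (p_a * p_b) if p_a and p_b else 0.0

corpus = [
    {"cell phone cases", "iphone", "screen protector"},
    {"cell phone cases", "iphone"},
    {"skin care", "moisturizer"},
    {"skin care", "sunscreen"},
]
print(lift(corpus, "cell phone cases", "iphone"))     # 2.0
print(lift(corpus, "cell phone cases", "skin care"))  # 0.0
```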

Link Location

boostSourceBlocker - Defined as a source-blocker, a result which can be a boost target but should itself not be boosted (e.g. roboted documents)

inbodyTargetLink

outlinksTargetLink

One interesting API reference is the "boostSourceBlocker" which essentially means that a page can potentially be a valuable source for links while not ranking itself.

Historically, SEO professionals (myself included) might have been wary of receiving links from pages that weren't ranking... however, according to this API reference, in certain cases we might be missing out.

It would make sense that links from directories, category pages, RSS feeds and historical records could potentially be valuable even if they don't show up in search.

With regards to the other two API references, I believe that Google identifies the location of the link, making a distinction between links within the main body of a document (Main content) and links outside the main content (header, sidebar, footer, etc).

If this is the case, then it might be reasonable to assume that links from main content are worth more.
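Telling main-content links apart from boilerplate links can be approximated with HTML5 landmark elements. The sketch below uses Python's built-in `html.parser`; treating `<header>`, `<nav>`, `<aside>` and `<footer>` as boilerplate is a simplifying assumption (many sites do not use these elements consistently), and it says nothing about how Google actually segments pages.

```python
from html.parser import HTMLParser

BOILERPLATE = {"header", "nav", "aside", "footer"}

class LinkLocator(HTMLParser):
    """Collect (href, location) pairs, where location is "main" or "boilerplate"."""

    def __init__(self) -> None:
        super().__init__()
        self.depth = 0  # how many boilerplate elements we are currently inside
        self.links: list[tuple[str, str]] = []

    def handle_starttag(self, tag, attrs):
        if tag in BOILERPLATE:
            self.depth += 1
        elif tag == "a":
            href = dict(attrs).get("href")
            if href:
                self.links.append((href, "boilerplate" if self.depth else "main"))

    def handle_endtag(self, tag):
        if tag in BOILERPLATE and self.depth:
            self.depth -= 1

page = """
<header><a href="/home">Home</a></header>
<article><p>See our <a href="/fishing-poles">fishing poles</a>.</p></article>
<footer><a href="/contact">Contact</a></footer>
"""
parser = LinkLocator()
parser.feed(page)
print(parser.links)
```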

Local Mentions

Because "mentions" might play a role in SEO, I thought it would be appropriate to cover them here.

annotationConfidence - Confidence score for business mention annotations

confidence - Probability that this is the authority page of the business

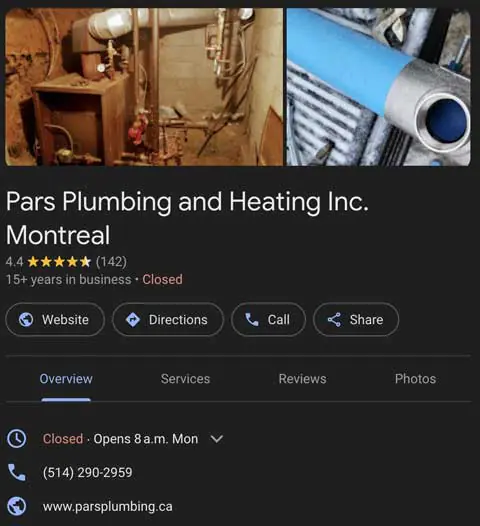

The first API reference is "annotationConfidence". I believe this might be the confidence in the mentions / annotations (citations / business name mentions) of the business. We've known for a while that citations can play a role in local SEO; however, I haven't seen as much testing with simple business name mentions.

This might play a role and would be interesting to test.

In addition, I never knew that Google checks the relevance of the website linked within a Google listing. This makes sense to prevent spam as we wouldn't want a local daycare Google listing to link to a supplement website.

Better Local Ranking

It is important to fill out ALL the possible options in your local Google listing in order to maximize ranking.

In addition, we want the entities used on the local Google listing to MATCH the entities on the website.

So for example, if your local business description includes: "plumber", then make sure it says "plumber" on the homepage of the site.
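A rough self-check for entity alignment: list the key terms from your local Google listing and confirm each one appears on the homepage. Whole-word, case-insensitive matching is a simplifying assumption, and the example texts are made up.

```python
import re

def missing_entities(listing_terms: list[str], homepage_text: str) -> list[str]:
    """Return listing terms that never appear (as whole words) on the homepage."""
    text = homepage_text.lower()
    return [
        term for term in listing_terms
        if not re.search(r"\b" + re.escape(term.lower()) + r"\b", text)
    ]

listing = ["plumber", "water heater repair", "Springfield"]
homepage = "Licensed plumber serving Springfield. Call us for drain cleaning."
print(missing_entities(listing, homepage))  # ['water heater repair']
```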

(This goes without saying however for local rankings, I personally do everything I can to get links from the official city & from State/Province websites. These tend to have incredible power and getting just a single link from the official city site can sometimes make my listing skyrocket. Don't tell anyone!)

Anchors For Rank

I wasn't expecting this much documentation on anchor texts; however, it appears that Google uses them extensively when evaluating pages and entire websites.

SimplifiedAnchor from the anchor data of the docjoins, by specifying the option separate_onsite_anchors to SimplifiedAnchorsBuilder, we can also separate the onsite anchors from the other (offdomain) anchors

anchorText - The anchor text. Note that the normalized text is not populated

count - The number of times we see this anchor text

countFromOffdomain - Count, score, normalized score, and volume of offdomain anchors

countFromOnsite - Count, score, normalized score, and volume of onsite anchors

normalizedScore - The normalized score, which is computed from the score and the total_volume

normalizedScoreFromOffdomain

normalizedScoreFromOnsite

score - The sum/aggregate of the anchor scores that have the same text

scoreFromFragment - The sum/aggregate of the anchor scores that direct to a fragment and have the same text

The first notable discovery is that Google combines external (off-domain) and internal (onsite) anchor text to determine anchor relevance.

In addition, they create a score out of many different metrics:

- How many different anchor texts a page has

- How frequently the same anchor text appears

- How many external anchor texts there are

- How many internal anchor texts there are

And finally, it puts it all together to create a final anchor text score.
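A sketch of how these fields could fit together: anchors are grouped by text, scores are summed (score) and counted (count), counts are split into onsite and off-domain, and normalizedScore divides each text's score by the total volume. Treating totalVolume as the sum of all anchor scores, and lower-casing texts before grouping, are assumptions; the description only says the volume is "used for normalization".

```python
from collections import defaultdict

def simplify_anchors(anchors: list[tuple[str, float, bool]]) -> dict[str, dict[str, float]]:
    """anchors: (text, score, is_onsite). Returns per-text aggregates."""
    stats: dict[str, dict[str, float]] = defaultdict(
        lambda: {"count": 0, "score": 0.0, "countFromOnsite": 0, "countFromOffdomain": 0}
    )
    total_volume = 0.0
    for text, score, onsite in anchors:
        s = stats[text.lower()]  # case-folding is an assumption
        s["count"] += 1
        s["score"] += score
        s["countFromOnsite" if onsite else "countFromOffdomain"] += 1
        total_volume += score  # assumed: totalVolume = sum of all anchor scores
    for s in stats.values():
        s["normalizedScore"] = s["score"] / total_volume if total_volume else 0.0
    return dict(stats)

anchors = [
    ("fishing poles", 3.0, False),
    ("Fishing Poles", 1.0, True),
    ("click here", 1.0, False),
]
for text, s in simplify_anchors(anchors).items():
    print(text, s)
```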

scoreFromOffdomain

scoreFromOffdomainFragment

scoreFromOnsite

scoreFromOnsiteFragment

scoreFromRedirect - The sum/aggregate of the anchor scores that direct to a different wiki title and have the same text.

totalVolume - The total score volume used for normalization

totalVolumeFromOffdomain

totalVolumeFromOnsite

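We also see separate scores for "fragment" anchors. I read this as partial (fragmented) anchor texts still counting towards the main anchor text score, even if they're not an exact match, although "fragment" in these descriptions may instead refer to a URL fragment (the #section part of a link).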

Better Link Building

This highlights the importance of having MANY internal links every time you want something to rank.

(While it takes quite a lot of effort to acquire external links, you can easily create a handful of internal links within a few minutes.)

Each internal anchor text should be highly relevant AND should vary to avoid too high a count. (The variations will still be counted through the fragmented part.)

For example:

"fishing poles"

"great fishing poles"

"poles for fishing"

"fishing"

(I personally believe it's crucial to have relevant internal links between all your content. To build topical clusters and enhance topical relevance, your articles need to be properly linked together. This strategy can potentially boost the rankings of your entire website as it gains topical authority. I will expand on this further in the topical authority section.)
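To check whether your internal anchor texts vary enough, you can measure each text's share of the total. The 40% threshold below is an arbitrary assumption for illustration, not a number from the leak.

```python
from collections import Counter

def dominant_anchors(anchor_texts: list[str], max_share: float = 0.4) -> dict[str, float]:
    """Return anchor texts whose share of all anchors exceeds max_share (hypothetical threshold)."""
    counts = Counter(t.lower() for t in anchor_texts)
    total = sum(counts.values())
    return {t: c / total for t, c in counts.items() if c / total > max_share}

internal = ["fishing poles"] * 6 + ["great fishing poles", "poles for fishing", "fishing"]
print(dominant_anchors(internal))  # {'fishing poles': 0.6666666666666666}
```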

Validating Anchor Text

This section highlights some of the more clever tricks that Google uses to rank a wide range of pages online.

matchedScore - Difference in KL-divergence from spam and non-spam anchors

matchedScoreInfo - Detailed debug information about computation of trusted anchors match

phrasesScore - Count of anchors classified as spam using anchor text

site - Site name from anchor.source().site()

text - Tokenized text of all anchors from the site

All these scores, from matchedScore to phrasesScore, seem to be calculated from the total anchor texts pointing to a site in order to determine the site's relevance. This re-emphasizes the importance of anchor texts when ranking.
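matchedScore is described as a "difference in KL-divergence from spam and non-spam anchors". A minimal reading: build a word distribution from a site's anchors, measure its KL divergence from a spam reference distribution and from a non-spam reference, and subtract. The reference anchor lists, the add-one smoothing and the sign convention (positive means closer to non-spam) are all assumptions.

```python
import math
from collections import Counter

def distribution(anchors: list[str], vocab: set[str]) -> dict[str, float]:
    """Add-one smoothed word distribution over a shared vocabulary."""
    counts = Counter(w for a in anchors for w in a.lower().split())
    total = sum(counts.values()) + len(vocab)
    return {w: (counts[w] + 1) / total for w in vocab}

def kl(p: dict[str, float], q: dict[str, float]) -> float:
    """KL divergence D(p || q) in nats."""
    return sum(p[w] * math.log(p[w] / q[w]) for w in p)

def matched_score(site: list[str], spam: list[str], non_spam: list[str]) -> float:
    """Hypothetical: KL(site || spam) - KL(site || non-spam); positive means less spam-like."""
    vocab = {w for a in site + spam + non_spam for w in a.lower().split()}
    p = distribution(site, vocab)
    return kl(p, distribution(spam, vocab)) - kl(p, distribution(non_spam, vocab))

spam_ref = ["best casino slots", "cheap casino bonus", "best cbd oil"]
non_spam_ref = ["click here", "read more", "website", "source"]
print(matched_score(["read more", "website", "here"], spam_ref, non_spam_ref) > 0)  # True
print(matched_score(["casino slots", "best casino"], spam_ref, non_spam_ref) > 0)   # False
```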

What's really interesting is the solution Google devised to simultaneously avoid spam while ranking pages for people who are searching for sensitive topics. (I'll describe how it works below.)

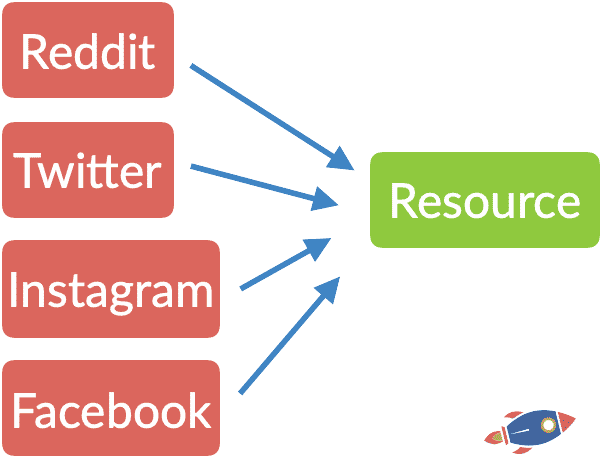

Better Link Building

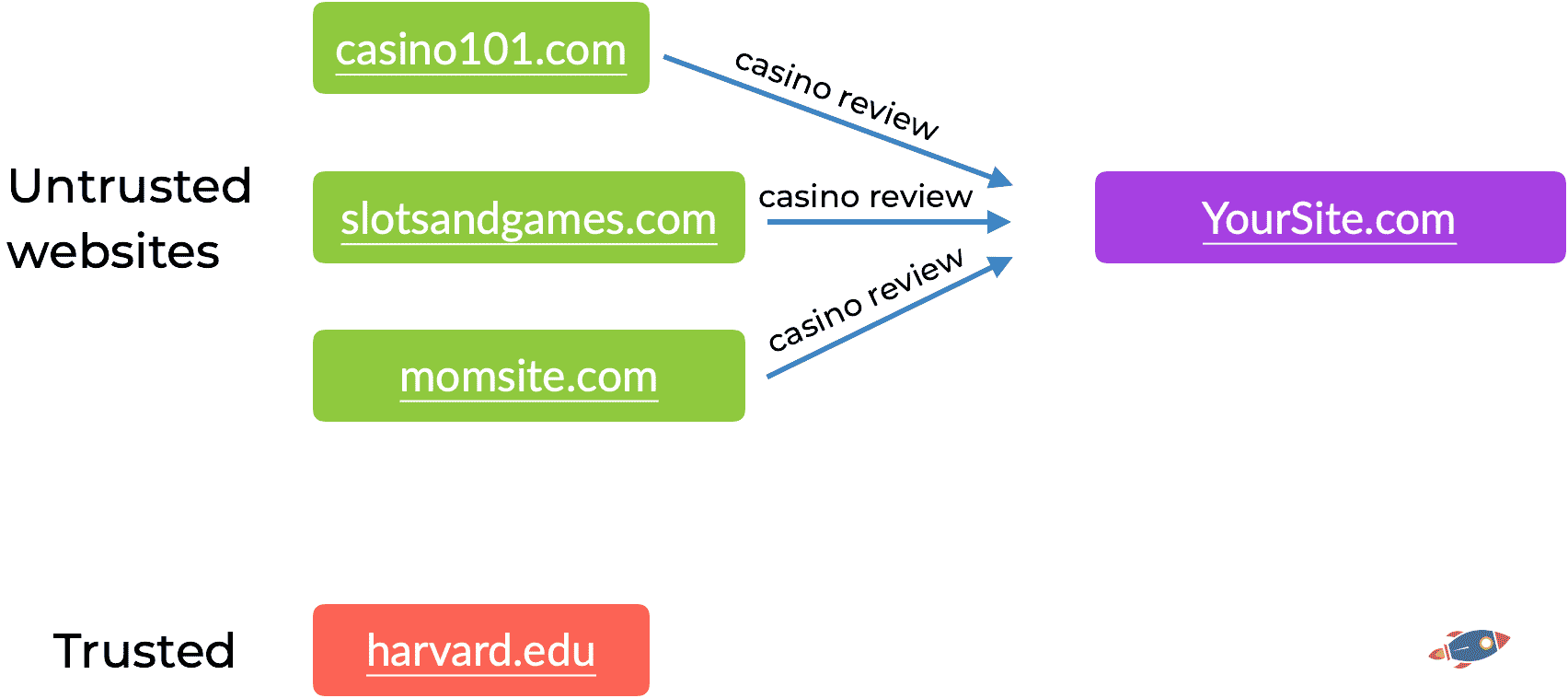

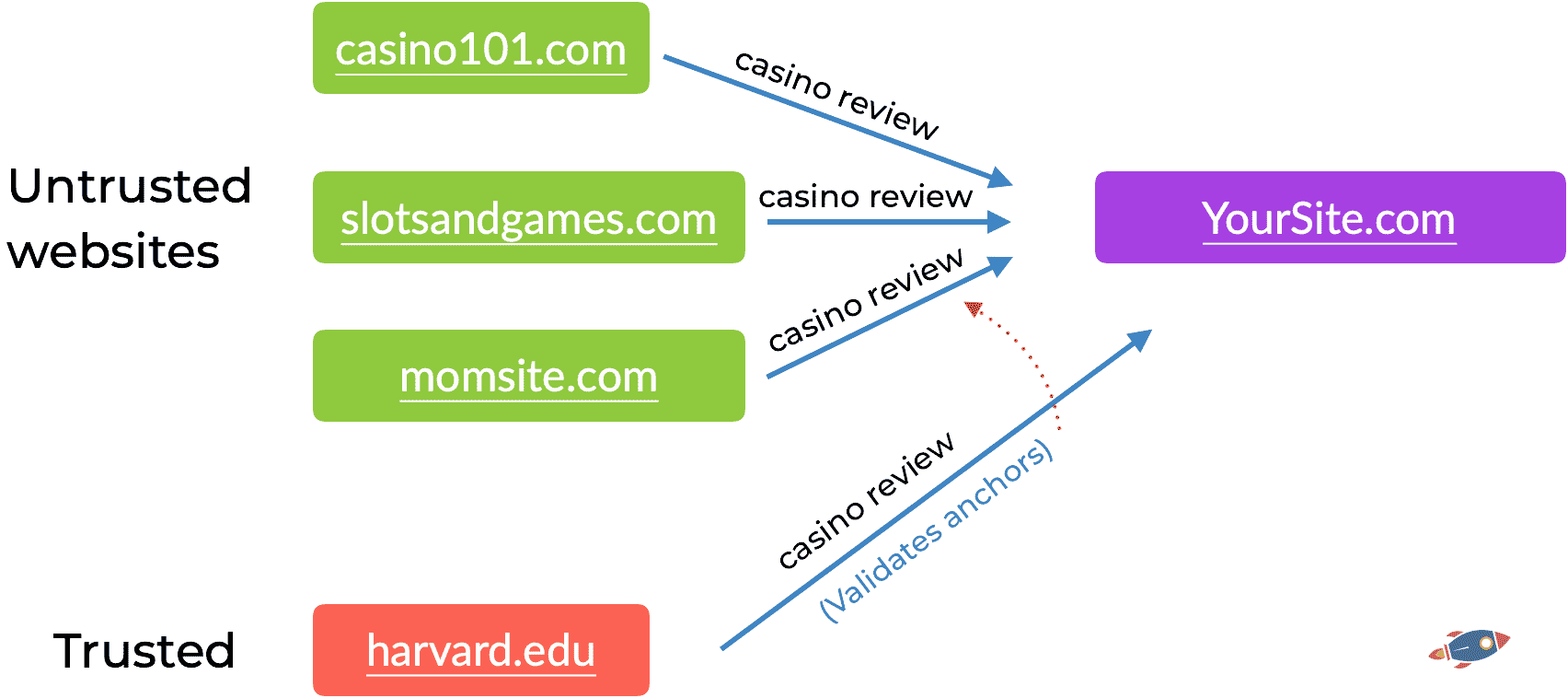

This is incredibly smart. If you're in a business such as casinos, CBD or any other "high risk" industry, Google will look at anchors from trusted sites in order to VALIDATE the links/anchors from less trusted sites.

In practice, this means that IF you get 10 links from randomsite.com pointing to your site with the anchor "casino slots", Google will think it's spam.

However, if you then get a trusted site, harvard.edu to link to you with "casino slots", then Google will not only accept it... it will ALSO validate and pass the power from randomsite.com

In the example above, NONE of the links are trusted. This is because none of the websites linking with the shadier anchor text are considered trusted.

In this instance, ALL of the links are trusted. The link from the trusted site (in this case, Harvard), validates the link anchors from the other websites.

In practice, if you're trying to rank for terms that might fall into this "high risk" category, then it is critical that you mix in links from trusted websites in order to validate other links.
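The validation idea can be sketched as follows: an anchor text from untrusted sites is accepted only if at least one trusted site also uses it. The trusted-site set and the anchor data are hypothetical, and this is a simplified reading of the mechanism described above, not the leaked implementation.

```python
def validated_anchors(anchors: list[tuple[str, str]], trusted_sites: set[str]) -> list[tuple[str, str]]:
    """anchors: (source_site, anchor_text). Keep anchors whose text a trusted site also uses."""
    trusted_texts = {text.lower() for site, text in anchors if site in trusted_sites}
    return [(site, text) for site, text in anchors if text.lower() in trusted_texts]

anchors = [("randomsite.com", "casino slots")] * 10
print(len(validated_anchors(anchors, {"harvard.edu"})))  # 0: nothing validates them

anchors.append(("harvard.edu", "casino slots"))
print(len(validated_anchors(anchors, {"harvard.edu"})))  # 11: the trusted anchor validates all
```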

(I personally used this technique extensively in the past when I was building links for HIGHLY competitive terms in a sensitive industry. At one point I had links from 17 different sites that I owned pointing to a single place, and in order to get it 'over the top', I also acquired 1 link from an industry site via a contribution I made. This one link seemed to make all the 17 other links work better, and the page shot up to #1 for my target keyword.)

trustedScore - Fraction of pages with newsy anchors on the site, >0 for trusted sites

Finally, we also see they have a trustedScore that verifies if the site is in the news (news links!). Here's how it can help you do better SEO...

Better Link Building

Google TRUSTS sites that link out frequently using "newsy" anchor texts.

This is HUGE for determining if a site is a good source for a link.

YOU WANT LINKS FROM SITES THAT HAVE MANY "NEWSY" related outgoing links.

For example, if a site is frequently linking out with anchors such as:

"Website"

"Here"

"Click here"

Then it is likely a news website that can be trusted.

Conversely, if it's a site that is frequently linking out with commercial terms such as:

"best drone reviews"

"blue t-shirts"

"best CBD oil"

Then the trusted score for the entire website will be VERY low (and you don't want a link from it).
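Under the reading above, trustedScore is simple arithmetic over a site's pages. This is a minimal sketch that assumes "newsy" just means one of the generic anchors listed in this section. The real classifier is not described in the leak, and neither is whether Google counts incoming or outgoing anchors. `NEWSY_ANCHORS` and `trusted_score` are hypothetical names.

```python
from __future__ import annotations

# Hypothetical "newsy" anchors, taken from the examples in this section.
NEWSY_ANCHORS = {"website", "here", "click here", "read here", "site"}

def trusted_score(pages: dict[str, list[str]]) -> float:
    """Fraction of pages whose outgoing anchors include at least one newsy anchor.
    Mirrors the field description; the real definition of 'newsy' is unknown."""
    if not pages:
        return 0.0
    newsy_pages = sum(
        1 for anchors in pages.values()
        if any(a.strip().lower() in NEWSY_ANCHORS for a in anchors)
    )
    return newsy_pages / len(pages)

news_site = {"/a": ["Click here", "Website"], "/b": ["here"], "/c": ["blue t-shirts"]}
review_site = {"/x": ["best drone reviews"], "/y": ["best CBD oil"]}
print(round(trusted_score(news_site), 2))  # 0.67
print(trusted_score(review_site))          # 0.0
```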

The implications are HUGE... and I almost don't want to say it...

Knowing that TrustedScore is determined by the anchor text profile of the website means that...

Manipulating TrustedScore

Disclaimer: I do not recommend doing this. Proceed at your own risk. You are responsible for any changes you make to your site.

Link sellers, PBNs and other sites that want to increase their "TrustedScore" might be able to artificially link out with a TON of anchor texts such as:

"website"

"click here"

"read here"

"site"

"here"

And that should, in theory, increase the TrustedScore of the site by altering the ratio of commercial to 'newsy' anchor text... which will make all the other links work better.

Note: The more outgoing links there are, the more PageRank is diluted. Hypothetically, if I were to do such a thing, I would create a category with only 1 link to minimize PageRank loss. Within that category, I would place all the anchor text links.
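The dilution point follows from the classic PageRank formula, where a page splits its damped score evenly across its outgoing links. This sketch uses that textbook formula with the commonly cited 0.85 damping factor. The leak does not confirm that Google's current link scoring still works this way, and the function name is hypothetical.

```python
def pagerank_passed_per_link(source_pr: float, outlinks: int, damping: float = 0.85) -> float:
    """Classic PageRank: each outgoing link passes damping * PR(source) / outlinks."""
    if outlinks < 1:
        raise ValueError("outlinks must be at least 1")
    return damping * source_pr / outlinks

# The same page passes a tenth as much through each link when it has 10x the links.
for n in (1, 10, 100):
    print(n, pagerank_passed_per_link(1.0, n))
```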

Bad Links

Due to the importance of links and anchor texts, SEOs have been artificially creating them since the dawn of SEO. Here's how Google deals with link abuse.

penguinLastUpdate - BEGIN: Penguin related fields. Timestamp when penguin scores were last updated. Measured in days since Jan. 1st 1995

anchorCount

badbacklinksPenalized - Whether this doc is penalized by BadBackLinks, in which case we should not use improvanchor score in mustang ascorer

penguinPenalty - Page-level penguin penalty (0 = good, 1 = bad)

minHostHomePageLocalOutdegree - Minimum local outdegree of all anchor sources that are host home pages as well as on the same host as the current target URL

droppedRedundantAnchorCount - Sum of anchors_dropped in the repeated group RedundantAnchorInfo, but can go higher if the latter reaches the cap of kMaxRecordsToKeep

Nearly every reference in here is important.

First, it confirms that Penguin scores are updated periodically, which means that if you are hit by a Penguin penalty, you'll have to wait until the next update to know whether you've recovered.

In addition, we can clearly see that one of the major components is counting anchor texts. This suggests that they likely penalize webpages when too many repeated anchor texts are detected.
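penguinLastUpdate is stored as a day count rather than a date, so turning it into a calendar date is simple date arithmetic. Only the Jan. 1st 1995 epoch comes from the field description. The helper names below are hypothetical.

```python
from datetime import date, timedelta

EPOCH = date(1995, 1, 1)  # "Measured in days since Jan. 1st 1995"

def days_to_date(days: int) -> date:
    """Convert a stored day count back into a calendar date."""
    return EPOCH + timedelta(days=days)

def date_to_days(d: date) -> int:
    """Convert a calendar date into the day-count format."""
    return (d - EPOCH).days

print(date_to_days(date(2024, 3, 1)))  # 10652
print(days_to_date(10652))             # 2024-03-01
```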

We also see that pages can have a 'bad links' flag. While it isn't clear how a page gets this flag, I speculate that it might be manually assigned. In the early days of Penguin, Google used a lot of manual reviewers.

Finally, they check homepage links between sites hosted on the same server. For instance, if you have 10 or more sites all on the same host, and all the homepages link to a central location, they'll likely penalize the site for link abuse.

nonLocalAnchorCount

mediumCorpusAnchorCount

penguinEarlyAnchorProtected - Doc is protected by goodness of early anchors

droppedHomepageAnchorCount

redundantanchorinfoforphrasecap

forwardedOffdomainAnchorCount

droppedNonLocalAnchorCount

perdupstats

onsiteAnchorCount

droppedLocalAnchorCount

penguinTooManySources - Doc not scored because it has too many anchor sources

forwardedAnchorCount

anchorSpamInfo - This structure contains signals and penalties of AnchorSpamPenalizer

Here we see that Penguin is looking specifically at external anchor text links. While we haven't seen any benefit to repeating the same internal anchor text link over and over again, internal links seem to be spared from any Penguin penalty.

Another interesting reference is that there is a mechanism by which a page can be "protected" by early, trusted anchor texts. This was likely put in place to shield against negative SEO.

Finally, it's interesting that there is actually an anchor text limit! You can have too many links from different sources. For example, if a page receives 500,000 links (don't laugh, it happens), then Google will not calculate the score.

lowCorpusAnchorCount

lowCorpusOffdomainAnchorCount

baseAnchorCount

minDomainHomePageLocalOutdegree - Minimum local outdegree of all anchor sources that are domain home pages as well as on the same domain as the current target URL

skippedAccumulate - A count of the number of times anchor accumulation has been skipped for this document

topPrOnsiteAnchorCount - According to anchor quality bucket, anchor with pagrank > 51000 is the best anchor. anchors with pagerank < 47000 are all same

pageMismatchTaggedAnchors

spamLog10Odds - The log base 10 odds that this set of anchors exhibits spammy behavior

redundantanchorinfo

pageFromExpiredTaggedAnchors - Set in SignalPenalizer::FillInAnchorStatistics

baseOffdomainAnchorCount

phraseAnchorSpamInfo - Following signals identify spike of spammy anchor phrases

anchorPhraseCount - The number of unique anchor phrases

ondomainAnchorCount

totalDomainsAbovePhraseCap - Number of domains above per domain phrase cap

When it comes to backlink abuse, Google really hates homepage links from PBNs. They have a dedicated section checking for PBN homepage links. If most of your incoming links come from homepages... then you're in trouble.

We see that there's a special trust factor for anchors with PR5+ of power. This is interesting and lines up with what I have personally experienced in the past: Having one single link from a high PR page with relevant anchor text makes a huge difference.
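The topPrOnsiteAnchorCount description implies three anchor quality buckets. The sketch below encodes only the two thresholds from the leak (51000 and 47000). The bucket labels are invented, the leak does not describe how anchors between the two thresholds are treated, and mapping these raw values to "PR5" is an interpretation rather than something the leak states.

```python
from __future__ import annotations

TOP_THRESHOLD = 51000   # "anchor with pagrank > 51000 is the best anchor"
FLAT_THRESHOLD = 47000  # "anchors with pagerank < 47000 are all same"

def anchor_quality_bucket(pagerank: int) -> str:
    """Place an anchor's raw pagerank value into a hypothetical bucket."""
    if pagerank > TOP_THRESHOLD:
        return "top"
    if pagerank < FLAT_THRESHOLD:
        return "base"    # all treated the same, per the field description
    return "middle"      # 47000..51000: handling not described in the leak

def count_top_anchors(pageranks: list[int]) -> int:
    """Rough analogue of topPrOnsiteAnchorCount for a list of anchor sources."""
    return sum(1 for pr in pageranks if anchor_quality_bucket(pr) == "top")

print(count_top_anchors([52000, 48000, 12000, 60000]))  # 2
```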

In addition, we see that Google pays attention to anchors coming from pages marked with "expired". The mechanism behind this is that Google likely applies a flag to an entire domain if they notice that an expired domain has been revived with the same content. Then all the outgoing links from that domain will be marked with "expired" for a certain period of time.

As there are surely legitimate cases where someone might re-use an old expired domain, I speculate there must be some time limit associated with the expired flag.

And finally, as we have previously seen, Google does have limits on the quantity of incoming links. Here they clarify that you can have a maximum of 5000 anchors pointing to a page. After that, they might ignore them or perhaps assign a penalty.
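One field above, spamLog10Odds, is described as "the log base 10 odds" that a set of anchors is spammy. Converting log odds back into a probability is standard math, shown below. The leak does not say which threshold Google acts on, and the function name is hypothetical.

```python
def log10_odds_to_probability(log10_odds: float) -> float:
    """Convert log10 odds (log10 of p / (1 - p)) back into a probability p."""
    if log10_odds >= 0:
        return 1.0 / (1.0 + 10.0 ** -log10_odds)
    odds = 10.0 ** log10_odds  # small positive number; avoids overflow
    return odds / (1.0 + odds)

for x in (-2, 0, 1, 2):
    print(x, round(log10_odds_to_probability(x), 3))
# -2 0.01
# 0 0.5
# 1 0.909
# 2 0.99
```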

totalDomainsSeen - Number of domains seen in total

topPrOffdomainAnchorCount

scannedAnchorCount - The total number of anchors being scanned from storage

localAnchorCount

linkBeforeSitechangeTaggedAnchors

globalAnchorDelta - Metric of number of changed global anchors computed as, size - intersection

topPrOndomainAnchorCount

mediumCorpusOffdomainAnchorCount

offdomainAnchorCount

totalDomainPhrasePairsSeenApprox - Number of domain/phrase pairs in total

skippedOrReusedReason - Reason to skip accumulate, when skipped, or Reason for reprocessing when not skipped

anchorsWithDedupedImprovanchors - The number of anchors for which some ImprovAnchors phrases have been removed due to duplication within source org

fakeAnchorCount

redundantAnchorForPhraseCapCount - Total anchor dropped due to exceed per domain phrase cap

totalDomainPhrasePairsAboveLimit - The following should be equal to the size of the following repeated group, except that it can go higher than 10,000

timestamp - Walltime of when anchors were accumulated last

When it comes to link building, Google calculates the quantity of redundant anchor texts. (Repeating the same anchor likely gets you into trouble.)

They also look at the total quantity of domains linking; go too far and it can set off red flags.

And of course, they look at how many anchors per domain you have. In other words, avoid sitewide links from another domain, as they will surely set off a red flag within the Penguin algorithm.
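The per-domain phrase cap fields (redundantAnchorForPhraseCapCount, totalDomainsAbovePhraseCap) suggest that Google counts repeated (domain, phrase) pairs. This is a minimal sketch with a hypothetical `cap` value, because the leak does not give the actual limit. The function name is also hypothetical.

```python
from __future__ import annotations
from collections import Counter

def apply_phrase_cap(anchors: list[tuple[str, str]], cap: int) -> tuple[int, int]:
    """anchors: (source_domain, phrase) pairs. Returns
    (anchors dropped for exceeding the per-domain phrase cap,
     number of domains that exceeded the cap for some phrase)."""
    per_pair = Counter((domain, phrase.lower()) for domain, phrase in anchors)
    dropped = 0
    domains_above = set()
    for (domain, _phrase), n in per_pair.items():
        if n > cap:
            dropped += n - cap
            domains_above.add(domain)
    return dropped, len(domains_above)

sitewide = [("blog.example", "best drone reviews")] * 200
normal = [("news.example", "drone guide"), ("forum.example", "this review")]
print(apply_phrase_cap(sitewide + normal, cap=3))  # (197, 1)
```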

Avoiding Link Penalties

The MAIN thing you should remember should you engage in link building is that you want to VARY your anchor text.

Too many exact anchor texts, built too quickly, from too many sources... will trigger a Penguin penalty.

Therefore, you'll likely want to build links slowly over time while varying the anchor text. While it might be fine to have a few repeating anchor texts, I am very cautious to avoid excessive repetition.

(In the past, I have personally acquired websites that have never expired / never dropped from the index. I then made sure to maintain them for a long period of time, 6 months to a year, before using them to build links. I never linked from the homepage and instead, I created hyper-relevant articles and linked out from the main content in a contextual manner. In addition, I timed my links so I never received more than 1 link per day and ideally, I only acquired 1 link every few days. Of course, the anchor text always changed in order to avoid anchor text issues.

Finally, when I did acquire sites with a potentially troubled past, I swiftly redirected them to sub-directories of new sites in order to filter out any flags associated with them. I avoided redirecting websites to the root of other websites and always redirected into a directory. None of this is ranking advice, as it is against Google's official guidelines to partake in any link building...

Plus, these days I have migrated to acquiring more 'whitehat' links from large authority sites.)

Negative SEO

I was pleasantly surprised to discover a section dedicated to protecting websites from negative SEO attacks.

(It appears as if Google cares about SEOs)

demotedEnd - End date of the demotion period

demotedStart - Start date of the demotion period

phraseCount - Following fields record signals used in anchor spam classification. How many spam phrases found in the anchors among unique domains

phraseDays - Over how many days 80% of these phrases were discovered

phraseFraq - Spam phrases fraction of all anchors of the document

phraseRate - Average daily rate of spam anchor discovery

It's very cool to see this: It appears as if there's an active "spam shield" that is activated when it detects a negative SEO attack.

If an abnormal quantity of links begins to point towards a specific page, it will temporarily demote the page while the attack is underway. Once the attack is over, it preserves the good anchor text links (built before the attack) while eliminating the bad ones created during the attack phase.
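The spam-shield fields can be approximated from anchor discovery dates. This sketch computes rough analogues of phraseCount, phraseDays, phraseFraq and phraseRate. The record layout, the function name and the exact formulas are assumptions. Only the 80% figure comes from the phraseDays description, and the demotion window (demotedStart/demotedEnd) is not modeled.

```python
from __future__ import annotations

def anchor_spike_stats(anchors: list[tuple[int, str, bool]]) -> dict[str, float]:
    """anchors: (day_discovered, source_domain, is_spam_phrase) for one document.

    Returns rough, hypothetical analogues of the leaked fields:
      count: unique domains with spam anchors      (phraseCount)
      days:  days taken to discover 80% of spam    (phraseDays)
      fraq:  spam anchors / all anchors            (phraseFraq)
      rate:  spam anchors per day of the attack    (phraseRate)
    """
    spam = sorted((day, domain) for day, domain, is_spam in anchors if is_spam)
    if not spam:
        return {"count": 0, "days": 0, "fraq": 0.0, "rate": 0.0}
    days = [day for day, _ in spam]
    k = (4 * len(days) + 4) // 5  # ceil(0.8 * n) using integer math
    days_for_80 = days[k - 1] - days[0] + 1
    active_span = days[-1] - days[0] + 1
    return {
        "count": len({domain for _, domain in spam}),
        "days": days_for_80,
        "fraq": len(spam) / len(anchors),
        "rate": len(spam) / active_span,
    }

# 50 organic links, one per day, then 100 spam links over 5 days.
good = [(d, f"site{d}.example", False) for d in range(50)]
attack = [(60 + i // 20, f"spam{i}.example", True) for i in range(100)]
print(anchor_spike_stats(good + attack))
```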

This approach is clever because it makes the negative SEO attacker think their techniques are working, as they might see a temporary demotion of their target. Then, once they stop, the page magically bounces back to where it was.

Site Quality - Rewards & Penalties

Core Updates, Panda and Topical Authority

This might be one of the most important sections of the entire API reference repository, as it explains how Google evaluates entire websites. Site quality dictates how all the pages on a website will rank; thus, understanding the site quality algorithm is critical for good SEO practices.

Furthermore, if you've been impacted by a recent penalty, the site's quality is likely the root cause.

ugcDiscussionEffortScore - UGC page quality signals

productReviewPDemoteSite - Product review demotion/promotion confidences

exactMatchDomainDemotion - Page quality signals converted from fields in proto QualityBoost in quality/q2/proto/quality-boost.proto

The first API reference discusses user-generated content, which is likely used to evaluate the effort that goes into forum threads, comments and possibly sites like Reddit. What is interesting here is that they are measuring the "effort" of the discussion.

I suspect that the page might get a boost if the discussion is deemed high effort.

Next, we have a "Product Review site" promotion or demotion used to identify and rate product reviews. (Secretly, we all know this is a demotion, as it has been difficult to rank product reviews unless you're on a major authority website.)

Last, we have an "Exact match domain demotion", which was likely introduced to prevent sites like best-drone-reviews.com from ranking. Registering exact match domains for ranking purposes was quite popular a decade ago... these days, I personally recommend creating a memorable brand name for your site.

nsrConfidence - NSR confidence score: converted from quality_nsr.NsrData

lowQuality - S2V low quality score: converted from quality_nsr.NsrData, applied in Qstar

navDemotion - nav_demotion: converted from QualityBoost.nav_demoted.boost

siteAuthority - site_authority: converted from quality_nsr.SiteAuthority, applied in Qstar

Now we get to the "site wide" elements that can make an entire site tank.

We have "nsrConfidence", which is the confidence in the "Normalized Site Rank" score. As we previously covered, normalized site rank is most likely a measure of how the site performs compared to the rest of the industry. I believe it is one of the most important metrics, and "nsrConfidence" evaluates how trustworthy that score is.

Then we have "lowQuality" which is likely a flag for a bad site. We see that it is pulled from the NSR data which means that when the normalized site rank is too low, the site receives a "low quality" flag... and then likely doesn't rank at all. If you've seen websites remain in the index but refuse to rank, then this is probably the reason.

The "navDemotion" is likely a demotion related to NavBoost, perhaps calculating how much it should drop a site in rankings.

And of course... *drumroll* they have a measure of site authority in the form of "siteAuthority".

This is notable for two reasons:

1. Google has denied having a site authority metric in the past. They LITERALLY have an API reference called SiteAuthority so I don't feel as if the Google spokesperson was being straightforward.

2. Once again, we see that the "site authority" metric is derived from the normalized site rank! If you weren't already convinced, hopefully you can see how important normalized site rank is within the entire algorithm.

Site Quality

BOTH the "Low Quality" flag and the "Site Authority" metric come from NSR data.

It appears as if a large portion of Google's algorithm revolves around user interactions with the website, which would explain why some sites are struggling to rank after the latest core updates.

Websites can be hit by MULTIPLE demotions originating from one measurement: NSR.

babyPandaV2Demotion - New BabyPanda demotion, applied on top of Panda

authorityPromotion - authority promotion

anchormismatchdemotion - anchor_mismatch_demotion

crapsAbsoluteHostSignals - Impressions, unsquashed, host level, not to be used with compressed ratios

I knew that they had worked on revisions of Panda a few years ago, as I was involved in recovering websites affected by Panda. (I created case studies on how to systematically recover websites from Panda.)

However, I wrongly assumed that they had simply updated the existing Panda algorithm... It seems as if they introduced a NEW Panda algorithm called "BabyPanda". Surprisingly, this new BabyPanda (a user-experience-related algorithm) appears to be applied ON TOP of the original Panda.

Yikes! This means you can potentially be penalized twice for poor user experience.

Conversely, there also seems to be a boost for 'authority sites', so perhaps if your user signals are great, it goes the other way. And of course, there's a demotion if your anchor text links don't match the content.

Finally, raw click signals are used to evaluate the site's performance as well. Click signals (from Chrome) are incredibly important for determining how a site performs in search.

topicEmbeddingsVersionedData - Versioned TopicEmbeddings data to be populated later into superroot / used directly in scorers

scamness - Scam model score

unauthoritativeScore - Unauthoritative score

We see that the topics of the site can be accessed by the algorithm. This makes sense as Google needs to be able to retrieve sites that are topically relevant to the query.

Then we have a measure of "Scamness" for a website. While we don't know much about this API reference, the description "Scam model score" suggests that it comes from a machine-learned model.

And finally, an unauthoritative score. Perhaps this is based on links, user experience or originality of content.

pandaDemotion - This is the encoding of Panda fields in the proto SiteQualityFeatures in quality/q2/proto/site_quality_features.proto

Here we find the original Panda!!

The Original Panda Algorithm

The Panda algorithm has a soft spot in my heart as I was on the main stage of Traffic & Conversions in front of 2000 entrepreneurs explaining how to recover websites from a Panda penalty. I spoke around the world explaining how to take advantage of Google algorithms to rank better online.

For those wondering, the trick to recovering from Panda is to trim the fat from the site while focusing on user accomplishment / experience. We want every 'landing page' from Google to provide a good user experience. In my presentation, I would share a repeatable process for making a site 'sticky' and share how to remove all the redundant/duplicate/low quality pages as determined by visitor analytics.

While the Google algorithm has changed significantly since then, Google is still focused on the overall user experience... albeit they are measuring it in slightly different ways.

The recent Helpful Content Update, the March Core Update and others are all focused on the user... and Google is likely using additional signals (e.g., from clicks) to measure it.

It does seem somewhat unfair that all of these penalties stack on top of each other.

From unauthoritative score, to click signals, to normalized site rank, to Panda, and even BabyPanda: if you're affected by one, you're likely to be affected by all of them. This is the main reason why some websites impacted by one algorithm change notice a subsequent drop after the next update, and so forth.

Site Score

I found a section that discussed an explicit "siteScore" and dove into what that could possibly entail.

siteFrac - What fraction of the site went into the computation of the site_score

siteScore - Site-level aggregated keto score

versionId - Unique id of the version

First, I think it's notable because just like site authority, Google has also mentioned there is no site score...

Well, there is.

Interestingly, they don't use the entire site to calculate the site score. Instead, they note the percentage of the site used to estimate the score. This is likely to save resources.

pageEmbedding

siteEmbedding - Compressed site/page embeddings

siteFocusScore - Number denoting how much a site is focused on one topic

siteRadius - The measure of how far page_embeddings deviate from the site_embedding

versionId

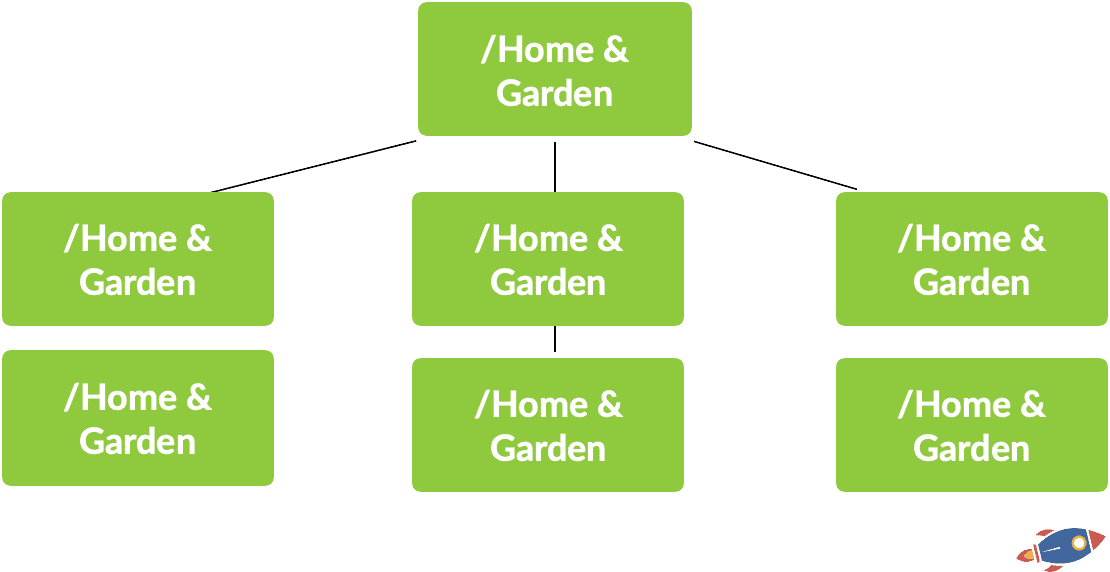

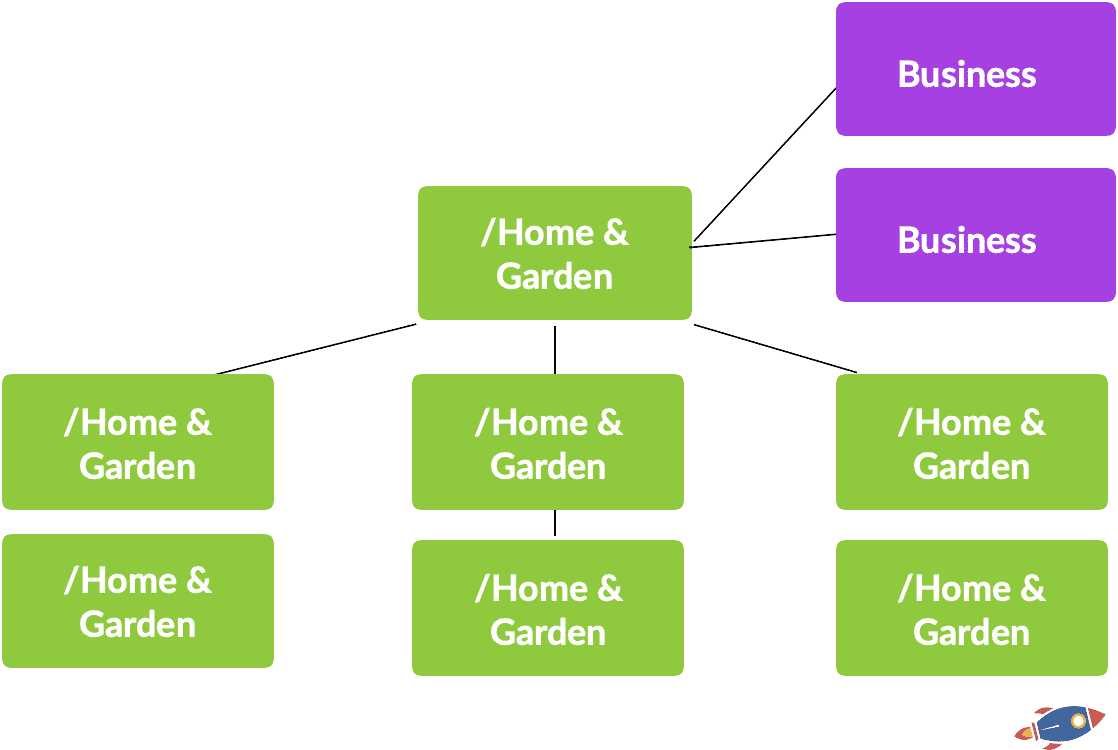

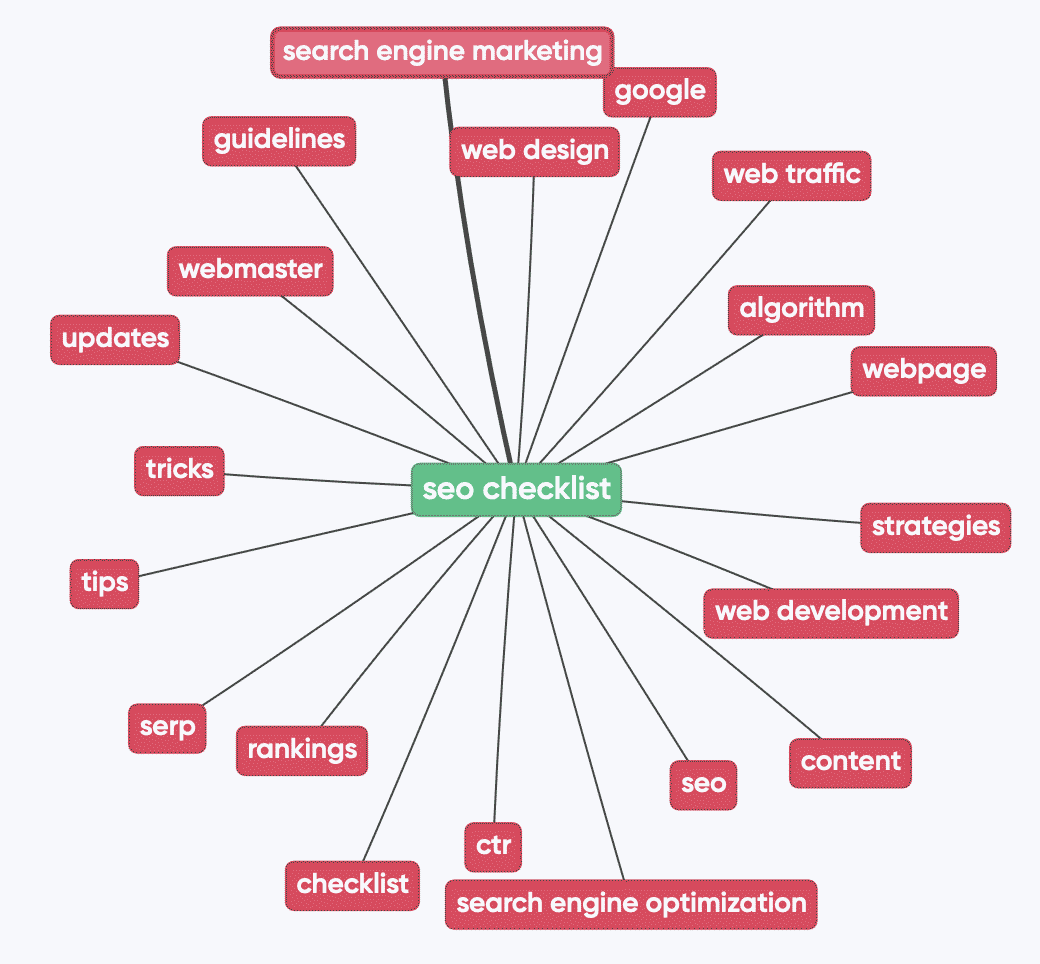

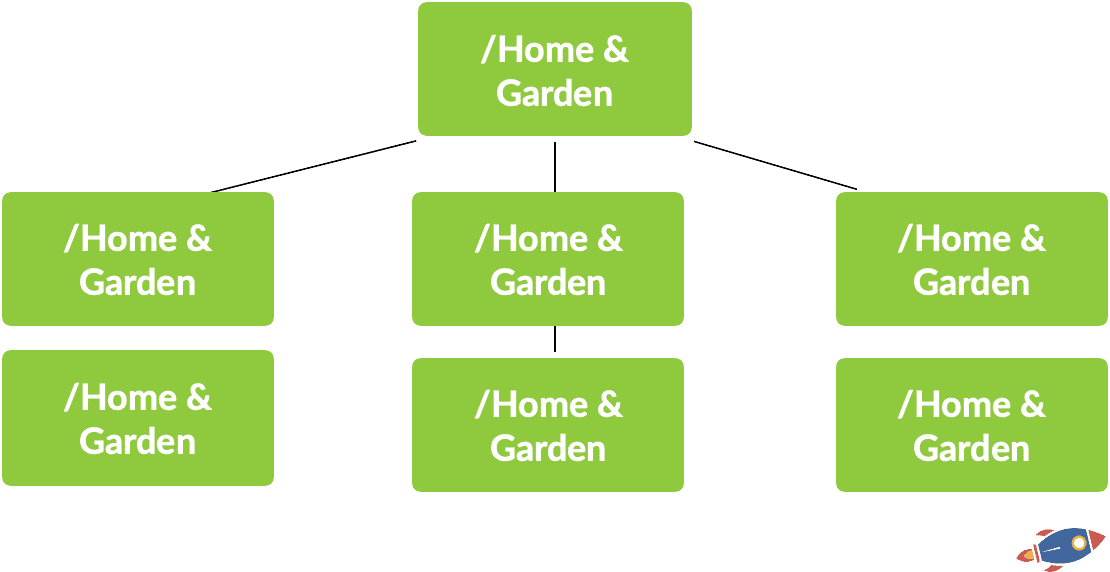

Here we have a section that many SEOs will be interested in... topical authority.

When Google refers to embeddings in the context of search, it means transforming words and phrases from web content into vector representations. These vectors help Google's algorithms understand and quantify the relationships and relevance among different textual entities, enhancing the accuracy of search results.

In plain English, it's like Google creating a digital map of all the words and phrases found on the website.

We see with the "SiteEmbedding", "SiteFocusScore" and "SiteRadius" that Google looks at both the page embedding AND the site embedding to determine the topic. This means that other content on your site will dictate how well you rank.

In addition, it also measures how focused on a topic a site is... very likely providing a significant ranking boost for sites that have a narrow focus.

And finally, it will measure how 'unrelated' a page is to the rest of the site. Unrelated content on a website will likely not rank as well.
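The leak only says that siteRadius is "the measure of how far page_embeddings deviate from the site_embedding." One plausible reading, sketched below, treats the site embedding as the average of the page embeddings and measures each page's cosine distance from it. The averaging, the cosine distance and the `1 - radius` focus score are all assumptions. Google's actual embeddings are compressed and come from models we can't see.

```python
from __future__ import annotations
import math

def cosine_similarity(u: list[float], v: list[float]) -> float:
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm else 0.0

def site_embedding(pages: list[list[float]]) -> list[float]:
    """Assumed: the site embedding is the average of its page embeddings."""
    return [sum(dim) / len(pages) for dim in zip(*pages)]

def page_deviation(page: list[float], site: list[float]) -> float:
    """Cosine distance of one page from the site centroid (0 = same direction)."""
    return 1.0 - cosine_similarity(page, site)

def site_radius(pages: list[list[float]]) -> float:
    """Hypothetical siteRadius: mean deviation of pages from the site embedding."""
    site = site_embedding(pages)
    return sum(page_deviation(p, site) for p in pages) / len(pages)

def site_focus(pages: list[list[float]]) -> float:
    """Hypothetical focus score: higher when pages cluster tightly."""
    return 1.0 - site_radius(pages)

focused = [[1.0, 0.0], [1.0, 0.1], [0.9, 0.0]]
scattered = [[1.0, 0.0], [0.0, 1.0], [-1.0, 0.2]]
print(round(site_focus(focused), 3), round(site_focus(scattered), 3))
```

In this model, a page with a large `page_deviation` is the kind of unrelated content that would drag a site's focus down.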

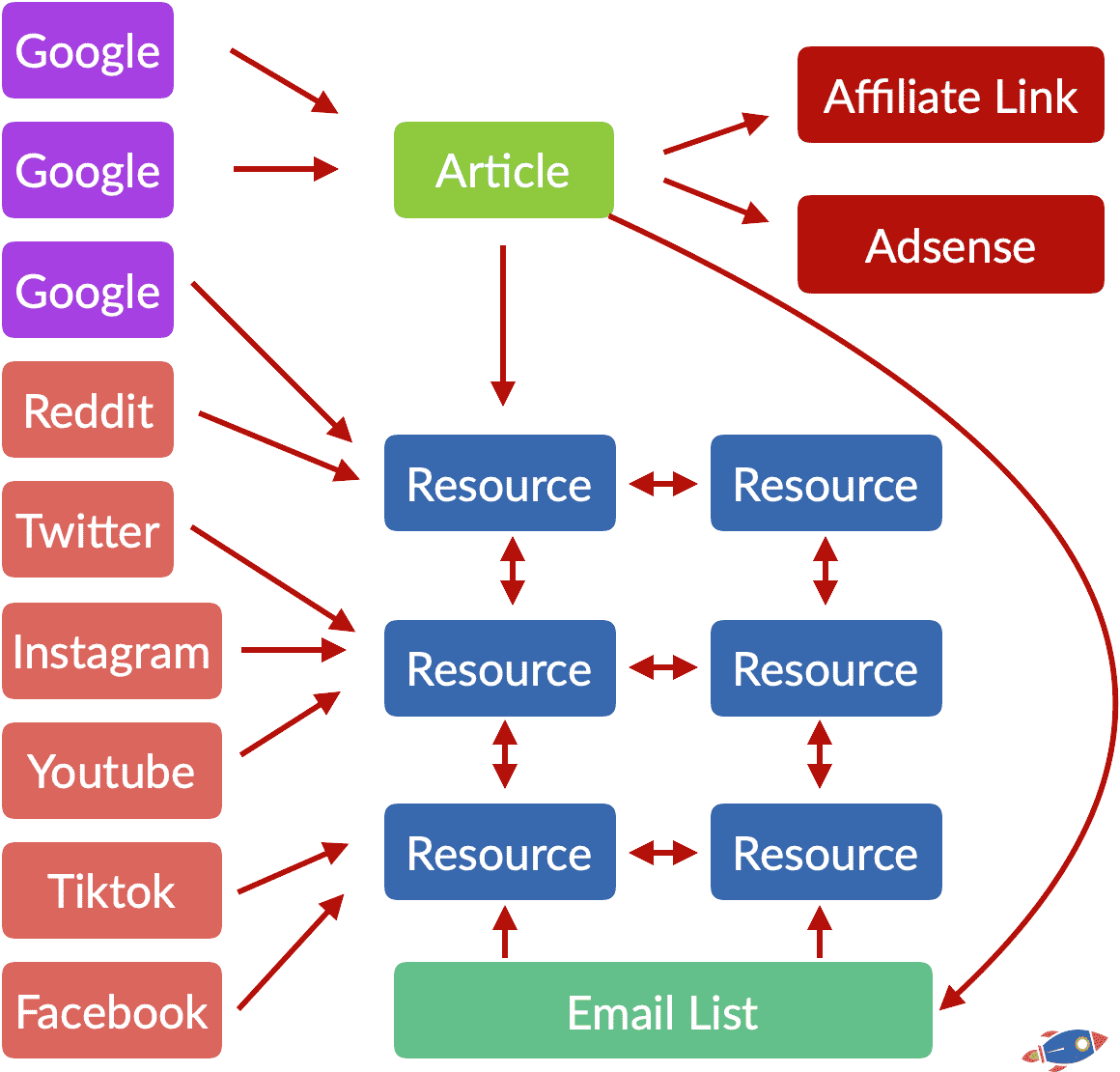

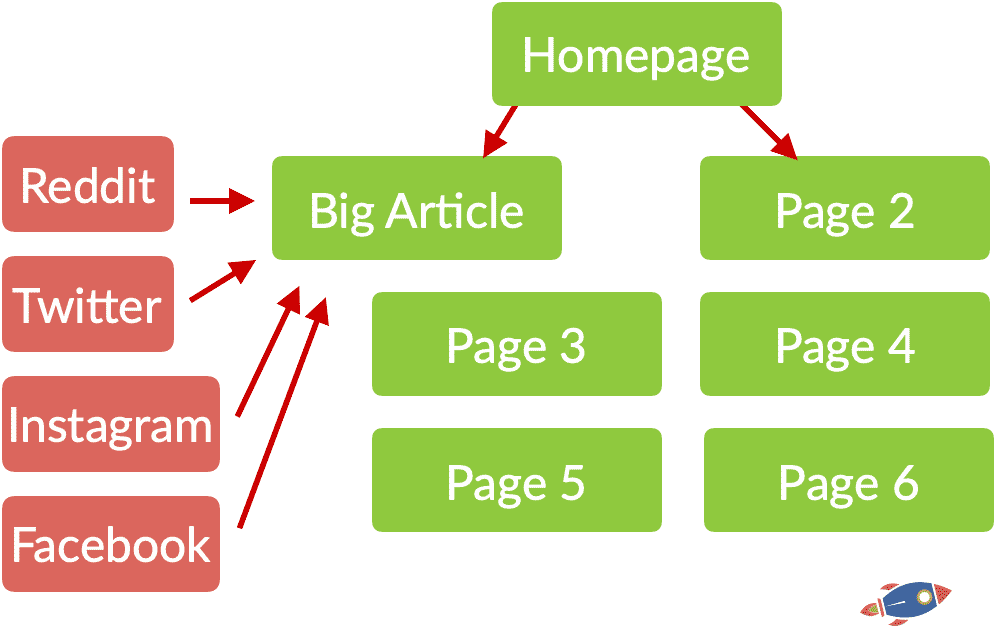

Ranking With Topical Authority

Building a topical authority site plays a key role in ranking for terms. Sites with a narrow focus will fare better, as Google measures how related (or unrelated) a page is compared to the rest of the site.

This boost might be necessary to compete against giant websites.

So it is likely a good idea to start a site with a narrow focus and expand over time as the site builds up power.

Eventually, when the site accumulates enough links and authority, you'll likely want to branch out to other topics. These topics might take a little time to take off as you build up content in that section; however, they too will end up ranking.

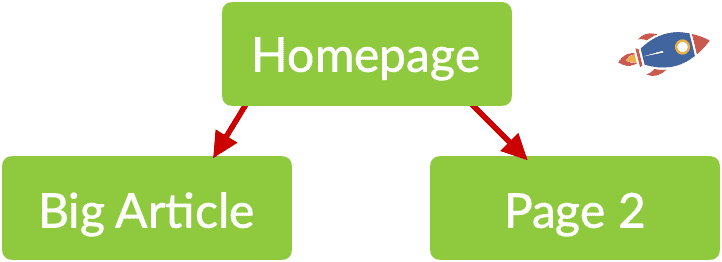

(I personally start with 1 single piece of content that acts as the centerpiece for my site.

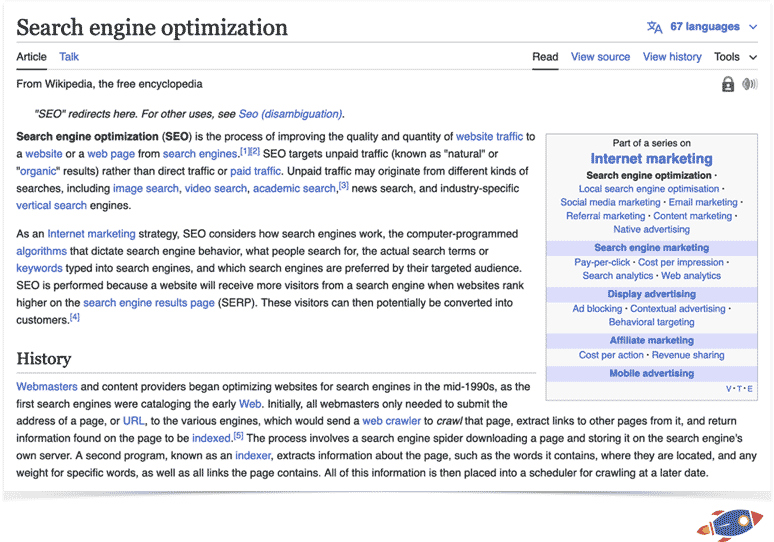

I then map out a list of subsequent, semantically related content. To find these semantically related ideas, I'll typically go to Wikipedia and read the page most closely related to my main topic. For example, if the center of my cluster is on SEO, I'll find the Wikipedia page most closely related to Search Engine Optimization.

In addition, I try to determine the Google NLP category of the keyword by analyzing the top-ranking results on Google. (If I see that the top 3 results for that topic all land in the same category, I can reasonably predict that if I write an article on that topic, it will also land in that category.)

It isn't perfect, however I try to make sure that follow-up content lines up with existing categories.

Finally, clusters also require internal links, so I will interlink similar and complementary topics with relevant anchor text within my content.)

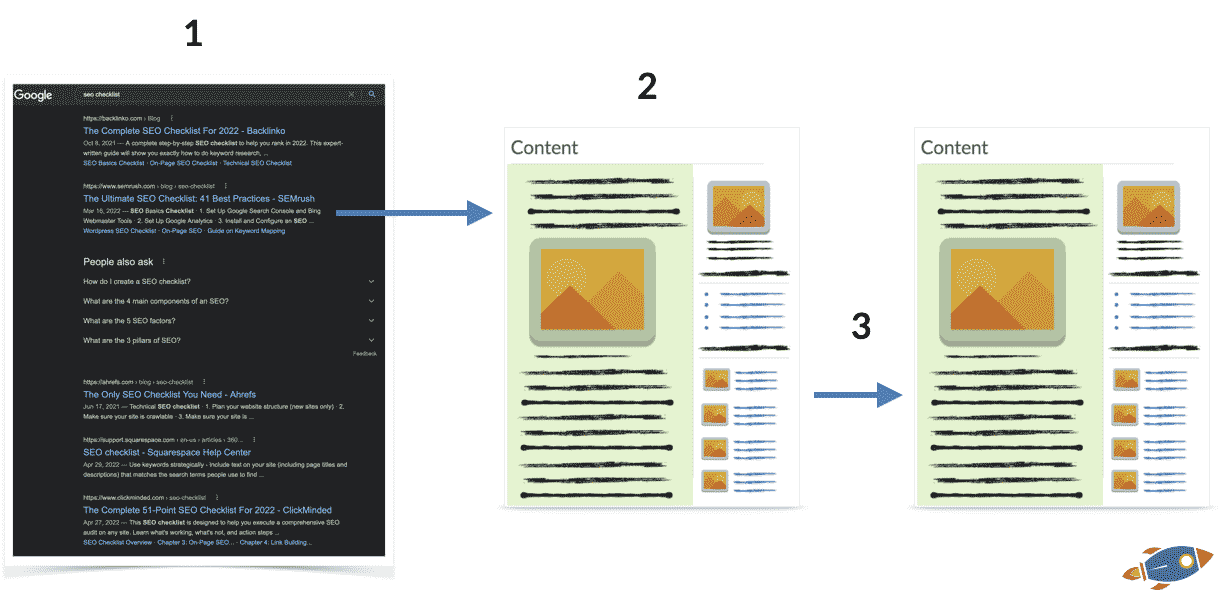

Content That Ranks

One of my favorite areas of SEO: on-page optimization! In this section of the API reference documentation, we dive into how Google looks at content.

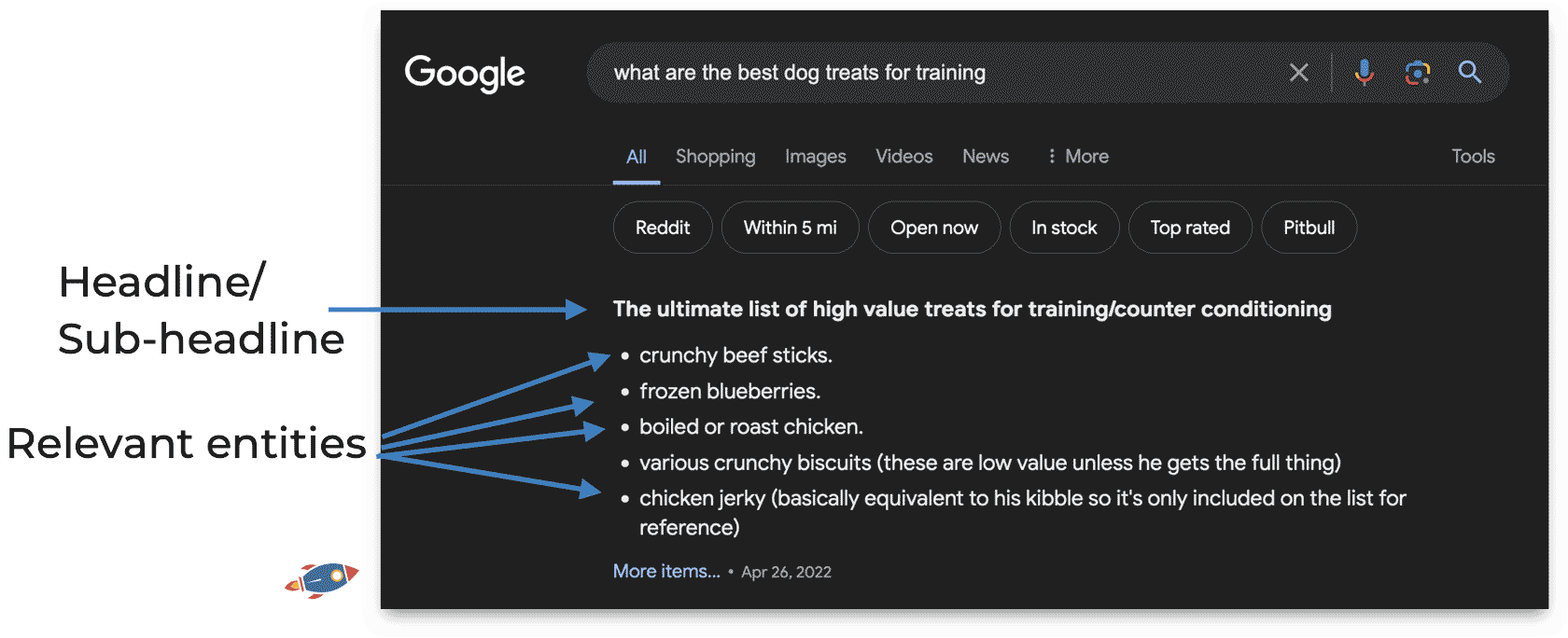

entity - Entities in the document

semanticNode - The semantic nodes for the document represent arbitrary types of higher-level abstractions beyond entity mention coreference and binary relations between entities

hyperlink - The hyperlinks in the document. Multiple hyperlinks are sorted in left-to-right order

lastSignificantUpdate - Last significant update of the page content, in the same format as the contentage field.

The first thing we notice is that they look at both the entities AND the semantic nodes in which this document lies when evaluating content. In other words, they consider whether this document is part of a related, relevant set of documents.

This is just another reason to have topically aligned content.

Next, they are looking at the outgoing links in a document. While we had already tested for this (pages with relevant outgoing links ranked better than pages without any links), it's nice to confirm that they are monitoring it.

And of course, they are looking for fresh & updated content, as noted by "lastSignificantUpdate".

Google knows the difference between minor updates and significant updates.

Optimizing Content For Rankings

When optimizing a page, if you want Google to recrawl and rescore the page, you have to modify a significant amount of text on the page.

I have found that adding an extra paragraph of text will typically be enough to trigger a full re-evaluation of the content.

In contrast, adding 4-5 words won't be enough. Google likely saves resources when there are only small modifications to the page.

So if you're optimizing and trying to add more relevant entities into your content, aim to perform a significant update.
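Whether an edit counts as "significant" presumably depends on how much of the text changed. Here is a rough way to measure that with Python's `difflib`. The 5% threshold is a hypothetical value chosen to separate "4-5 words" from "an extra paragraph" in the description above. It is not a number from the leak.

```python
import difflib

def changed_fraction(old_text: str, new_text: str) -> float:
    """Share of the two word sequences that does not match (0.0 = identical)."""
    matcher = difflib.SequenceMatcher(a=old_text.split(), b=new_text.split(), autojunk=False)
    return 1.0 - matcher.ratio()

SIGNIFICANT = 0.05  # hypothetical threshold, not from the leak

def looks_significant(old_text: str, new_text: str) -> bool:
    return changed_fraction(old_text, new_text) >= SIGNIFICANT

article = " ".join(f"word{i}" for i in range(300))
tweak = article + " plus five more small words"
paragraph = article + " " + " ".join(f"extra{i}" for i in range(60))
print(looks_significant(article, tweak))      # False
print(looks_significant(article, paragraph))  # True
```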

(In my experience, it typically takes approximately 3-4 weeks for Google to fully re-crawl and re-calculate a page score after you've performed a significant update. You might get a freshness boost before that though!)

entityLabel - Entity labels used in this document

topic

golden - Flag for indicating that the document is a gold-standard document

Once again, we see that Google sorts documents by using entities.

Entities are specific words or phrases that are recognized as representing distinct and well-defined concepts or objects, each carrying an associated meaning based on real-world references.

For example, the word "party" can have multiple meanings.

1. A party of 5 people

2. A political party

3. Let's go to the party!

4. I like to party with friends

While the word stays the same, the entity identifies which one of these categories the word falls into. Within a machine learning context, it is very important to make a distinction between a political party... and politicians having a party! (And that's why Google uses entities to classify and rank all documents on the web)

Closely related to entities are topical categories, which they mention when discussing "topics".

Surprisingly, they can also flag "Golden" documents that human reviewers deem important or a gold standard. I'm not sure in what capacity the golden flag is used; however, it would surely give the document an unfair advantage over other documents.

focusEntity - Focus entity. For lexicon articles, like Wikipedia pages, a document is often about a certain entity

syntacticDate - Document's syntactic date

privacySensitive - True if this document contains privacy sensitive data. When the document is transferred in RPC calls the RPC should use

Here we see that when Google is analyzing a document, they try to identify ONE main focus entity. I'll sometimes refer to this as main keyword of a document even though it should probably technically be called the "focus entity" (somehow that just doesn't have the same ring to it).

An interesting bit is that they also note if a date is mentioned in the title / URL, then they check if this aligns with the other dates found within the document.

A while ago, a Google engineer mentioned that you should avoid just updating the date in the title without updating any other information on the page... this is likely why.
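Here is a hypothetical version of that date-consistency check: it flags a page whose title names a year that never appears in the body. The regular expression and the rule are assumptions. The leak only mentions a syntacticDate field.

```python
import re

YEAR = re.compile(r"\b(?:19|20)\d{2}\b")  # four-digit years 1900-2099

def title_year_mismatch(title: str, body: str) -> bool:
    """True if the title names a year that never appears in the body text."""
    title_years = set(YEAR.findall(title))
    if not title_years:
        return False
    return not (title_years & set(YEAR.findall(body)))

print(title_year_mismatch("Best Drones of 2025", "We re-tested every drone in 2025."))  # False
print(title_year_mismatch("Best Drones of 2025", "Our 2023 testing found..."))         # True
```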

Finally, there's a special note for checking if the page contains private information. (For example, a person's home address, credit card, maybe social security number or phone number.) In my experience, when Google finds private and sensitive information, the page is less likely to rank. <cough> negative seo tactic</cough>

Better On-Page Ranking

Google uses entities, topics and semantic nodes to classify a document.

This means that your page can appear for queries even if the words don't appear on the page (because that term might be recognized as a topic or might appear in the semantic nodes).

They also have a focus entity... which tries to identify the MAIN focus of your page.

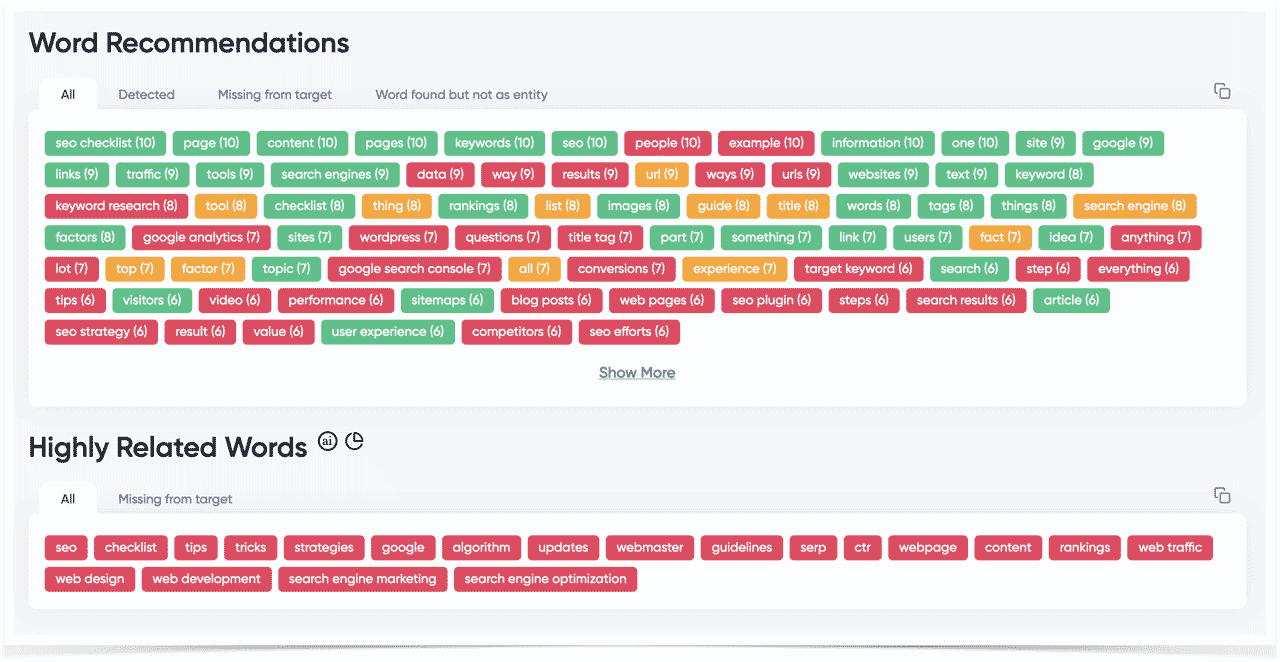

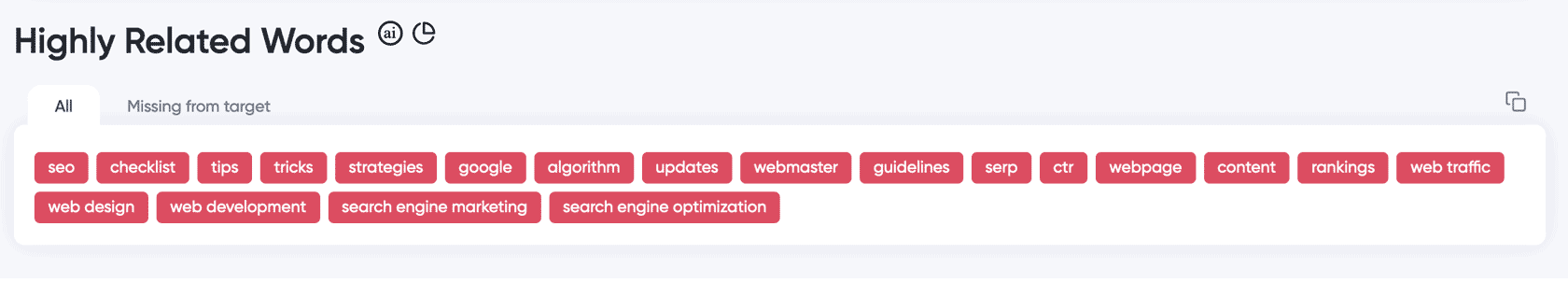

Highly Related Entities

http://on-page.ai

I personally try to add all the top related entities multiple times on my page.

In addition, I will add the focus entity (the one I want to rank for) within the title, headline, text and images.

Top Entities (Analyzed With Google's NLP Model)

http://on-page.ai

This helps Google build a highly relevant embedding, it sets the correct focus entity and will maximize my chances of ranking.

(During the recent core updates, I have noticed a trend in which pages that have a higher exact & related entity density ranked above pages with a lower density of related entities. I try to use all the top entities multiple times within my content and compare the density of my pages against my competitors. I don't stop until I have a higher entity density.)
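Comparing entity density against competitors requires a consistent definition. This sketch assumes that density means entity mentions per 100 words, using case-insensitive whole-phrase matching. The entity list is supplied by hand; the entities in this section come from Google's NLP model, which this code does not call. Overlapping entities (e.g. "search engine" inside "search engine optimization") would be counted twice.

```python
import re

def entity_density(text: str, entities: list) -> float:
    """Entity mentions per 100 words (case-insensitive, whole-phrase matches)."""
    words = re.findall(r"\w+", text)
    if not words:
        return 0.0
    mentions = sum(
        len(re.findall(r"\b" + re.escape(e) + r"\b", text, flags=re.IGNORECASE))
        for e in entities
    )
    return 100.0 * mentions / len(words)

page = "Search engine optimization helps pages rank. Good SEO uses entities."
print(entity_density(page, ["search engine optimization", "SEO", "entities"]))  # 30.0
```

Running the same function over competitor pages with the same entity list gives comparable numbers.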

Page Information

Within this section of the API reference material, Google shows us the information they store about webpages.

cdoc - This field contains reference pages for this entity

Apparently they have reference pages for entities... like Wikipedia!

That's cool... because it means if you want to be highly relevant for an entity, you might be able to look up the Wikipedia page associated with the entity and include similar terms.

linkInfo - Contains all links (with scores) that Webref knows for this entity. Links are relationships between entities

nameInfo - Contains all names (with scores) that Webref knows for this entity

In addition, there's an API to list all the links with scores associated with a page! (This would be cool to use. Imagine Ahrefs Site Explorer... but with Google's own data. It would be incredible to see the actual scores associated with each link built to a page. This would make link building SO much easier.)

We can also confirm that links are relationships between entities. We already knew this; however, it re-confirms that you want to get links from related content.

We also see that Google makes a note of all the names associated with a page. This is likely very useful when deciding which documents to retrieve from the index.

Better Ranking

Ranking on Google can sometimes get fairly complex. So when strangers ask me how to rank, I usually share that it comes down to 3 primary things:

1. Good site

2. Good content

3. Good links

If you can deliver on all 3, then you'll usually be just fine (Assuming Chrome visitors agree that your content is good... which leads to Google thinking you have a good site.)

Plus, once I know I'm working on a site that is in good standing... then it becomes really easy. All I need to do is produce highly optimized content (with a lot of entities) that lines up with the pre-existing topical content. Add a few relevant internal links... and it ranks!

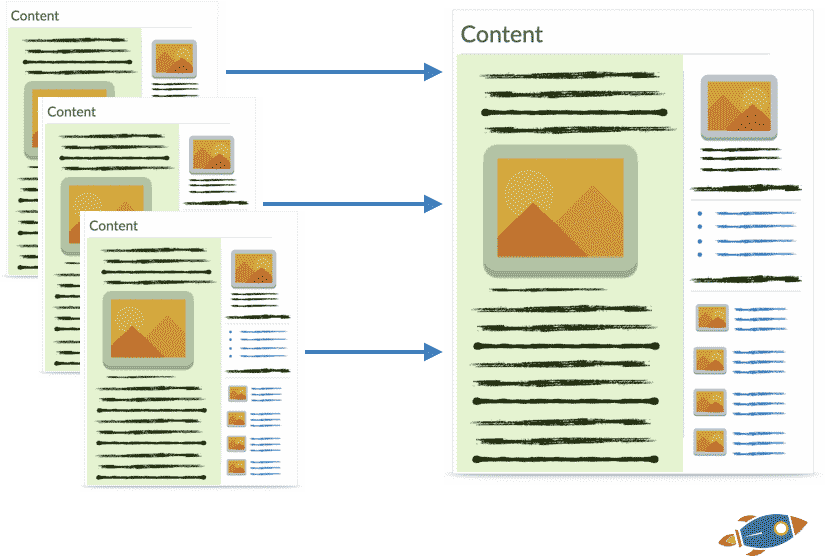

We continue digging into the page information and see they have a section for original content.

originalcontent

Personally, I believe this refers to original content vs duplicate content.

And not, 'how original' content is.

badSslCertificate - This field is present iff the page has a bad SSL certificate itself or in its redirect chain

Google will likely not rank you if you have a bad SSL certificate.

registrationinfo - Information about the most recent creation and expiration of this domain

Google cares a LOT about the recent creation and expiration date of a domain. In this section they elaborate (not shown) on something called a "DomainEdge signal" that I suspect is likely used to fight PBNs.

richsnippet - rich snippet extracted from the content of a document

It's interesting to see that every webpage has a rich snippet (even if it's not shown). More on how Google evaluates rich snippets later on.

Document Info

sitemap - Sitelinks: a collection of interesting links a user might be interested in, given they are interested in this document

csePagerankCutoff - URL should only be selected for CSE Index if it's pagerank is higher than cse_pagerank_cutoff

Interestingly, they store a list of related pages for a document, which is likely determined by user behavior. Within patents, they describe creating associations between subsequent user searches.

Perhaps if you search for document X and THEN search for document Y, an association is created between X and Y.
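Associations between consecutive searches can be modeled as counts of query pairs within a session. The sketch below is a hypothetical illustration of that idea. The session format, the function name and the `min_count` threshold are assumptions, not details from the leak or the patents.

```python
from collections import Counter

def query_associations(sessions, min_count=2):
    """Count (earlier query, next query) pairs across sessions and keep
    only pairs seen at least min_count times."""
    pairs = Counter()
    for queries in sessions:
        for first, second in zip(queries, queries[1:]):
            if first != second:
                pairs[(first, second)] += 1
    return {pair: n for pair, n in pairs.items() if n >= min_count}

sessions = [
    ["seo tips", "seo tips reddit"],
    ["seo tips", "seo tips reddit"],
    ["seo tips", "link building"],
]
print(query_associations(sessions))  # {('seo tips', 'seo tips reddit'): 2}
```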

Another small discovery within the document information section is that Google has an option to NOT select a page for the CSE index if its PageRank is lower than a predetermined cutoff.

Auto-Suggest SEO

While this isn't exactly what the API is referencing, in the past, I might or might not have manipulated Google search suggestions.

Using mobile devices, I may have instructed searchers to search for an initial term, click search and then return to Google to search for a different, associated term. Through consistent daily searches, the association may have eventually linked up and auto-suggest may have shown suggestions for the complementary search.

This is how many search terms now end up with "reddit" at the end, except that this works for other website brand names too. The only pitfall is that it requires consistent searches over a long period of time, so it can be a hassle to get going and maintain.

Avoiding Penalties - Content Evaluation

This section dives deep into content, penalties and spam. Our aim is to understand what constitutes great, optimized content while avoiding over-optimization.

uacSpamScore - The uac spam score is represented in 7 bits, going from 0 to 127

spamtokensContentScore - For SpamTokens content scores. Used in SiteBoostTwiddler to determine whether a page is UGC Spam

trendspamScore - For now, the count of matching trendspam queries

ScaledSpamScoreYoram - Spamscores are represented as a 7-bit integer, going from 0 to 127
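Several of these fields share the same format: a score stored in 7 bits, i.e. an integer from 0 to 127. Here is a minimal sketch of how a score between 0 and 1 could be packed into that range and decoded again. The rounding scheme is my assumption; the leak only tells us the range.

```python
def to_7bit(score: float) -> int:
    """Quantize a score in [0.0, 1.0] to an integer in [0, 127].

    Assumed scheme: clamp, scale by 127, round. The leak only documents the range.
    """
    clamped = min(max(score, 0.0), 1.0)
    return round(clamped * 127)

def from_7bit(value: int) -> float:
    """Map a 7-bit integer back to an approximate score in [0.0, 1.0]."""
    if not 0 <= value <= 127:
        raise ValueError("7-bit values must be between 0 and 127")
    return value / 127

print(to_7bit(0.5))    # 64
print(from_7bit(127))  # 1.0
```

Storing a score in 7 bits instead of a full floating-point number saves a lot of space across billions of documents, at the cost of precision (each step is roughly 0.008).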

Inside the documentation, there are quite a few spam flags. The first, "uacSpamScore" might stand for User Automation or User Activity.

The next "Spam tokens content score" and "trendspamScore" references suggest that Google might have a list of spammy words they use to measure spam. Perhaps many mentions of words like casino or viagra trigger it.
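A token-list approach could look something like the minimal sketch below. Both word lists are hypothetical examples of mine, not Google's. It counts spam-token hits per 100 words and, mirroring the documented description of trendspamScore ("the count of matching trendspam queries"), counts how many trending spam queries appear in the text.

```python
import re

# Hypothetical example lists; Google's real lists are unknown.
SPAM_TOKENS = {"casino", "viagra", "jackpot"}
TRENDSPAM_QUERIES = ["cbd gummies", "bitcoin slots"]

def words(text: str) -> list[str]:
    """Lowercase word tokens."""
    return re.findall(r"[a-z0-9']+", text.lower())

def spam_token_density(text: str) -> float:
    """Spam-token hits per 100 words."""
    tokens = words(text)
    if not tokens:
        return 0.0
    hits = sum(1 for t in tokens if t in SPAM_TOKENS)
    return 100 * hits / len(tokens)

def trendspam_count(text: str) -> int:
    """How many trending spam queries appear as whole-word phrases in the text."""
    padded = " " + " ".join(words(text)) + " "
    return sum(1 for q in TRENDSPAM_QUERIES if f" {q} " in padded)

page = "Win the jackpot at our casino and grab free CBD gummies today"
print(spam_token_density(page))
print(trendspam_count(page))  # 1
```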

Trending Spam Topics

Each year, there are new spam topics that trend on the internet.

From age-old viagra to new protein powders, gummy CBD edibles, new bitcoin slot machines, etc.

Spam evolves through the years, and it appears as if Google keeps track of it (trendspamScore).

Unless you are explicitly targeting a high-risk term, avoid having comments or multiple recognized spam entities on the page.

datesInfo - Stores dates-related info (e.g. page is old based on its date annotations)

ymylHealthScore - Stores scores of ymyl health classifier as defined at go/ymyl-classifier-dd

ymylNewsScore - Stores scores of ymyl news classifier as defined at go/ymyl-classifier-dd

Within the date section, Google mentions a "FreshnessTwiddler" (twiddlers are modifiers Google uses to adjust rankings, usually to boost them). Despite what Google has claimed in the past, this is very likely a ranking boost given to fresh content.

In addition, we see that they do, in fact, have "Your Money or Your Life" (YMYL) scores for health and news. Whenever you're posting content on the web, Google is checking to see if it falls within this category, and if it does, there might be an additional layer of verifications and/or requirements.

The next API reference is incredibly important:

topPetacatTaxId - Top petacat of the site

The 'TopPetacatTaxID' API reference, in my opinion, indicates that topical relevance is very important when it comes to Google ranking. This suggests that Google is classifying websites and assigning ONE MAIN category to them.

It is likely that this categorization is used throughout Google's algorithm, influencing both content and link building.

The bottom line is that content aligned with the site's primary topic receives a ranking boost. For example, imagine a user searching for 'best dog food for puppies.' The 'SiteBoostTwiddler' would analyze the query, recognize that it pertains to pet food, and then use the 'TopPetacatTaxID' to prioritize results from sites whose top category is pet food.

Topical Authority Gets A Boost

Google rewards topical authority sites in multiple ways, and TopPetacatTaxID, used in SiteBoostTwiddler, is just another boost used to reward sites that are focused on a specific topic.

For example, if the query is in /home & garden/ and your site's main category is /home & garden/, then it would be logical to assume that the site should get a boost.
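Here is a minimal sketch of that logic, assuming both the query and the site are labeled with hierarchical category paths like the ones in the list below. The function names and multiplier values are hypothetical; the leak does not tell us how large the boost is or how SiteBoostTwiddler actually computes it.

```python
def category_parts(path: str) -> list[str]:
    """Split "/Arts & Entertainment/Humor" into ["Arts & Entertainment", "Humor"]."""
    return [part for part in path.split("/") if part]

def category_boost(query_category: str, site_top_category: str) -> float:
    """Hypothetical ranking multiplier based on how closely two category paths match.

    The multipliers are made-up illustration values.
    """
    q_parts = category_parts(query_category)
    s_parts = category_parts(site_top_category)
    shared = 0
    for q, s in zip(q_parts, s_parts):
        if q != s:
            break
        shared += 1
    if shared == 0:
        return 1.0  # different top-level topic: no boost
    if q_parts == s_parts:
        return 1.2  # exact category match
    return 1.1      # same branch of the taxonomy, different leaf

print(category_boost("/Arts & Entertainment/Humor/Live Comedy",
                     "/Arts & Entertainment/Humor/Live Comedy"))  # 1.2
```

Giving partial credit for a shared parent branch reflects the idea that a general /Arts & Entertainment/ site is still more relevant to a comedy query than a /Home & Garden/ site.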

While we don't know exactly which categories Google is using now, I believe this is the list they might have used in the past (sample below):

/Arts & Entertainment/Celebrities & Entertainment News

/Arts & Entertainment/Other

/Arts & Entertainment/Comics & Animation/Anime & Manga

/Arts & Entertainment/Comics & Animation/Cartoons

/Arts & Entertainment/Comics & Animation/Comics

/Arts & Entertainment/Comics & Animation/Other

/Arts & Entertainment/Entertainment Industry/Film & TV Industry

/Arts & Entertainment/Entertainment Industry/Recording Industry

/Arts & Entertainment/Entertainment Industry/Other

/Arts & Entertainment/Events & Listings/Bars, Clubs & Nightlife

/Arts & Entertainment/Events & Listings/Concerts & Music Festivals

/Arts & Entertainment/Events & Listings/Event Ticket Sales

/Arts & Entertainment/Events & Listings/Expos & Conventions

/Arts & Entertainment/Events & Listings/Film Festivals

/Arts & Entertainment/Events & Listings/Food & Beverage Events

/Arts & Entertainment/Events & Listings/Live Sporting Events

/Arts & Entertainment/Events & Listings/Movie Listings & Theater Showtimes

/Arts & Entertainment/Events & Listings/Other

/Arts & Entertainment/Fun & Trivia/Flash-Based Entertainment

/Arts & Entertainment/Fun & Trivia/Fun Tests & Silly Surveys

/Arts & Entertainment/Fun & Trivia/Other

/Arts & Entertainment/Humor/Funny Pictures & Videos

/Arts & Entertainment/Humor/Live Comedy

..

From https://cloud.google.com/natural-language/docs/categories

While Google might have a newer category system that is used internally, we're still using the old category list to classify websites. It isn't perfect, but it does give us a fairly decent idea of a site's top category.

Top Categories (Analyzed With Google's NLP Classification Model)

https://on-page.ai

(Topical authority requires a significant quantity of content. However, the benefit is that once Google recognizes your authority on a subject, ranking becomes significantly easier and requires fewer links.

My strategy:

1. As previously stated, I look up the Wikipedia page for my main focus entity to get ideas for the topics I want to cover.

2. I scrape competitors' sitemaps to gather more topically related keyword ideas. Since not all articles will be relevant, I run 100-200 of their pages through the NLP category checker, which lets me quickly identify the content that falls within the same category.

When building topical authority sites, remember to create relevant internal links. You can't have clusters & nodes without links!)
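For step 2, a competitor's sitemap is usually an XML file in the sitemaps.org format, so pulling its URL list is straightforward. Below is a minimal sketch using only the Python standard library. It parses an inline sample instead of downloading a live file, and turning URL slugs into keyword ideas is my own simplification.

```python
import xml.etree.ElementTree as ET
from urllib.parse import urlparse

# Namespace defined by the sitemaps.org protocol.
NS = {"sm": "http://www.sitemaps.org/schemas/sitemap/0.9"}

SAMPLE_SITEMAP = """<urlset xmlns="http://www.sitemaps.org/schemas/sitemap/0.9">
  <url><loc>https://example.com/best-dog-food-for-puppies/</loc></url>
  <url><loc>https://example.com/how-to-crate-train-a-puppy/</loc></url>
</urlset>"""

def sitemap_urls(xml_text: str) -> list[str]:
    """Return every <loc> value from a sitemaps.org <urlset>."""
    root = ET.fromstring(xml_text)
    return [loc.text.strip() for loc in root.findall("sm:url/sm:loc", NS)]

def slug_to_keyword(url: str) -> str:
    """Turn the last path segment into a rough keyword idea."""
    slug = urlparse(url).path.rstrip("/").split("/")[-1]
    return slug.replace("-", " ")

for url in sitemap_urls(SAMPLE_SITEMAP):
    print(slug_to_keyword(url))
```

Larger sites often publish a sitemap index (a `<sitemapindex>` whose `<loc>` entries point to child sitemaps), so in practice you may need to fetch and parse each child file in turn.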

Continuing with the content, we see that they have an explicit mention of: "OriginalContentScore".

OriginalContentScore - The original content score is represented as a 7-bits, going from 0 to 127

DocLevelSpamScore - The document spam score is represented as a 7-bits, going from 0 to 127

Although it might still be a ratio of duplicate to original content, I believe this API reference measures how original the content is compared to your competitors'. Google has long encouraged publishers to create new, original content, and this seems to be an attempt to measure that effort.

I believe that if you're just creating a carbon copy of the existing search results, there is no real incentive for Google to rank you above the rest. That's why I encourage webmasters to go above and beyond the #1 result by using entities that competitors aren't currently using and adding unique information on the subject.
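One way to picture "how original is this page versus the current results" is to compare word shingles (overlapping sequences of n words). This minimal sketch assumes originality is the share of a page's shingles that appear on no competitor page, mapped onto the same 0 to 127 range as OriginalContentScore. The shingle size and the formula are my assumptions, not Google's documented method.

```python
import re

def shingles(text: str, n: int = 3) -> set[tuple[str, ...]]:
    """Overlapping n-word sequences from lowercased text."""
    words = re.findall(r"[a-z0-9']+", text.lower())
    return {tuple(words[i:i + n]) for i in range(len(words) - n + 1)}

def originality_score(page: str, competitors: list[str], n: int = 3) -> int:
    """Assumed formula: share of the page's shingles no competitor uses, scaled to 0-127."""
    own = shingles(page, n)
    if not own:
        return 0
    seen = set().union(*(shingles(c, n) for c in competitors))
    novel = own - seen
    return round(127 * len(novel) / len(own))

page = "puppies need small kibble and fresh water daily"
competitor = "puppies need small kibble twice a day"
print(originality_score(page, [competitor]))  # 85
```

Here 4 of the page's 6 shingles are not used by the competitor, so the page scores 85 out of 127. Adding information your competitors don't have is exactly what raises that share.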

freshnessEncodedSignals - Stores freshness and aging related data, such as time-related quality metrics predicted from url-pattern level signals

ScaledSpamScoreEric

biasingdata

ScaledExptSpamScoreEric

Once again, we see another freshness signal that indicates that Google is rewarding new and recently updated content.

And...

There's a spam score if your name is Eric? That's not nice 🙁

Now here's something I didn't expect:

biasingdata2 - A replacement for BiasingPerDocData that is more space efficient

spamCookbookAction - Actions based on Cookbook recipes that match the page

To my surprise, they seem to have a "Biasing data" entry. This might be a signal that measures how neutral or biased the content is; i.e., overly salesy affiliate pages might not rank as well.

And finally, spamCookbookAction hints that a specific set of rules ("recipes") can flag a page as spam. For example, invisible text and other shady tactics might fall into this category.
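To picture what "Actions based on Cookbook recipes that match the page" could mean, here is a minimal rule-engine sketch. Each recipe pairs a condition on page features with an action to take when it matches. The feature names, thresholds and actions are all hypothetical examples of mine; the leak only says that actions come from matching recipes.

```python
from dataclasses import dataclass
from typing import Callable

@dataclass
class Recipe:
    name: str
    matches: Callable[[dict], bool]  # condition on extracted page features
    action: str                      # what to do when the recipe matches

# Hypothetical recipes for illustration only.
RECIPES = [
    Recipe("hidden_text", lambda f: f.get("hidden_text_ratio", 0) > 0.2, "demote"),
    Recipe("cloaking", lambda f: f.get("serves_bot_different_html", False), "remove"),
    Recipe("link_farm", lambda f: f.get("outbound_links", 0) > 500, "ignore_links"),
]

def cookbook_actions(features: dict) -> list[str]:
    """Return the actions of every recipe that matches the page, in recipe order."""
    return [r.action for r in RECIPES if r.matches(features)]

page_features = {"hidden_text_ratio": 0.35, "outbound_links": 40}
print(cookbook_actions(page_features))  # ['demote']
```

The appeal of this design is that new spam tactics can be handled by adding a recipe rather than retraining a model.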

Document Quality: Bias

It's super interesting to discover a mention of bias in the algorithm.

Anecdotally, I noticed that overly promotional / highly biased affiliate articles weren't performing as well as my more neutral articles.

This might be why!

From now on, I will avoid overly positive articles and will adopt a more professional, neutral tone when reviewing products.

As we continue looking into the content documentation...

localizedCluster - Information on localized clusters, which is the relationship of translated and/or localized pages

KeywordStuffingScore - The keyword stuffing score is represented in 7 bits, going from 0 to 127

spambrainTotalDocSpamScore - The document total spam score identified by spambrain, going from 0 to 1

We see even more indications that topical relevance plays a significant role in ranking. Strictly speaking, localizedCluster groups translated and/or localized versions of pages, but whenever Google speaks of clusters more broadly, I believe they are usually denoting topically related content. The idea is that if you're an expert on a specific cluster, then you will likely rank better for queries within that cluster.

Next, they have a specific keyword stuffing score! We've long known that Google prohibits keyword stuffing, so it's nice to see it here in the flesh. Even today, I still see webmasters keyword stuffing their titles (don't do this).
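A crude way to measure keyword stuffing is keyword density: how much of the text is taken up by one phrase. This minimal sketch maps density onto the 0 to 127 range of KeywordStuffingScore. The 10% "saturation" point is an arbitrary assumption of mine, and Google's real score almost certainly looks at more than raw density.

```python
import re

def keyword_density(text: str, phrase: str) -> float:
    """Fraction of words in text that belong to occurrences of phrase."""
    words = re.findall(r"[a-z0-9']+", text.lower())
    target = re.findall(r"[a-z0-9']+", phrase.lower())
    if not words or not target:
        return 0.0
    n = len(target)
    hits = sum(1 for i in range(len(words) - n + 1) if words[i:i + n] == target)
    return hits * n / len(words)

def stuffing_score(text: str, phrase: str, saturation: float = 0.10) -> int:
    """Scale density to 0-127, maxing out at the assumed saturation density."""
    ratio = min(keyword_density(text, phrase) / saturation, 1.0)
    return round(127 * ratio)

title = "Cheap Shoes | Cheap Shoes Online | Buy Cheap Shoes"
print(keyword_density(title, "cheap shoes"))  # 0.75
print(stuffing_score(title, "cheap shoes"))   # 127
```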

Finally, spambrainTotalDocSpamScore indicates that they also use AI to estimate the document spam score. Whenever Google mentions "brain" in an algorithm name, it is usually their way of saying they are using machine learning to accomplish the task. This implies that they trained a machine learning model on a large set of spammy documents and then use it to classify your document's spam level.

We don't know exactly what kind of training data Google originally provided to the AI spam algorithm; however, it's logical to assume that if your document looks like a spammy document, it will likely be classified as one.
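To illustrate the general idea (train on labeled documents, then output a spam probability between 0 and 1, the same range as spambrainTotalDocSpamScore), here is a tiny multinomial Naive Bayes classifier. This is a deliberately simple stand-in of my choosing: SpamBrain's internals are not public, and the training examples below are made up.

```python
import math
import re
from collections import Counter

def tokenize(text: str) -> list[str]:
    return re.findall(r"[a-z0-9']+", text.lower())

class NaiveBayesSpam:
    """Multinomial Naive Bayes with Laplace smoothing (illustration only).

    Labels: 1 = spam, 0 = not spam. Both classes must appear in the training data.
    """

    def fit(self, docs: list[str], labels: list[int]) -> "NaiveBayesSpam":
        self.word_counts = {0: Counter(), 1: Counter()}
        self.doc_counts = Counter(labels)
        for doc, label in zip(docs, labels):
            self.word_counts[label].update(tokenize(doc))
        self.vocab = set(self.word_counts[0]) | set(self.word_counts[1])
        return self

    def spam_probability(self, doc: str) -> float:
        """Estimated P(spam | doc), a number between 0 and 1."""
        n_docs = sum(self.doc_counts.values())
        log_score = {}
        for label in (0, 1):
            total_words = sum(self.word_counts[label].values())
            score = math.log(self.doc_counts[label] / n_docs)  # class prior
            for word in tokenize(doc):
                count = self.word_counts[label][word]
                score += math.log((count + 1) / (total_words + len(self.vocab)))
            log_score[label] = score
        # P(spam) = 1 / (1 + exp(log_ham - log_spam)); clamp to avoid overflow.
        diff = max(min(log_score[0] - log_score[1], 700.0), -700.0)
        return 1 / (1 + math.exp(diff))

model = NaiveBayesSpam().fit(
    ["win free casino bonus now", "cheap viagra free bonus",
     "how to train a puppy", "puppy food feeding guide"],
    [1, 1, 0, 0],
)
print(round(model.spam_probability("free casino bonus"), 3))  # 0.947
```

The takeaway matches the paragraph above: the model has no notion of "spam" beyond resemblance to its training examples, so a page that reads like known spam gets a high score.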

spamrank - The spamrank measures the likelihood that this document links to known spammers

As we continue digging deeper into the data used within content classification, we discover that "spamrank" measures the likelihood that the document links to known spammers.

Suffice it to say, if you link to bad places within your content, this will likely hurt your rankings.

This also means we have to watch out for accidental links within our content. Sometimes malicious actors try to add hidden links to content, sometimes people add redirects after a link is placed, and sometimes we make typos in our URLs that send the link to the wrong place.
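Because spamrank is defined as "the likelihood that this document links to known spammers", it is something you can roughly audit on your own pages. This minimal sketch assumes you maintain your own lists of known-bad and trusted domains (both lists below are hypothetical). It computes the share of outbound links pointing to bad domains and flags domains that look like a near-typo of a trusted one. It is my own illustration, not Google's method.

```python
from difflib import SequenceMatcher
from urllib.parse import urlparse

KNOWN_SPAM_DOMAINS = {"cheap-pills.example", "casino-spam.example"}  # hypothetical
TRUSTED_DOMAINS = {"wikipedia.org", "nih.gov"}                       # hypothetical

def domain(url: str) -> str:
    """Lowercase host name without a leading "www."."""
    host = urlparse(url).hostname or ""
    return host.removeprefix("www.")

def matches(host: str, base: str) -> bool:
    """True if host is base itself or one of its subdomains."""
    return host == base or host.endswith("." + base)

def spam_link_share(links: list[str]) -> float:
    """Share of outbound links that point to a known spam domain."""
    if not links:
        return 0.0
    bad = sum(1 for link in links
              if any(matches(domain(link), s) for s in KNOWN_SPAM_DOMAINS))
    return bad / len(links)

def likely_typos(links: list[str], threshold: float = 0.9) -> list[str]:
    """Links whose domain nearly, but not exactly, matches a trusted domain."""
    flagged = []
    for link in links:
        host = domain(link)
        if any(matches(host, t) for t in TRUSTED_DOMAINS):
            continue  # correct link to a trusted site
        if any(SequenceMatcher(None, host, t).ratio() >= threshold
               for t in TRUSTED_DOMAINS):
            flagged.append(link)
    return flagged

links = [
    "https://en.wikipedia.org/wiki/Dog",
    "https://wikipeda.org/wiki/Dog",    # typo
    "https://cheap-pills.example/buy",  # known spam
    "https://www.nih.gov/health",
]
print(spam_link_share(links))  # 0.25
print(likely_typos(links))     # ['https://wikipeda.org/wiki/Dog']
```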

compressedQualitySignals

crowdingdata

compressedQualitySignals and crowdingdata both likely represent user signals. Google cares a lot about user signals, to the point of saying that "they don't understand content, they fake it".


Crowd Data

We've long known that Google measures how humans react to content, and this appears to confirm it.

When creating content, I try to make it as captivating as possible for humans.

1. I aim to make the reader feel "as if they are in the right place for the information" as quickly as possible.

2. I quickly establish myself as a trusted authority on the subject.

3. I include charts, tables and other imagery to captivate my reader's attention.

(One of my favorite tricks is to include a relevant chart, graph or image that cuts off at the fold.

This gets people scrolling, and I noticed that when people start scrolling down a page, they are MUCH more likely to consume the rest of the page. The most frequent bounces come from people who never start scrolling!)

hostAge - The earliest firstseen date of all pages in this host/domain. These data are used in twiddler to sandbox fresh spam in serving time.

We've long known that there was a sandbox period for new websites; however, some Google employees denied this in the past. Now we see that new websites DO in fact have a sandbox period to prevent spam, and it appears to be determined by hostAge.
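The field description gives two concrete details: hostAge is the earliest "firstseen" date across all pages on a host, and it is used at serving time to sandbox fresh spam. Here is a minimal sketch of that logic. The 90-day window, the linear ramp and the 0.5 floor are assumptions of mine; the leak doesn't say how long the sandbox lasts or how it is applied.

```python
from datetime import date

def host_age(first_seen_dates: list[date]) -> date:
    """hostAge as described in the leak: the earliest firstseen date on the host."""
    return min(first_seen_dates)

def sandbox_multiplier(first_seen_dates: list[date], today: date,
                       window_days: int = 90, floor: float = 0.5) -> float:
    """Hypothetical dampening for young hosts.

    Hosts younger than window_days get a multiplier that ramps linearly from
    `floor` up to 1.0; older hosts are not affected. All values are assumptions.
    """
    age_days = (today - host_age(first_seen_dates)).days
    if age_days >= window_days:
        return 1.0
    return floor + (1.0 - floor) * max(age_days, 0) / window_days

old_site = [date(2025, 3, 1), date(2025, 6, 1), date(2025, 7, 1)]
new_site = [date(2025, 6, 29)]
print(sandbox_multiplier(old_site, today=date(2025, 7, 14)))  # 1.0
print(sandbox_multiplier(new_site, today=date(2025, 7, 14)))
```

Because the host's oldest page sets its age, publishing a brand-new page on an established domain does not reset the clock, which fits the common observation that new pages on aged domains rank faster than pages on new domains.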

Spam & Content

As we continue on our journey into Google's spam filters for content, there are a few more interesting tidbits:

GibberishScore - The gibberish score is represented in 7 bits, going from 0 to 127

freshboxArticleScores - Stores scores of freshness-related classifiers

onsiteProminence - Onsite prominence measures the importance of the document within its site
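The leak doesn't say how onsiteProminence is computed. One plausible way to measure "the importance of the document within its site" is to run PageRank over the site's internal link graph only. This is a minimal sketch under that assumption; the damping factor of 0.85 is the classic PageRank default, and the page paths are hypothetical.

```python
def internal_pagerank(links: dict[str, list[str]], damping: float = 0.85,
                      iterations: int = 50) -> dict[str, float]:
    """PageRank over internal links only (an assumed stand-in for onsiteProminence).

    links maps each page to the internal pages it links to.
    """
    pages = set(links) | {t for targets in links.values() for t in targets}
    n = len(pages)
    rank = {p: 1 / n for p in pages}
    for _ in range(iterations):
        new_rank = {p: (1 - damping) / n for p in pages}
        for page in pages:
            targets = links.get(page, [])
            if targets:
                share = damping * rank[page] / len(targets)
                for t in targets:
                    new_rank[t] += share
            else:
                # Page with no internal links: spread its rank evenly across the site.
                for p in pages:
                    new_rank[p] += damping * rank[page] / n
        rank = new_rank
    return rank

site = {
    "/": ["/guides/", "/about/"],
    "/guides/": ["/", "/guides/puppy-food/"],
    "/guides/puppy-food/": ["/"],
    "/about/": ["/"],
}
scores = internal_pagerank(site)
print(max(scores, key=scores.get))  # /
```

Under this assumption, pages that receive many internal links (like the homepage here) come out as the most prominent, which is one more reason to link deliberately to the pages you most want to rank.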