Google's March Core Update 2024

Full Review, Analysis and Recovery

Author Eric Lancheres

SEO Researcher and Founder of On-Page.ai

Last updated May 6th 2024

1. Google March Core Update Timeline

Forced To Take Drastic Action

As pressure mounts on Google to address the rise of low-quality AI content in the search results, the company has resorted to drastic solutions.

In the past, Google has attempted to avoid collateral damage as much as possible by carefully rolling out updates that err on the side of caution. The March Core Update, however, is one of the few times in search history that Google has taken drastic measures to curb spam, even at the cost of impacting legitimate, valuable web publishers.

This report is dedicated to the legitimate web publishers and SEO agencies that have been unfairly impacted by the latest, draconian Google March Core Update.

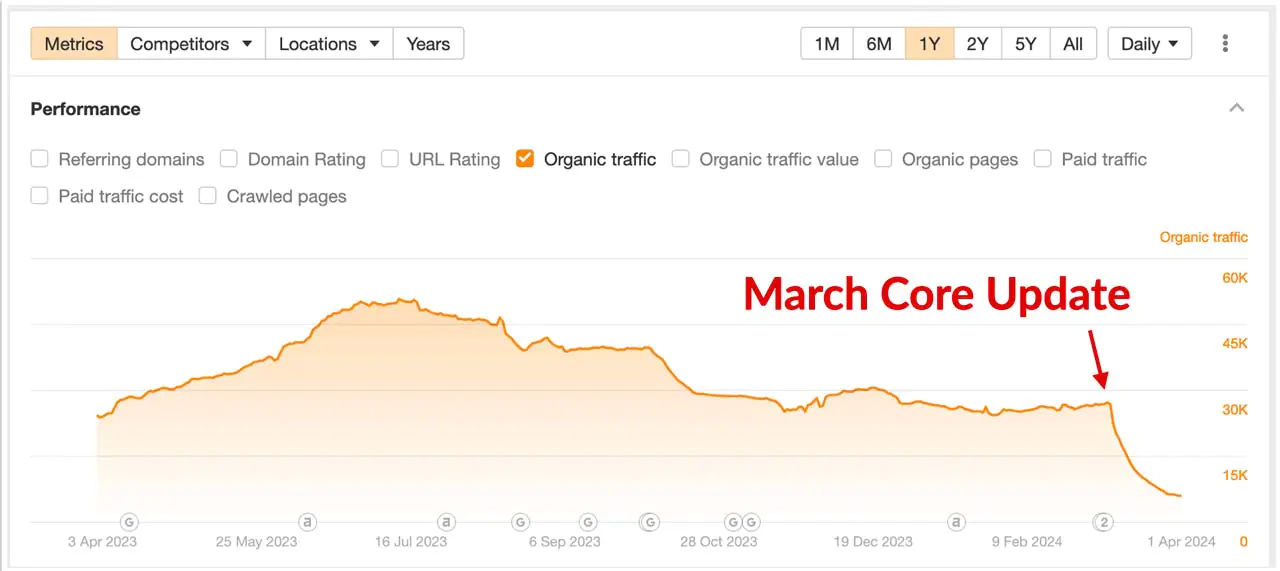

Launched officially on March 5th, 2024, the March Core Update rolled out alongside the March Link Spam update. Impacts from the March Core Update (MCU) began on March 5th, with many webmasters experiencing severe drops on March 8th. The rollout completed on April 19th, 2024. (Source)

May 6th update: Despite Google's announcement of a significant rollout on May 5th to address parasite SEO, no major ranking fluctuations have been observed.

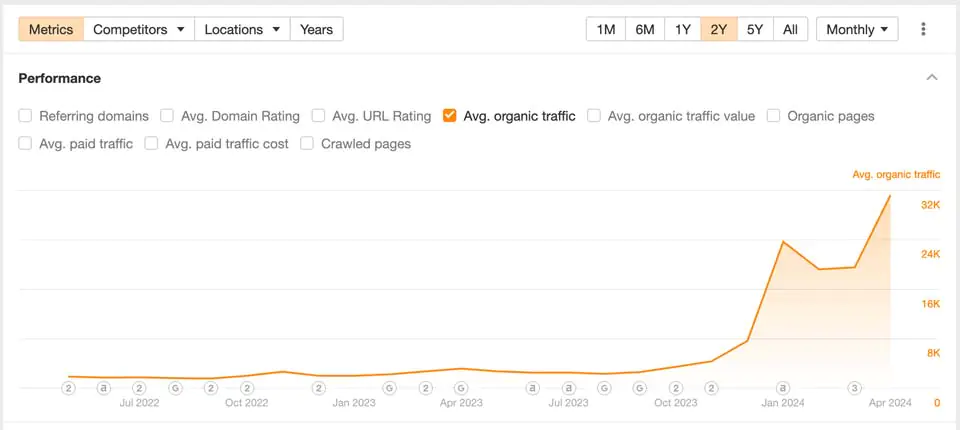

Ahrefs organic traffic graph of a website affected by the March Core Update. A sharp traffic drop appears on March 5th, 2024 and continues downward.

The March Core Update builds on top of the recent Helpful Content Update, so if you were affected by the previous update, you were likely further affected by the March Core Update. Google also released a Link Spam update (Source), which I will cover within this report.

If you've been experiencing drops for the past few months and have seen your traffic fall to record lows, rest assured: you've come to the right place for answers and solutions.

2. Official Statements About The March 2024 Core Update

(From the Official Blog)

While I agree with many search engine experts that Google will sometimes present slightly misleading information to the SEO community, I still believe there is some value in reviewing the official documentation for clues.

Here are some official statements about the March Core Update:

"New ways we’re tackling spammy, low-quality content on Search. [...] We’re enhancing Search so you see more useful information, and fewer results that feel made for search engines." (Source)

While I try not to get caught up in the details too often, the headline and sub-headline immediately announce that:

A) They introduced a "new" way of tackling low quality content. (New algorithm)

B) They are going after results made specifically for search engines.

It's interesting that Google is no longer just mentioning pure spam; instead, it is now treating "low quality" content with the same severity as pure spam.

"In 2022, we began tuning our ranking systems to reduce unhelpful, unoriginal content on Search and keep it at very low levels. We're bringing what we learned from that work into the March 2024 core update.

This update involves refining some of our core ranking systems to help us better understand if webpages are unhelpful, have a poor user experience or feel like they were created for search engines instead of people. This could include sites created primarily to match very specific search queries." (Source)

As many webmasters have noticed, Google states that this is a continuation of the Helpful Content Update. Hence, if you were impacted by the Helpful Content Update, you are likely to also have been impacted by the March Core Update. The silver lining, however, is that if you can recover from the March Core Update, you will also likely recover from the Helpful Content Update.

Once again, they are saying that they are focusing on "user accomplishment", which has historically been measured through diverse methods ranging from tracking search result clicks to using web data from various third-party sources (Chrome, Android, etc.). In addition, this is the first time they have mentioned going after sites designed to match "very specific search queries".

Further in the report, I will attempt to explain why this is the case and what you can do about it.

"We’ve long had a policy against using automation to generate low-quality or unoriginal content at scale with the goal of manipulating search rankings. This policy was originally designed to address instances of content being generated at scale where it was clear that automation was involved.

Today, scaled content creation methods are more sophisticated, and whether content is created purely through automation isn't always as clear." (Source)

In this latest update, Google claims to have introduced measures to address content produced at scale. While this is not new, what is interesting is that Google admits that these days, it isn't always clear when content has been created through automation. While many AI detection methods do exist (we developed one, which is available within On-Page.ai), the detection rate varies quite a bit and many false positives can occur. We recommend that users be cautious about relying too heavily on the results of AI detection systems, and it appears as if Google is taking a similar stance.

Google admits that direct AI detection is not a reliable way of identifying low-quality content; therefore, we can assume that it has developed an alternate method of detecting low-quality content.

"This will allow us to take action on more types of content with little to no value created at scale, like pages that pretend to have answers to popular searches but fail to deliver helpful content." (Source)

Once again, Google is stating that they are targeting pages that "fail to deliver". Historically, Google has accomplished this by estimating user accomplishment. If Google sends a user to a webpage and that user comes back to Google to search again for the same term, Google can deduce that the user did not find the pertinent information and that the page therefore failed to deliver what it promised.
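To make the "return to search" idea concrete, here is a minimal Python sketch of a per-URL return-to-search rate. Google's actual method is not public, so this is an illustration only: the `Click` record, the session grouping and the 60-second window are hypothetical assumptions, and a later click for the same query in the same session stands in for "the user searched again".

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass
class Click:
    """One hypothetical search-result click."""
    session_id: str
    query: str
    url: str
    timestamp: float  # seconds since the session started


def return_to_search_rate(clicks, window_seconds=60.0):
    """For each URL, the fraction of its clicks that were followed, within
    `window_seconds`, by another click for the same query in the same session.
    A crude proxy for 'the page failed to deliver'."""
    sessions = defaultdict(list)
    for click in clicks:
        sessions[click.session_id].append(click)

    total = defaultdict(int)
    returned = defaultdict(int)
    for events in sessions.values():
        events.sort(key=lambda c: c.timestamp)
        for i, click in enumerate(events):
            total[click.url] += 1
            for later in events[i + 1:]:
                if later.timestamp - click.timestamp > window_seconds:
                    break  # events are sorted, so nothing later can qualify
                if later.query == click.query:
                    returned[click.url] += 1
                    break  # count at most one return per click
    return {url: returned[url] / total[url] for url in total}
```

A page that users keep bouncing back from drifts toward a rate of 1.0, while a page that ends the search stays near 0.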

"Site Reputation Abuse. We’ll now consider very low-value, third-party content produced primarily for ranking purposes and without close oversight of a website owner to be spam. We're publishing this policy two months in advance of enforcement on May 5, to give site owners time to make any needed changes." (Source)

At the time of writing, this hasn't been implemented yet. It will likely take the form of manual actions reserved for a smaller subset of websites hosting parasite content.

"Expired domains that are purchased and repurposed with the intention of boosting the search ranking of low-quality content are now considered spam." (Source)

Google has been saying this for over a decade. While it's odd that they are restating that repurposed expired domains are considered spam, it is likely a hint that they updated or improved part of their detection algorithm during the March Core Update.

This is likely a minor update that addresses the following scenario.

IF (conditions are met):

1. Website ownership has been transferred less than 3 months ago, according to WHOIS records.

2. Website begins deploying content at scale (i.e., thousands of posts per day after a WHOIS transfer).

3. Content is not topically aligned with the content before the website ownership transfer.

THEN (action to be taken):

- Penalize website.

This would address the most brazen abuse of expired domains filled with AI content. However, for those that want to continue this practice, the likely solution is to purchase an expired domain that is topically aligned with the content you plan on publishing. Then, instead of immediately opening the floodgates, you can scale the content by slowly drip-feeding it, scheduling its release at a linear rate.
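The hypothetical IF/THEN rule above can be written as a small Python predicate. Every input and threshold here is an assumption for illustration, not a confirmed Google rule: 90 days approximates "less than 3 months", 1,000 posts per day stands in for "thousands", and `topic_similarity` is a hypothetical 0-to-1 score comparing content before and after the ownership transfer.

```python
from dataclasses import dataclass
from datetime import date, timedelta


@dataclass
class DomainSnapshot:
    """Hypothetical signals about a domain; none of these are known Google inputs."""
    whois_transfer_date: date            # last ownership change seen in WHOIS
    posts_per_day_since_transfer: float  # publishing rate after the transfer
    topic_similarity: float              # 0..1, content before vs after transfer


def looks_like_repurposed_expired_domain(
    snap: DomainSnapshot,
    today: date,
    max_transfer_age: timedelta = timedelta(days=90),  # "less than 3 months"
    scale_threshold: float = 1000.0,                   # "thousands of posts per day"
    min_topic_similarity: float = 0.5,                 # arbitrary cut-off
) -> bool:
    recently_transferred = today - snap.whois_transfer_date < max_transfer_age  # condition 1
    publishing_at_scale = snap.posts_per_day_since_transfer >= scale_threshold  # condition 2
    off_topic = snap.topic_similarity < min_topic_similarity                    # condition 3
    # THEN: all three conditions must hold before the site would be penalized.
    return recently_transferred and publishing_at_scale and off_topic
```

Because every condition must hold, a topically aligned domain or a slower publishing rate falls outside this particular rule, which is the loophole described above.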

"As this is a complex update, the rollout may take up to a month. It's likely there will be more fluctuations in rankings than with a regular core update, as different systems get fully updated and reinforce each other." (Source)

This conveys to me that the update involved changes to the link algorithm. The REASON it takes up to a month for link changes to settle is that the link power of one page affects the pages it links to, which in turn affect the pages they link to.

When Google announces fluctuations over a period of a month, it usually means that the link nodes are changing in power (and things bounce around a lot when that happens as link scores are recalculated).

It is VERY possible that Google introduced a new link metric component within their linking system which means the link score for every page needs to be recalculated.
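To illustrate why changing one link forces scores to be recalculated across many pages, here is the classic, publicly described PageRank power iteration in Python. Google's current link systems are certainly more complex and are not public; this sketch only demonstrates the ripple effect, and the tiny four-page graph is hypothetical.

```python
def pagerank(links, damping=0.85, iterations=200, tol=1e-12):
    """Classic PageRank via power iteration.

    links: dict mapping each page to the list of pages it links to.
    Returns a dict of scores that sum to 1.
    """
    pages = set(links) | {t for targets in links.values() for t in targets}
    n = len(pages)
    rank = {p: 1.0 / n for p in pages}
    for _ in range(iterations):
        # Pages without outgoing links spread their score evenly over all pages.
        dangling = sum(rank[p] for p in pages if not links.get(p))
        new = {p: (1.0 - damping) / n + damping * dangling / n for p in pages}
        for source, targets in links.items():
            if targets:
                share = damping * rank[source] / len(targets)
                for target in targets:
                    new[target] += share
        delta = sum(abs(new[p] - rank[p]) for p in pages)
        rank = new
        if delta < tol:
            break  # scores have stopped moving
    return rank


before = pagerank({"A": ["B"], "B": ["C"], "C": ["A", "D"], "D": ["A"]})
# Add one link, A -> D. No link pointing at C changes, yet C's score moves
# because B (C's only source) now receives a smaller share from A.
after = pagerank({"A": ["B", "D"], "B": ["C"], "C": ["A", "D"], "D": ["A"]})
for page in sorted(before):
    print(page, round(before[page], 3), round(after[page], 3))
```

Each iteration feeds the previous round's scores into the next, which is why a single new link component can keep rankings "bouncing around" until the whole graph converges again.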

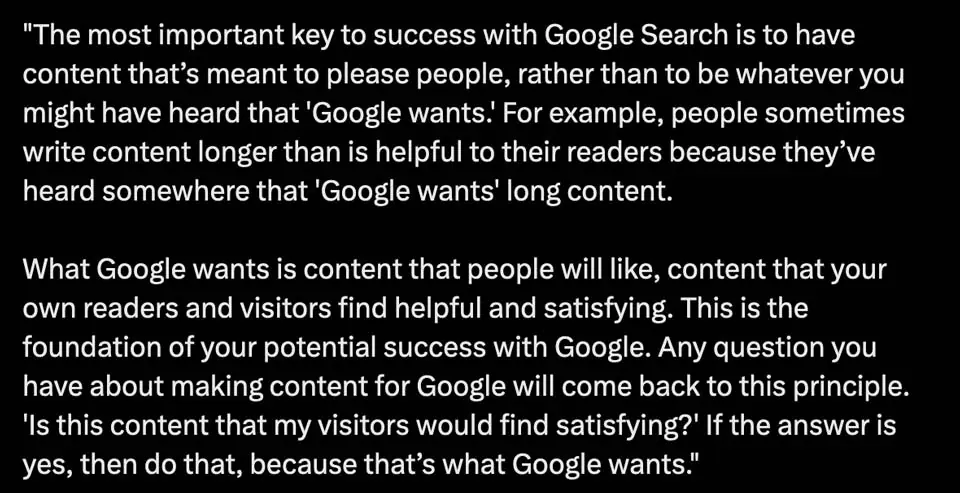

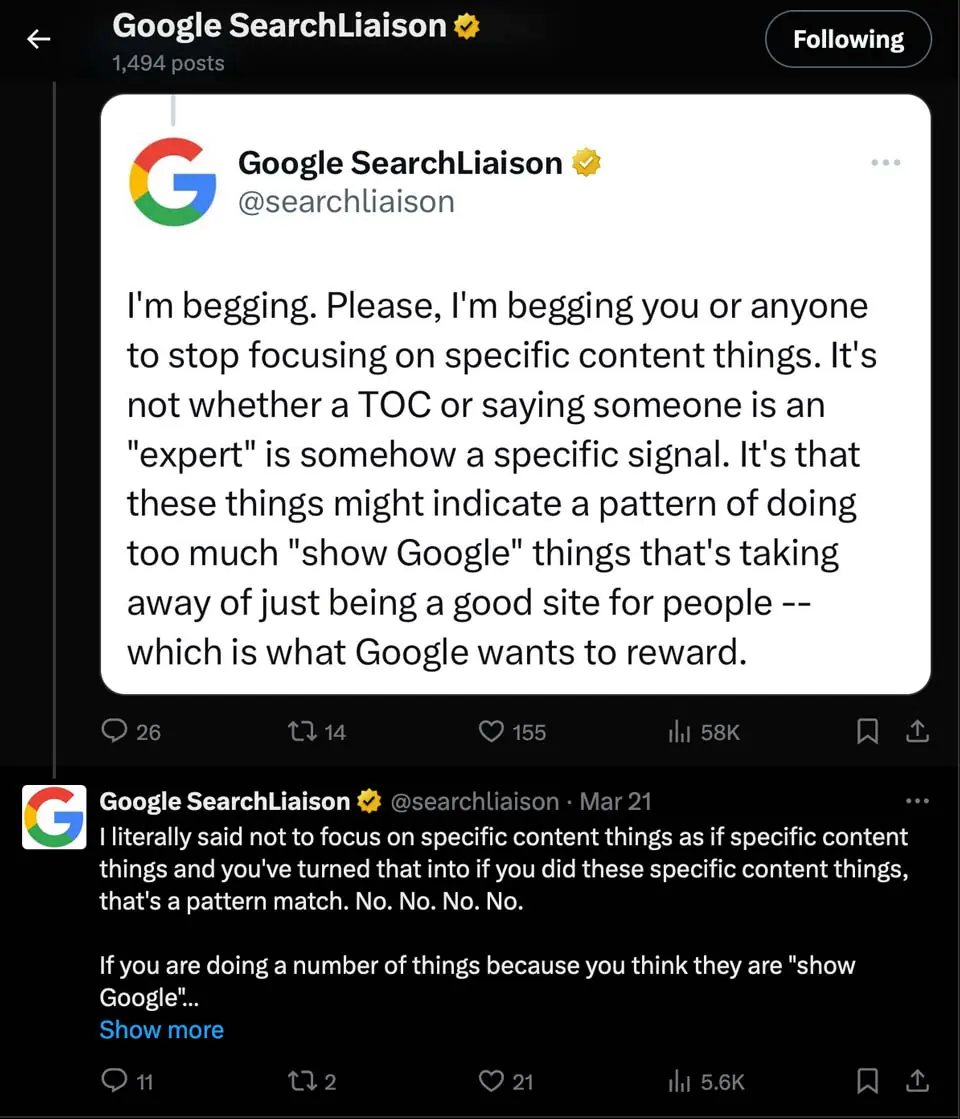

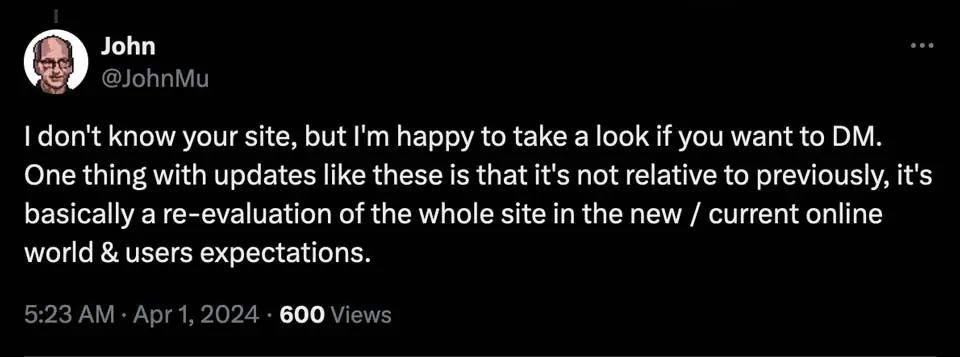

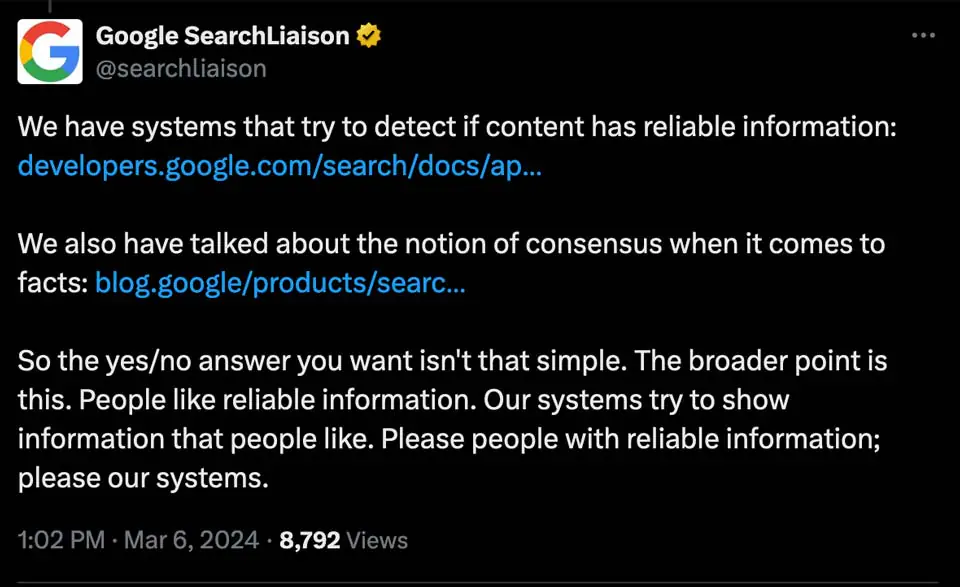

3. Statements From Google Search Liaison & Google Engineers

In addition, we have had a series of recent unusual (and quite honestly, slightly brazen) Twitter posts from the Official Google Search Liaison Twitter profile. While I was initially taken aback by the uncharacteristically unpolished and impromptu posts coming from an official Google property, the more impromptu a post is, the more likely it is to contain subtle information that was not meant to be shared.

In addition to posts from the Twitter Search Liaison channel, we've examined hints from John Mueller, a Google engineer.

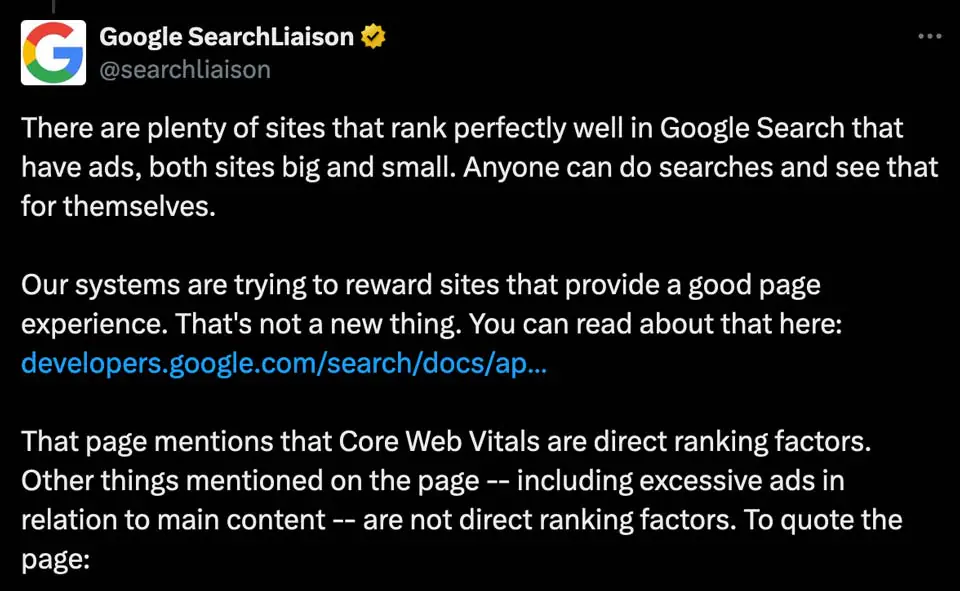

This official statement from Search Liaison highlights a few elements:

1. Google is trying to reward page experience. (We can assume that many good page experiences equate to a good site experience)

2. Excessive ads may indirectly hinder rankings; however, they are not a direct ranking factor.

3. Core Web Vitals are a direct ranking factor.

Throughout the entire month of March, Google Search Liaison has been heavily focused on promoting a good user experience as the primary thing that Google attempts to reward.

This passage further suggests that they are paying very close attention to how users interact with your content.

Surprisingly, this is the first time I have seen Google mention "satisfying", a term that comes from the concept of satisfying the user intent. This verbiage is used within patents but rarely by Google's public-facing representatives.

The uncharacteristically unpolished and impromptu posts coming from the official Google Search Liaison account hint at Google's frustration with SEOs. While there isn't any new information here, it's a reminder that Google is not looking for a specific element on a page in order to determine quality and instead, is focusing on how users interact with the page.

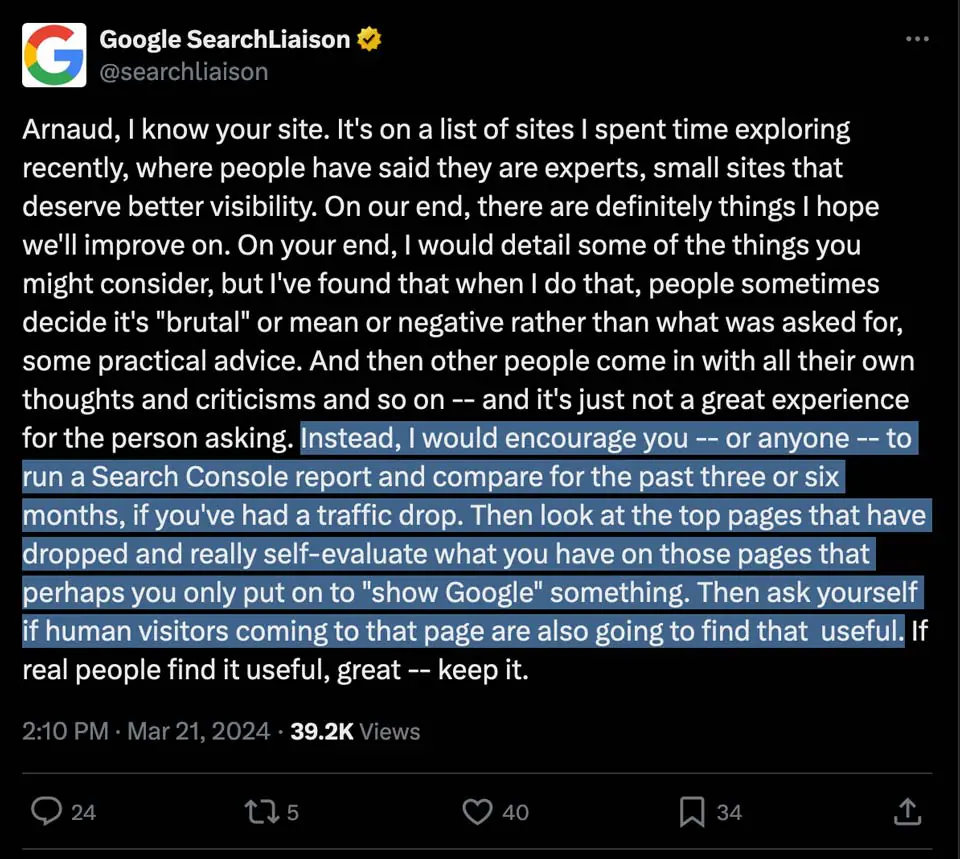

The official advice from Google is to:

1. Access Search Console

2. Identify the top pages on your site

3. Compare traffic for the past 3-6 months.

4. If a page has dropped, examine the page itself in detail to determine if the content is deemed useful.
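Steps 3 and 4 can be sketched in Python once you have per-page clicks for two comparable periods (for example, exported from the Search Console Performance report). The input dictionaries, the 100-click floor and the 30% drop threshold are my own assumptions, not part of Google's advice.

```python
def pages_that_dropped(before, after, min_clicks=100, drop_threshold=0.30):
    """Flag pages whose clicks fell by at least `drop_threshold` (30% by default).

    before, after: dicts mapping page URL -> clicks for two comparable periods.
    Pages with fewer than `min_clicks` beforehand are skipped as too noisy.
    Returns (page, clicks_before, clicks_after, percent_change) tuples,
    largest absolute loss first.
    """
    flagged = []
    for page, old in before.items():
        if old < min_clicks:
            continue
        new = after.get(page, 0)  # a page missing from `after` lost all clicks
        change = (new - old) / old
        if change <= -drop_threshold:
            flagged.append((page, old, new, round(change * 100, 1)))
    return sorted(flagged, key=lambda row: row[1] - row[2], reverse=True)
```

Sorting by absolute loss puts the pages that cost you the most traffic at the top, which matches the advice to start with your top pages.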

Examining your top pages is good advice, as it will usually highlight recurring issues that might be replicated throughout your entire website. (And we can assume that the top pages that drive the most traffic are also responsible for the bulk of your site's user experience.)

However, this advice hilariously contradicts the advice from John Mueller, Google engineer, down below.

Ultimately, they are both correct and are suggesting different approaches to the same underlying problem. John Mueller seems to acknowledge that the current March Core Update is a site-wide update and that, therefore, you must improve the entire website in order to recover.

Search Liaison, on the other hand, is likely suggesting that in order to improve an entire site, you must first start with a single page and then work your way through the rest once you identify recurring issues.

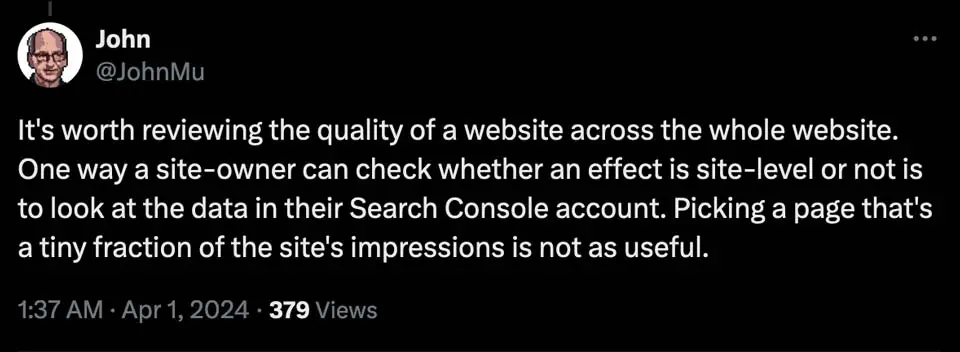

This is further confirmed by John Mueller, as he states that the March Core update is:

1) A re-evaluation of the entire site. (Site wide signal / Score)

2) Based on user expectations (Experience / Satisfying user intent / etc)

This is why we have seen entire websites drop even if they have some good pages.

Finally, Google states that they are able to identify 'reliable information' which might just be matching information against the existing knowledge base... however in my experience, they are mostly just watching how people interact with content to determine if the content is 'reliable'.

We have seen many instances of Google presenting false/inaccurate data within the search snippet so I highly doubt that there are processes in place to de-rank inaccurate information.

In my opinion, the important takeaway from the Google communication is that the March Core update introduced a new site wide signal that attempts to measure and quantify the user experience of the website. While this isn't new (Google has been focused on site wide user experience for nearly a decade), they do appear to have taken a new approach that has had serious repercussions on some legitimately helpful sites.

4. Statistics, Charts, Figures

(Before & After Data)

Before we dive into the theories, manual analysis and potential recovery paths, I thought it would be useful to go over the specific March Core Update data. I have access to quite a lot of data, as we have been tracking search engine fluctuations across previous Google Core Updates for years.

Here is the result of over 121,000 data points comparing the BEFORE and AFTER during the March Core Update. Please note that correlation does not imply causation. These charts provide a snapshot into the ever-changing search landscape.

Before: Mid-Late February 2024

After: Early April 2024
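Each before/after figure in this section is a relative change in a page-1 average. As a minimal sketch, assuming you have one metric value per ranking page for each snapshot, the calculation looks like this; the sample numbers are hypothetical and are not the report's data.

```python
from statistics import mean


def percent_change(before_values, after_values):
    """Relative change (%) in the average of a page-1 metric between two snapshots."""
    before_avg = mean(before_values)
    after_avg = mean(after_values)
    return (after_avg - before_avg) / before_avg * 100


# Hypothetical word counts for a handful of page-1 results (not the report's data).
before = [1500, 2500, 2000]
after = [1800, 1700, 1900]
print(f"{percent_change(before, after):.1f}%")  # prints -10.0%
```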

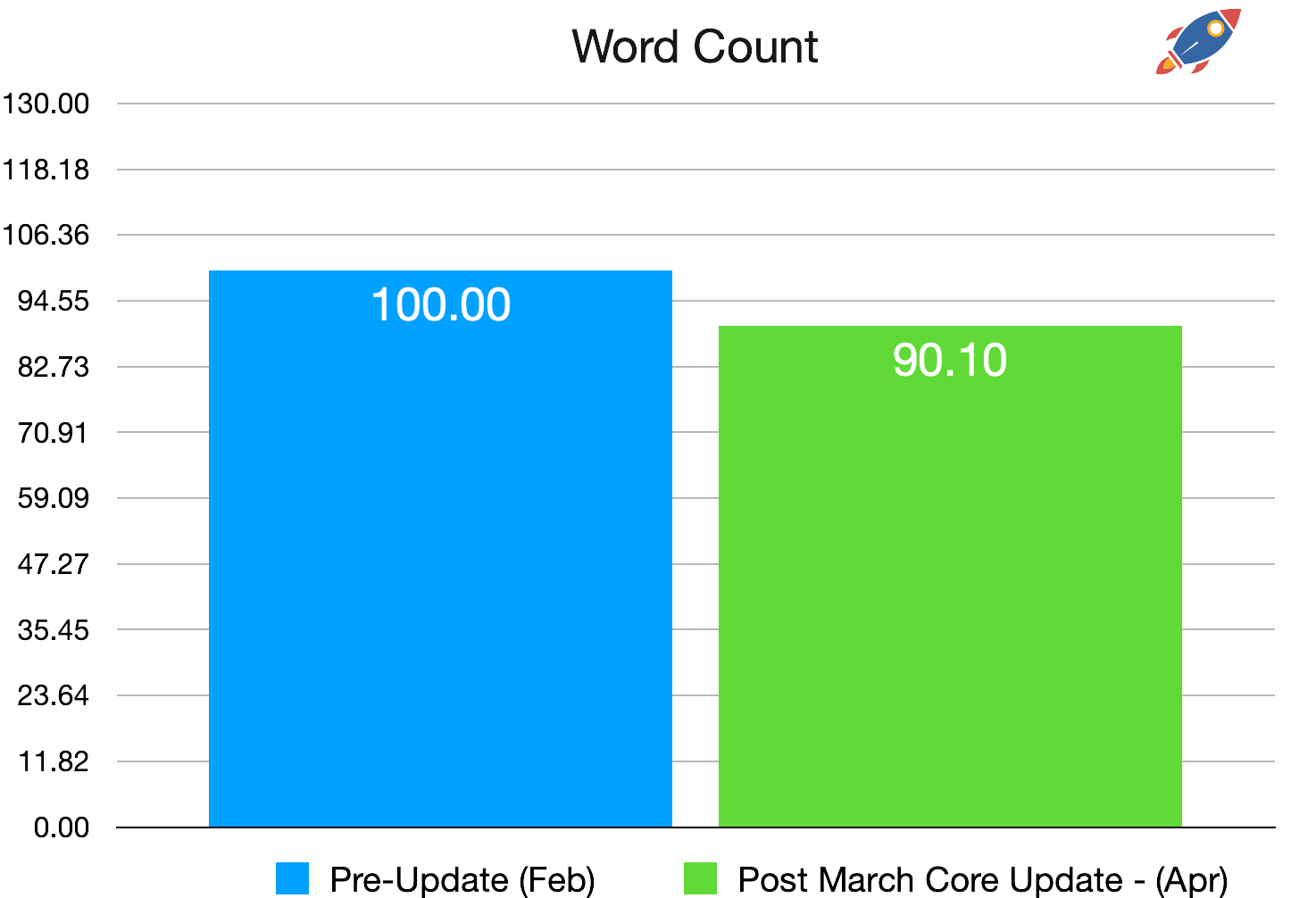

Average Page #1 Word Count

Historically, the average word count has been fairly stable. This is why I believe that a 9.9% reduction in average word count after the March 2024 Core Update is a little unusual.

This indicates that, on average, there is more short form content ranking in the search engine results.

In spite of this result, I must stress that the range of content length for competitive keywords can vary tremendously. (From as low as 350 words to 4000 or more words for more complex terms).

While the worldwide average seems to have dropped, it is, in my opinion, a mistake to try to make all your content shorter (or longer) based on this data.

First, Google has openly stated that word count itself is not a ranking factor. (Source)

Second, according to my internal testing, shorter content often (but not always) leads to lower engagement, as visitors bounce off faster. All things being equal, longer, more in-depth content typically leads to longer visitor sessions and appears more valuable. If you can maintain a high entity density (more on this later), then longer content will usually win.

If you want more precision on how your article length compares to the competition, there are excellent optimization tools that can give you an exact target word count for your specific keyword.

Optimization report comparing word count, sub-headline, image and entities.

I personally believe that Reddit posts and threads might have significantly influenced the word count metric as most Reddit pages often only have 350-800 words... and Reddit is ranking for almost everything these days.

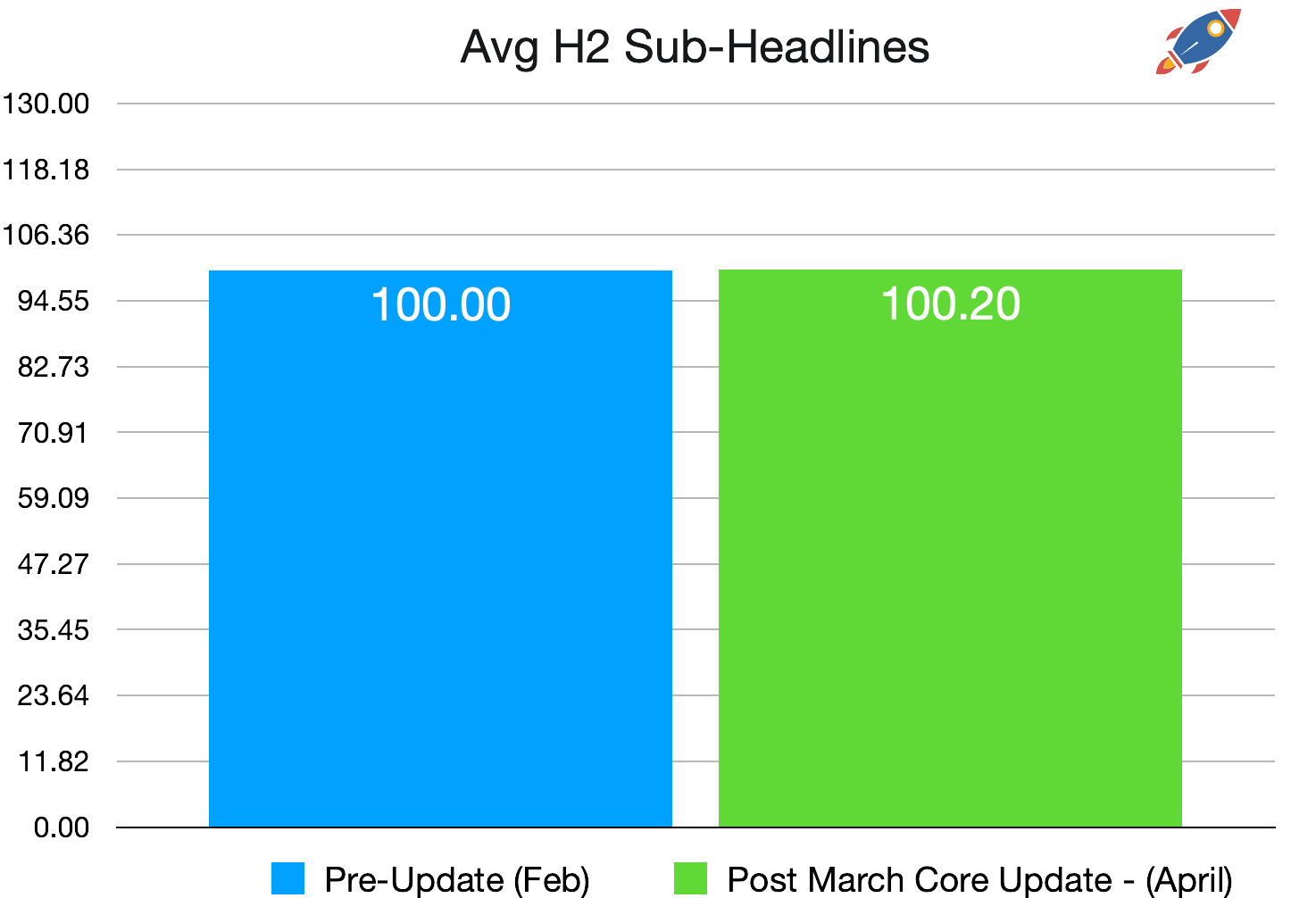

Average Quantity Of H2 Sub-Headlines

The average quantity of H2 sub-headlines on page 1 of Google results remained essentially the same. I believe that this is an indication that the on-page portion of Google's algorithm has not changed during this update.

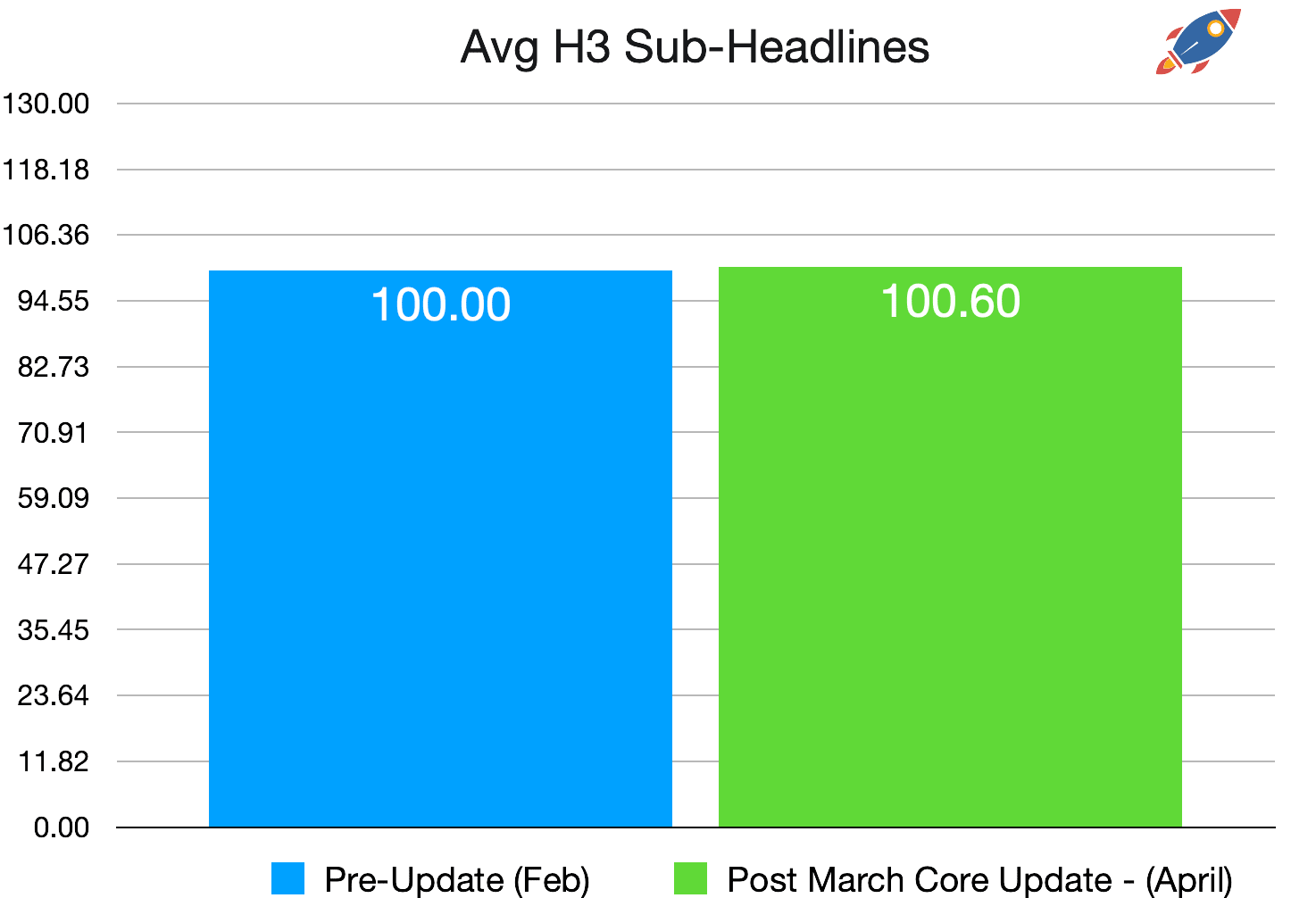

Average Quantity Of H3 Sub-Headlines

The average quantity of H3 sub-headlines on page 1 of Google results remains the same. I believe this is an indication that Google did not make any meaningful changes to how it evaluates traditional on-page ranking factors.

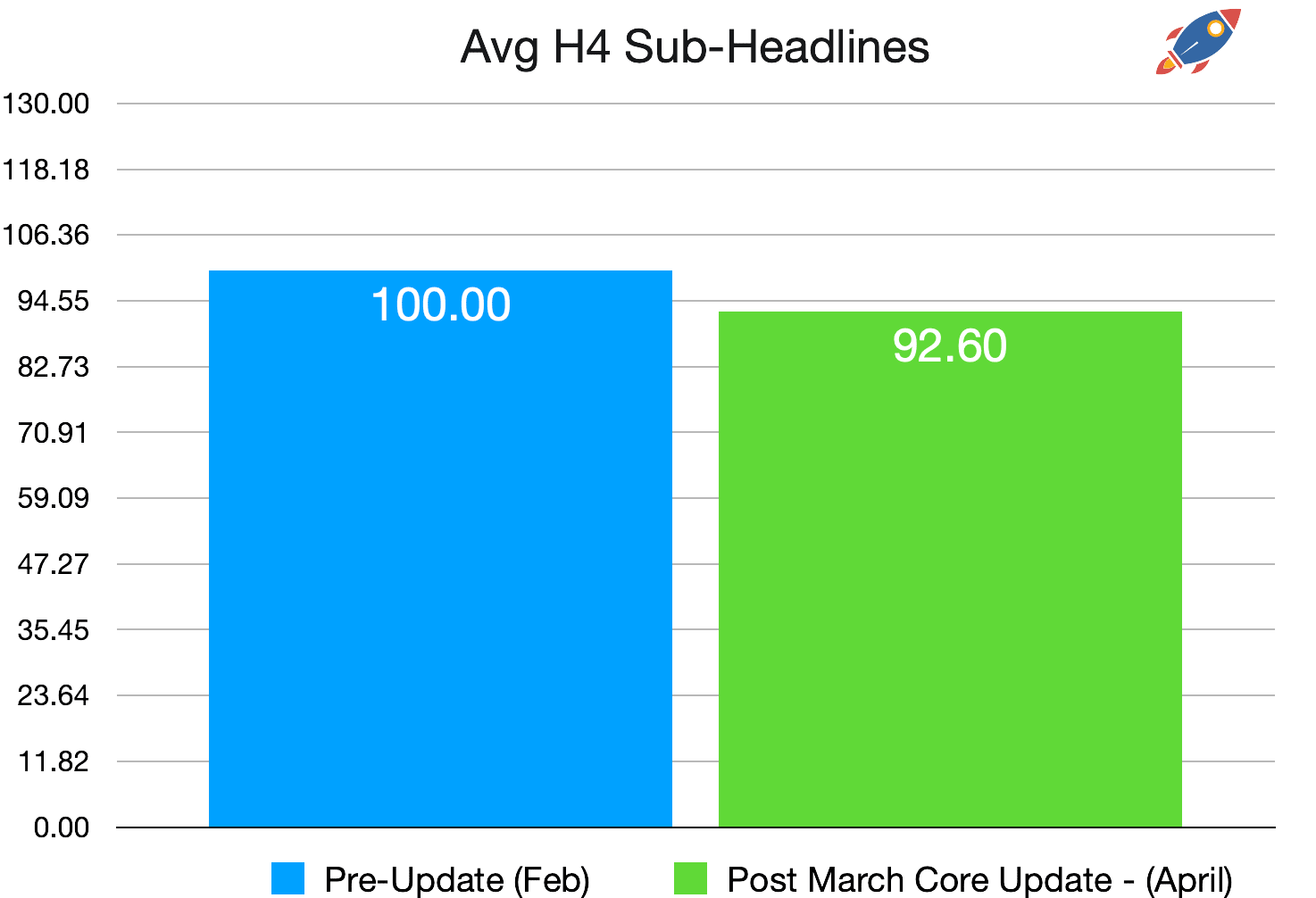

Average Quantity Of H4 Sub-Headlines

The average quantity of H4 sub-headlines decreased by 7.4%. I personally believe that this is also within the margin of error, as H4 sub-headlines are rarely used compared to H2 and H3 sub-headlines, and we expect this figure to fluctuate more than the others depending on which sites are ranking.

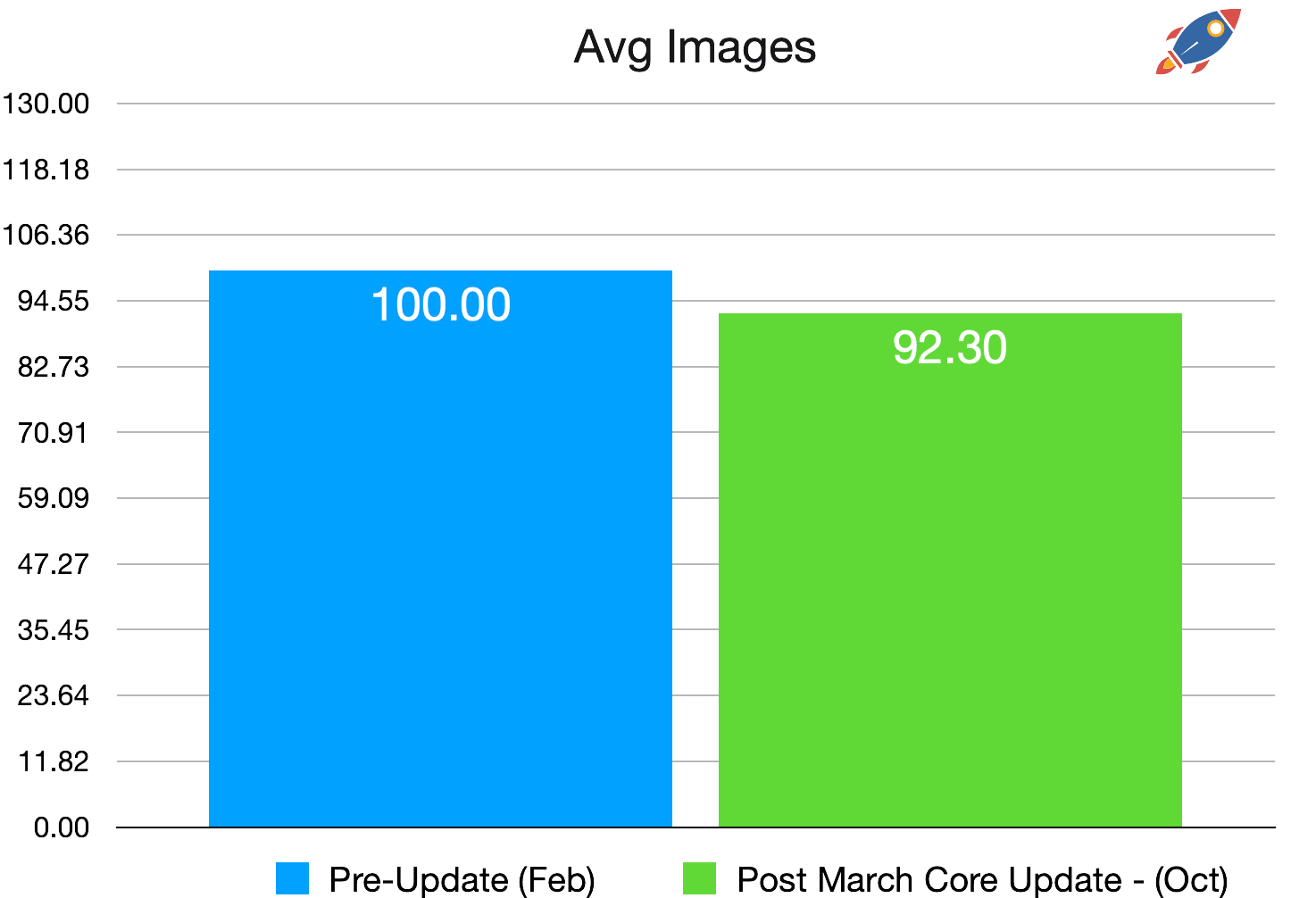

Average Quantity Of Images Within Main Content

The average quantity of images within the main content dropped by 7.7%.

As with word count, I would argue that images can be beneficial and lead to a better overall page experience. In my personal testing, articles that contained 3 or more images outperformed articles with a single image.

In addition, I performed an extensive case study back in 2021 comparing articles with and without images. To no one's surprise, the results revealed that articles with images are more likely to rank.

Google has recently been favoring sites like Reddit, LinkedIn, and old TripAdvisor forum threads, which typically contain few to no images. I'd argue that the observed drop in average images is not because images lack value, but rather because of the type of sites Google is rewarding.

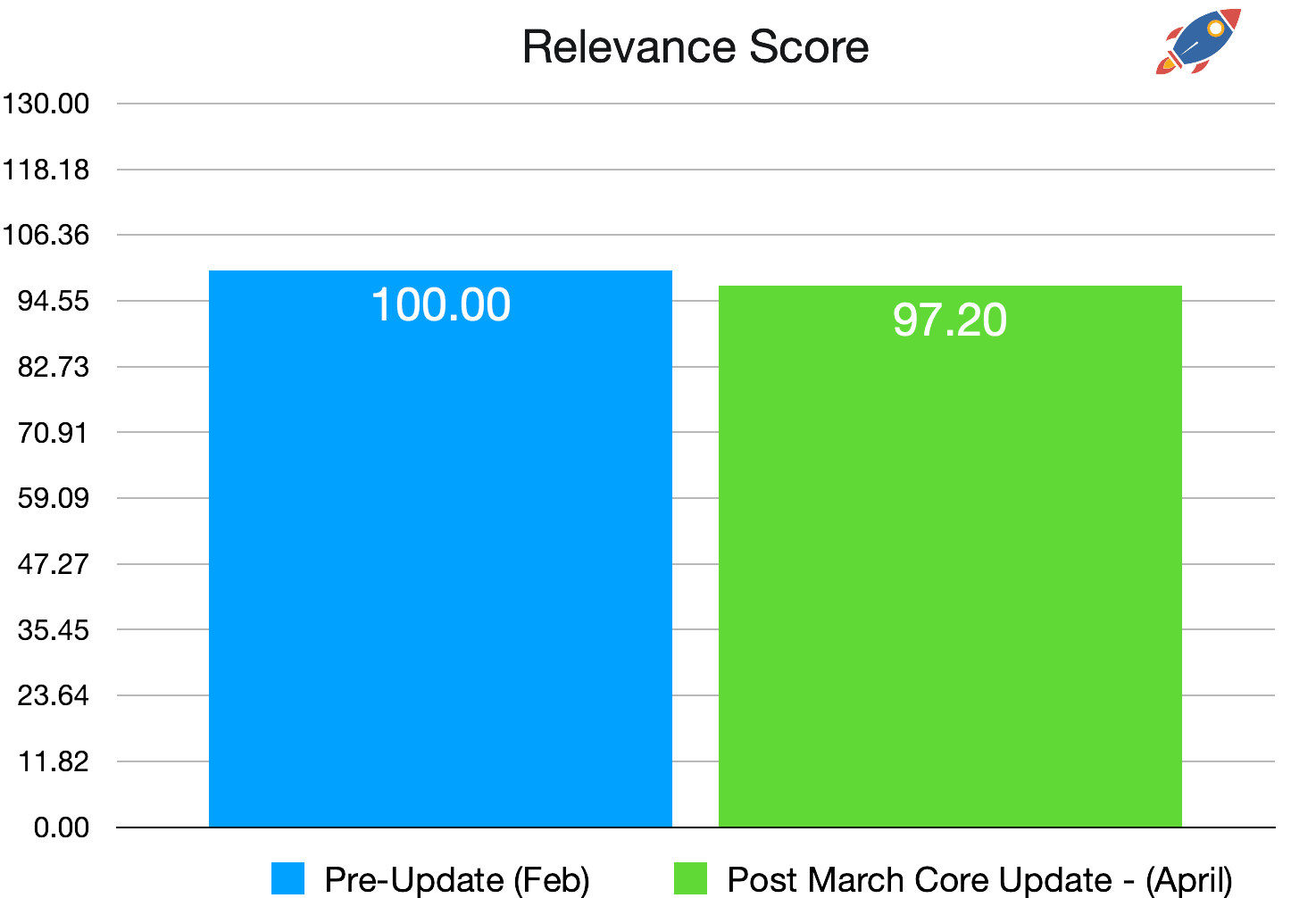

Relevance Score

The relevance score is a unique On-Page.ai metric that mimics modern search engines to measure the relevance of a document for a specific term based on the search term, related entities, word count and entity density.

It aims to help you optimize your page by adding related entities to your content while minimizing fluff and irrelevant text.

The slight 2.8% reduction in relevance score indicates that Google is favoring slightly less SEO-optimized documents after the update. Overall, however, I'd say that this indicates that Google has not really touched the on-page portion of the algorithm.

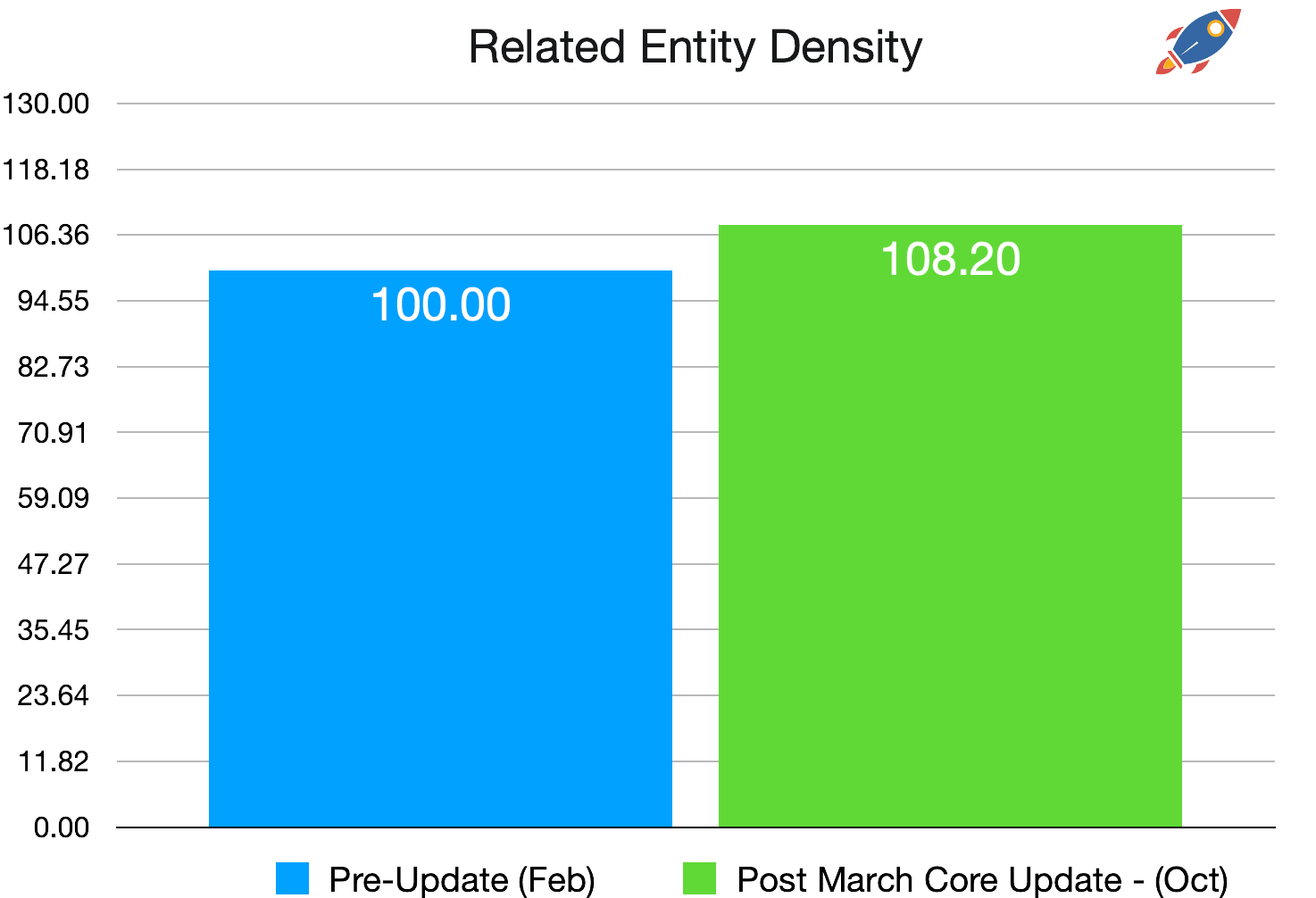

Entity Density

Related entity density measures how often related entities are found within the text in relation to filler / other words. While the overall raw entity count decreased due to the word count diminishing, the entity density actually increased by 8.2%.

This indicates that Google's algorithm still reads, understands and seeks out content with related entities, regardless of the length. As we previously discovered, related entities are extremely important and are one of the driving factors once Google trusts your website.
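For readers who want a rough feel for entity density, here is a simplified Python sketch: the share of words in a text covered by matches of a supplied list of related entities. This is an assumption-laden illustration, not On-Page.ai's actual formula. The entity list has to come from elsewhere (an optimization tool or your own research), and overlapping entities such as "dog" and "dog food" would be double-counted.

```python
import re
from typing import Iterable

_WORD = re.compile(r"[a-z0-9']+")


def _tokens(s: str) -> list:
    """Lowercase word tokens; punctuation is dropped."""
    return _WORD.findall(s.lower())


def entity_density(text: str, entities: Iterable[str]) -> float:
    """Share of words in `text` covered by matches of the given entity phrases.
    Simplified illustration only; overlapping entities are double-counted."""
    words = _tokens(text)
    if not words:
        return 0.0
    covered = 0
    for entity in entities:
        phrase = _tokens(entity)
        n = len(phrase)
        if n == 0:
            continue
        # Count every position where the entity's words appear in sequence.
        for i in range(len(words) - n + 1):
            if words[i:i + n] == phrase:
                covered += n
    return covered / len(words)
```

Under this definition, trimming filler words raises density even if no entities are added, which is consistent with density rising while raw entity counts fell.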

5. Links & Guest Posts

(Paid vs Natural Links)

Alongside the March Core Update, Google released a separate Link Spam update, specifically targeting unnatural links that were influencing rankings.

Whenever there are significant link updates, the values of links tend to fluctuate tremendously as adjustments to one link’s value can alter another’s. During these updates, it is not uncommon for website rankings to increase and then decrease.

Link Lists & Brokers

It wouldn't be out of the ordinary for Google to accumulate and take action on a massive collection of sites that sell guest posts. (John Mueller has long teased that he is collecting links being marketed to him.)

Some link brokers have lists of 55,000+ sites available for guest posting, and if I personally have access to multiple lists of this size, then it wouldn't be far-fetched to think that Google might have access as well.

(Link brokers are even using Google Sheets to share them!)

While this is unconfirmed at the moment, it is possible that Google has passed these site lists on to manual reviewers, who would then confirm whether a site is selling links. (It wouldn't make sense to blanket-process a list of sites without checking them first, as anyone could easily slip competitor sites into a list of 40,000 sites.)

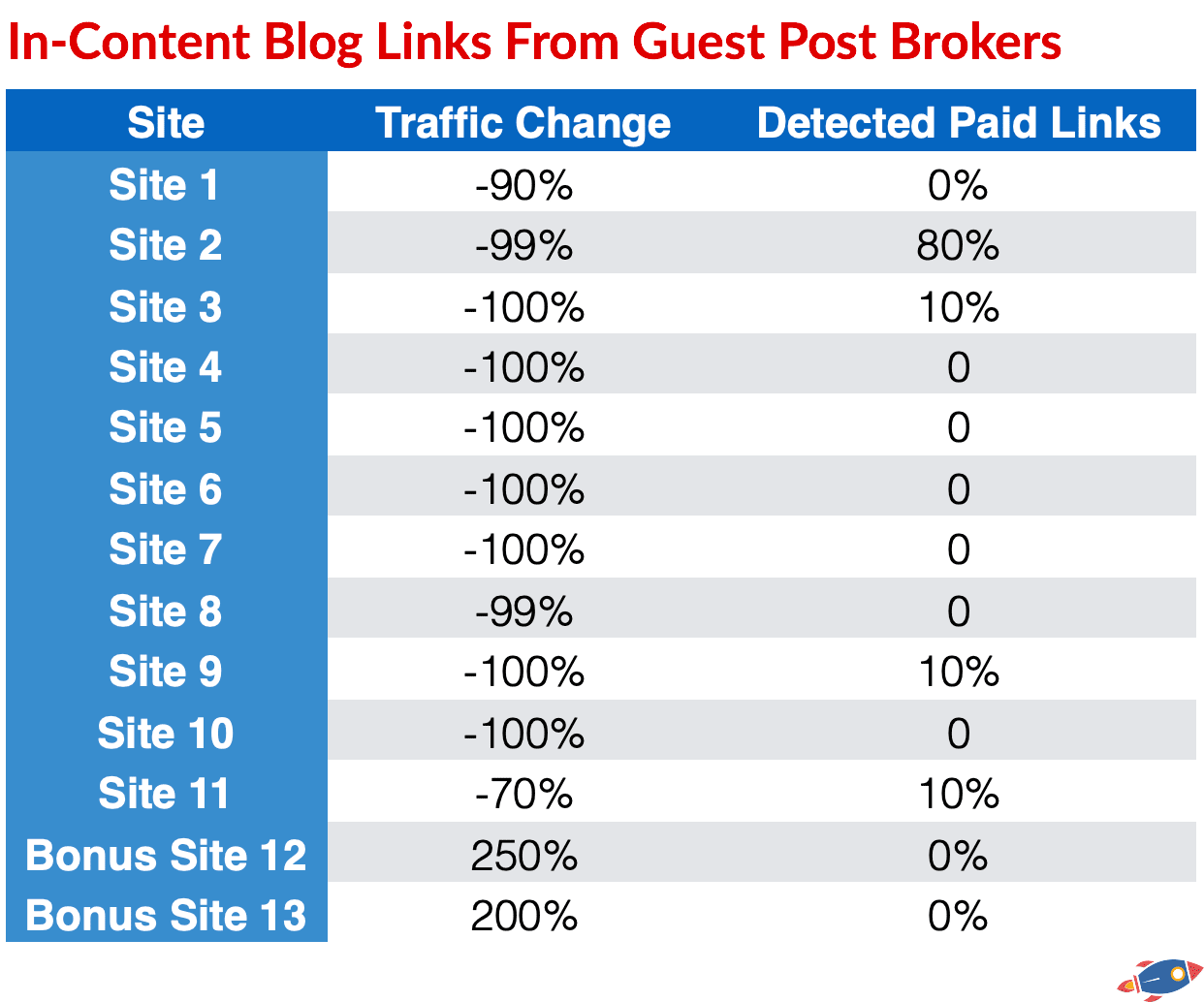

I thought it would be worthwhile to check, in order to prove or disprove that Google has taken specific action on sites selling guest posts.

Link Test Methodology:

In order to test my theory, my team and I verified the link profile of 11 penalized websites (and 2 sites that were rewarded) against popular guest post lists.

Step 1) Extract the link profile for each website and compile a list of the "do-follow", "in-content" links from WordPress sites.

Step 2) Sample the top 15 links and cross check them with our list(s) of known guest post sellers.

Step 3) Calculate the percentage of links originating from websites known to sell links.
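Steps 2 and 3 boil down to a simple ratio. In this Python sketch, `backlinks` is assumed to be a list of referring domains for the do-follow, in-content links from Step 1, already sorted strongest first (how the "top" links were ranked is not specified above, so that ordering is an assumption), and `known_sellers` is a set of domains taken from guest post vendor lists.

```python
def paid_link_share(backlinks, known_sellers, sample_size=15):
    """Percentage of the top `sample_size` referring domains that appear
    on guest post vendor lists.

    backlinks: referring domains of do-follow, in-content links (Step 1),
               assumed to be sorted strongest first.
    known_sellers: domains collected from guest post seller lists.
    """
    sellers = {domain.lower() for domain in known_sellers}
    sample = backlinks[:sample_size]  # Step 2: sample the top links
    if not sample:
        return 0.0
    hits = sum(1 for domain in sample if domain.lower() in sellers)
    return 100.0 * hits / len(sample)  # Step 3: percentage from known sellers
```

Comparing domains case-insensitively avoids missing a match just because one list capitalizes a domain differently.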

After verifying the link profiles of 11 penalized websites, only one website had an abnormal quantity of links originating from guest post sellers.

This isn't to say that the penalized sites didn't have any paid links... just that those links were not found within the list of vendors. While this doesn't rule out the possibility that Google took action on certain sites, it is unlikely that the Link Spam update specifically targeted vendor lists.

In our reverse search, starting with link seller websites and analyzing the performance of sites with paid links, we found no clear patterns. Some paid guest post links seemed effective, while others did not.

Ultimately Google operates on link ratios. Even if you did have a few spammy links, they would likely be overlooked as natural links will typically balance them out. That said, I still caution people against having an entirely artificial link profile as they are more likely to be affected during these updates.

6. AI Content Detection

(Low Quality Vs High Quality AI Content)

The first thing that came to mind when Google released the update is: "Did they go after AI content?"

It's no surprise that low-quality AI content has been an issue lately, as it's incredibly easy and cheap to produce. I personally believe that Google has been very smart regarding AI content and that it took quite some foresight to declare that AI content can be "OK" as long as it provides value.

Search Engines Have Limited AI Detection Capabilities (But Don't Take Action On Them)

It would be foolish to believe that because Google allows AI content, it cannot detect AI content.

Google published a research paper back in 2021 claiming they were using BERT-based AI detection to assess the search landscape. (Source)

(Note: This does not mean that this went into production. They might have a drastically different process or tool internally at this point. What happens during the research phase does not always make it into production.)

While I do not have access to internal tools from Google, I personally believe most modern search engines CAN detect generic, straight out of the box AI content from popular AI models.

However! With new language models being released every month, it would be foolish to believe that Google (and other search engines) could keep accurately detecting all AI content forever. I suspect that most modern search engines started with an internal AI detection system but soon realized that trying to keep up with every new AI model would be futile.

The newest models are increasingly difficult to detect, and anecdotally, some of the best-performing content on my test sites is entirely AI-written, even outperforming the human-written content hosted on the same site.

In addition, AI content with a focus on high engagement, hosted on high quality sites, has seen a surge in rankings in some of my tests. It really seems as if it's not about the content itself... but rather the site that is hosting it.

However! I believe that generic AI content will lead to poor metrics which will then lead to a poor performing website...

In order to exploit low quality AI content, you'll need to leverage the reputation of a good website.

It might be an unpopular opinion, however I believe that good AI content can be quite valuable and is already prevalent on the internet. We just don't realize it's AI powered content.

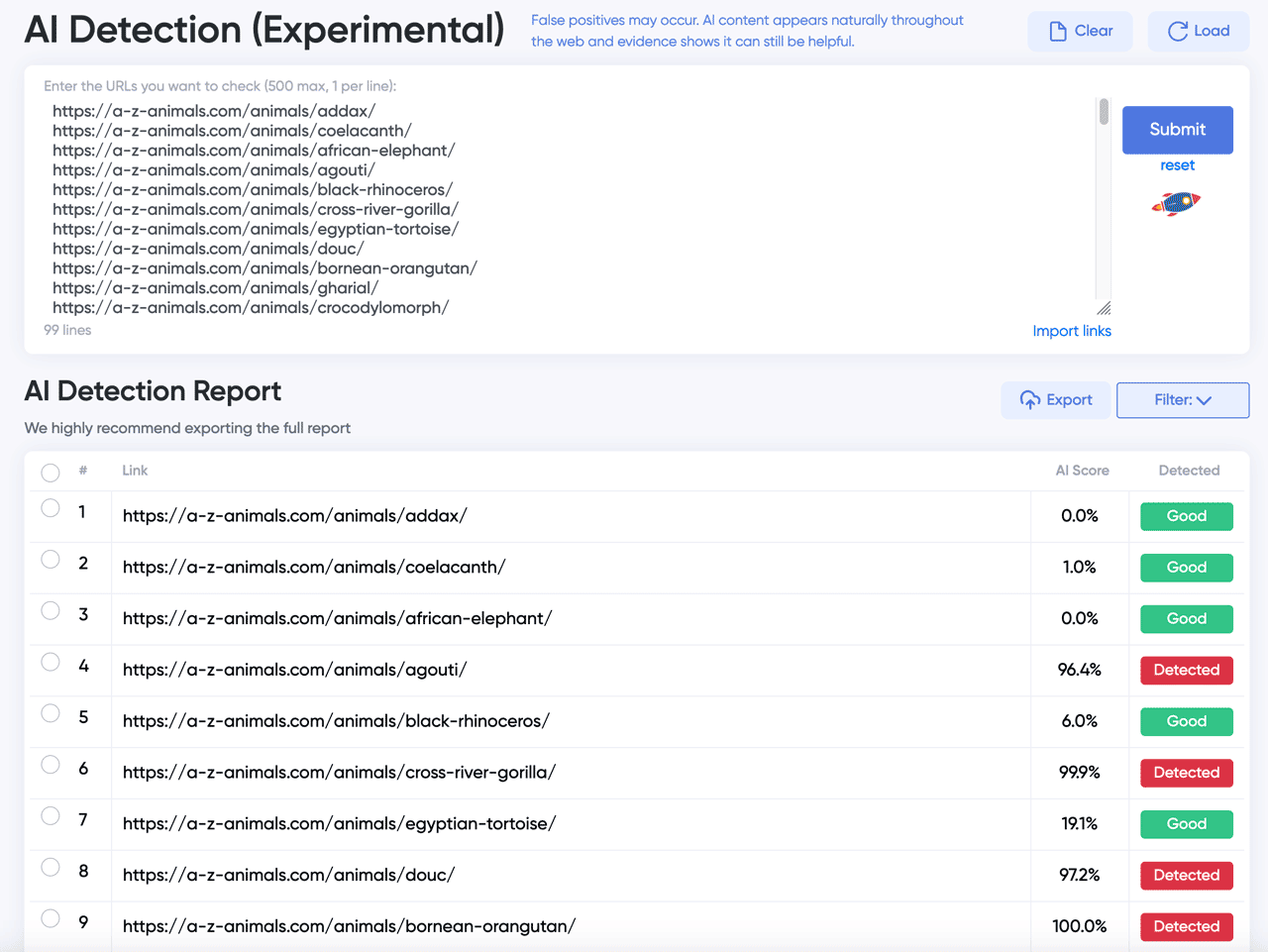

Mass AI Testing Methodology

In order to determine if Google specifically targeted AI content, we analyzed the same series of 11 penalized sites (and 2 rewarded sites) to determine the percentage of AI content on each.

Step 1) Extract the top 300 pages from each website, omitting pages such as privacy policy, contact us, etc.

Step 2) Process each of the top 300 pages, checking for an AI detection rate of 50% or above using a BERT-based AI detection system. (The same type of system that was described in Google's research paper.)

Step 3) Note the percentage of pages detected for each site.
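The three steps above can be sketched in Python. The `detector` argument is a stand-in for any classifier that returns an AI-likelihood score between 0 and 1 (such as a BERT-based model); it is hypothetical here, as are the URL fragments used to skip utility pages. Pages are assumed to arrive already sorted by traffic, so the first 300 non-excluded pages are the "top 300".

```python
from typing import Callable, Iterable, Tuple

# Hypothetical URL fragments for utility pages that should not be scanned.
EXCLUDED_FRAGMENTS = ("privacy-policy", "contact-us", "terms")


def ai_page_share(
    pages: Iterable[Tuple[str, str]],
    detector: Callable[[str], float],
    threshold: float = 0.5,
    limit: int = 300,
) -> float:
    """Percentage of a site's top pages whose AI score meets the threshold.

    pages: (url, text) pairs, assumed to be sorted by traffic, top first.
    detector: any function returning an AI-likelihood score in [0, 1].
    """
    scanned = flagged = 0
    for url, text in pages:
        if any(fragment in url.lower() for fragment in EXCLUDED_FRAGMENTS):
            continue  # Step 1: skip privacy policy, contact pages, etc.
        if scanned >= limit:
            break  # Step 1: only the top `limit` content pages
        scanned += 1
        if detector(text) >= threshold:  # Step 2: 50% or above
            flagged += 1
    # Step 3: share of scanned pages that were flagged
    return 100.0 * flagged / scanned if scanned else 0.0
```

Raising `threshold` makes the scan more conservative, trading missed AI pages for fewer false positives.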

Example of a mass AI detection scan using the On-Page.ai BERT-based AI detection module.

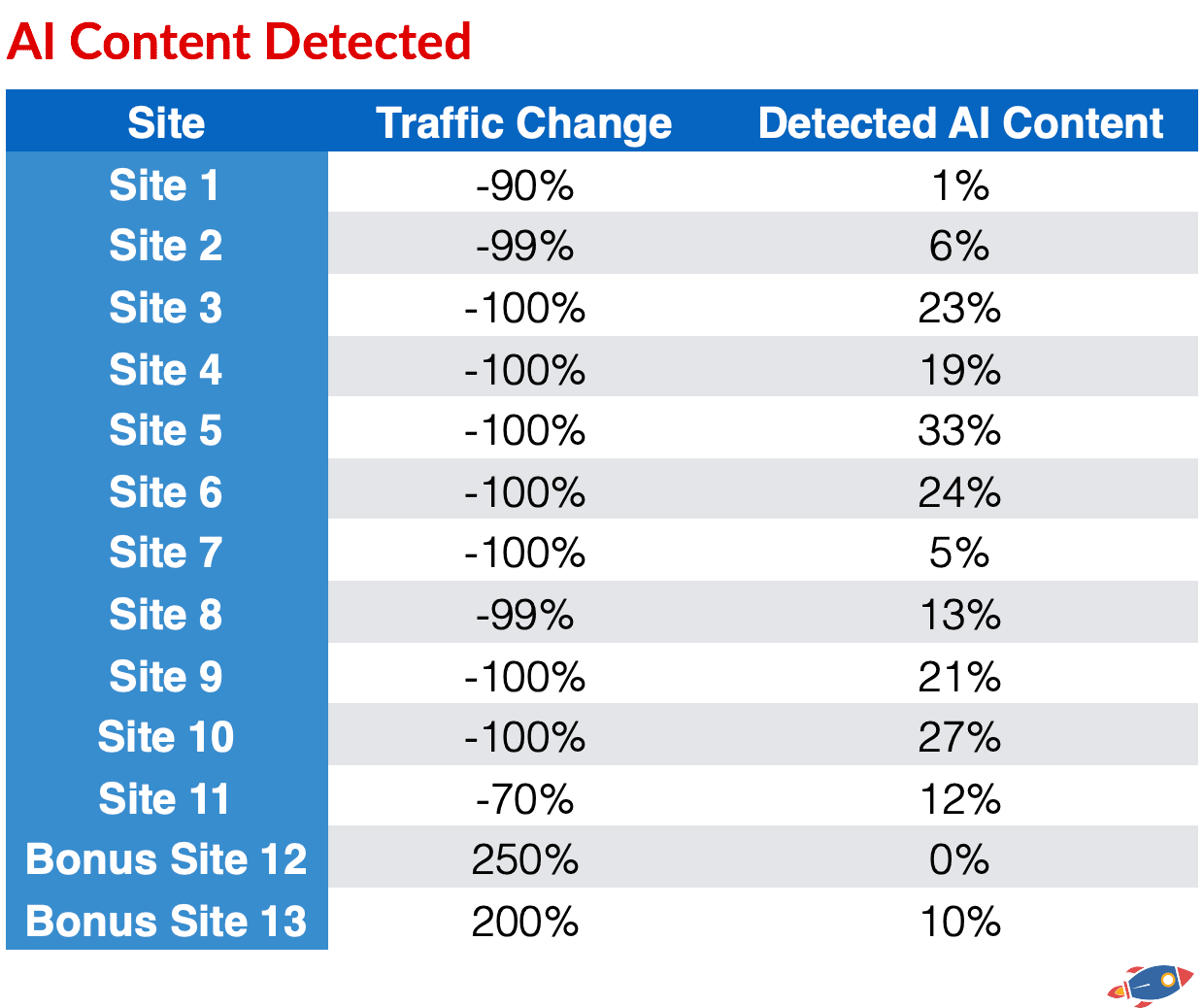

AI Public Sites Results:

The result of the AI detection test scanning 300 pages from each penalized website.

Before analyzing the results, it’s important to understand that our AI detection assigns confidence levels to how likely it is that a document is AI-generated. In addition, we do not know the type or source of AI used on these sites. We were relatively conservative with our detection system in order to avoid false positives (unlike some other AI detection tools that are overly aggressive, falsely detecting content).

While the sample size of data is relatively small, we do see some interesting trends:

First, it appears as if most sites on the internet are using some level of AI within their content. (Even outside this relatively small list, we've scanned thousands of sites using our mass AI detection, and we can usually identify some AI content, even on prominent websites.)

There are reports that even performing grammar corrections with a tool such as Grammarly or asking for a suggestion from ChatGPT can sometimes lead to AI being detected.

We do see that some sites were penalized despite using next to no AI content (Sites 1 and 7), while on the opposite end of the spectrum, websites heavily utilizing AI content were also impacted (Sites 3, 5, 6 and 10).

Interestingly, the sites that saw significant increases seemed to have more human content / content that evaded our detection. That said, even site 13, which saw a significant traffic increase after the March Core update, contained AI generated content.

However, even sites with absolutely no AI content still experienced some penalties, as demonstrated down below.

Overall, because of the prevalence of AI content, it is difficult to pinpoint a direct correlation. Anecdotally, however, it does appear as if sites using generic AI "introduction, questions, conclusion" articles felt a larger impact.

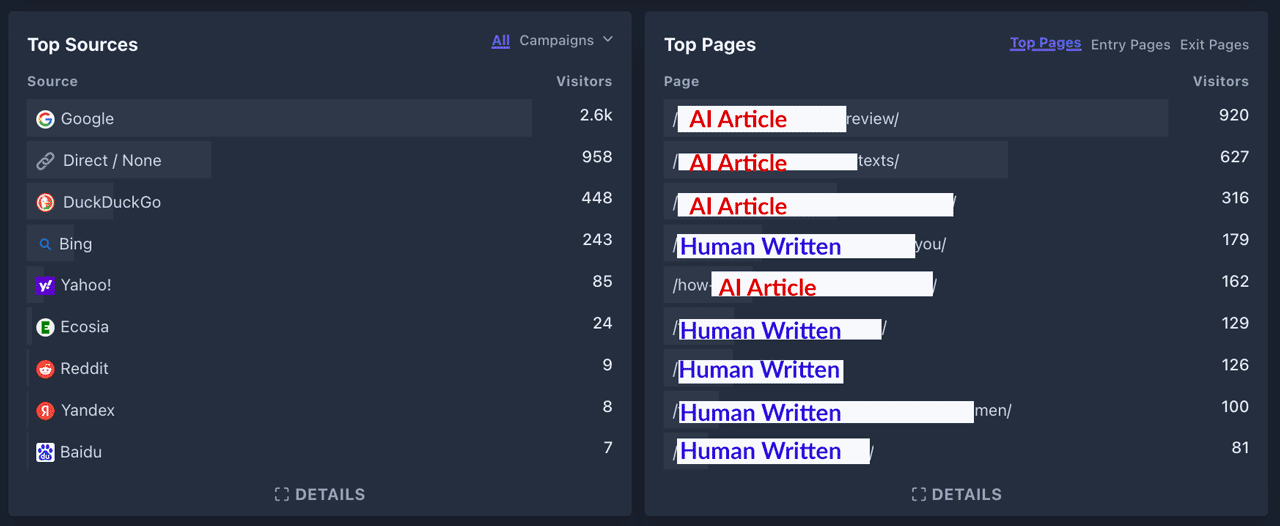

Additional In-House AI Test Data:

In addition to monitoring public websites, I also manage a portfolio of websites that are specifically designed to preserve a particular mix of article types. This allows me to quickly monitor Google's reaction to AI content.

1. Sites with human only articles

2. Sites with a mix of human & AI articles

3. Sites with AI only articles.

Within the "AI only" site category, I have sub-categories comparing different versions of AI articles, often split testing different articles to measure ranking performance, user experience and engagement. In all cases, the articles target real keywords and are monitored for search engine changes.

Site 1: Full AI (High Engagement AI Content)

To my surprise, one of the test sites hosted on a domain with considerable authority, hosting full AI articles, saw an increase in rankings after the recent March core update.

While I understand this isn't representative of all AI (after all, it only contains one type of AI content and this is only one website), this does indicate that Google did NOT explicitly target all AI content.

Site 2: Hybrid AI & Human Content

The rankings of the second website remained exactly the same with no significant increases or decreases in web rankings. What is interesting is that high quality AI content is still significantly outperforming the human written content on the same website.

The AI content on this specific website outranks the human written content and also produces longer session times (more engaging articles). This is why I believe that good AI content can be beneficial, even after the March Core Update.

7. Testing Platforms

(WordPress Performance)

One rumor that seems to have been repeated online is that Google is purposely penalizing certain themes, layouts or specific sites.

While it's true that sites using popular affiliate themes might have seen more of an impact (because affiliate sites lend themselves to creating a certain type of content), I thought it would be valuable to examine the performance of a popular affiliate theme.

Platform Testing Methodology

Step 1) Identify a popular WordPress theme and track down websites using that theme. We chose the Astra WordPress theme and used the footprint "Powered by Astra".

Step 2) Locate multiple sites using the same theme and compare the performance.
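Step 1's footprint search can be approximated by checking a page's HTML for the "Powered by Astra" text. This Python sketch assumes you have already fetched the HTML (fetching is left out to keep it self-contained) and uses a crude regex to strip tags, so it is only a heuristic: site owners can remove or edit the footer credit, so a missing footprint does not prove a site isn't running Astra.

```python
import re

# Case-insensitive, tolerant of extra whitespace between the words.
FOOTPRINT = re.compile(r"powered\s+by\s+astra", re.IGNORECASE)
TAG = re.compile(r"<[^>]+>")


def has_astra_footprint(html: str) -> bool:
    """True if the page text contains 'Powered by Astra'.
    Tags are replaced with spaces first, so 'Powered by <a>Astra</a>' still matches."""
    text = TAG.sub(" ", html)
    return FOOTPRINT.search(text) is not None


print(has_astra_footprint('<footer>Powered by <a href="https://wpastra.com/">Astra</a></footer>'))
```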

Credit: https://www.wpcrafter.com/ runs the Astra theme and contains "Powered by Astra" in the footer.

Site #1 - Astra Theme Increases In Traffic

The first website we identified experienced a significant increase after the March Core Update.

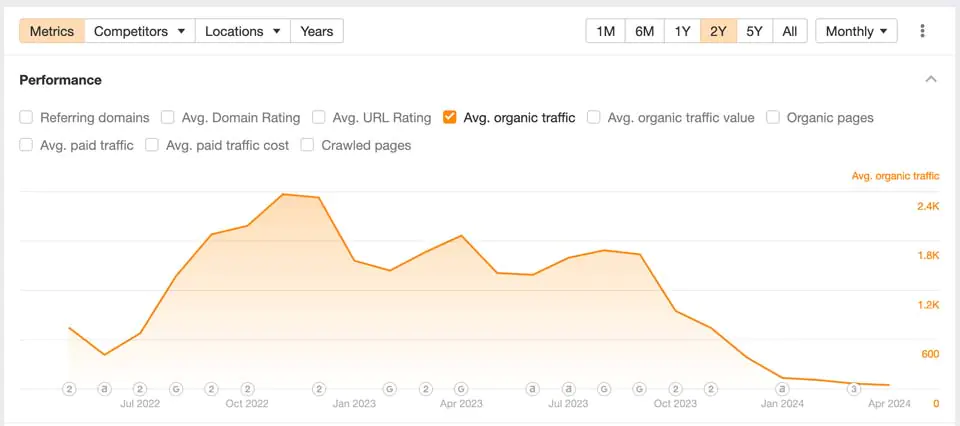

However... not all sites running the Astra theme experienced the same fate.

A second site, running the exact same WordPress theme, with a similar layout, css and appearance, experienced a significant drop in traffic after the March Core update.

We can conclude that the theme, the WordPress CMS and the CSS are NOT responsible for the penalty.

It would be unusual for Google to penalize a certain CMS, theme or stylesheet (all things that have been suggested online) so I felt the need to present evidence to the contrary. While a poor looking website can likely contribute to overall bad metrics which might in turn contribute to lower rankings, it does not appear to be a direct ranking factor.

8. In-Depth Site Analysis

Trends & Observations

The process of reviewing dozens of sites manually is not very scientific yet I feel it remains one of the best ways to identify trends, develop new ideas and understand a new algorithm update. It is often by identifying the exceptions that we learn the most and can validate our general theories on the current algorithm.

Although I manually reviewed over 87 sites (with more being sent to me daily) over a period of 3 weeks in March and April 2024, I didn't think it would be useful to include a breakdown of every single site. What follows is just a tiny fraction: the exemplary sites that stood out.

Here's what I discovered:

Site #1: Programmatic Website Gone Wrong

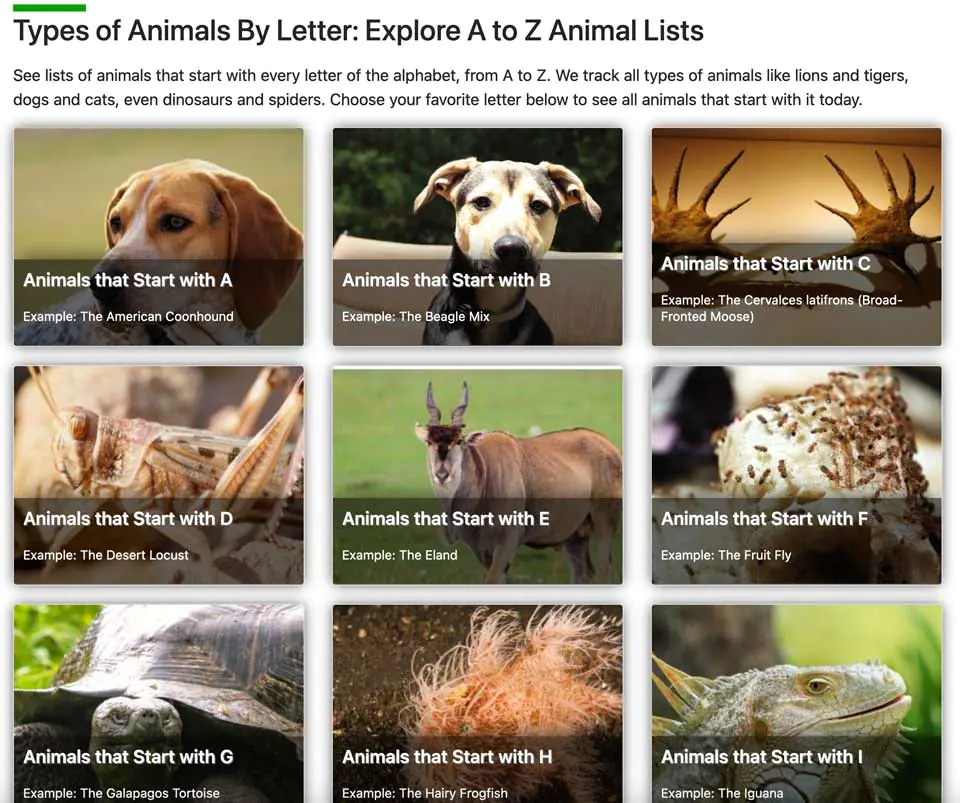

One website that was previously doing very well, reaching an estimated 10 million organic search visitors according to Ahrefs, saw a massive decline after the March Core Update. This site is interesting because I believe it is right on the edge, containing both good and bad content.

I wouldn't be surprised if, with a bit of housekeeping, this site could recover in a future update.

Ahrefs traffic graph of a site penalized by the March 2024 Core update.

The website began its life as a great resource and is an example of a well-executed programmatic website. The owners originally used AI to create a large quantity of pages that provided value to users.

The screenshot below shows lists of animals sorted by the first letter, a resource that could be useful to anyone that needs a hint while working on a challenging word puzzle. (This is the "good" part of the website)

AZAnimals site provides useful programmatic content

Unfortunately... a bit of good content cannot outweigh the sheer quantity of lower quality content that the site subsequently started to publish.

This is demonstrated below with an auto-generated Amazon affiliate 'review' page.

AI generated review page promoting Amazon products

This low-quality AI-generated Amazon listicle page is just one example of the type of content found throughout the site. It isn't difficult to scrape the titles and images of Amazon products and then add an AI-generated list of bullet points alongside them.

(As someone who did affiliate marketing for 10 years, I get it... the appeal of being able to put up an affiliate list page in seconds is enticing. However, from a user's point of view, there's nothing I hate more online when I'm looking for legitimate opinions about a product.)

I suspect that the site originally had good metrics due to the animal resource pages...

Unfortunately, it appears that the website then continued to add too many "low quality pages", which eventually tipped the scale. This would explain why it kept ranking through the Helpful Content Update and was finally penalized after the March Core update.

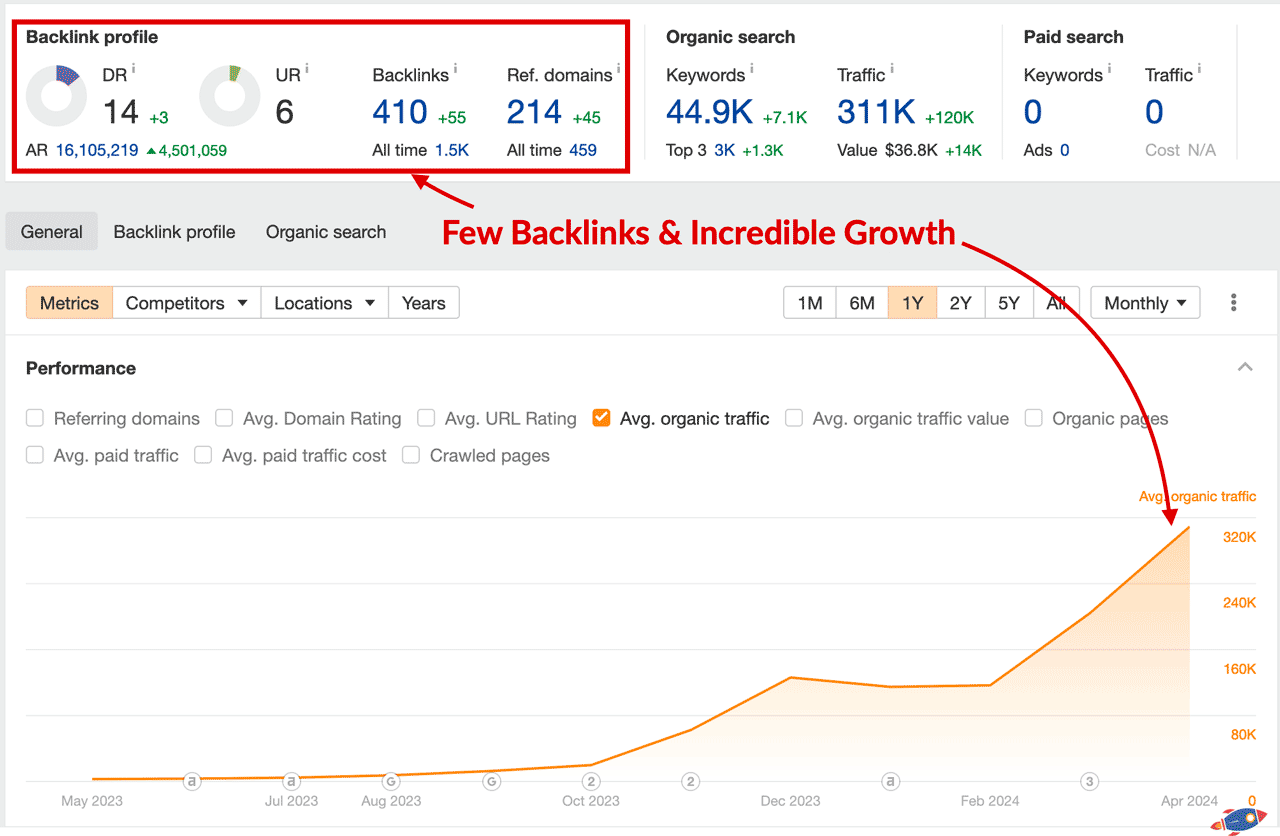

Site #2: Dogster Experiences Incredible Growth

While performing an extensive manual review of countless websites penalized by the Google update, I continued to observe a similar trend: sites with average content consistently lost traffic.

That's why when I discovered a website that defied all the odds and experienced incredible growth, I was intrigued.

Ahrefs Traffic Graph of the Dogster site

Wow!

While webmasters complain about Google heavily favoring larger sites such as Reddit, this site defies the odds by succeeding in spite of the recent algorithm changes.

What kind of magical content are they producing?

"Can Dogs Eat Pineberries?" Dogster article

Don't get me wrong, it's a good page... however it's not that good.

It contains many "trust" elements that help the reader feel safe, such as a badge claiming the article is veterinarian verified. It features good navigation and even adds a custom paw decoration to break up large portions of text.

I would personally rate the text as "adequate" in the sense that it answers a series of basic questions that an owner might have about feeding pineberries to their dog; however, it does not excel in any category.

In fact, quite the opposite!

The entire article could have easily been written by an LLM or a freelancer with no actual experience.

It even ends with a generic "Conclusion" paragraph! (Generic AI loves to create outlines that end with a conclusion. I avoid this at all costs.)

Generic conclusion paragraph in the Dogster article

So if the articles are adequate at best, then what could possibly be driving the incredible growth of the site? Surely it isn't the definitive source of dog information on the internet, is it?

It turns out that Dogster is clever and has something going for it that other, similar dog advice sites don't:

An engaged audience!

Here is just one of their social media properties with 183k followers. They are leveraging Facebook, Instagram and Twitter to drive traffic from social profiles to their website.

Dogster's Facebook Page with 183k Followers

Dog lovers are passionate people and I assume they are coming from social media accounts, browsing the "Dogster" website for a while before ultimately leaving.

In addition, they have an Ecommerce section of the site that likely encourages people to browse multiple products.

While I don't have access to their analytics accounts, I believe it's reasonable to assume visitors are, on average, visiting multiple pages and spending significant time on the site (considerably more than if visitors landed on a page for a simple query and immediately left).

This extra site-wide engagement is likely what is driving the massive growth.

Dogster's Ecommerce Section

In order to eliminate other potential factors, I performed a deep dive on the Dogster site, looking at the external links, internal links, navigation, on-page elements and more under a microscope to determine if there was another explanation for the massive growth.

Even their SEO optimization is adequate at best (just like their articles).

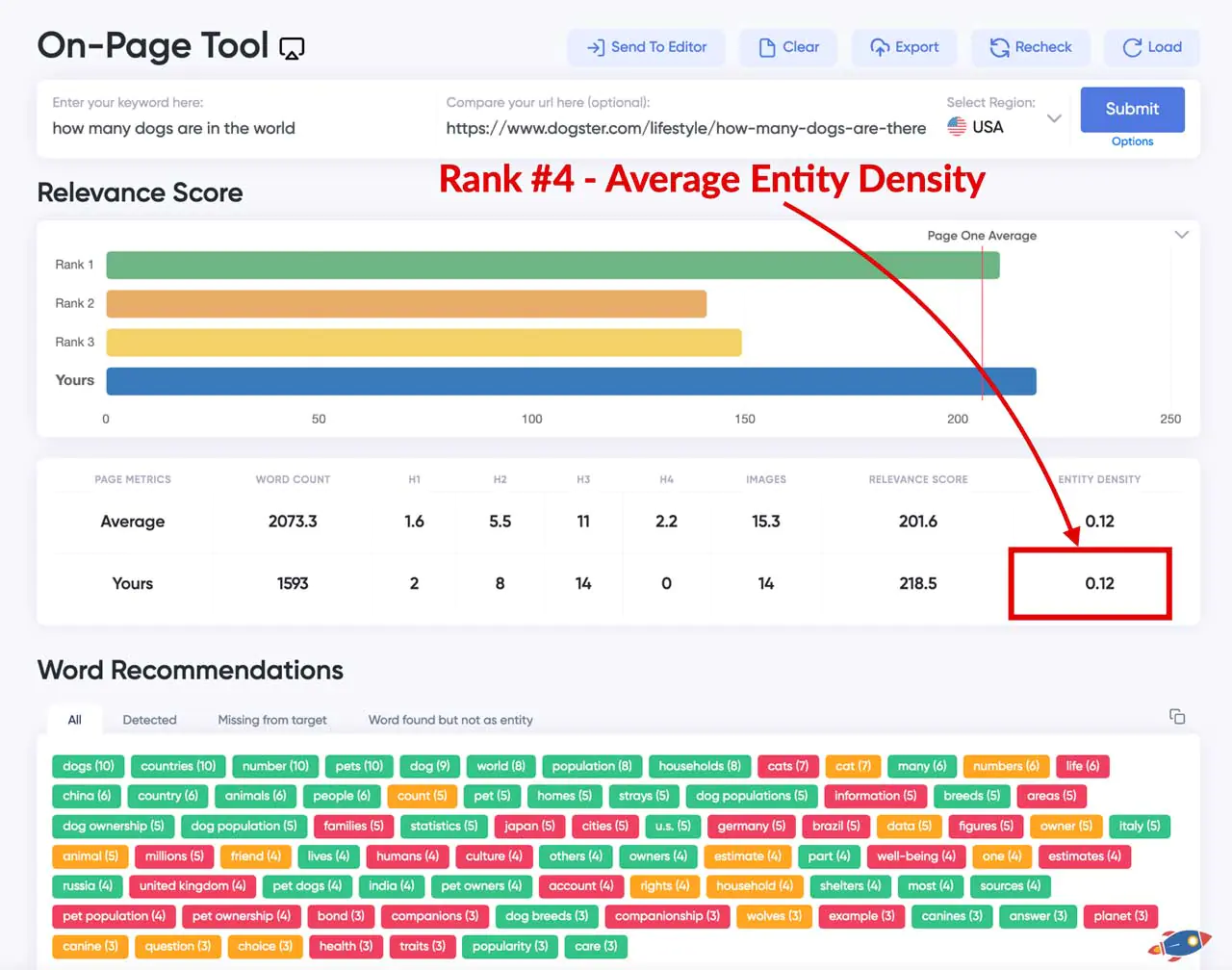

For example, at the time of writing, they are currently ranking in position #4 for the 'how many dogs are in the world' keyword below:

On-Page Analysis of Dogster's "How Many Dogs" Article

They are doing quite well with a high relevance score; however, as we saw in the metrics section of this report, having a higher entity density can be beneficial. The Dogster site has an entity density of 0.12, which matches the average.

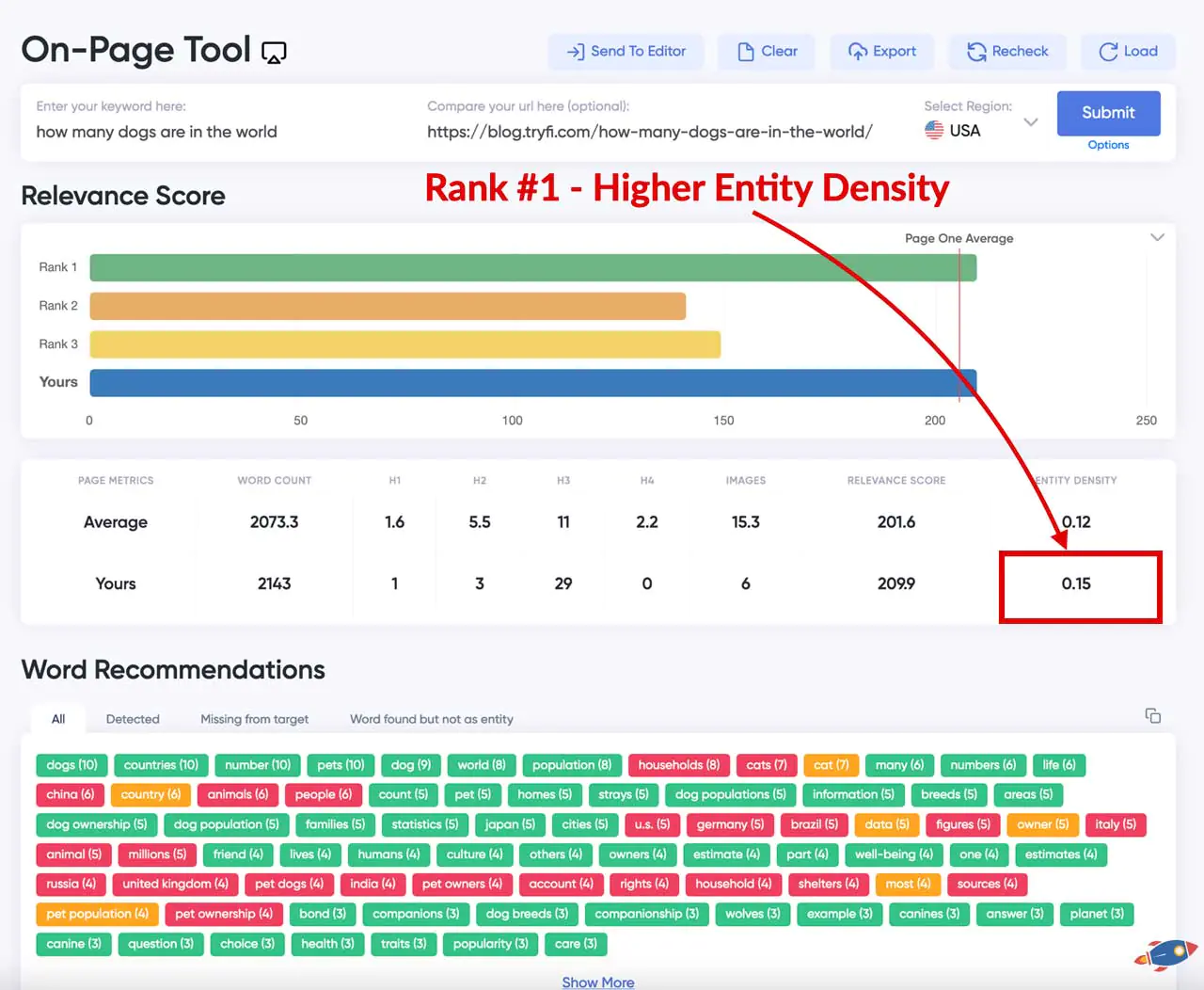

However, the #1 result for the keyword is hosted on a competitor's less powerful domain and is likely ranking by virtue of having a higher entity density (see below).

On-Page Analysis of the #1 Site For "How Many Dogs"

It appears as if once Google determines that your site is trustworthy and has a high rate of engagement, then it will try to locate the content with the most relevant entities.

This means that the Dogster site could likely rank even higher!
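Entity density isn't defined in this section, so here's a rough illustration only. The sketch assumes density means entity mentions divided by total words (an assumption, not a documented formula from On-Page.ai or Google); under that reading, Dogster's 0.12 would be about 12 entity mentions per 100 words. The sample sentence and entity list are hypothetical, and real tools extract entities with NLP models rather than a hand-written set.

```python
import re


def entity_density(text: str, entities: set[str]) -> float:
    """Return entity mentions per word in `text`.

    ASSUMPTION: density = entity mentions / total words. This is a
    hypothetical definition for illustration; it only matches single-word
    entities, case-insensitively.
    """
    words = re.findall(r"[a-z']+", text.lower())
    if not words:
        return 0.0
    targets = {e.lower() for e in entities}
    mentions = sum(1 for word in words if word in targets)
    return mentions / len(words)


# Hypothetical sample text and hand-picked entity list.
sample = "Dogs and cats are common pets. Many dogs live in cities with their owners."
sample_entities = {"dogs", "cats", "pets", "cities", "owners"}
print(round(entity_density(sample, sample_entities), 3))  # → 0.429
```

Under this definition, a competing page beats Dogster on density simply by mentioning more relevant entities per word; whether Google weighs it that way is the report's hypothesis, not something the code shows.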

The Dogster model:

Step 1) Find a passionate crowd of dog lovers on social media. Feed them memes, inspiration posters and tips for their dogs.

Step 2) Funnel the social media traffic to their site and ecommerce store, increasing the overall engagement of the site as visitors browse through it.

Step 3) Produce high quantities of average (but still adequate) dog specific content.

The last little bit that I feel is helping them is the overall appearance of the site. I must give them credit for the quality of their logo (which looks like a magazine logo such as Marie Claire's), which gives the site a more authoritative presence.

Similarly, the use of "Verified by a veterinarian" boxes goes a long way towards convincing visitors (not Google) that the site is authentic.

Now the question is: Would this process work for a smaller site with fewer links?

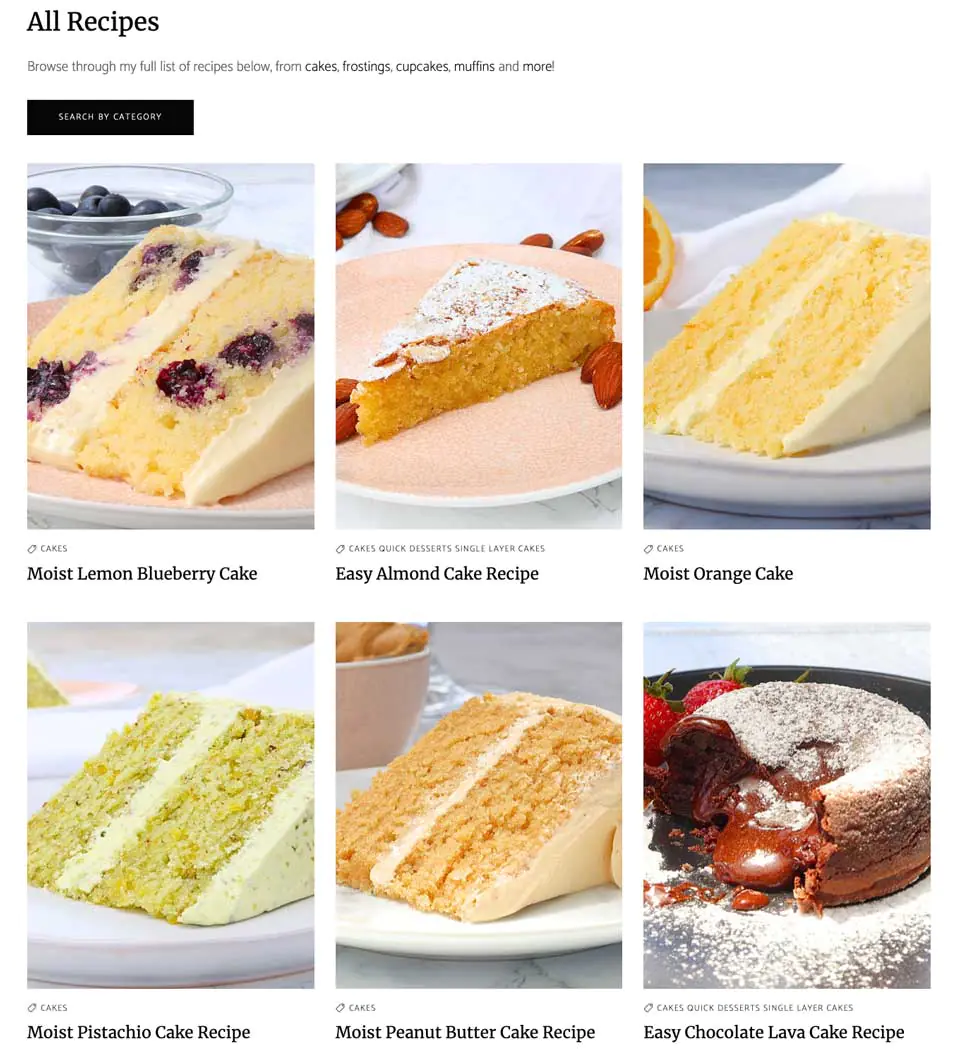

Site #3: CakesByMK's Incredible "Low Backlink" Growth

While going through the winners of the March Core Update, this website stood out as one of the best examples of what to do in order to succeed. It experienced tremendous growth with few backlinks and is one of my favorite sites to model.

Let's explore how they achieved incredible growth in the face of Google's most recent update.

Ahrefs Traffic Graph for CakesbyMK

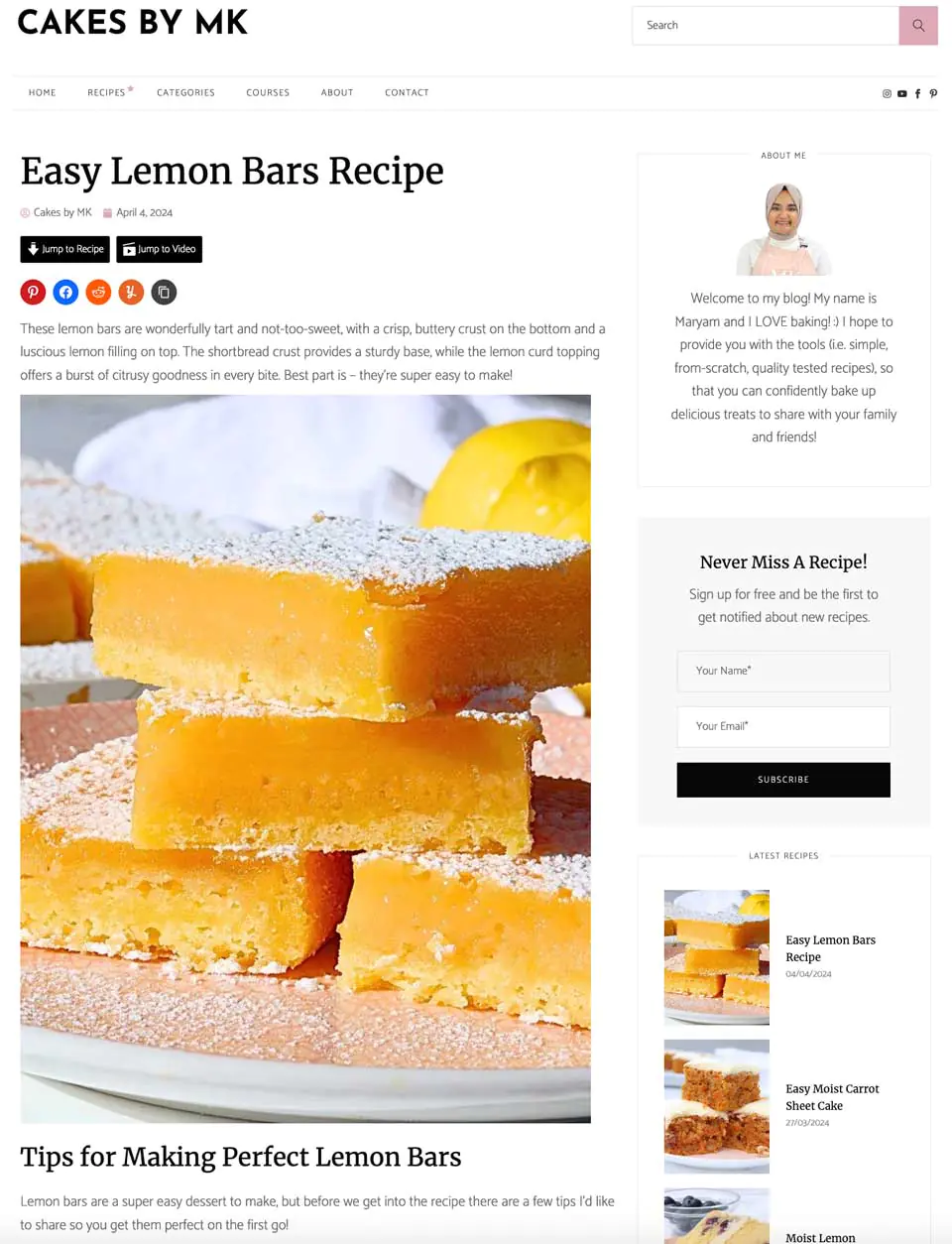

The CakesByMK website is dedicated to cake recipes. Each article is very well crafted, with beautiful images and detailed descriptions, and features a link to a video example of the recipe. Overall, it's a very high quality production; however, from an algorithmic point of view, there's nothing that couldn't be replicated elsewhere.

There are no trust badges (my term for adding elements to a page for the sake of increasing visitor trust), the sidebar isn't very interesting and barring the incredible images, there isn't anything extra special within the text that would make the article stand out. I like this site specifically because it proves that you don't NEED to do anything extraordinary in order to rank.

They don't even have many links!

So what HAVE they done to warrant such growth?

CakesbyMK Article

In my opinion, the site is performing so well because it's built from the ground up as a resource for visitors. Instead of being just a collection of recipes (which traditionally receives a quick visit from Google and then bounces off), the author went above and beyond by creating an entire catalog of recipes to choose from.

The difference is that users are no longer coming in for a quick recipe from Google and then leaving... and instead, they are browsing the site, exploring the recipes, the courses, the shop... everything!

See the category page below:

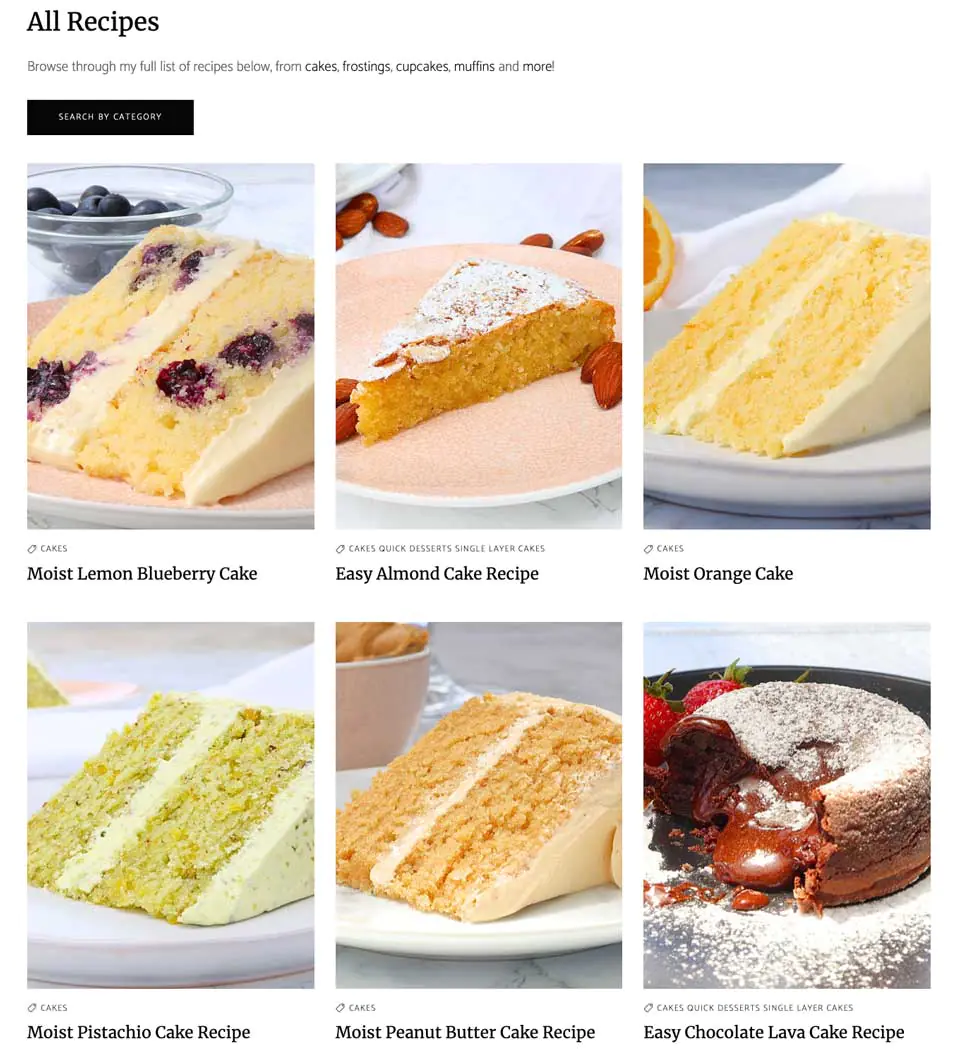

Resource Page From CakesbyMK

This is one of the best category pages that I have ever seen.

It's linked from the top of the header navigation as "Recipes *", with an asterisk to get people clicking. (Great idea, by the way. I have to test putting an asterisk next to one of my menu items to see if it increases click-through rate.)

The category page features mouth-watering images of cakes that likely get visitors clicking.
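If you run the asterisk experiment mentioned above, one common way to judge the result is a two-proportion z-test on click-through rates. Here's a minimal sketch; the click and view counts are made-up numbers, and the normal approximation assumes reasonably large samples.

```python
from math import erf, sqrt


def ctr_z_test(clicks_a: int, views_a: int, clicks_b: int, views_b: int):
    """Two-sided two-proportion z-test comparing the CTR of variant B to A.

    Returns (ctr_a, ctr_b, z, p_value), using the pooled proportion for the
    standard error (normal approximation).
    """
    ctr_a = clicks_a / views_a
    ctr_b = clicks_b / views_b
    pooled = (clicks_a + clicks_b) / (views_a + views_b)
    se = sqrt(pooled * (1 - pooled) * (1 / views_a + 1 / views_b))
    if se == 0:
        # No variation at all (e.g. zero clicks in both variants): no evidence of a difference.
        return ctr_a, ctr_b, 0.0, 1.0
    z = (ctr_b - ctr_a) / se
    # Standard normal CDF: Phi(x) = 0.5 * (1 + erf(x / sqrt(2)))
    p_value = 2 * (1 - 0.5 * (1 + erf(abs(z) / sqrt(2))))
    return ctr_a, ctr_b, z, p_value


# Hypothetical data: menu item without (A) and with (B) the asterisk.
ctr_a, ctr_b, z, p = ctr_z_test(200, 10_000, 260, 10_000)
print(f"A={ctr_a:.2%} B={ctr_b:.2%} z={z:.2f} p={p:.4f}")
```

A p-value below 0.05 is a conventional cutoff, not a guarantee; letting both variants collect comparable traffic over the same period matters just as much.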

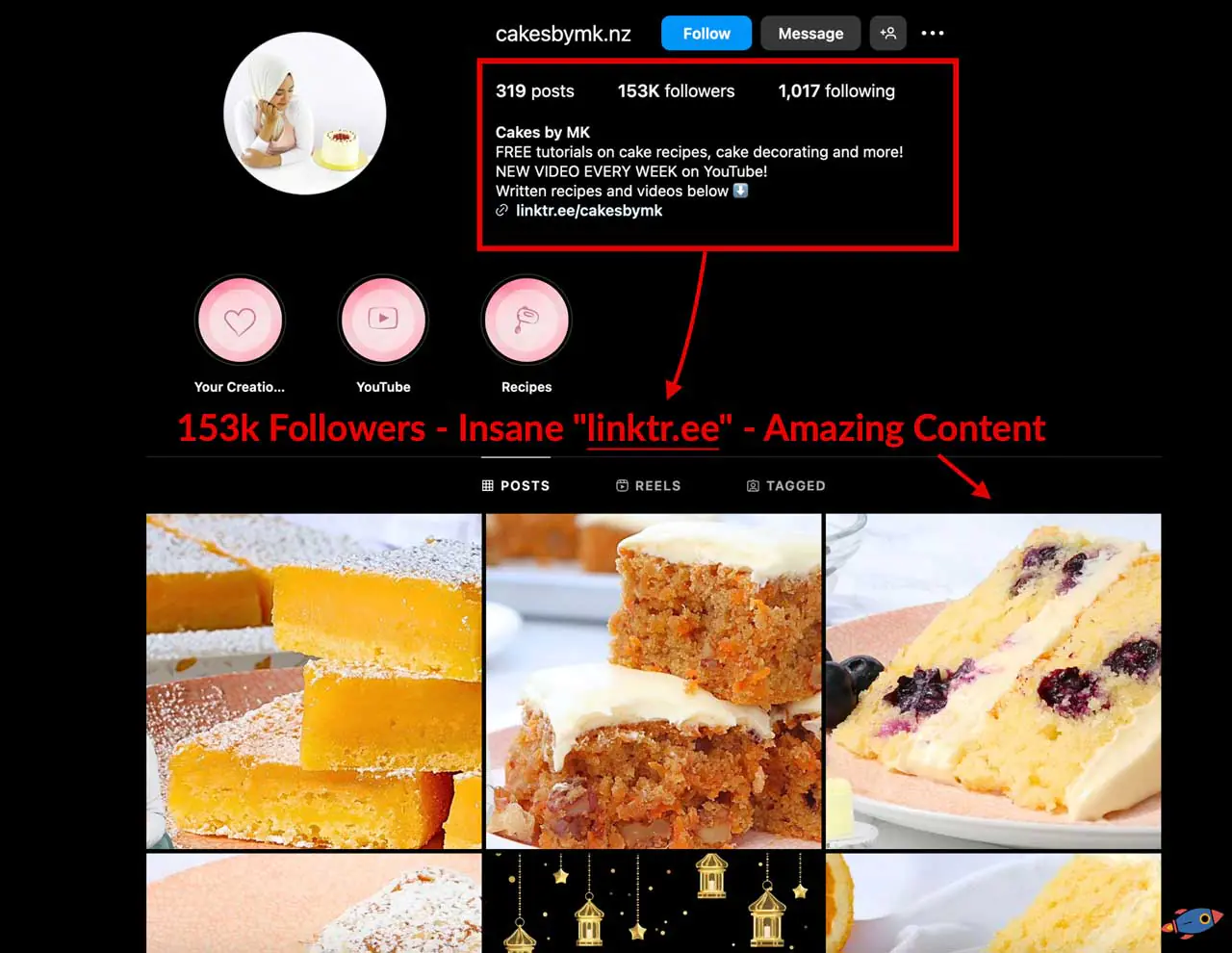

That said, even the best looking pages can sometimes struggle to capture the busy search engine visitor... so instead, CakesbyMK harnesses the power of social media to drive a horde of traffic to the site.

This, in turn, increases the overall site engagement... which then drives up organic Google rankings.

Instagram Account CakesbyMK

CakesbyMK has an impressive 153k followers on Instagram and 454k subscribers on YouTube, which act as the source for all the traffic. Each piece of content published contains links to the website's recipes, homepage, courses, etc.

I estimate that a visitor originating from social media would browse at least 5 pages and spend at least 2 minutes on the site.
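To make the idea concrete, here's a back-of-the-envelope sketch of how a social audience could lift a site-wide average. The 120-second social figure follows the 2-minute estimate above; the 45-second Google-only figure and the 70/30 traffic split are hypothetical assumptions.

```python
def blended_average(segments: list[tuple[float, float]]) -> float:
    """Weighted average of a metric across traffic segments.

    `segments` holds (share_of_visits, metric_value) pairs; shares must sum to 1.
    """
    total_share = sum(share for share, _ in segments)
    if abs(total_share - 1.0) > 1e-9:
        raise ValueError("traffic shares must sum to 1")
    return sum(share * value for share, value in segments)


google_only = blended_average([(1.0, 45)])                # hypothetical 45 s sessions
with_social = blended_average([(0.7, 45), (0.3, 120)])   # 30% of visits from social
print(f"{google_only:.1f} s -> {with_social:.1f} s")      # → 45.0 s -> 67.5 s
```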

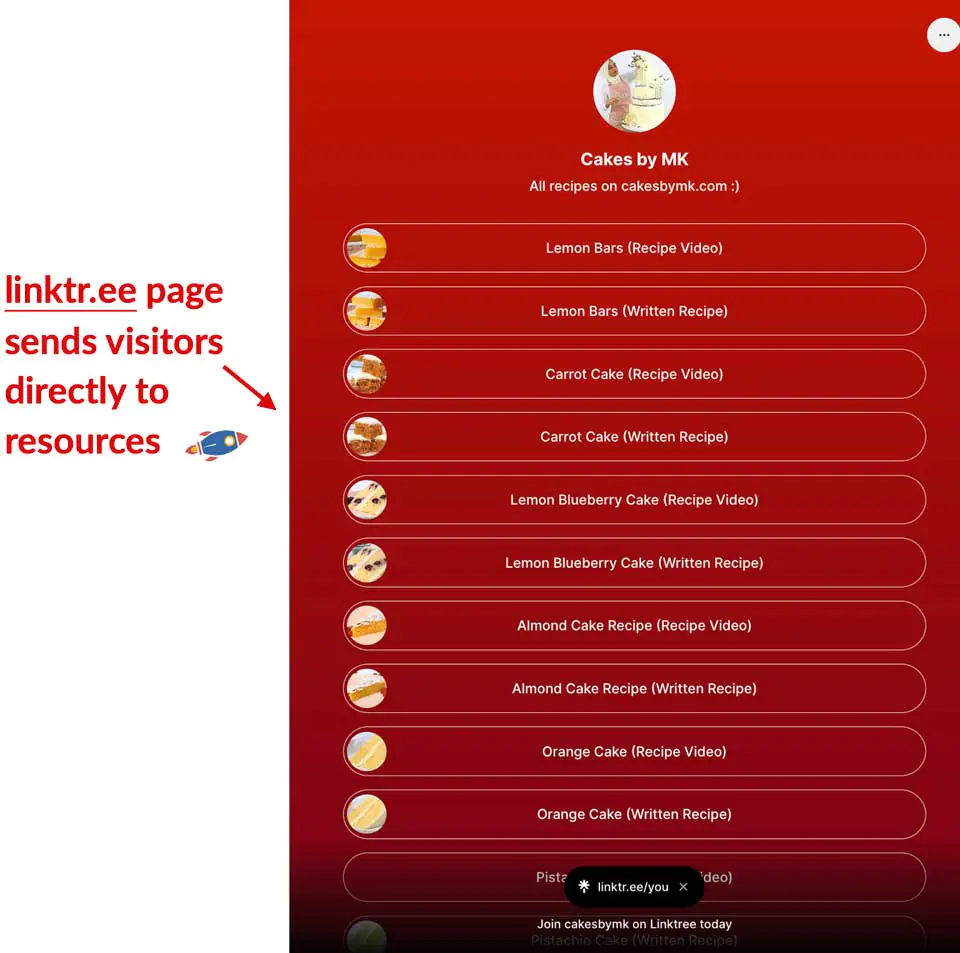

I did discover another trick they use. (This part is pure genius)

Linktr.ee Linked Inside Social Media Pages

Within the Instagram profile page, they have a Linktr.ee link, which is commonly used to list links to various properties. Typically, users will include links to a Facebook page and a YouTube page and call it a day... but not CakesByMK.

Instead, they use this opportunity inside Linktr.ee to create a resource.

The Linktr.ee page above acts as a portal to the content on the site, and I can imagine social media users using it to browse multiple recipes... thus increasing engagement and bringing visitors back to the site.

Using this mechanism, I estimate that their site engagement metrics likely surpass those of nearly every other plain recipe site on the internet, thus driving incredible organic growth with little to no links.

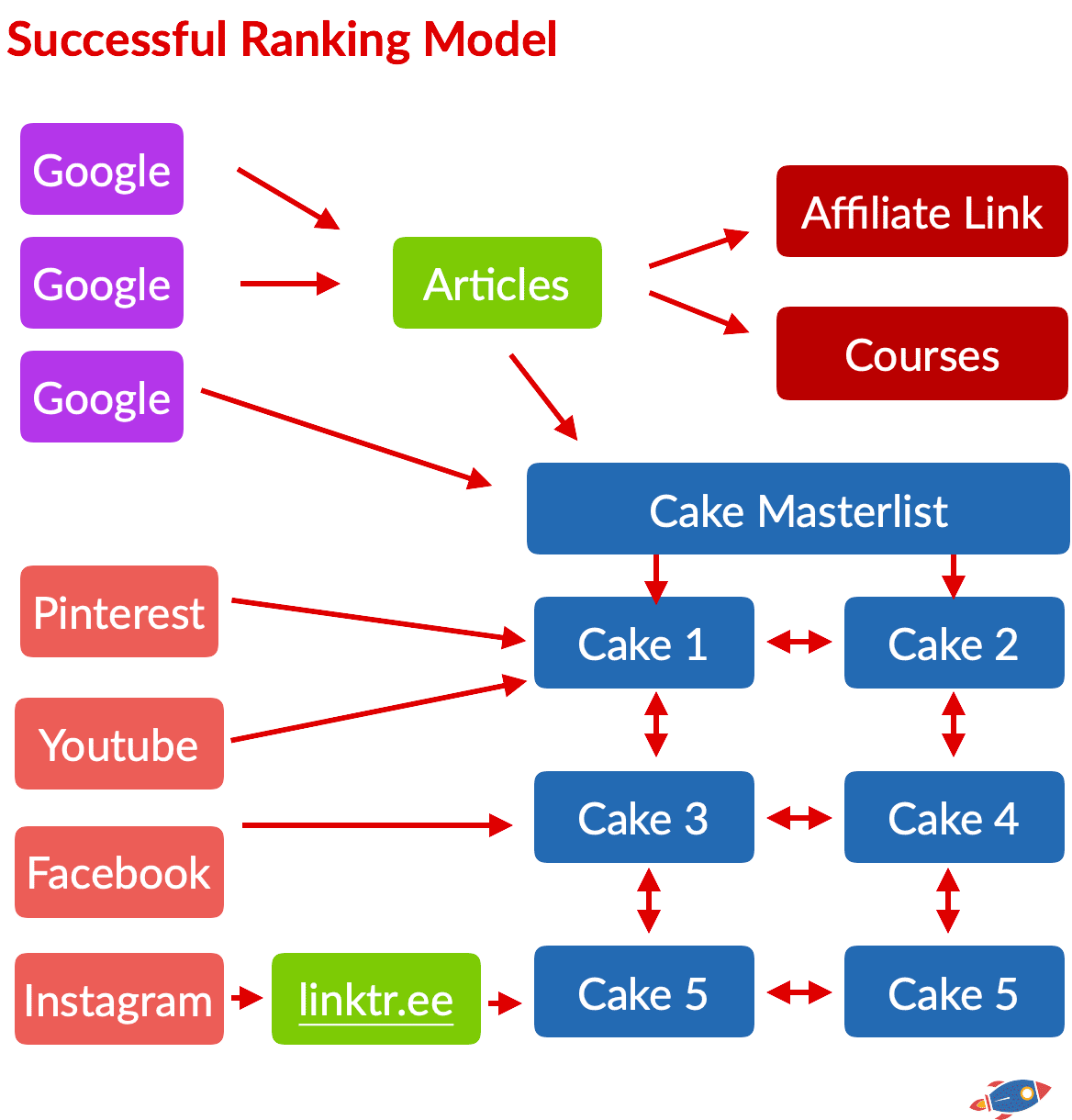

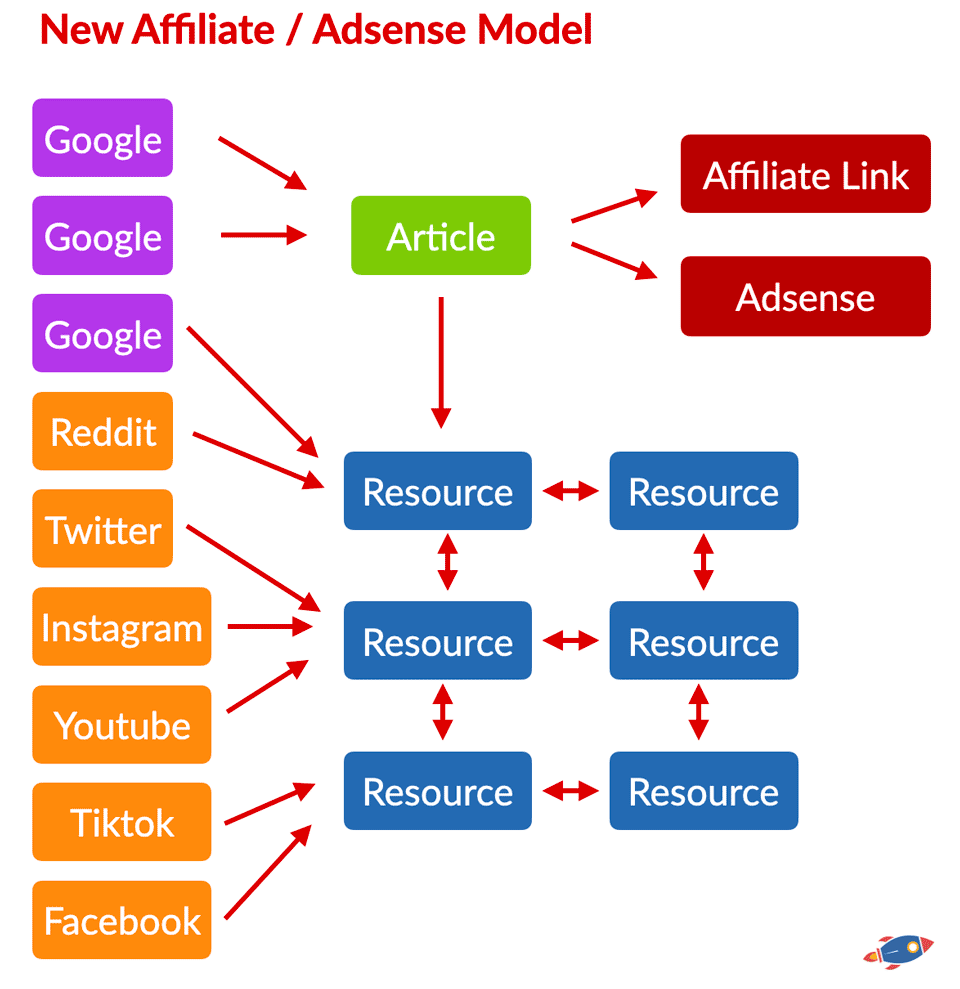

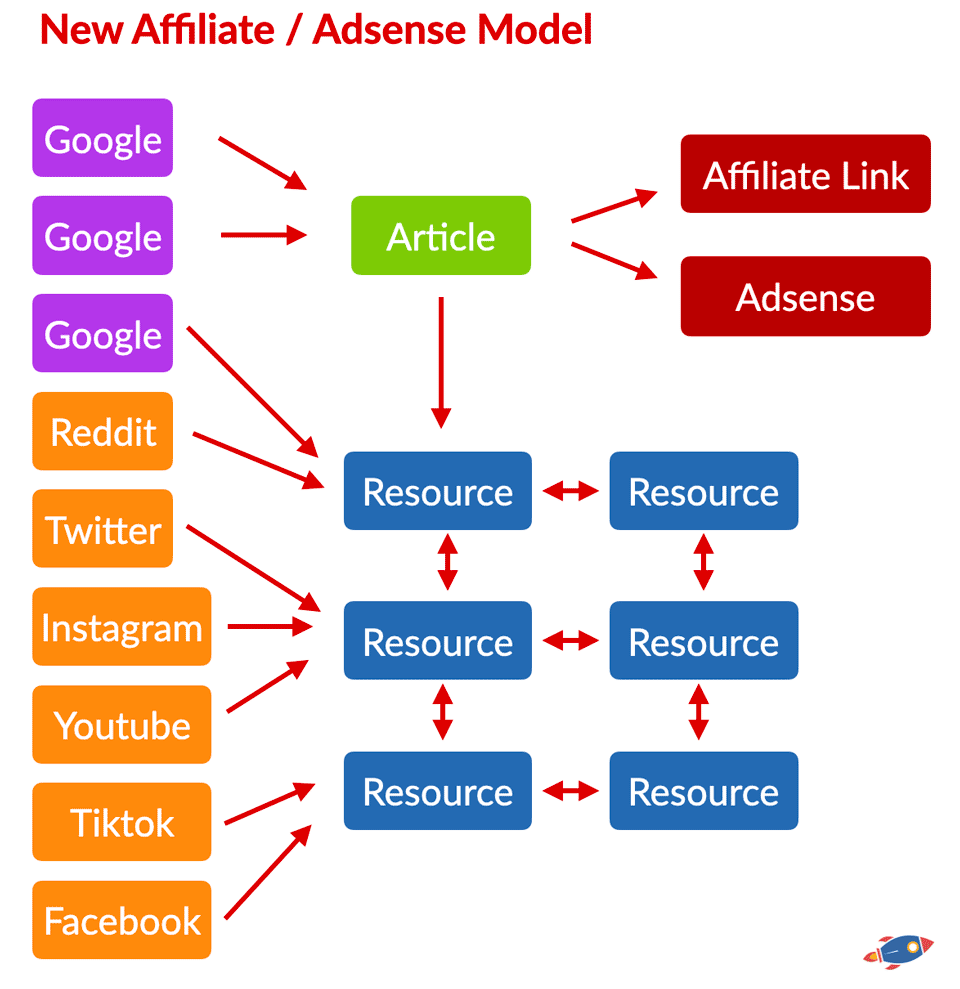

Here is a visual representation of the ranking model used by CakesbyMK:

Here we can clearly see how social media drives traffic to the "resource section" of the site (depicted in blue), which then increases the overall site user metrics. Once the site reaches a certain engagement level, Google begins to drive traffic to all the articles on the site.

Down below is a simplified explanation of the ranking mechanism:

Step 1) Social media drives traffic to a resource section on the site.

Step 2) Google notices this through Chrome and Android data.

Step 3) Google rewards the site with organic rankings and traffic.

Step 4) The website is free to monetize the articles with money-making links.

I'm confident that this is quite a lucrative business model... and best of all, I believe anyone can replicate it.

9. Analysis and Trends

(Here's what keeps on coming back)

After reviewing the accumulated metric data (average word count, sub-headline breakdown, entities, etc.) and several dozen websites through manual review, some trends began to emerge.

Here are some of the observed trends with regards to the recent search landscape:

1. AI content is everywhere. Both good and bad sites have AI content.

2. AI content does not seem to have been impacted and still ranks just as well as before (as long as the site is seen as favorable). That said, sites using low quality, generic AI content were more prone to drops. Sites that employed highly engaging content (created by AI or by humans) seemed to perform better.

3. Mentions of specific expertise within the page do not seem necessary. Include them IF you believe this will help your readers feel as if they can trust your content.

4. It appears as if having good articles might not be enough if you're relying entirely on Google visitors. Some affiliate sites had decent content and were still penalized.

5. Affiliate websites seem to have been impacted the most.

6. Local websites have seen a minimal / negligible impact from the update.

7. Ecommerce websites have not seen a major impact from the update.

8. Resource sites do not seem to have been impacted by the update.

9. Sites with a large social media following thrived during the update.

10. Links (both external and internal) did not seem to influence whether a website was affected or not. Some penalized sites had hundreds of thousands of links while others with very few links thrived. Links do matter... however, they don't seem to protect you from the wrath of the March core update.

11. It appears as if, once the website is deemed trustworthy, Google falls back to favoring entity density as a primary ranking factor.

While these are the major trends observed across hundreds of pages, I must restate that correlation does not imply causation. These are just observations across many pages.

10. What I Believe Happened

Main Theory #1: Overall Site Engagement

The most frustrating part of the March Core update is that I know many webmasters (and SEO agencies) that HAVE diligently been working towards improving their pages and they are still experiencing traffic drops. If that applies to you, I hear you and I'm writing this specifically to help you.

I feel as if webmasters and SEO professionals have been misled by Google. For decades, Google has said: "Create helpful content" and you'll rank just fine. What they failed to mention is what kind of helpful content.

Ultimately, I believe you can have a series of good pages and STILL be penalized by the March Core Update.

Allow me to explain:

1. I believe the new March Core Update introduces or modifies a site wide score. This impacts all pages on a site. For example, if you were to score a hypothetical 20/100, you could see all your pages drop by a significant amount.

2. The score attempts to measure some sort of user experience/satisfaction/accomplishment of the overall site. For example, sites that have many direct searches and long-lasting sessions with multiple page views (such as Reddit.com and LinkedIn.com) have been doing spectacularly well post-update. In addition, many Ecommerce sites, tool/services sites and community sites (forums, discussion sites, social sites) have performed well after the update.

Pure affiliate sites seem to be unequivocally affected by the update, even when they have decent content.

In short, the type of site you operate seemed to be correlated with the likelihood of being negatively impacted by the update... however, I do NOT think that Google is specifically looking at the type of website in order to penalize it. (For example, adding an "Add to Cart" button to your website won't make you magically recover.)

Instead, it's just that some TYPES of websites and some TRAFFIC SOURCES naturally lead to worse engagement... even if you are creating high quality content.

Why you are being penalized even with "good" content

We've seen numerous reports of webmasters with good, authentic content being penalized by the March Core in spite of doing all the "right things".

Previously, if you wrote about "how long does a chicken take to cook" and you provided an accurate, well explained answer, you would stand a chance of ranking without any issues on Google.

However, even in the BEST case scenario, with a perfect page, your readers would still likely land on the page, locate the information and then immediately leave as you satisfied the user intent.

Great, right? It's all about satisfying the user intent...

Right?

Previously, I would have agreed.

However it appears as if now, even if you DO satisfy the user intent, presenting an accurate answer on the page that is helpful to readers, that might not be enough.

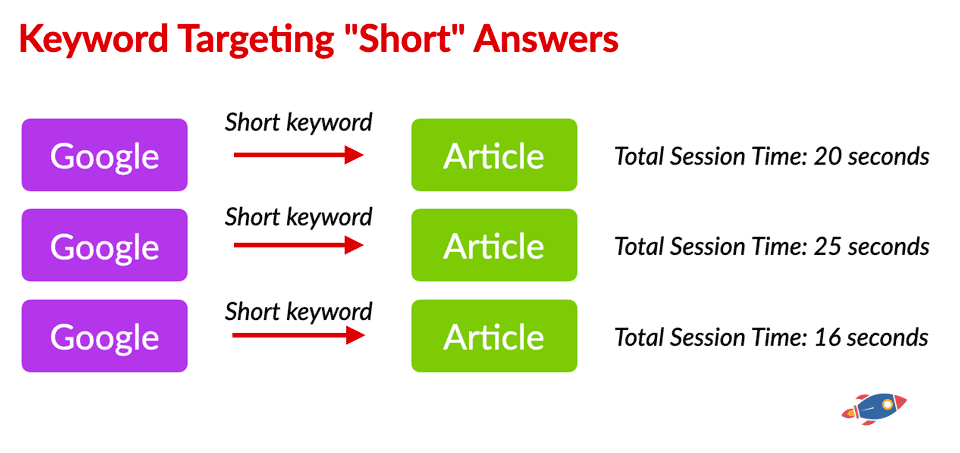

My explanation for this phenomenon is that certain keywords and traffic sources naturally lead to very short and brief sessions.

This means that if you look up a list of long tail keywords with short answers (I'll call them 'short' keywords for the sake of this report) and create content targeting those keywords, then even in the best case scenario, your overall site metric score won't be good enough to compete with different types of websites.

This might be why Google is saying that you should NOT target keywords.

Targeting 'short keywords' and relying exclusively on Google organic traffic will always result in 'short sessions'.

I believe you might be penalized not because of the QUALITY of the content you're producing, but because of the TYPE of content you're primarily producing.

And in order to have a chance of ranking for 'short keywords', you must first build up your site metric score by ranking for keywords that lead to long sessions, recurring visitors and engaged users.

"Short Keywords" & "Low Engagement" Traffic Sources:

If you only target short keywords and receive visitors from Google that find an answer and immediately bounce away, your website metrics will be poor.

Let's draw up a fictional scenario of a pure affiliate site targeting long tail "short" keywords with quick answers.

Keywords:

"how to cook a turkey in a pressure cooker"

"how to mix beans and rice"

"what is the ideal temperature to cook a chicken"

"best air fryers for making french fries"

And now let's assume that you are answering every query properly, providing a good answer based on years of personal experience. How long would someone stay on the site if they came from a Google search? Would they return to the site?

Let's estimate the total session time of a successful search.

Ranking #1 On Google For The Keywords:

"how to cook a turkey in a pressure cooker":

The reader is likely looking for how long to pressure cook a turkey inside a pressure cooker and might be curious about the ingredients you can throw into a pressure cooker. They would likely skim the article to find the pertinent pieces of information and stay for a maximum of 45 seconds. (And then they would leave to make food!)

"how to mix beans and rice":

The reader is likely looking for images or a quick tip on when to mix beans and rice during the cooking process. They are likely to stay for a maximum of 15 seconds after locating the information.

"what is the ideal temperature to cook a chicken":

The reader clearly wants a single piece of information... they likely already saw the answer in the search snippet and might be clicking the article just to confirm. Maximum 10-second session as they find the information.

"best air fryers for making french fries"

The reader is looking for information on which air fryer to purchase. A well-crafted page might retain SOME readers for 2+ minutes; however, the majority (the average reader with a low attention span) will likely scan the page, click an air fryer link that leads to Amazon and leave the site within 20-30 seconds.
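Averaging those four estimates gives a sense of the ceiling. This sketch weights each keyword equally (an assumption; real traffic would be uneven) and uses 25 seconds, the midpoint of the 20-30 second estimate, for the air fryer query.

```python
from statistics import mean

# Estimated session lengths (seconds) from the scenario above.
# The air fryer value is the midpoint of the 20-30 s estimate (assumption).
estimated_sessions = {
    "how to cook a turkey in a pressure cooker": 45,
    "how to mix beans and rice": 15,
    "what is the ideal temperature to cook a chicken": 10,
    "best air fryers for making french fries": 25,
}

avg = mean(estimated_sessions.values())
print(f"Average session: {avg:.2f} s")  # → Average session: 23.75 s
```

Even in this best case, the average visit lasts under half a minute.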

With this in mind, in the BEST case scenario, even with high quality information answering and satisfying every user query, your total site metrics will seem poor relative to different types of sites.

And it isn't your fault.

It's just that you've been misled by Google claiming you only need to produce high quality content... without telling you that the TYPE of content matters.

For example, if you ONLY target "short keywords" that provide a quick specific answer with Google traffic:

You'll end up with a site that has high quality content... but still has 'bad' metrics.

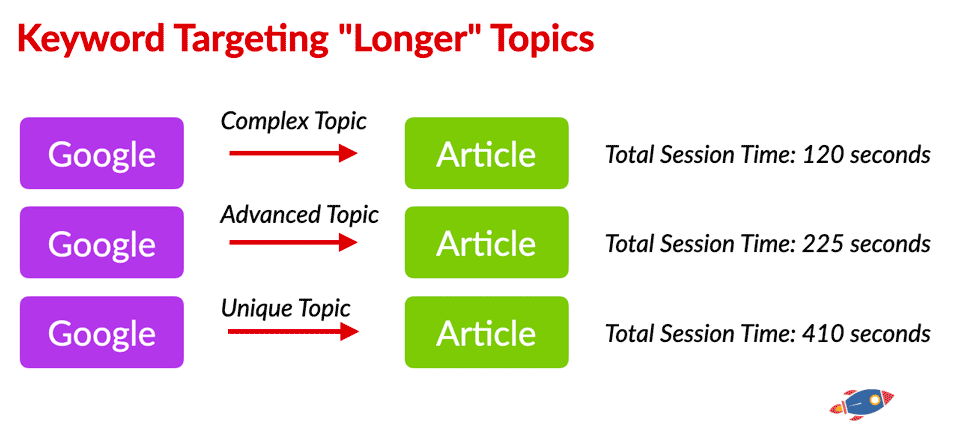

I believe that one of the first steps towards recovering is to change the targeting of the site, moving towards longer, more complex topics.

Writing about topics that require a reader to stay on a page longer will naturally lead to improved site engagement, even if your content quality is exactly the same.

For example, if you create an article that covers: "The Top 25 Celebrity Living Rooms - Don't miss Beyonce's pad"

Even if a reader only skimmed through the pictures of the different living rooms, spending a mere 5 seconds per image, they would spend over 2 minutes on the page.

Insert clever internal links within the article to get readers reading another follow-up article... and you're well on your way to session times of over 3-4 minutes, just by changing the keyword targeting.
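The arithmetic behind that example is easy to check. The 90-second follow-up read below is a hypothetical figure, chosen only to show how one internal link can push a session into the 3-4 minute range.

```python
def skim_session_seconds(images: int, seconds_per_image: float,
                         follow_up_seconds: float = 0.0) -> float:
    """Estimated session length for a visitor who skims an image list and
    optionally reads one internally linked follow-up article."""
    return images * seconds_per_image + follow_up_seconds


page_only = skim_session_seconds(25, 5)            # 125 s, just over 2 minutes
with_follow_up = skim_session_seconds(25, 5, 90)   # 215 s, hypothetical follow-up read
print(f"{page_only / 60:.1f} min, {with_follow_up / 60:.1f} min")  # → 2.1 min, 3.6 min
```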


How Google Is Likely Comparing Sites

As we just covered, even while doing everything 'right', the maximum average session time of a site targeting short keywords with Google traffic might only be 45 seconds. If all of your competition also had 45-second session times, that might be OK... however, Google isn't only comparing you to other affiliate sites.

Imagine a typical affiliate site having the following metrics:

- 45 second average session time

- 1.3 average page views per user

- 86% bounce rate

All while having expertise, good content and satisfying the user intent.

Comparatively, sites that cover the same keywords could have:

1. Reddit = Average 12 minute sessions

2. LinkedIn = Average 5 minute sessions

3. Forbes = Average 4 minute sessions

...

4. Your Affiliate Site = 0.75 Minute Sessions

I believe that Google's March Core algorithm introduces a new comparison metric that doesn't only compare page against page but instead compares site-and-page against site-and-page.

If Google has a series of very similar results, with all the same on-page relevance:

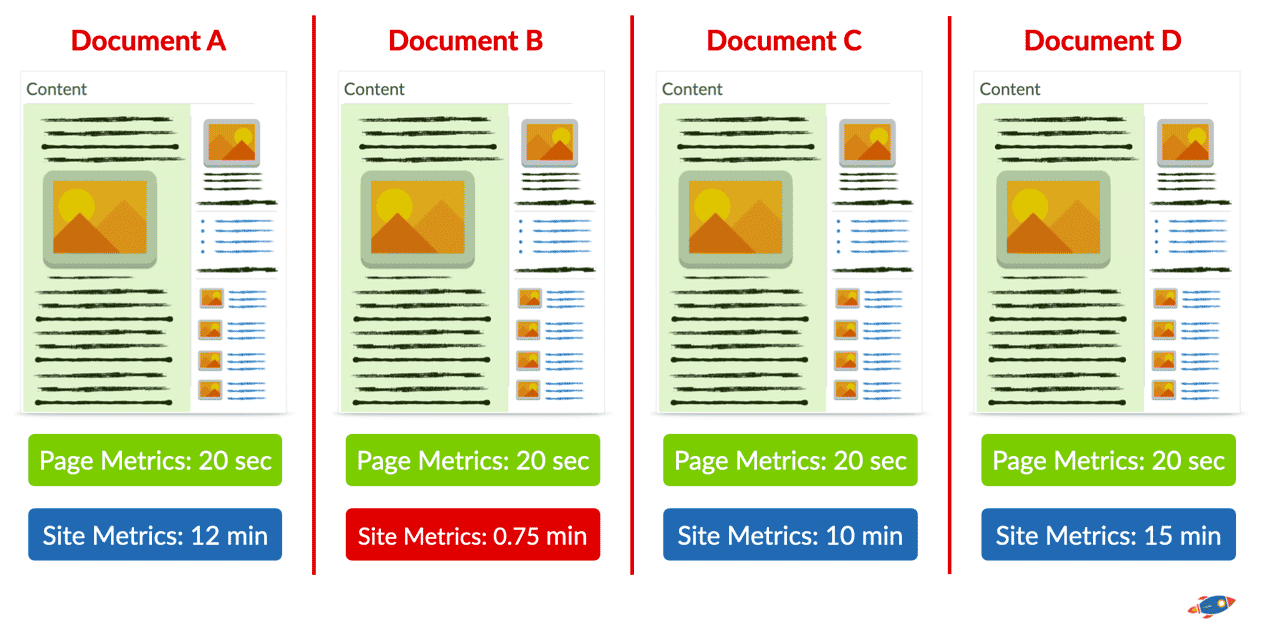

[Document A] = [Page Time 20 seconds] = [Average Site Time = 12 minutes]

[Document B] = [Page Time 20 seconds] = [Average Site Time = 0.75 minutes]

[Document C] = [Page Time 20 seconds] = [Average Site Time = 10 minutes]

[Document D] = [Page Time 20 seconds] = [Average Site Time = 15 minutes]

Google would likely say that Document B is not to be trusted EVEN if the individual page time is similar to the other pages. Because the average site time of the site hosting Document B is considerably shorter (0.75 minutes) than that of all the other sites, Document B could stand out as suspicious.

From Google's perspective, it's safer to only serve documents published on sites already trusted by users.
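One way to picture the Document B comparison is a simple outlier rule: flag any result whose host site's average session time falls far below the median of the result set. This is a hypothetical illustration of the theory, not a known Google rule. The 0.25 cutoff is arbitrary, and page times are left out because they're identical (20 seconds) in the example.

```python
from statistics import median


def flag_suspicious(site_minutes: dict[str, float], ratio: float = 0.25) -> list[str]:
    """Return documents whose host site's average session time is below
    `ratio` times the median across all results (hypothetical rule)."""
    mid = median(site_minutes.values())
    return [doc for doc, minutes in site_minutes.items() if minutes < ratio * mid]


# Average site time (minutes) for the host of each document in the example.
results = {"A": 12, "B": 0.75, "C": 10, "D": 15}
print(flag_suspicious(results))  # → ['B']
```

Using the median rather than the mean keeps one very engaged site (like a 15-minute outlier) from dragging the baseline upward.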

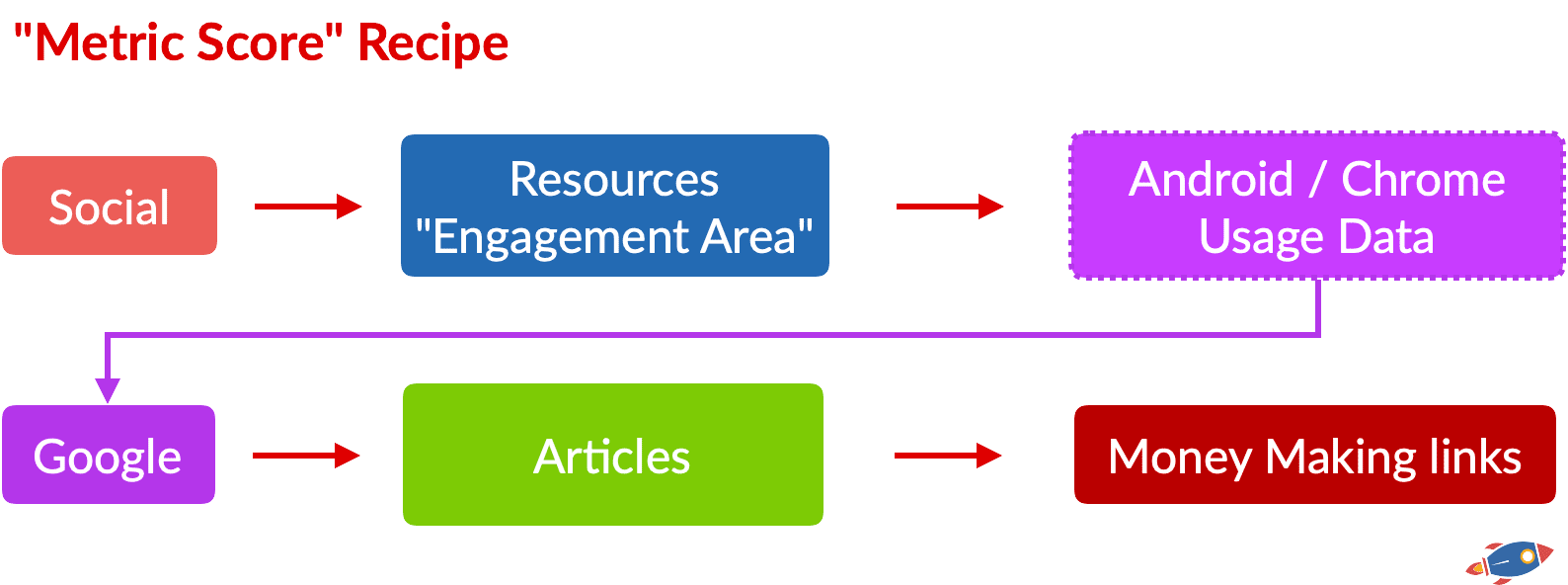

Google's New Metric Score

I have been using average session time for demonstrative purposes due to its simplicity (it makes the example above relatively easy to understand).

However, I do NOT believe that Google exclusively uses session time to rank websites.

Instead, Google likely uses a combination of metrics that results in a "metric score" for a website. This is a key distinction, as some industries, languages and device types might have varying session times, and a single unit of measurement would not provide an accurate depiction of what's happening on the internet.

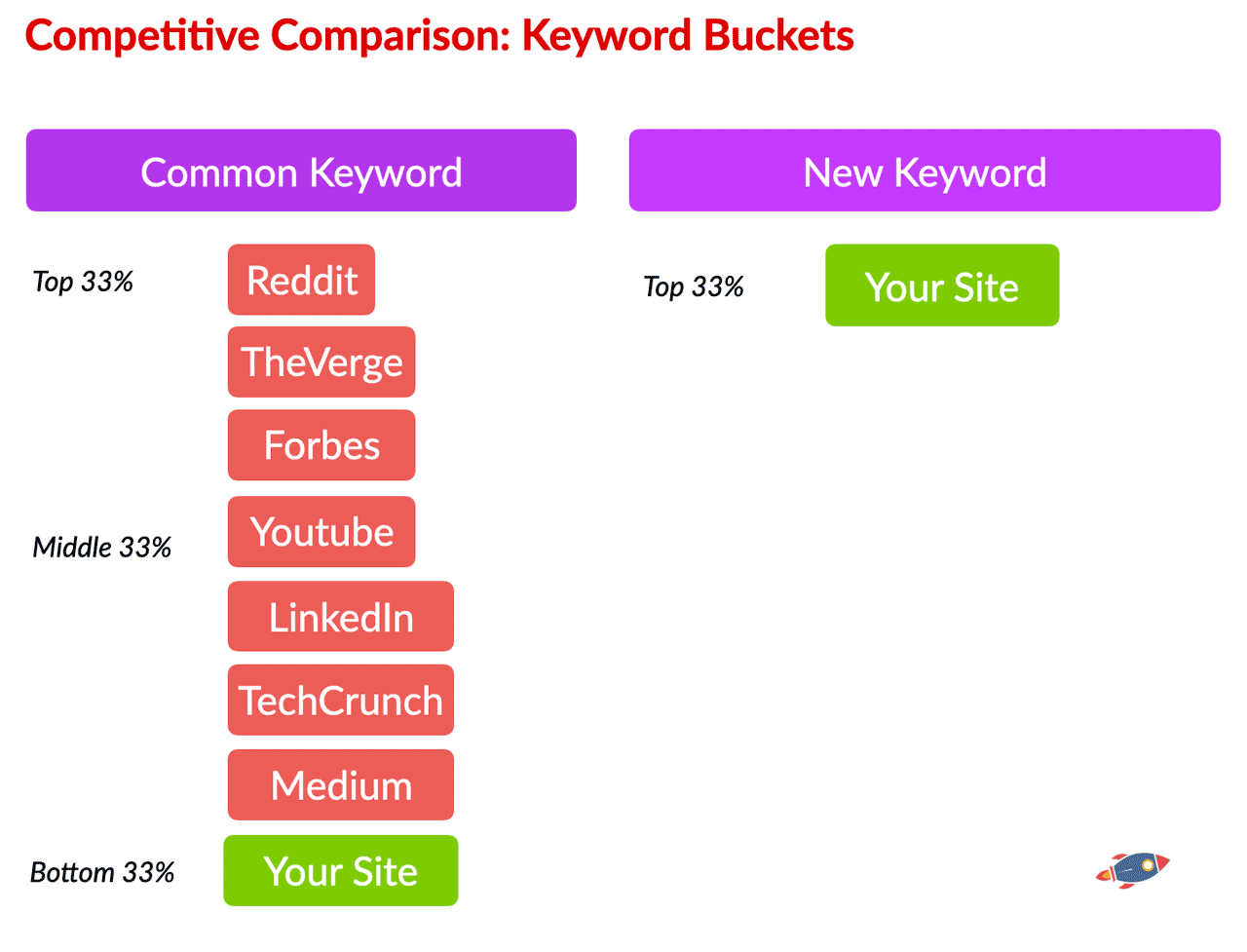

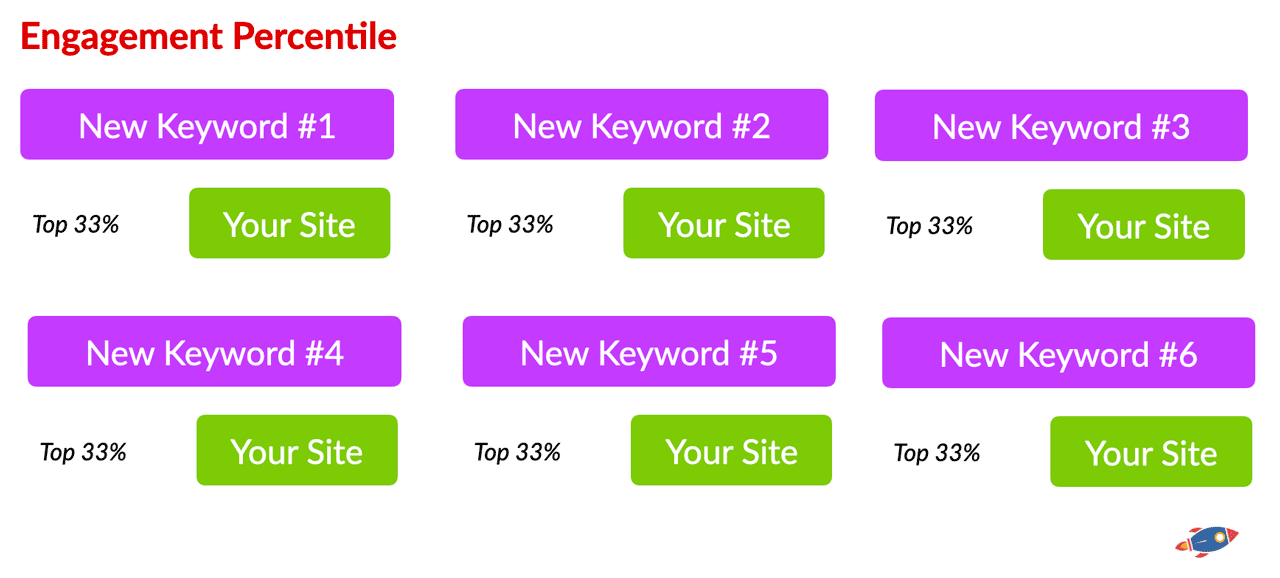

Instead, IF Google operates in a similar fashion to Core Web Vitals, then the metric score could be a combination of measurements that result in a rank percentile when compared to other sites competing for the same keyword.

Google likely compares the performance percentile within the same industry (keywords).

For example, if you have a list of sites competing for the same keyword:

"how to choose a fishing rod for beginners"

Google returns 67,900,000 potential pages competing for that same result.

And for that term, Google will measure the metrics, comparing the page AND sites that host content for this keyword.

Rank 1 = [Document A] = [Page Time 120 seconds] = [Average Site Time = 12 minutes]

Rank 2 = [Document B] = [Page Time 120 seconds] = [Average Site Time = 11 minutes]

Rank 3 = [Document C] = [Page Time 119 seconds] = [Average Site Time = 10 minutes]

...

Rank 67,900,000 = [Document D] = [Page Time 1 second] = [Average Site Time = 0.3 minutes]

Writing for that keyword ultimately places you in a certain ranking percentile such as within the top 10% best metric scores if you're document A or B...

Or within the bottom 1% if you're document D.

Each page likely receives a metric score, and the combination of all metric scores from the pages on the website likely culminates in a final metric score for the website. (And this final metric score is what dictates how well your site will perform online.)

If you consistently find yourself within the lower percentile when compared to other sites within the keyword bucket, then your website will likely receive a lower site metric score which will prevent you from ranking.

This is why many local sites, which only compete against other local sites, have seen little fluctuation with the recent March Core update... while sites that compete on a larger scale run up against sites like Reddit that have incredible engagement.
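The percentile idea can be sketched in a few lines. Everything here is an assumption layered on the theory: each document gets one metric value (host-site average session minutes, borrowed from the fishing rod example), its percentile is the share of competing documents in the same keyword bucket that it beats, and a site's score is the plain average of its pages' percentiles. The second bucket is a hypothetical keyword with a single competitor.

```python
from collections import defaultdict
from statistics import mean


def percentile_ranks(bucket: dict[str, float]) -> dict[str, float]:
    """Percentile rank (0-100) of each document within one keyword bucket:
    the share of other documents in the bucket with a strictly lower metric.
    A document with no competitors gets 100 by convention."""
    n = len(bucket)
    ranks = {}
    for doc, value in bucket.items():
        if n == 1:
            ranks[doc] = 100.0
            continue
        beaten = sum(1 for other, v in bucket.items() if other != doc and v < value)
        ranks[doc] = 100.0 * beaten / (n - 1)
    return ranks


def site_scores(buckets: dict[str, dict[str, float]],
                doc_site: dict[str, str]) -> dict[str, float]:
    """Average each site's page percentiles across every keyword bucket."""
    per_site = defaultdict(list)
    for bucket in buckets.values():
        for doc, pct in percentile_ranks(bucket).items():
            per_site[doc_site[doc]].append(pct)
    return {site: mean(pcts) for site, pcts in per_site.items()}


buckets = {
    "how to choose a fishing rod for beginners": {"A": 12, "B": 11, "C": 10, "D": 0.3},
    "hypothetical new keyword": {"D2": 4},
}
doc_site = {"A": "site-a", "B": "site-b", "C": "site-c", "D": "site-d", "D2": "site-d"}
print(site_scores(buckets, doc_site))
```

Under this convention, a page that is alone in its bucket lands at the top by default, which is the same intuition behind targeting brand-new keywords.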

Before we dive into potential recovery steps, let's address the final change that Google might have made during the March Core update regarding links.

11. Link Update

(Links + Metrics)

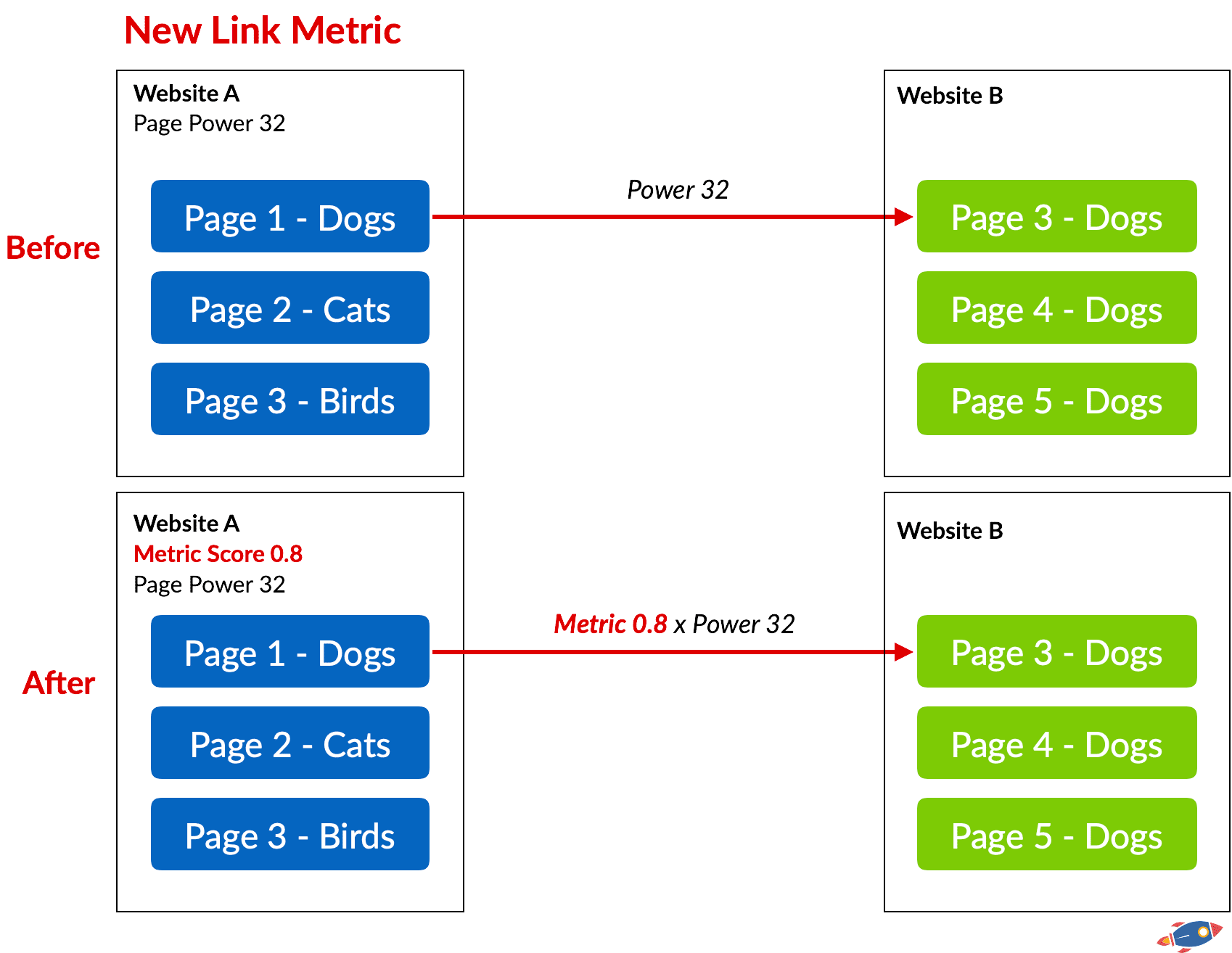

In an earlier section, we verified links originating from paid link sites and found no conclusive evidence that Google took action. So what did Google change with respect to links?

Possibly the algorithm itself.

As Google has been heavily focusing on overall site engagement recently, it isn't difficult to imagine that Google could add a metric component to links.

In the simplest terms possible, if a link is coming from a site with poor metrics, then it shouldn't be worth as much.

For example, if Google currently calculates link value based on power, topical relevance, trust and anchor text relevance, then it could easily add the website metric score as another variable.

Hypothetical example with fictional variables:

(Please note that this is simplified for illustrative purposes only. Google won't use the same terminology and the link equation is likely much more complicated.)

Previously, a link algorithm might have looked like this:

[PageRank] x [Topical relevance] x [Trust score] x [Anchor text relevance] = Final Link Score

After this latest update, Google could very well integrate website metric score into the equation, increasing the impact of user behavior on links and overall rankings.

[PageRank] x [Topical relevance] x [Trust score] x [Anchor text relevance] x [Metric Score] = Final Link Score

The consequence of this change is that sites that have minimal user interaction would pass less link equity and therefore, affect rankings less. This could help reduce the impact of PBNs and/or sites built for the sole purpose of linking.
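Here's the same hypothetical formula written as a function, to show that consequence numerically. The factor names mirror the illustration; treating each as a number in [0, 1] and the sample values are assumptions.

```python
from math import prod
from typing import Optional


def link_score(pagerank: float, topical_relevance: float, trust: float,
               anchor_relevance: float, metric_score: Optional[float] = None) -> float:
    """Hypothetical multiplicative link score (illustration only).

    Omitting `metric_score` models the "previous" formula; passing it models
    the speculated post-update formula. Inputs are assumed to lie in [0, 1].
    """
    factors = [pagerank, topical_relevance, trust, anchor_relevance]
    if metric_score is not None:
        factors.append(metric_score)
    return prod(factors)


# Identical classic signals; only the linking site's engagement differs.
engaged_site_link = link_score(0.6, 0.9, 0.8, 0.7, metric_score=0.9)
low_engagement_link = link_score(0.6, 0.9, 0.8, 0.7, metric_score=0.1)
print(round(engaged_site_link, 4), round(low_engagement_link, 4))  # → 0.2722 0.0302
```

Because the terms multiply, a near-zero metric score shrinks a link's value no matter how strong its other signals are, which is the PBN effect this theory predicts.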

If this is a change that Google has implemented, then it would be of the utmost importance to receive links from active, engaging websites. This would, in turn, increase the weight of links originating from social sites such as Reddit, LinkedIn, forums, ecommerce stores and even potentially press release sites.

This might explain, in part, why sites receiving so many social links have seen an increase in traffic.

Unfortunately, at the time of writing, we haven't had a chance to revisit our tests on the impact of social links to a website. (So take this information with a grain of salt.)

We plan on A/B testing the impacts of social links in the near future.

12. How To Recover From The Google March 2024 Core Update

(Tentative Step by Step Instructions)

Until I have repeatedly recovered websites impacted by the March Core Update, I can only tell you what I would personally do if I had to recover my own website. This plan aims to cover all my theories.

Proceed at your own risk. I have no control over your site or Google, you are 100% responsible for any changes you make to your site.

Here's what I would personally do:

1. I would start by creating a resource on my website with the sole purpose of attracting and retaining visitors.

The word "resource" is a broad term that encompasses anything that is valuable to your audience. For example, it could be a large collection of things that your audience wants to download: images, files, PDFs, audio clips, videos, etc.

This resource would occupy a prominent section of my site and make it a go-to resource within the industry. It would be featured in my sidebar so every visitor would get a chance to access it, and I would even add a navigational menu item in the header with an asterisk next to it to attract attention.

Here is a perfect example of a resource page, the CakesbyMK cake section:

Example of a resource section - CakesbyMK

No email opt-ins, no barriers to entry... just a largely accessible resource that people bookmark and consume.

This, in turn, would likely increase the overall user metrics for my site, irrespective of the other content on my site.

Other Resource Examples:

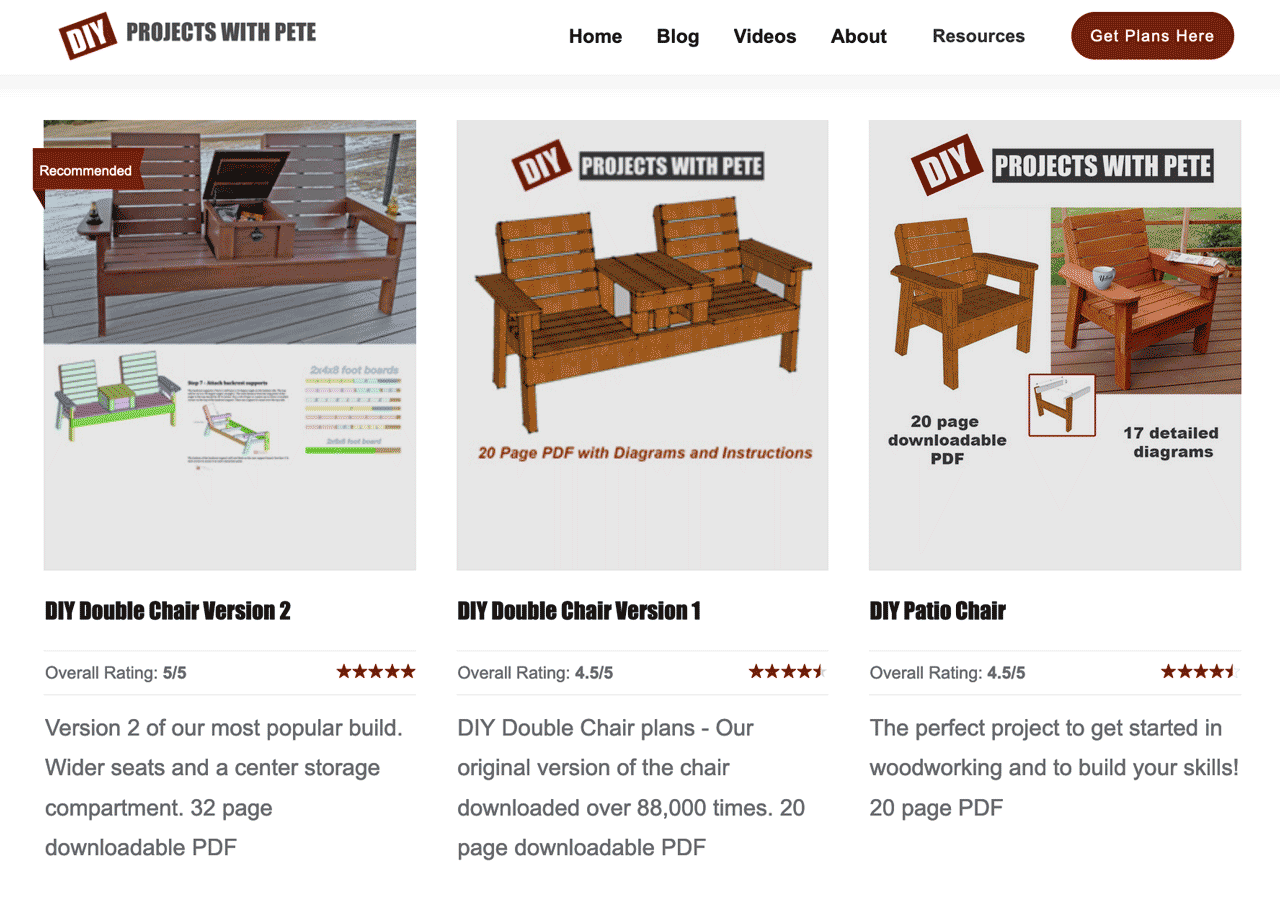

For example, a home renovation site could feature detailed blueprints / schematics in downloadable PDF format for 50 popular DIY projects. Visitors access a giant tiled resource page where they can click on the DIY project of their choice and download the PDF associated with it.

Here's a website doing just that...

Example of a resource section - Projects With Pete

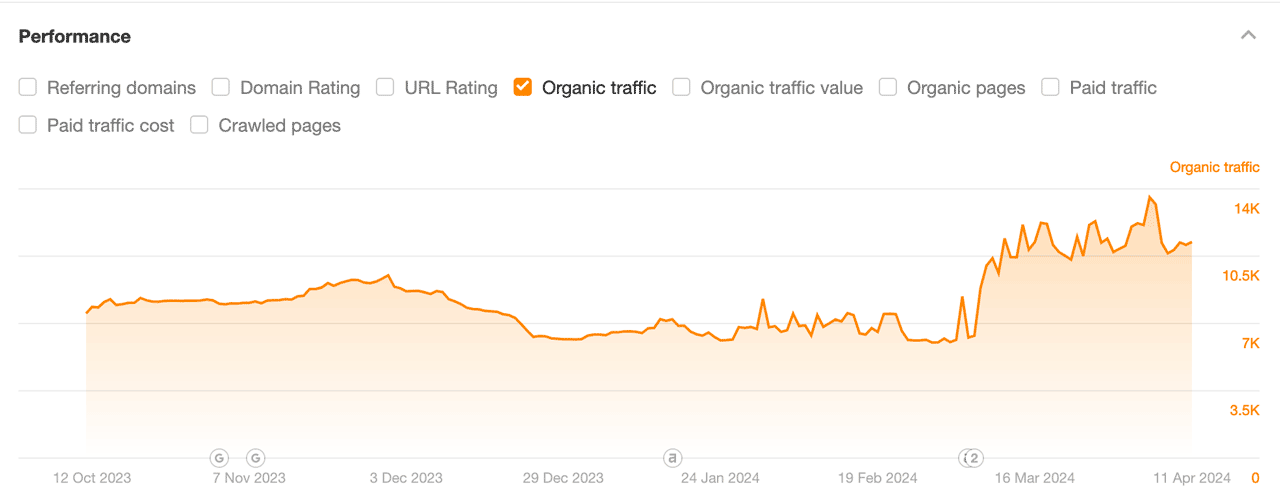

And they conveniently just doubled in traffic after the March Core Update.

Ahrefs Traffic Graph - Projects With Pete

Alternatively, a resource can ALSO be a calculator or a free tool that is valuable to the community. For instance, a free backlink checker within the SEO industry could be a valuable tool that encourages readers to return.

Within the casino industry, it could be a free "odds calculator" or another tool that helps users perform at their best.

It can even be a single, extremely long and detailed report such as this one.

The type of resource doesn't really matter... as long as it's valuable and keeps visitors coming back for more. And if you can feature it in the sidebar in order to 'hook' new visitors, even better.

2. The next thing I would do is develop a new 'engaged traffic' source.

While Google traffic is known to convert into sales, it isn't known for its long session times. When users come from Google, they usually want a quick answer and then leave as soon as possible.

In order to improve the overall site metrics, I would seek a secondary, more engaged, traffic source that would funnel users to my resource pages. This could be from Reddit, Facebook, Instagram, Pinterest, Youtube, TikTok, Twitter, LinkedIn and even streaming from Twitch. The source of traffic doesn't really matter as long as they remain engaged with my content.

Personally, I would opt for a Facebook group and I would consider running very low cost ads on Facebook & Instagram to drive a steady 'set and forget' flow of traffic to my resource pages. That way, you're virtually guaranteed to get visitors to the site.

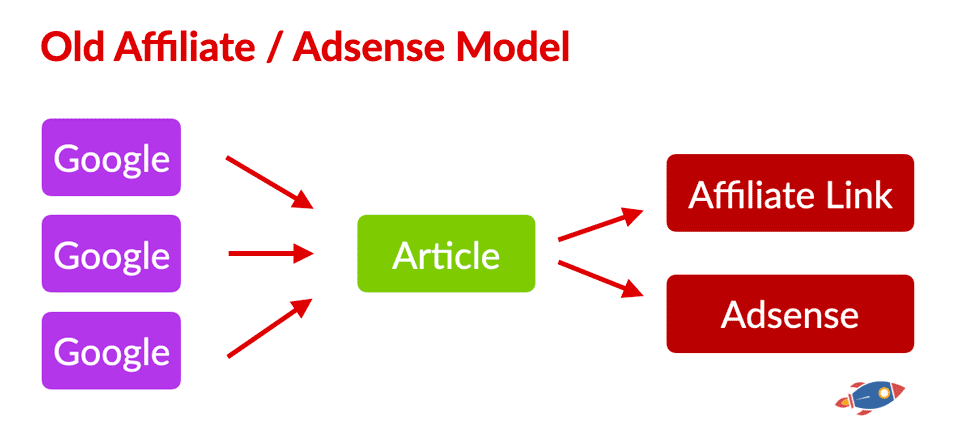

Next, I would move AWAY from this old model (down below):

In the old affiliate model, you drive traffic directly from Google to articles and then monetize them as quickly as possible.

This leads to poor site engagement even if the content is good.

Instead, here's the new affiliate model that I would adopt:

In this new model, I'm funneling visitors from social media to the new resource section of my site. This resource section is designed exclusively to 'trap my visitors into an endless loop of goodness', which will, in turn, significantly increase the engagement of my site.

Once my site's engagement metric is high enough, all the articles on my site should rank. From there, I would optimize my content to have highly related entities (focusing on entity density) in order to rank for highly profitable keywords.

After implementing a new resource and funneling traffic, I would wait 1 month for Google to recalculate the site's new metric score. This might be all that needs to be done.

3. If the new resource funnel isn't enough on its own to outweigh the poor metrics on the site, the next thing I would do is change my keyword targeting.

I'm functioning under the assumption that Google measures a metric score in a similar fashion to the Core Web Vitals, where it compares your results with sites competing for the same keywords and classifies it with a percentile.

With that in mind, if I change my keyword targeting to target entirely NEW keywords, then I have no competition to compete with... and therefore, I should be #1 by default.

(If no one else is competing for a keyword and I'm the only one writing about it... then I must be the best page on the topic!)
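To make the percentile assumption concrete, here is a minimal Python sketch. It assumes, hypothetically, that engagement is reduced to a single number per page (for example, average session seconds) and compared only against pages targeting the same keyword; the function name and scores are illustrative, not anything Google has published.

```python
from bisect import bisect_left

def engagement_percentile(my_score: float, competitor_scores: list[float]) -> float:
    """Percent of competing pages whose score is strictly below ours (0-100).

    Hypothetical model: one engagement number per page, compared only
    against pages targeting the same keyword.
    """
    if not competitor_scores:
        return 100.0  # no competitors: top of the distribution by default
    ranked = sorted(competitor_scores)
    below = bisect_left(ranked, my_score)  # count of scores strictly less than my_score
    return 100.0 * below / len(ranked)

print(engagement_percentile(90, [30, 45, 60, 120]))  # 75.0
print(engagement_percentile(90, []))                 # 100.0
```

Returning 100 for an empty competitor list is exactly the "#1 by default" case: with nothing to compare against, any score lands at the top.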

So instead of competing for 'short keywords' with Google traffic where I'm destined to have worse retention when compared to sites like Reddit, I would begin targeting brand new keywords that don't yet exist.

As Google consistently begins to discover that my site has the longest retention rates for the new keywords (because remember, no one else is competing and I have a great resource section, so I'm always in the top 1 percent), then in theory, the entire reputation of my site should change.

And yes, new keywords ARE more difficult to create and DO require some level of effort. You can't just put a seed keyword into a keyword tool and receive a list of new, never-before-covered keywords. Keyword tools, almost by definition, cover existing keywords.

My process for developing new keywords:

A) I start by looking at existing keywords / topics.

B) Then I research upcoming trends, developments and news within the industry. I dive deeper into a topic, covering things that others have not covered yet.

C) Finally, I forecast what readers will be searching for in the near future. (These are my new keywords)

The ultimate goal is to create content that still attracts readers while being completely unique. It's not just the content that's unique... it's the idea/topic/keyword that's unique!

And yes, I'm sure others will follow suit and copy my "new keywords"; however, by then, I'll have already established myself and my metric score will be higher.

Troubleshooting

#1 What if I already have large quantities of generic, short content?

This is a tricky one... and ultimately I believe there are two options:

I could take the brute force approach, trying to create a resource that's so valuable that it outweighs the short, generic content... or I can address it.

One approach might be to repurpose content, transforming it into a new topic in order to eliminate (or reduce) any competition.

For example, suppose I have a pre-existing article on "how to bake a chocolate cake". It might be a great page... but there are approximately 2 million other pages on the same topic.

Instead, I could rework the page to change the targeting.

With some quick modifications, I could transform the targeting of the page from "How to bake a chocolate cake" to "Making my niece a chocolate mousse cake for her birthday"

In SEO terms, we've just changed it from a common keyword such as: "how to bake a chocolate cake" and created a brand new keyword: "chocolate mousse cake for niece birthday"

The article is almost the same, except for the title, H1 tag, the URL slug and perhaps a few words in the intro.

The targeting however... changes entirely.

We are no longer competing against millions of other pages and we stand alone as one of the only people to cover the topic of chocolate mousse cakes for nieces.

This greatly increases the chances of our pages being in the top percentile of most engaging pages. It might attract significantly fewer readers... however, those visitors would likely be more engaged once they landed on my site, thus rewarding the entire site.

After all, if you've already lost 100% of your traffic due to poor site metrics, then this would be a step up.

Plus, I would personally take another drastic step after the March Core Update...

(Warning: this might not be for everyone)

Until I recover, I would remove or trim short content that has no chance of keeping people on the site.

When recovering, I would avoid creating content that has a very simple answer and actually remove content that might be dragging down my overall site metrics (even if it's a good answer).

For example, if I have a page that is dedicated to answering the question "how long should I cook a chicken", I would remove it because there are likely hundreds of other pages competing for the same term AND there is no chance that I'm going to have better metrics/engagement than those other pages.

Do I want to rank for terms that encourage users to quickly come and go from my site? Or do I want to rank for "long keywords" that increase the likelihood of users staying on the site?

For example, instead of "how long should I cook a chicken" (a short keyword), I might target a keyword such as "Risks and side effects of consuming uncooked chicken". Now THAT'S a page that your reader is likely to spend time on, reading every bit of information. (Perhaps even follow it up with another article titled: "What to do if you suspect you've consumed uncooked chicken")

And you'll find yourself a highly motivated crowd that is very engaged with your content.

For comparison,

Ranking for "how long should I cook a chicken" might lead to 5-10 second site sessions...

While ranking for "Risks and side effects of consuming uncooked chicken" with a follow-up article "What to do if you suspect you've consumed uncooked chicken" might lead to a 3-5 minute session... even if the quality and effort to create the content is the same!
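This comparison is really an argument about averages: a site-wide figure is dominated by whichever pages receive the most sessions. The Python sketch below shows that arithmetic, assuming the site metric is a session-weighted mean; the page slugs, session counts and durations are hypothetical.

```python
def site_average_session(pages: dict[str, tuple[int, float]]) -> float:
    """Session-weighted mean duration in seconds; pages maps slug -> (sessions, avg_seconds)."""
    total_sessions = sum(sessions for sessions, _ in pages.values())
    if total_sessions == 0:
        return 0.0
    total_seconds = sum(sessions * avg for sessions, avg in pages.values())
    return total_seconds / total_sessions

# Hypothetical traffic mix: many quick visits to a short answer, fewer long reads.
pages = {
    "how-long-to-cook-chicken": (900, 8.0),
    "risks-of-uncooked-chicken": (100, 240.0),
}
before = site_average_session(pages)
after = site_average_session(
    {slug: v for slug, v in pages.items() if slug != "how-long-to-cook-chicken"}
)
print(f"{before:.1f}s -> {after:.1f}s")  # 31.2s -> 240.0s
```

Note that trimming the short page also removes its traffic, so total time on site drops; the average rises only because the remaining sessions are longer. Whether Google looks at averages, totals or something else entirely is an assumption, not something Google has confirmed.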

#2 Help! I'm worried I'm going to waste a bunch of time creating a resource and it won't work!

The beauty of the web is that you can test quickly and you get unlimited attempts. When I'm stuck trying to figure out 'what will work', I will put out a single high quality product, measure the results... and then either scale it or go in an entirely different direction.

While I understand creating a resource might seem daunting, I believe it's possible to test the FIRST piece before spending hundreds of hours scaling your resources.

For example, if I'm thinking of creating a collection of DIY blueprints for home projects, I can create ONE of them and share it on social media. If the reaction is positive, then I know I'm on to a winner. If no one cares and I barely get any engagement, then it's probably not a good resource.

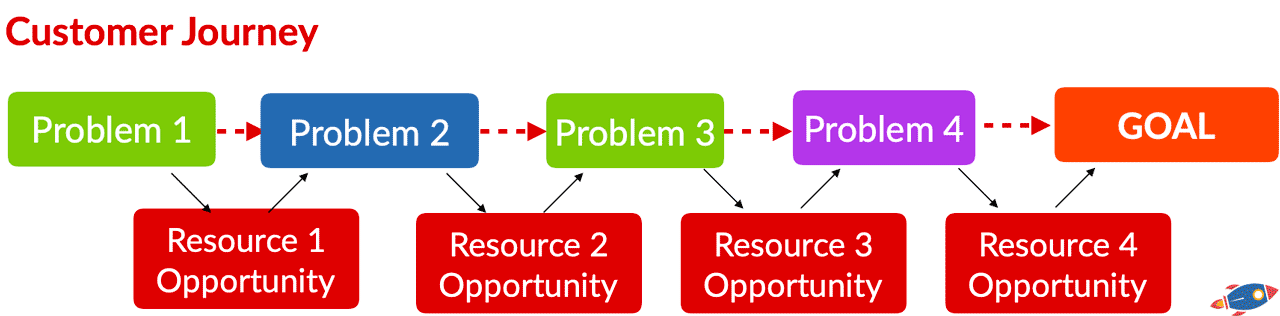

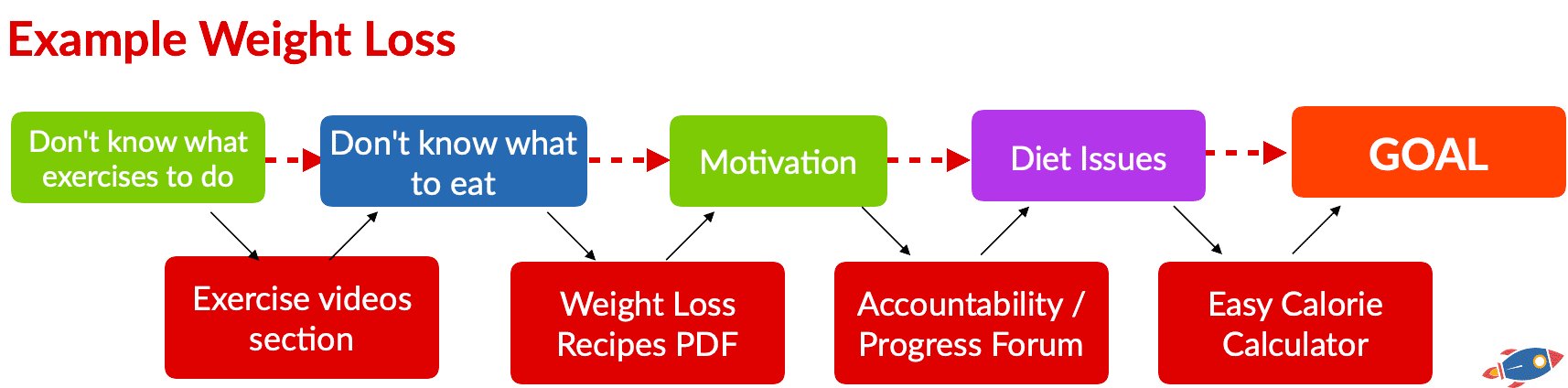

When brainstorming resources, I imagine my customer's journey and identify all the problems (pain points) that they must go through in order to accomplish their goal.

For each problem, there is likely a resource you can create that will help them get to the next step. One question I like to ask myself when measuring the value of a potential resource I'm thinking of creating is:

"Would someone be willing to pay for this?"

If I believe I could potentially sell the resource I'm creating for $5 to $20, then I feel confident that people will see value in it when I give it out for free.

Here's a practical example:

For each step in my customer's journey, there is an opportunity to create a resource that can help them. In the example above, we have different TYPES of resources, ranging from videos and PDFs to a forum and a calculator.

All of them can potentially work.

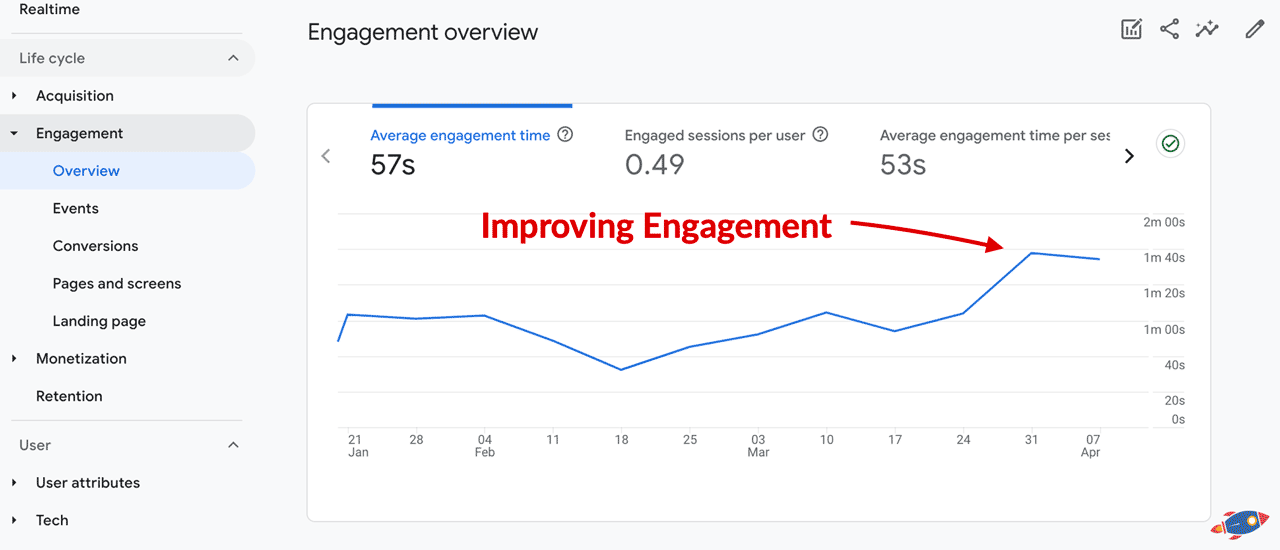

Tracking Progress

After creating a resource section, funneling traffic from social media to my resource page and dealing with content that might lead to short sessions, I would monitor the following metrics using an analytics tool such as Google Analytics or Plausible.

Specifically, I would monitor:

- Overall session time

- Page views per visitor

While bounce rate is interesting, I don't care about it as long as the overall session time is high and my visitors are engaging with multiple aspects of my site.

For those with multiple sub-domains, I would personally try to combine the data, as I believe that Google measures a site-wide metric. If your www.site.com metrics are great, then your forum.site.com is likely to perform well too, which is why many sites with active forums have seen stable traffic numbers in recent times.
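Combining sub-domains is straightforward once the raw data is out of your analytics tool. The Python sketch below does not call the Google Analytics or Plausible APIs; it assumes you have already exported one row per session with a hostname, a visitor ID, a duration and a pageview count (the Session field names are hypothetical) and rolls www. and forum. traffic up into one site-wide figure.

```python
from collections import defaultdict
from dataclasses import dataclass

@dataclass
class Session:
    # Hypothetical export row; map these fields from whatever your analytics tool exports.
    hostname: str       # e.g. "www.site.com" or "forum.site.com"
    visitor_id: str
    seconds: float
    pageviews: int

def root_domain(hostname: str) -> str:
    # Naive: keeps the last two labels, so suffixes like ".co.uk" are NOT handled.
    return ".".join(hostname.lower().split(".")[-2:])

def site_wide_metrics(sessions: list[Session]) -> dict[str, dict[str, float]]:
    """Merge sub-domains into one site, then compute session time and pages per visitor."""
    grouped: dict[str, list[Session]] = defaultdict(list)
    for s in sessions:
        grouped[root_domain(s.hostname)].append(s)
    report: dict[str, dict[str, float]] = {}
    for domain, items in grouped.items():
        visitors = {s.visitor_id for s in items}
        report[domain] = {
            "avg_session_seconds": sum(s.seconds for s in items) / len(items),
            "pageviews_per_visitor": sum(s.pageviews for s in items) / len(visitors),
        }
    return report

sessions = [
    Session("www.site.com", "a", 30, 1),
    Session("forum.site.com", "a", 300, 6),
    Session("www.site.com", "b", 60, 2),
]
print(site_wide_metrics(sessions))
```

In this made-up sample, the long forum session lifts the combined average to 130 seconds, which the www. pages alone would never reach.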

For example, with Google Analytics:

Measuring Engagement With Google Analytics

Using Google Analytics, we tracked the engagement improvement over time; engagement nearly doubled within a few weeks.

13. On-Going March Core Tests & Experiments

(Validating Theories)

While theories are nice, real world testing is what will determine what really happened during the March Core update. In order to solidify our understanding of the March Core Update, we have set up multiple real-world SEO tests to see how Google reacts in certain situations.

We will be updating this page with the latest test results and encourage you to bookmark this page if you wish to see the updated test results in the future.

Historically, it has taken Google multiple weeks to months to react to changes so we'll have to see how quickly we achieve results.

Test #1 (On-Going)

- We are creating a unique resource section that will be featured on the site's sidebar and header. This resource will receive social media traffic in order to improve the overall site metrics. Ultimately, we want to get our users in an engagement loop as outlined in our model down below:

Test #2 (On-Going)

- We are changing the keyword targeting of pages so that they target unique, rarely seen keywords. The goal is to set us apart as the best source of information on the internet for those specific, unique keywords. In order to accomplish this, we are reworking titles, URL slugs and first paragraphs.

Test #3 (On-Going)

- We are creating a viral 'clickbait' style article that will be linked on the sidebar of the site. The absurdity of the article's image & title will encourage users to click it. We plan on split testing titles & images to achieve the highest click-through rate. The goal is to offer an alternative to the resource section as another place to click on the site.

10. Potential Loopholes

(Warning / Exploits)

The beauty of an ever-changing search landscape is that there are always new opportunities, new loopholes, and new avenues to be leveraged (or capitalized on). Of course, there are good opportunities (such as programmatic content) and then there are opportunities that bad actors will likely abuse.

I do not recommend or endorse anything in this list.

Here are some of the potential new opportunities that this change creates.

#1 Programmatic Content

With the advances in AI, creating fully automated sites with programmatic content has never been easier. The trick, however, is to provide value (resources) while targeting keywords that no one else has targeted before.

Programmatic content usually combines different data sources to produce new content that is both useful and that has never been seen before.

For example, if you were to combine data from:

- Historical temperature records

- Current temperature records

- Umbrella sales

- Portable heater sales

- Air conditioning unit sales

You could create a programmatic website that publishes new keywords such as:

Weather-Driven Sales Trends [Date]

Weather-Driven Sales Trends April 2024

Weather-Driven Sales Trends May 2024

Weather-Driven Sales Trends June 2024

New pages would be published each month with pertinent data.
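A programmatic site like this is mostly a data join plus a template. The Python sketch below assumes the temperature and sales figures have already been merged into one record per month (the MonthData fields and all numbers are hypothetical) and renders a dated "Weather-Driven Sales Trends" page from it.

```python
from dataclasses import dataclass
from datetime import datetime

@dataclass
class MonthData:
    # Hypothetical merged record: one row per month after joining weather and sales sources.
    month: str                  # "YYYY-MM"
    avg_temp_c: float
    historical_avg_temp_c: float
    umbrella_sales: int
    heater_sales: int
    ac_sales: int

def build_monthly_page(d: MonthData) -> dict[str, str]:
    """Render one 'Weather-Driven Sales Trends [Date]' page from a merged monthly record."""
    label = datetime.strptime(d.month, "%Y-%m").strftime("%B %Y")  # "2024-04" -> "April 2024"
    delta = d.avg_temp_c - d.historical_avg_temp_c
    if delta > 0:
        comparison = f"{delta:.1f} °C warmer than"
    elif delta < 0:
        comparison = f"{-delta:.1f} °C cooler than"
    else:
        comparison = "the same as"
    body = (
        f"{label} averaged {d.avg_temp_c:.1f} °C, {comparison} the historical average. "
        f"Units sold: {d.umbrella_sales} umbrellas, {d.heater_sales} portable heaters, "
        f"{d.ac_sales} air conditioning units."
    )
    return {"title": f"Weather-Driven Sales Trends {label}", "body": body}

page = build_monthly_page(MonthData("2024-04", 12.0, 10.0, 500, 40, 15))
print(page["title"])
print(page["body"])
```

Each new month of data produces a new page and, by extension, a new keyword that did not exist the month before.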

Weather Impact On Sales In [City / Region]

Weather Impact On Sales In New York

Weather Impact On Sales In Paris

Weather Impact On Sales In Montreal

This could be scaled by adding data for nearly every city / region that you have data for. You could easily create hundreds of thousands of pages with original, programmatic data that is useful to readers.
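Scaling by location is a cartesian product of templates and places. The Python sketch below generates a title and URL slug for each combination; the template list, place list and slugify helper are hypothetical illustrations, not part of any particular CMS.

```python
import itertools
import re
import unicodedata

def slugify(text: str) -> str:
    # Drop accents, lowercase, and collapse every non-alphanumeric run into one hyphen.
    ascii_text = unicodedata.normalize("NFKD", text).encode("ascii", "ignore").decode("ascii")
    return re.sub(r"[^a-z0-9]+", "-", ascii_text.lower()).strip("-")

# Hypothetical inputs: only list places you actually have data for.
TEMPLATES = ["Weather Impact On Sales In {place}"]
PLACES = ["New York", "Paris", "Montreal"]

def generate_pages(templates: list[str], places: list[str]):
    """Yield one title/slug pair per (template, place) combination."""
    for template, place in itertools.product(templates, places):
        title = template.format(place=place)
        yield {"title": title, "slug": slugify(title)}

for page in generate_pages(TEMPLATES, PLACES):
    print(page["slug"])
```

The page count is templates × places, which is how a modest data set grows into a very large site; generating a page only for places with real data keeps each one useful rather than empty.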

By creating new keywords at scale, your programmatic website could stand alone, capturing traffic from industry experts looking for unique combinations of data.

#2 Resource Scraping & Spinning

I am NOT suggesting anyone do this; however, I know it's going to happen, so I thought I should warn people about it in advance.

With the advances in AI, it is exceedingly easy to "spin / re-imagine" resources that have already been created by other content creators.

1. Did someone else create a PDF?

Someone can spin it, rewriting it with AI in a matter of minutes. Do this 20 times and you now have a collection of 20 "original" PDFs you can offer for download on a website.

This is even EASIER when working in a language other than English. Imagine taking a collection of PDF documents, translating them from English to French... and then calling it a day.

Please note that I do NOT recommend claiming other people's content as your own. If you were to translate things, I would get the author's permission first and provide credit to the author.

2. Did someone else create an image?

People are likely to 're-imagine' images with tools such as Midjourney, taking images from other content creators and transforming them into their own.

I'm not entirely sure how you can protect yourself from this happening... however I did want to warn that if resource sections become the next 'hot thing', this is almost inevitable. Especially since AI manipulation might make DMCAs less effective because the content might be 'different enough' while still being heavily inspired.

It's a murky situation.

Please note that I do NOT recommend spinning images. With AI, it's easy to create new ones from scratch.

#3 Fake Stories / Deep Fakes

We all know that negative and outlandish news sells. In an effort to capture attention, I foresee that we'll likely see an increase in fake outlandish content being created for the sole purpose of getting people clicking.

Imagine being on an F1 racing website and seeing a deepfake of Lewis Hamilton in the sidebar with a sub-title:

"F1 driver's horrendous crash"

I'm sure your imagination can run wild with the pure ridiculousness that can come up in desperation to keep people on a site. This is likely to increase.

Please note that I do NOT recommend creating outlandish/fake stories. This will destroy your reputation.

#4 Social "Take Over" / Parasite SEO

This has already started happening, as malicious actors have taken over high ranking websites to host their own content.

While you could start your own Reddit sub-reddit and start posting content there... it's ALSO possible to sometimes take over an entire existing sub-reddit.

For example, in the past year, the million-subscriber r/SEO sub-reddit was taken over by a group after they discovered that its moderators were inactive.

There are likely thousands of pre-existing sub-reddits on Reddit with good authority. Hijack a sub-reddit, start posting all your content... and profit?

Similarly, places like LinkedIn.com still allow posting stories that rank.