How To Achieve Top Google Rankings With Entities

While Avoiding Over-Optimization (Full Case Study)

By Eric Lancheres, SEO Researcher and Founder of On-Page.ai


Last updated Nov 16th 2023

1. The Key To Google Rankings

Google's Secret Algorithm Revealed

During the recent U.S. and Plaintiff States v. Google LLC trial, multiple eye-opening documents were presented by current and former Google employees.

One statement in particular was interesting:

Slide from Google's "Search all hands" presentation from the trial exhibits

"We do not understand documents, we fake it" (Source)

This revelation, long suspected by those in the SEO world, was finally acknowledged by Google, providing a moment of validation for many.

(It's important to understand that this statement specifically referred to the state of Google's search engine from 2017 to 2019. This was before Google implemented advanced AI algorithms like the Helpful Content Update classifier. The engineer who made this remark also clarified that while this was the case at that time, they expected significant advancements and changes in how Google understands and processes content in the future.)

As it stands, I believe we are in an era where this is still partially true. Below, I will explain in full how I believe Google uses named entity recognition (entities), a user feedback loop and AI to rank documents.

I aim to explain what Google means when they say "Avoid search engine-first content" (Source) and how optimizing too much, without any regard for your visitors, can actually hurt your entire website. To back up my claims, I will reveal the results of a secretive ranking experiment.

Plus, I'll let you in on my latest step-by-step strategy for ranking content. It's been working really well for me.

2. How Google Initially Ranks Webpages

(Multi-Step Process)

One of the main advantages that Google has over the competition is its incredible speed.

While many SEOs will lament over the time it may take to index certain documents, in general, Google is incredibly fast. This speed is especially evident when you see new pages getting indexed and ranking just hours after they're published, a feat that’s really impressive given the sheer size of the internet.

For example, within hours of a celebrity's death or a natural disaster, Google will have indexed pages discussing the breaking news.

In order to accomplish such a feat, Google has to "fake it": it skips the advanced analysis of pages and instead uses more primitive, brute-force approaches to ranking documents.

This is done through an initial index designed with speed in mind. (By the way, this is a good time to explain that Google has at least 2 indexes: a fast one and a slow one. Here's a Google patent about it.)

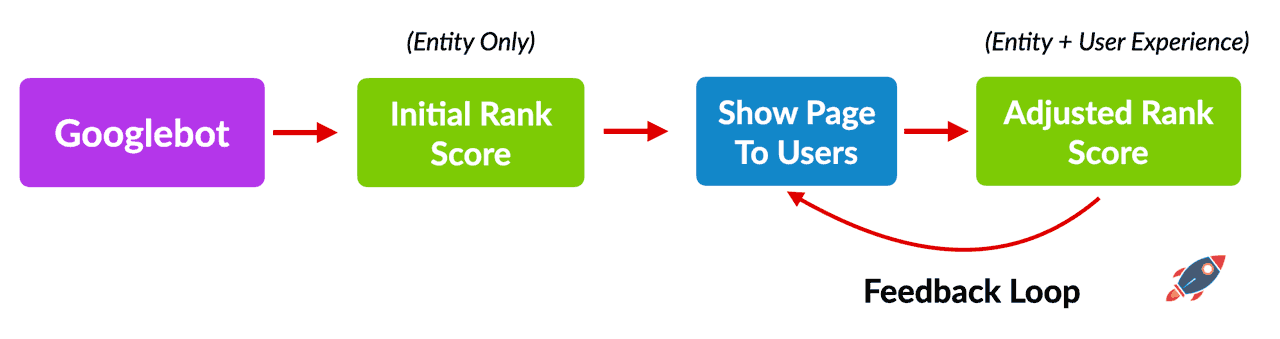

Once a webpage enters the initial index, Google then queues it up for further, in-depth analysis. This secondary analysis is considerably slower and uses more resources (such as AI) to process. At this point, Google will re-adjust the document score (and therefore, the ranking).
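To make the two-stage idea concrete, here is a minimal Python sketch of a fast index that scores a page as soon as it arrives and queues it for a slower, deeper pass that can change the score. The class name, the two scoring functions, and the per-cycle `budget` are hypothetical stand-ins for illustration; this is not how Google's indexes are actually implemented.

```python
from collections import deque
from typing import Callable, Deque, Dict, List, Tuple


class TwoStageIndex:
    """Toy model: instant scoring on arrival, slower re-scoring later (hypothetical)."""

    def __init__(self, fast_score: Callable[[str], float],
                 deep_adjust: Callable[[str, float], float]) -> None:
        self.fast_score = fast_score    # cheap signal, e.g. entity density
        self.deep_adjust = deep_adjust  # expensive signal, e.g. AI or layout analysis
        self.scores: Dict[str, float] = {}
        self.pending: Deque[Tuple[str, str]] = deque()

    def add_page(self, url: str, text: str) -> None:
        # Stage 1: score immediately so the page can rank within minutes.
        self.scores[url] = self.fast_score(text)
        # Then queue it for the slower, in-depth pass.
        self.pending.append((url, text))

    def process_queue(self, budget: int) -> None:
        # Stage 2: re-score at most `budget` queued pages per cycle.
        for _ in range(min(budget, len(self.pending))):
            url, text = self.pending.popleft()
            self.scores[url] = self.deep_adjust(text, self.scores[url])

    def ranking(self) -> List[str]:
        return sorted(self.scores, key=self.scores.get, reverse=True)


index = TwoStageIndex(
    fast_score=lambda text: text.count("hurricane"),
    deep_adjust=lambda text, score: score - 5 if "000" in text else score,
)
index.add_page("a.example", "hurricane hurricane hurricane 000 000")
index.add_page("b.example", "hurricane hurricane update")
print(index.ranking())  # ['a.example', 'b.example']
index.process_queue(budget=10)
print(index.ranking())  # ['b.example', 'a.example']
```

The point of the toy is the ordering flip: the page that wins on the cheap signal can lose once the slower pass runs.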

Initial Ranking: Entity Based Rankings

According to my tests (more on this later), the initial rankings are based entirely on differently weighted ranking factors that Google can calculate quickly.

The main ranking factor, according to my recent analysis post-Helpful Content Update, is the density of related entities within the text.

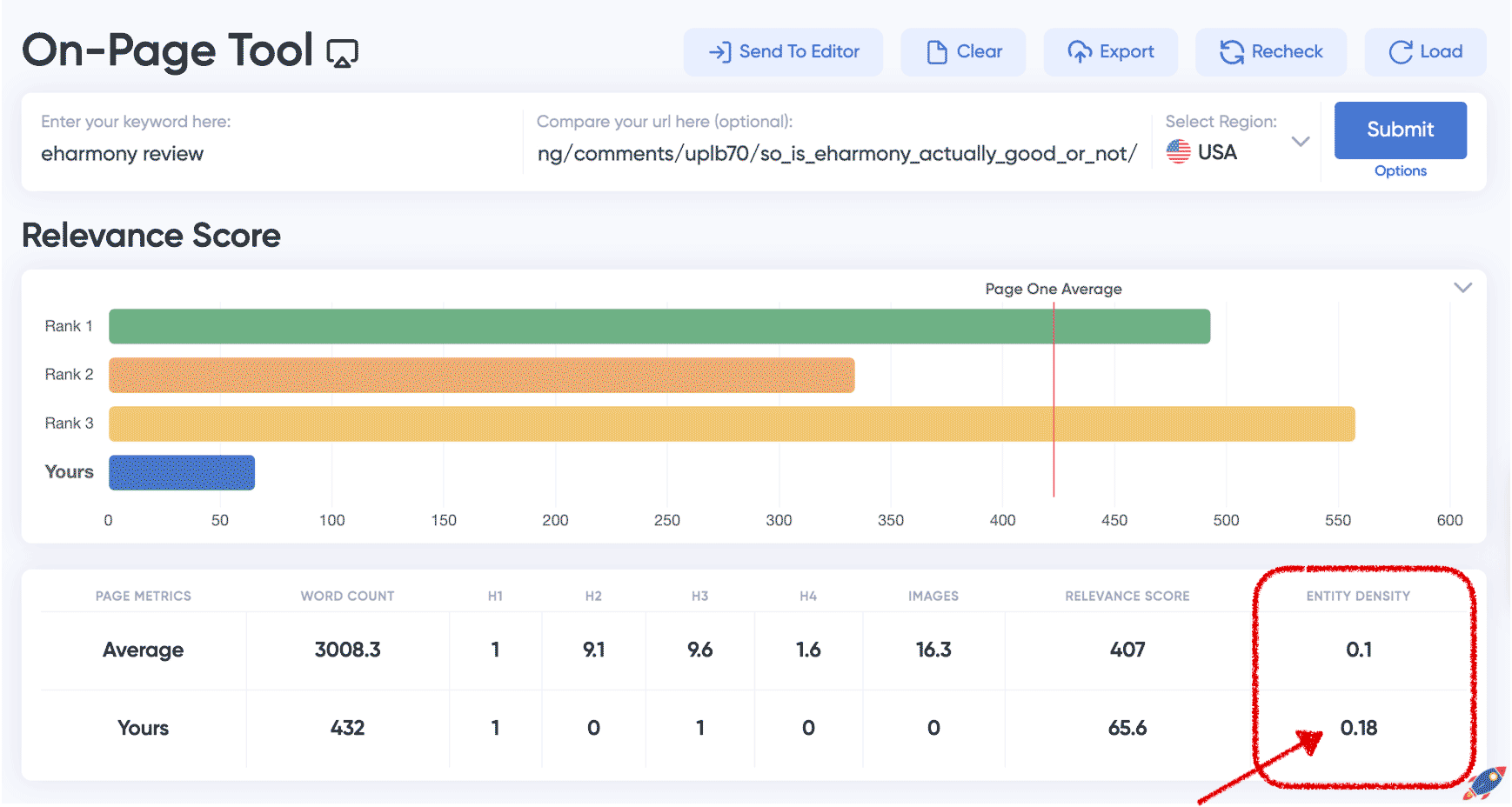

With nearly double the related entity density of the average, this page ranks with a mere 432 words.

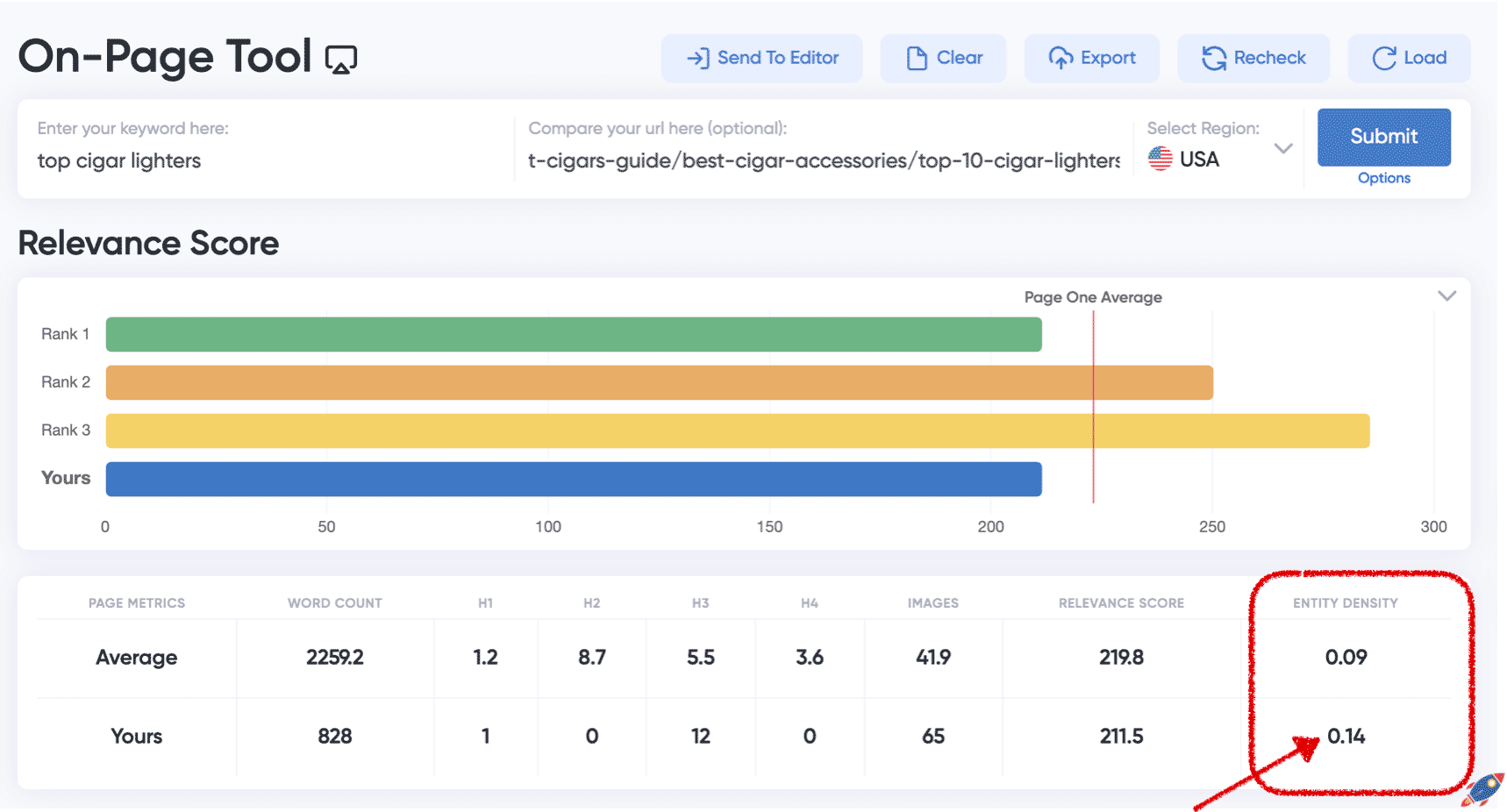

This subjectively low-quality 828-word page outranks higher-quality pages, in large part due to a significantly higher related entity density.

In simpler terms, it's about how often important, topic-related keywords (entities) show up on a webpage.

Generally, the more relevant entities a document contains, the more it's seen as related to the search term, and the higher it might rank.

(But remember, this is a bit of an oversimplification. Other factors, like where these entities are on the page, matter too. For instance, having them in your title tag and subheadings counts for more. And of course, there are many other ranking factors we're not getting into here.)

Ultimately, processing these highly relevant entities is a key part of how Google ranks documents, and this approach is the primary driver of the initial index.
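As a rough illustration, here is one way to compute a "related entity density", assuming it simply means entity mentions divided by total words, plus a variant where mentions in the title and subheadings count extra. Google's actual formula is unknown; the entity list would normally come from an entity tool, and the 2.0 and 1.5 weights are arbitrary placeholders.

```python
import re
from typing import List

WORD = re.compile(r"\w+")


def count_phrase(text: str, phrase: str) -> int:
    """Case-insensitive, whole-word count of a (possibly multi-word) phrase."""
    pattern = r"\b" + r"\s+".join(map(re.escape, phrase.lower().split())) + r"\b"
    return len(re.findall(pattern, text.lower()))


def entity_density(text: str, entities: List[str]) -> float:
    """Entity mentions divided by total words (an assumed, simplified definition)."""
    total_words = len(WORD.findall(text))
    if total_words == 0:
        return 0.0
    return sum(count_phrase(text, e) for e in entities) / total_words


def weighted_entity_density(title: str, headings: List[str], body: str,
                            entities: List[str], title_weight: float = 2.0,
                            heading_weight: float = 1.5) -> float:
    """Like entity_density, but mentions in the title and subheadings count extra.

    The weights are arbitrary placeholders, not known Google values.
    """
    sections = [(title, title_weight)] + [(h, heading_weight) for h in headings] + [(body, 1.0)]
    total_words = sum(len(WORD.findall(text)) for text, _ in sections)
    if total_words == 0:
        return 0.0
    weighted_mentions = sum(weight * count_phrase(text, e)
                            for text, weight in sections for e in entities)
    return weighted_mentions / total_words


entities = ["hurricane", "Hawaii", "evacuation"]
body = "The hurricane hit Hawaii. Evacuation orders were issued across Hawaii."
print(entity_density(body, entities))  # 0.4 (4 mentions / 10 words)
```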

Example

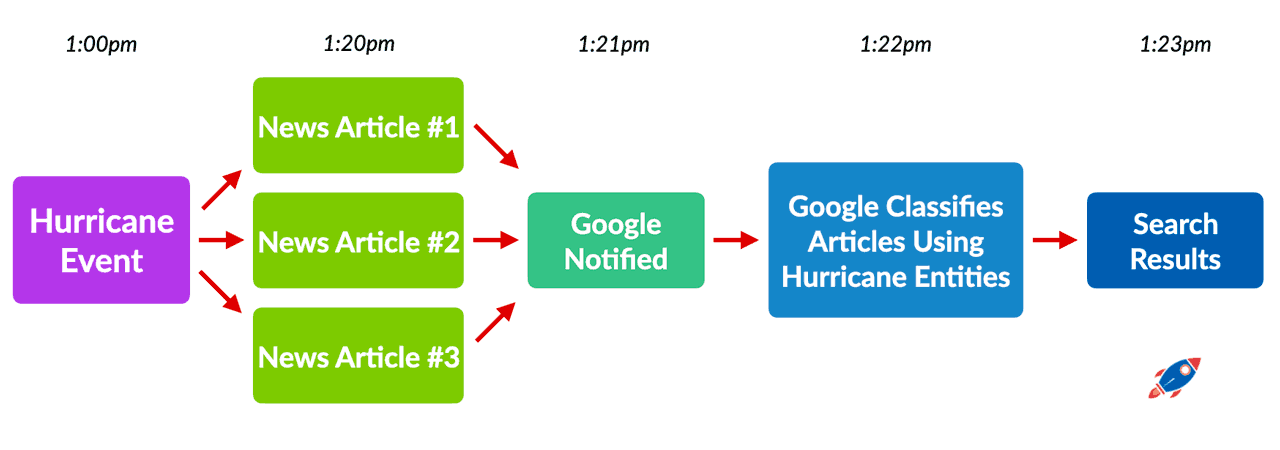

For example, if a hurricane hits Hawaii and users want immediate information on the recent disaster, Google doesn't have time to process all the new news stories through multiple layers of analysis.

It needs to show relevant results as fast as humanly possible.

This is what likely happens in a matter of minutes:

1. Event occurs

2. Journalists immediately start writing articles about the event.

3. When a news story is published on a Google-approved news website, Googlebot quickly crawls the webpage. (News websites can submit a news sitemap, which gets crawled faster by Google.)

4. Google scans the document for all the recognized entities, calculates the density for each query and ranks the document within minutes.

This is how we can achieve near-instant rankings on Google using the fast index.
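For reference, a Google News sitemap is an ordinary XML sitemap with extra tags from the `http://www.google.com/schemas/sitemap-news/0.9` namespace (publication name and language, publication date, and title). The sketch below builds one with Python's standard library. The URL, date, headline, and publication name are placeholders, and the code says nothing about how quickly Google crawls the result.

```python
import xml.etree.ElementTree as ET
from typing import List, Tuple

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"
NEWS_NS = "http://www.google.com/schemas/sitemap-news/0.9"
ET.register_namespace("", SITEMAP_NS)   # serialize as the default namespace
ET.register_namespace("news", NEWS_NS)  # serialize as xmlns:news


def build_news_sitemap(articles: List[Tuple[str, str, str]], publication: str,
                       language: str = "en") -> str:
    """Return news sitemap XML for (url, publication_date, title) tuples."""
    urlset = ET.Element(f"{{{SITEMAP_NS}}}urlset")
    for url, published, title in articles:
        url_el = ET.SubElement(urlset, f"{{{SITEMAP_NS}}}url")
        ET.SubElement(url_el, f"{{{SITEMAP_NS}}}loc").text = url
        news = ET.SubElement(url_el, f"{{{NEWS_NS}}}news")
        pub = ET.SubElement(news, f"{{{NEWS_NS}}}publication")
        ET.SubElement(pub, f"{{{NEWS_NS}}}name").text = publication
        ET.SubElement(pub, f"{{{NEWS_NS}}}language").text = language
        ET.SubElement(news, f"{{{NEWS_NS}}}publication_date").text = published
        ET.SubElement(news, f"{{{NEWS_NS}}}title").text = title
    return ET.tostring(urlset, encoding="unicode")


print(build_news_sitemap(
    [("https://news.example.com/hurricane-hawaii", "2023-01-01T08:00:00+00:00",
      "Hurricane Hits Hawaii")],
    publication="Example News",
))
```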

Secondary Index

Once Google has an initial ranking for a document, it can start showing it to users to see how they react. This allows Google to gather additional user-based data on the webpage, such as click-through rate, engagement, how often users bounce back to Google, and so forth. Google will also experiment with your title tag and different meta descriptions, comparing these listings with others in the search results.

If you've ever heard of the "Google dance" where results fluctuate from one day to the next as Google tests user interactions, this is it!

These user interactions offer Google valuable feedback, enabling it to determine whether to boost or lower the page's rankings.

In addition, Google now has time to run the page through a more thorough processing stage. This stage involves AI, sophisticated layout algorithms, and a deeper analysis of the page.

This process, combined with the user data gathered during this period, allows Google to reassess and refine the page’s score, factoring in all relevant metrics.
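The user-based data described above can be pictured with a small sketch that computes click-through rate and a "quick return to Google" rate from a log of search impressions. The `Impression` record, its fields, the sample data, and the 30-second cutoff are all assumptions made for illustration. Google has not published which interaction metrics it uses or how it defines them.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Impression:
    url: str
    clicked: bool
    # Seconds before the user came back to the results page; None if they never returned.
    seconds_until_return: Optional[float] = None


def interaction_metrics(log: List[Impression], url: str,
                        quick_return: float = 30.0) -> dict:
    """CTR and the share of clicks that bounced back quickly (hypothetical cutoff)."""
    shown = [imp for imp in log if imp.url == url]
    clicks = [imp for imp in shown if imp.clicked]
    quick_returns = [imp for imp in clicks
                     if imp.seconds_until_return is not None
                     and imp.seconds_until_return < quick_return]
    return {
        "ctr": len(clicks) / len(shown) if shown else 0.0,
        "quick_return_rate": len(quick_returns) / len(clicks) if clicks else 0.0,
    }


# Made-up sample log for one page.
log = [
    Impression("gibberish.example", clicked=True, seconds_until_return=13),
    Impression("gibberish.example", clicked=True, seconds_until_return=9),
    Impression("gibberish.example", clicked=False),
    Impression("gibberish.example", clicked=False),
]
print(interaction_metrics(log, "gibberish.example"))  # {'ctr': 0.5, 'quick_return_rate': 1.0}
```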

On-Going Score Update

Googlebot will periodically revisit your page to check whether there have been significant changes that warrant a complete recalculation of its score.

Minor edits like altering a single word won't trigger this, but adding several paragraphs (or rewriting the entire page) will initiate a full reassessment of your page's score.
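One way to model the "significant change" threshold is to compare the old and new text word by word and trigger a full rescore only when similarity drops far enough. `difflib.SequenceMatcher` is part of Python's standard library, but the function name and the 0.9 threshold are hypothetical. Google has not said how it decides that a change is significant.

```python
from difflib import SequenceMatcher


def needs_full_rescore(old_text: str, new_text: str, threshold: float = 0.9) -> bool:
    """True when word-level similarity falls below a hypothetical threshold."""
    matcher = SequenceMatcher(None, old_text.split(), new_text.split(), autojunk=False)
    return matcher.ratio() < threshold


old = " ".join(f"word{i}" for i in range(50))
one_word_edit = old.replace("word7", "changed")
new_paragraphs = old + " " + " ".join(f"added{i}" for i in range(50))
print(needs_full_rescore(old, one_word_edit))   # False (ratio 0.98)
print(needs_full_rescore(old, new_paragraphs))  # True (ratio about 0.67)
```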

With regards to user metrics, these are constantly being reassessed as engagement rates continually fluctuate. As your content attracts more clicks relative to competitors, Google adjusts its ranking accordingly.

3. Testing The Power Of Entities

Case Study

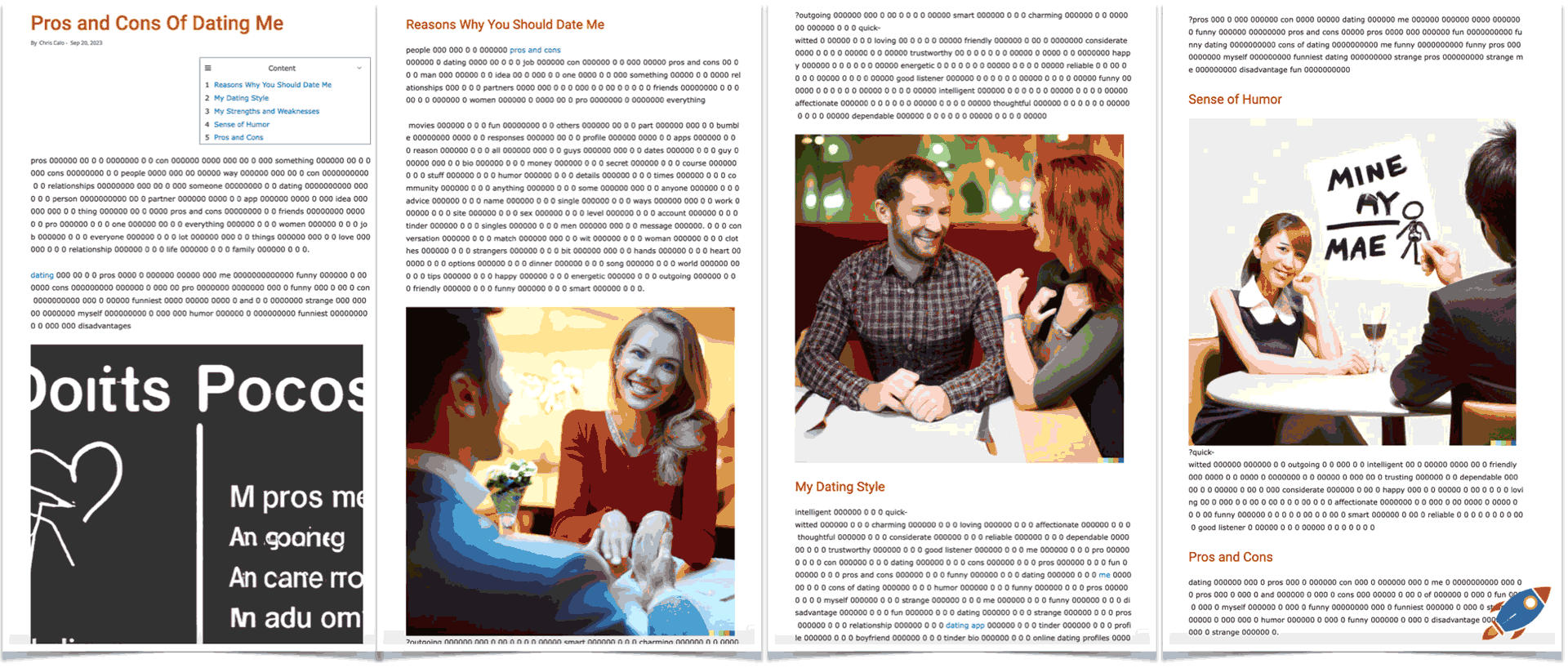

In order to validate some of these theories, I conducted an interesting experiment: ranking a document crafted exclusively from entities and gibberish. The surprising results helped me understand how Google truly functions.

Test Setup

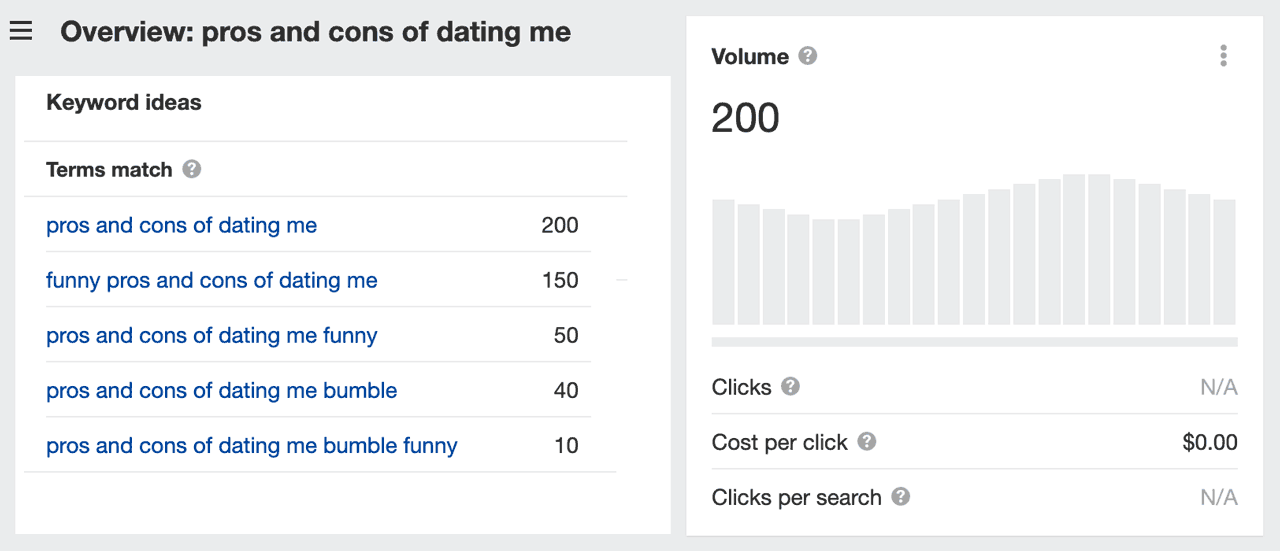

I selected a real, albeit low-competition, keyword with a search volume estimated at 200 per month according to Ahrefs:

"Pros and cons of dating me"

This meant the page would receive real traffic which could provide additional insights into how Google functions.

Note: As much as I believe there is value in testing abstract keywords with zero search volume, testing with real, recognized keywords provides an additional layer of complexity that allows the full Google algorithm to exert its muscles.

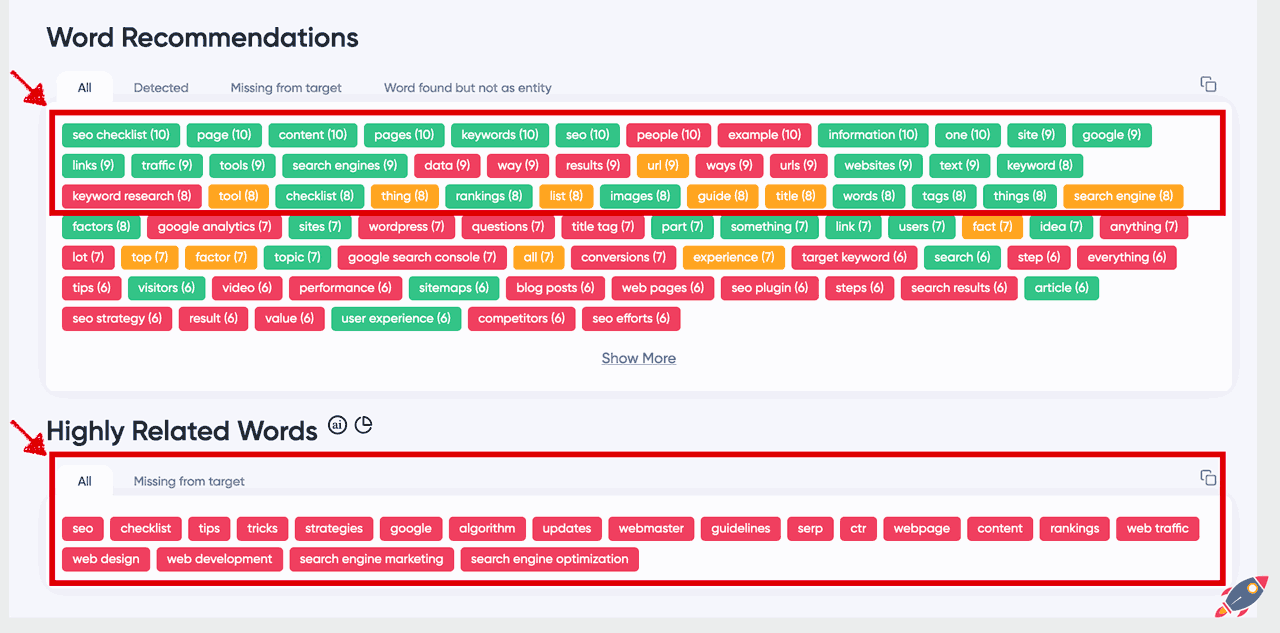

1. Locating Relevant Entities

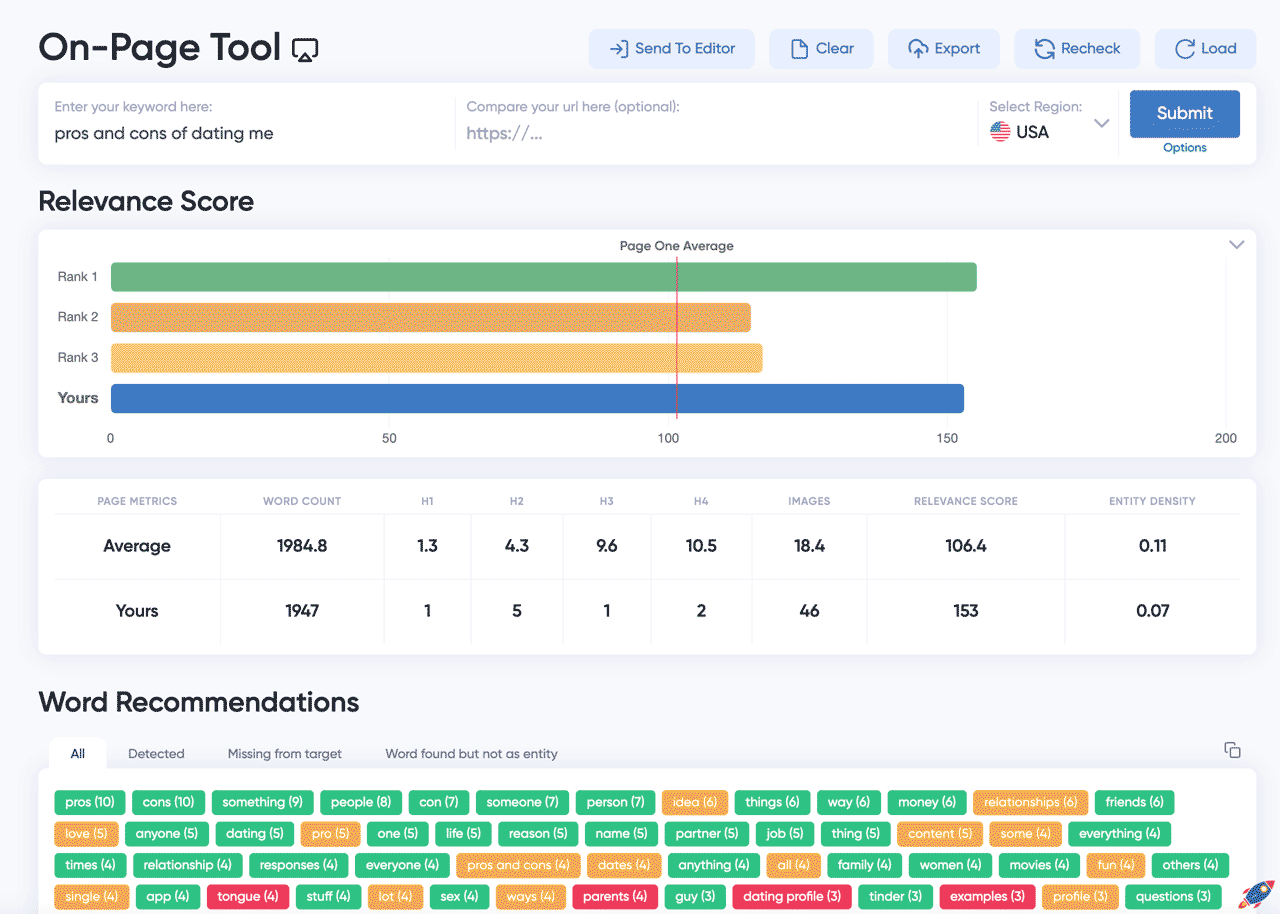

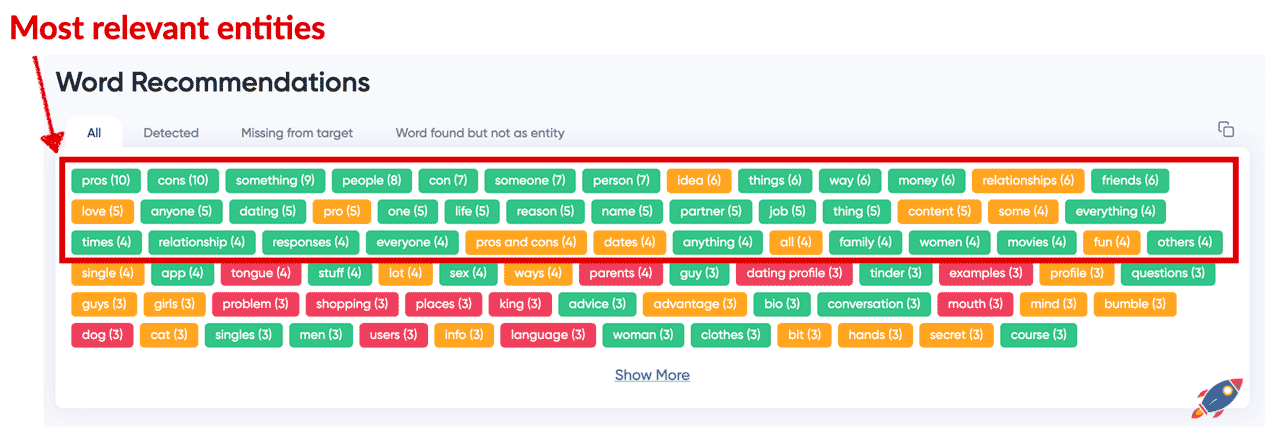

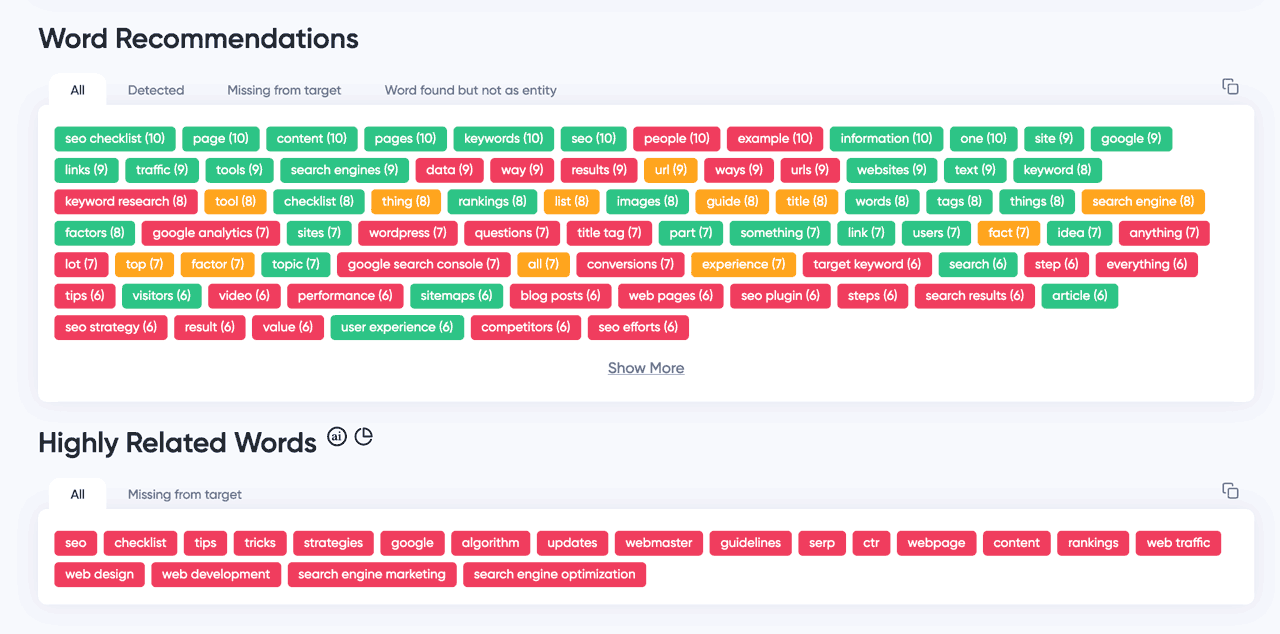

Using the On-Page.ai scan tool, I performed an audit of the target keyword to uncover all the most relevant entities associated with the term.

From within the "Word Recommendations" section, I copied the first 3 rows of the most relevant entities.

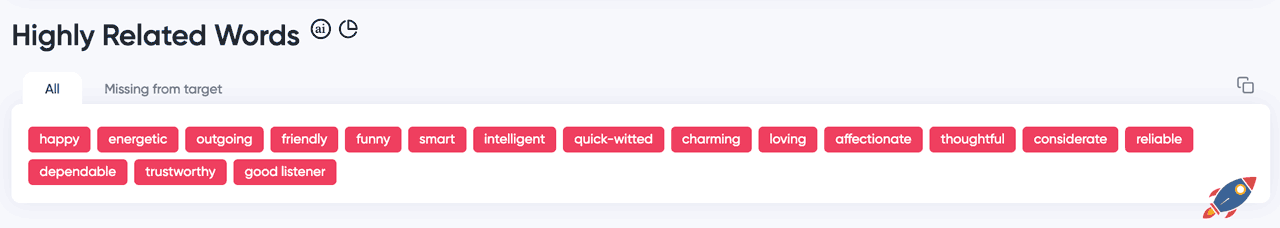

I also took all the suggestions from the "Highly Related Words" section. (Highly Related Words is an On-Page.ai exclusive feature that suggests highly relevant words your competitors may not be using, which can help you gain an advantage over them.)

2. Page Creation

Then I created a webpage that mixes random strings of zeros (for example, 000 00 00 00) with the entities inserted throughout the document. I tried to keep a reasonable, natural spacing between the entities and the random numbers throughout the document (see below).

And I continued this process, creating an entire page from the recommended entities list and random 000 000 00 patterns...

I included 4 generated images, 4 sub-headlines and a table of contents, all following the same computer-first approach. The end result is a page that no reasonable human would ever consume!

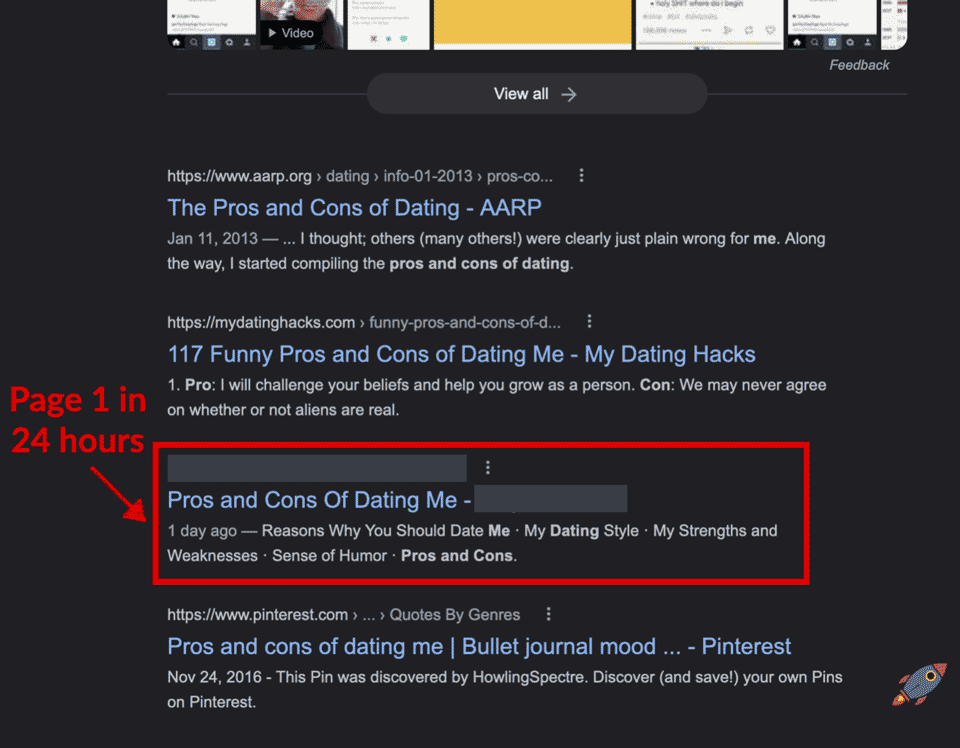
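The page-building procedure above can be sketched as follows: place each entity between short runs of zeros. The entity list, group sizes, and seed are illustrative assumptions, and the sketch covers only the body text, not the images, sub-headlines, or table of contents the real test page had.

```python
import random
from typing import List


def zero_run(rng: random.Random) -> str:
    """A filler chunk such as '000 00 00 00': 2-4 groups of 2-3 zeros."""
    return " ".join("0" * rng.randint(2, 3) for _ in range(rng.randint(2, 4)))


def gibberish_paragraph(entities: List[str], rng: random.Random) -> str:
    """Place every entity, in order, between runs of zeros."""
    parts = []
    for entity in entities:
        parts.append(zero_run(rng))
        parts.append(entity)
    parts.append(zero_run(rng))
    return " ".join(parts)


rng = random.Random(42)  # fixed seed so the output is reproducible
entities = ["relationship", "partner", "personality", "communication"]
print(gibberish_paragraph(entities, rng))
```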

3. Ranking Results

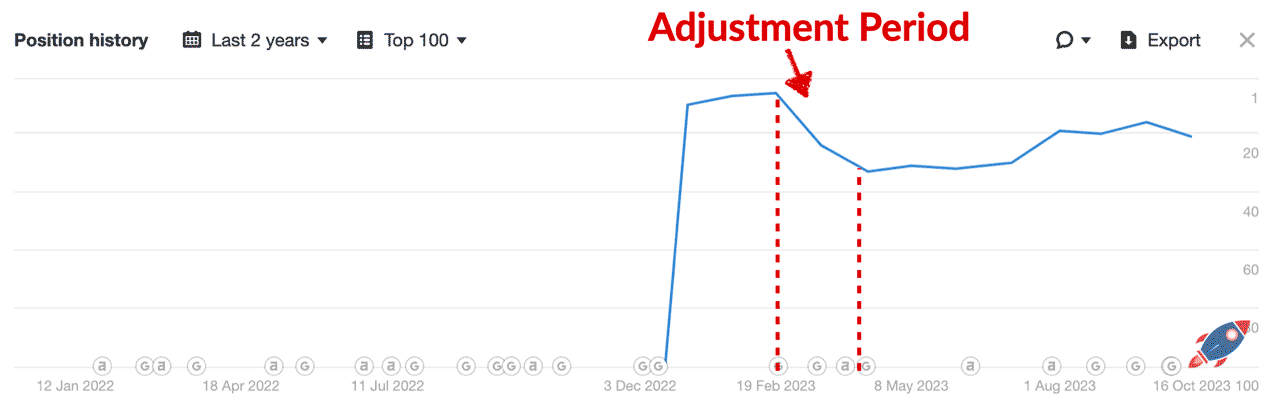

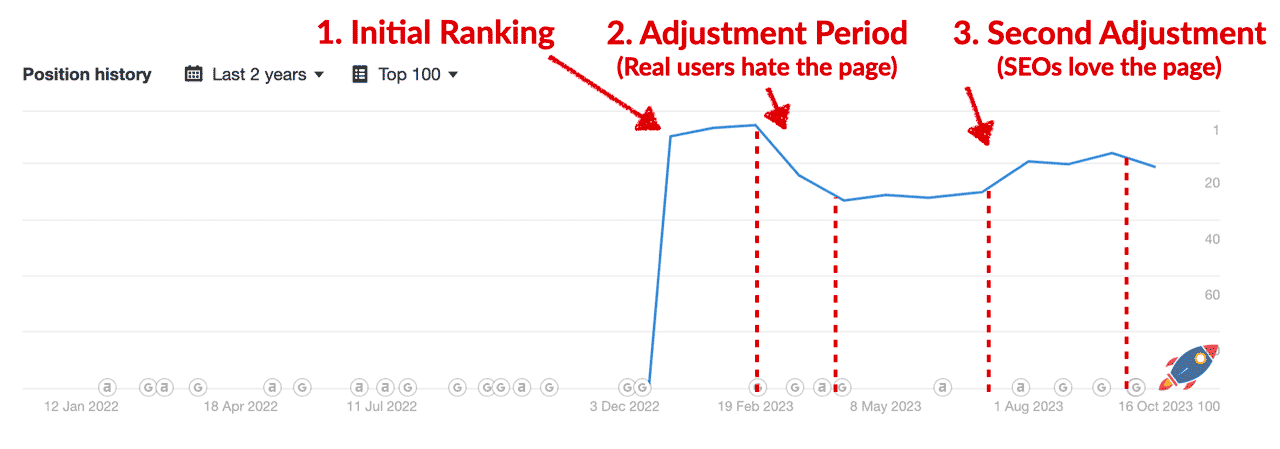

To my surprise, the webpage ranked on page 1 of Google...

WITHIN 24 HOURS

The page instantly shot up to page 1, even briefly appearing in position #1. It's normal for new pages to bounce around, and it didn't stay there for very long.

Ahrefs tracked it in position #6 (see below).

To my astonishment, Google had just ranked an illegible page based purely on entities.

However, what happened next is equally interesting....

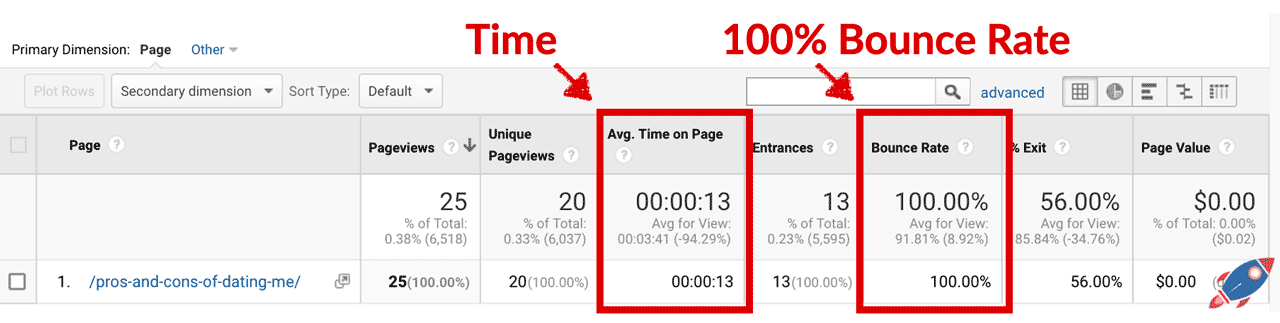

Shortly after its initial rise, the page began to drop in rankings.

This is likely when the page entered the testing phase (phase 2 of rankings), accumulating data from the user interactions with the page.

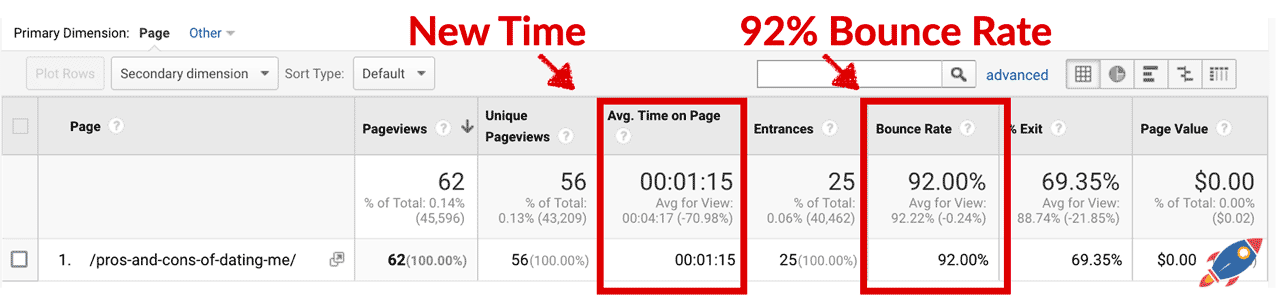

Due to the page being completely illegible, any visitor landing on the page quickly returned to Google to click on a different result. As confirmed by the Google Analytics graph, the bounce rate was 100% and the time on page was a measly 13 seconds (probably from the users being flabbergasted by the weird page).

As Google learned that the page did NOT satisfy the user intent, it re-adjusted the score of the page, lowering the ranking.

Due to negative user interactions, the page dropped by approximately 15 positions. This change occurred as Google took time to readjust the page's final score, after which it remained stable for many months.

That is until...

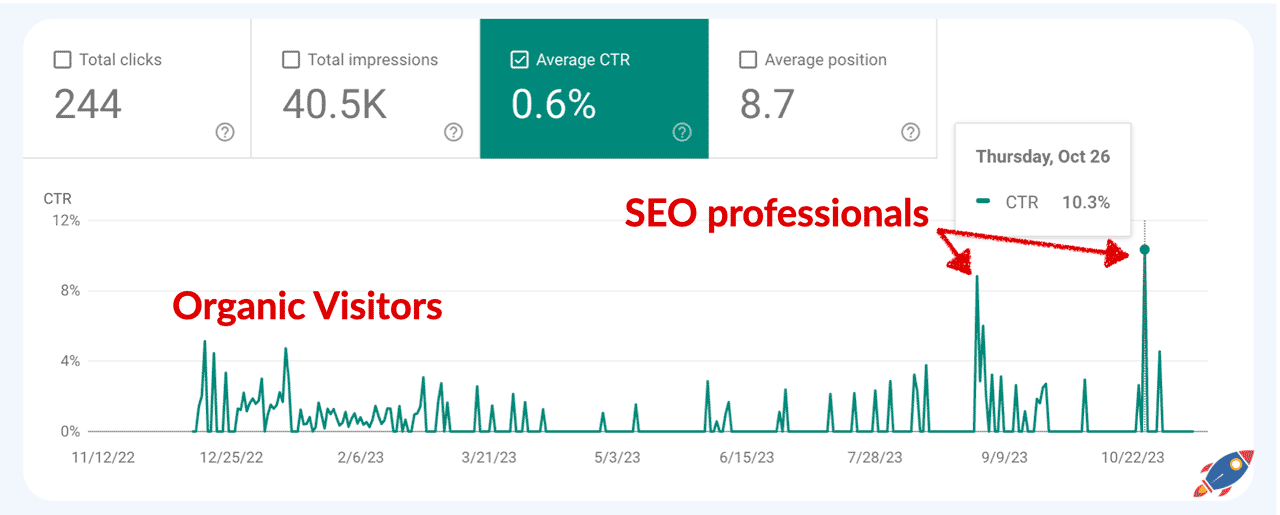

I finally told some SEO professionals from my inner circle about the experiment and, out of curiosity, they Googled the page, actively searching for it and clicking on it to see it for themselves.

The new visits, this time exclusively from SEO professionals interested in the test, produced improved user metrics (almost 1 extra minute of user engagement), and Google subsequently increased the page's rankings.

Hilariously, Google Search Console shows the exact moments in which SEO professionals actively searched for the experimental page and significantly increased its click-through rate / time on page metrics. When Google noticed all the incoming searches (and, importantly, that no one was returning to Google to search again for the same term after viewing the page), the page increased in rankings.

Putting it all together, it becomes evident that the Google algorithm is influenced by a combination of entities and user feedback. Initially, rankings are determined primarily by related entities.

This is swiftly followed by a readjustment phase, where user feedback comes into play, potentially impacting the page's rankings either positively or negatively.

4. Calculating Rank Score

During the recent U.S. v. Google trial, we learned about Google's adjusted scoring mechanism. This is corroborated by my experiment and gives us a better understanding of how Google ranks webpages.

Here's how I believe Google ranks webpages:

The way you initially craft your page will play a big role in determining for which keywords (if any) Google decides to test your page.

I also believe it plays a crucial role in providing you with an initial score (which is VERY important). Within the Google trial, they shared an example of the feedback loop that Google uses to adjust web rankings after they have an initial score.

To illustrate, let's take an overly simplified model of the Google algorithm.

(The Google ranking algorithm comprises hundreds of ranking factors that are carefully weighed against each other to determine the search results. While we describe ranking factors with common representative names such as links, domain authority, content relevance, speed and user experience, Google uses drastically different terminology within its algorithm. For the sake of understanding entity-based ranking, I'm omitting all the other factors from the simplified model.)

Grouping all the traditional ranking factors together, we can imagine:

EXAMPLE:

Website #1: [Initial Google Ranking Score] = 94

Website #2: [Initial Google Ranking Score] = 87

Website #3: [Initial Google Ranking Score] = 67

Website #4: [Initial Google Ranking Score] = 59

Website #5: [Initial Google Ranking Score] = 58

At a basic level, the higher your ranking score, the higher you rank in the search results.

If we assume that Google then proceeds to test pages in order to modify the score based on user experience, it might look like this:

[Initial Google Ranking Score] +/- [User Experience Modifier] = Adjusted Ranking Score

Which means that the final adjusted score is always influenced by both the content on the page (entity density) and the user experience. Even if a page initially has the highest score due to excellent SEO optimization, the user experience might not support it.

For example, suppose website #1 had a poor user experience in comparison to the other websites, and Google applied a -10 deduction to its score as a result. With each of the other websites receiving its own modifier, the adjusted scores might look like this:

Website #2: [Adjusted Ranking Score] = 87 + 12 = 99

Website #3: [Adjusted Ranking Score] = 67 + 20 = 87

Website #1: [Adjusted Ranking Score] = 94 - 10 = 84

Website #4: [Adjusted Ranking Score] = 59 + 5 = 64

Website #5: [Adjusted Ranking Score] = 58 - 3 = 55

We could potentially see website #1 dropping from position #1 to position #3.
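The worked example above can be reproduced in a few lines. The scores and modifiers are the illustrative numbers from this section, not real Google values, and the single additive modifier is the same simplification used in the text.

```python
# Illustrative numbers from the example above, not real Google scores.
initial_scores = {"Website #1": 94, "Website #2": 87, "Website #3": 67,
                  "Website #4": 59, "Website #5": 58}
ux_modifiers = {"Website #1": -10, "Website #2": 12, "Website #3": 20,
                "Website #4": 5, "Website #5": -3}


def rank(scores: dict) -> list:
    """Order sites from highest to lowest score."""
    return sorted(scores, key=scores.get, reverse=True)


# [Initial Google Ranking Score] +/- [User Experience Modifier] = Adjusted Ranking Score
adjusted = {site: initial_scores[site] + ux_modifiers[site] for site in initial_scores}

print(rank(initial_scores))                    # Website #1 is first
print(rank(adjusted))                          # Website #2, #3, #1, #4, #5
print(rank(adjusted).index("Website #1") + 1)  # 3
```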

The secondary modifier (user experience) is constantly updated based on the user interactions from within the search results.

In a practical sense, this means that in order to improve rankings (achieve a better rank score), we could:

A) Increase entity density (relevance)

B) Improve user experience

It's important to note that even if you enhance the entity density, it might not yield significant benefits unless it is also supported by human interaction.

If Google determines that users hate your webpage, throwing in more entities might help initially, but it won't solve the long-term problem, as Google is likely to lower the page back down by applying an EVEN LARGER negative modifier.

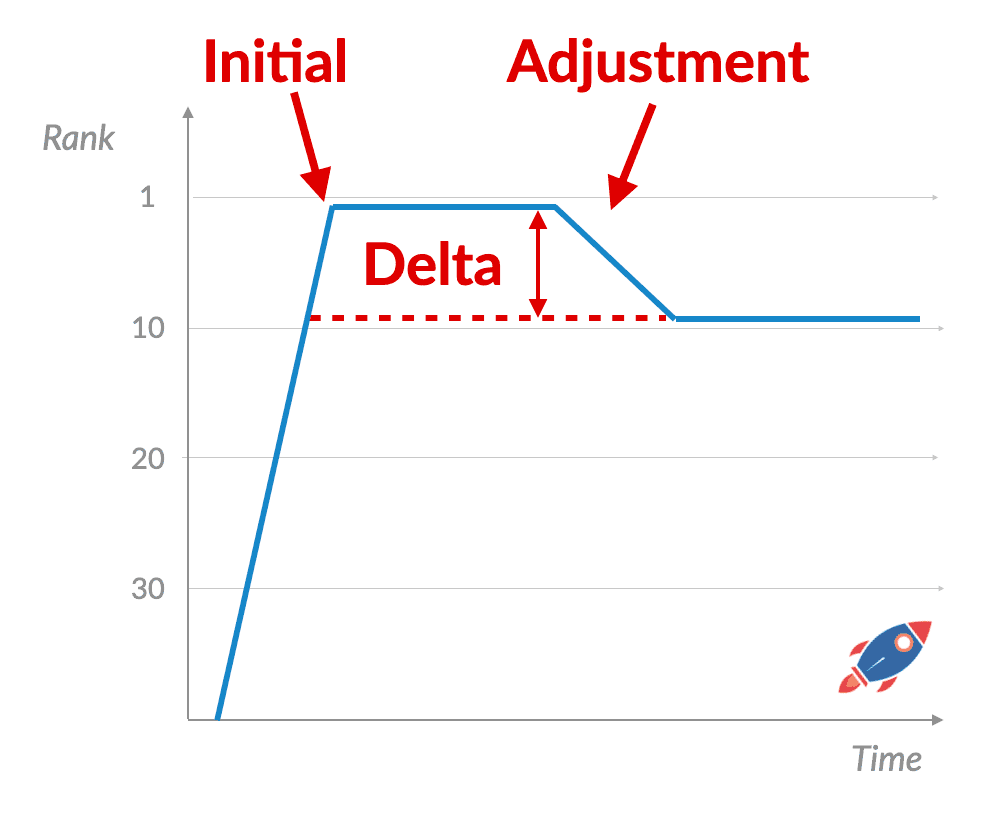

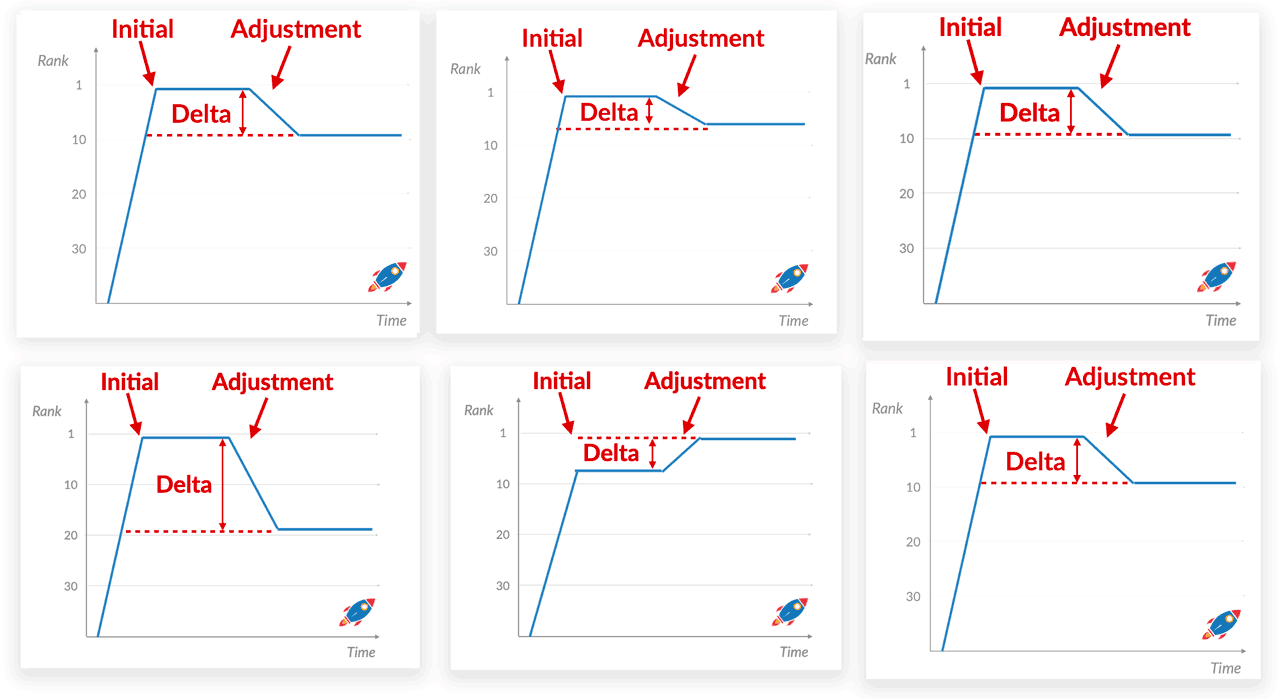

5. Initial Score VS Final Score

Where it gets really interesting is measuring the delta between the initial rankings (based purely on entities) and the adjusted rankings, after accounting for user interactions.

For example, if your initial rank score results in a ranking of #8 due to a high related entity density, but Google then reduces your ranking to #18 due to user interactions, we can imagine a -10 point gap.

While I'm sure Google uses a sophisticated scoring system, it is entirely possible that it measures the discrepancy between the initial entity-based ranking and the adjusted, user-backed ranking.

If this happens once, then maybe it's just one 'bad' page that was well optimized for that term thanks to its entities... but then failed to meet human expectations.

What makes this even more interesting is that Google can do this across ALL your pages.

In the example above, Google could measure how often a page increases in rankings after the user experience adjustment period... how often it decreases... and by how much!

(And guess what, if Google notices that your pages ALWAYS decrease after the user experience adjustment period... then it might decide "Why wait to decrease it?")

I'm almost certain that Google is measuring this metric and this is exactly what they mean when they say:

"Avoid search engine-first content" (Source)

My interpretation is that if you plan on achieving an initially high score by using a high density of entities, then you better make sure the users like the page.

I also suspect that this may be a component tied to the Helpful Content Update.

If, hypothetically, they notice that on average, after measuring human interaction on your pages, your pages usually drop by 10 points, Google might decide to apply a direct -10 penalty to all pages on the domain...

That way, they won't need any further adjustment!
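Here is a sketch of the speculative site-wide measurement described above: record each page's position before and after the user-experience adjustment, average the change, and, if every page dropped, use that average as an up-front domain modifier. All names and the `min_pages` cutoff are hypothetical, and whether Google does anything like this is the author's hypothesis rather than a documented mechanism.

```python
from statistics import mean
from typing import Dict, Tuple

# URL -> (position from the entity-based initial ranking, position after user data).
Positions = Dict[str, Tuple[int, int]]


def position_deltas(pages: Positions) -> Dict[str, int]:
    """Positive = the page moved up after the adjustment period; negative = it dropped."""
    return {url: initial - adjusted for url, (initial, adjusted) in pages.items()}


def site_modifier(pages: Positions, min_pages: int = 3) -> float:
    """Hypothetical domain-wide modifier: the average delta, but only if every page dropped."""
    deltas = list(position_deltas(pages).values())
    if len(deltas) < min_pages or any(d >= 0 for d in deltas):
        return 0.0  # too little evidence, or some pages held or improved
    return float(mean(deltas))


site = {"/a": (8, 18), "/b": (5, 14), "/c": (3, 14)}
print(position_deltas(site))  # {'/a': -10, '/b': -9, '/c': -11}
print(site_modifier(site))    # -10.0
```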

6. Not All Entities Are Equal

(Watch Out For Fake Entities)

Now for the burning question... if a high related entity density can help rank, which entities do I add?

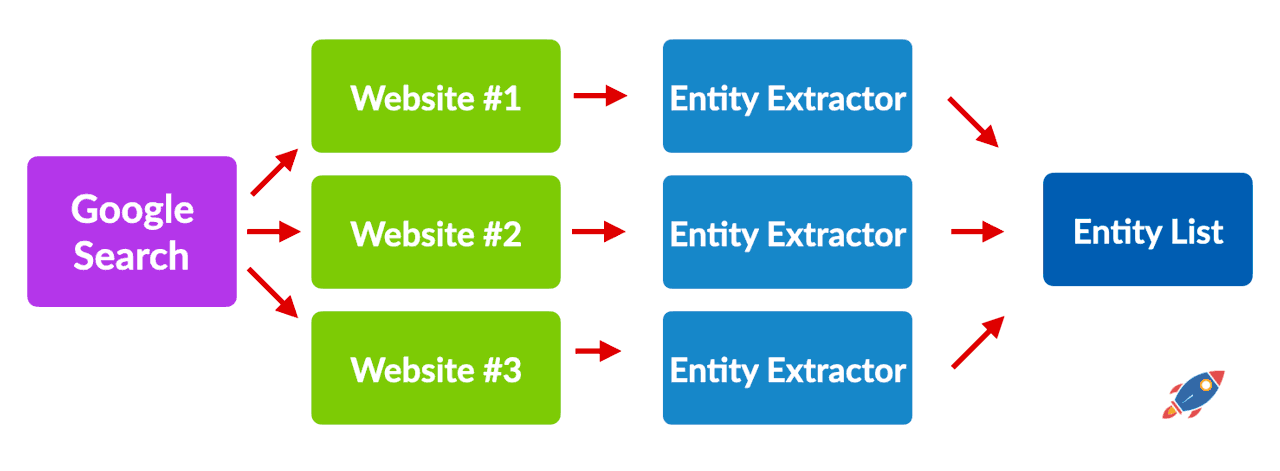

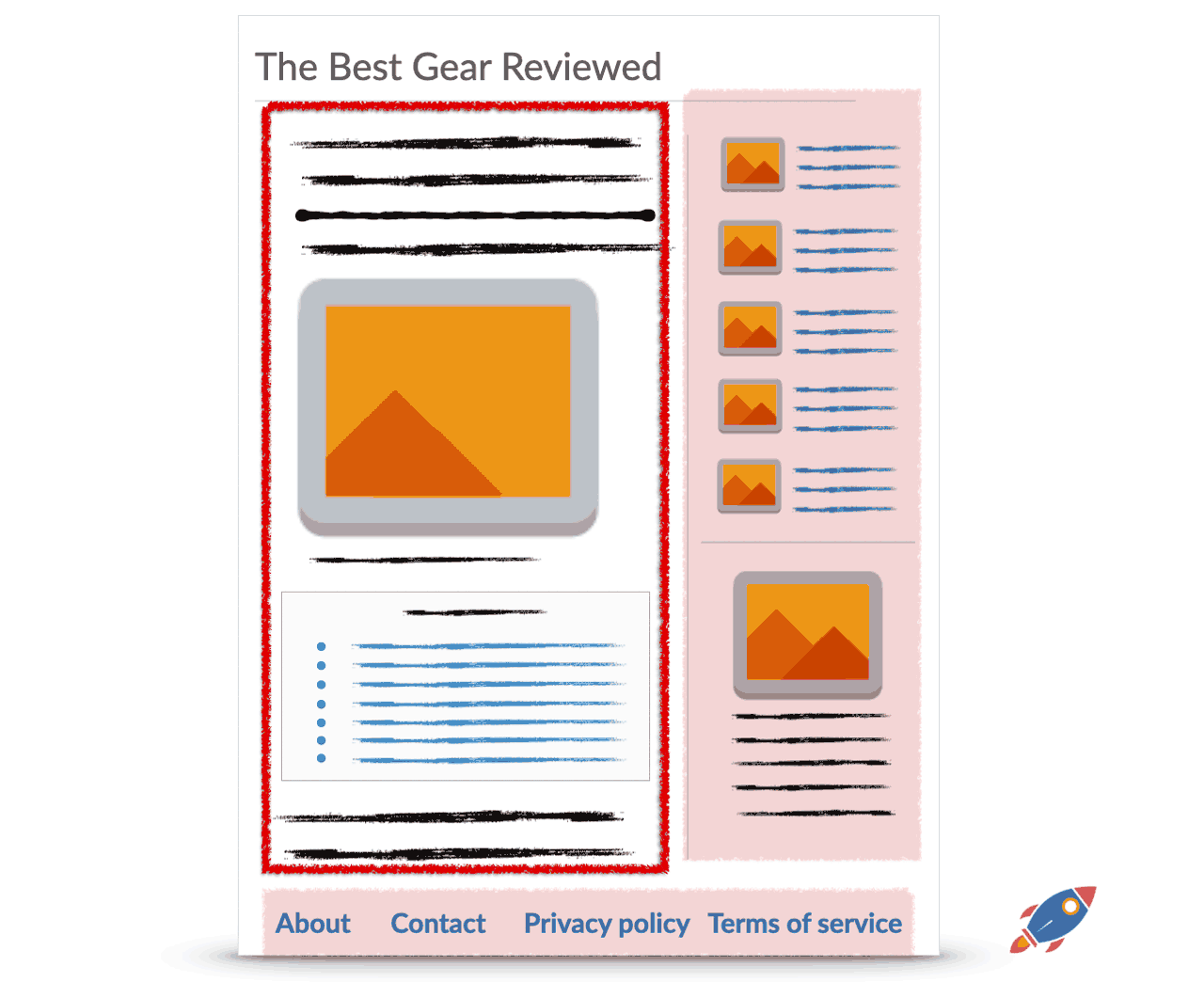

While we'll never know exactly which entities the Google algorithm seeks out for a certain query, we can get fairly close. Determining the most relevant entities for a query can be accomplished through various methods ranging from looking up entities from within Wikidata, to using human intuition, to using SEO tools that locate entities by reverse engineering the current search engine listings.

My preferred method is to use tools designed explicitly for this purpose because they can process large quantities of data in a short period of time.

Here's how most entity-based SEO tools (such as On-Page.ai) function:

1. Search for a keyword on Google.

2. Look at all the pages ranking for that keyword.

3. Identify the entities that the top ranking pages have in common.

Then the tools compile a list of the top entities for you to use. This can act as a roadmap for ranking.
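The three steps above can be sketched as a simple frequency count: given the entities found on each top-ranking page, keep the ones that appear on at least a given share of pages. The per-page entity sets are made-up inputs (in practice they would come from an NLP extractor run on each page), and the 50% cutoff is an arbitrary assumption, not how On-Page.ai or any specific tool builds its list.

```python
from collections import Counter
from typing import List, Set, Tuple


def common_entities(pages: List[Set[str]], min_share: float = 0.5) -> List[Tuple[str, int]]:
    """Entities found on at least min_share of the pages, most common first."""
    counts = Counter(entity for page in pages for entity in page)
    cutoff = min_share * len(pages)
    return [(entity, n) for entity, n in counts.most_common() if n >= cutoff]


# Made-up entity sets for four top-ranking pages.
top_pages = [
    {"running", "cushioning", "marathon", "Nike"},
    {"running", "cushioning", "trail"},
    {"running", "marathon", "heel drop"},
    {"running", "cushioning", "Nike"},
]
print(common_entities(top_pages))
```

Ties (here "marathon" and "Nike", each on 2 pages) can come out in either order, because sets do not preserve insertion order.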

On a personal note, I feel that with a good entity roadmap, I have a much easier time ranking for competitive terms...

I believe the worst thing an SEO professional can do is try to rank with a poor roadmap of incorrect entities. Not only can it make ranking extremely time-consuming, it can also lead to over-optimization and many awkward moments as you try to force incorrect strings of words into your text.

Source Of Entities

In their simplest form, entities are words with meanings attached to them that computers can understand. To be more technical, they are identifiers linked to words, representing concepts or objects, all stored in a database.

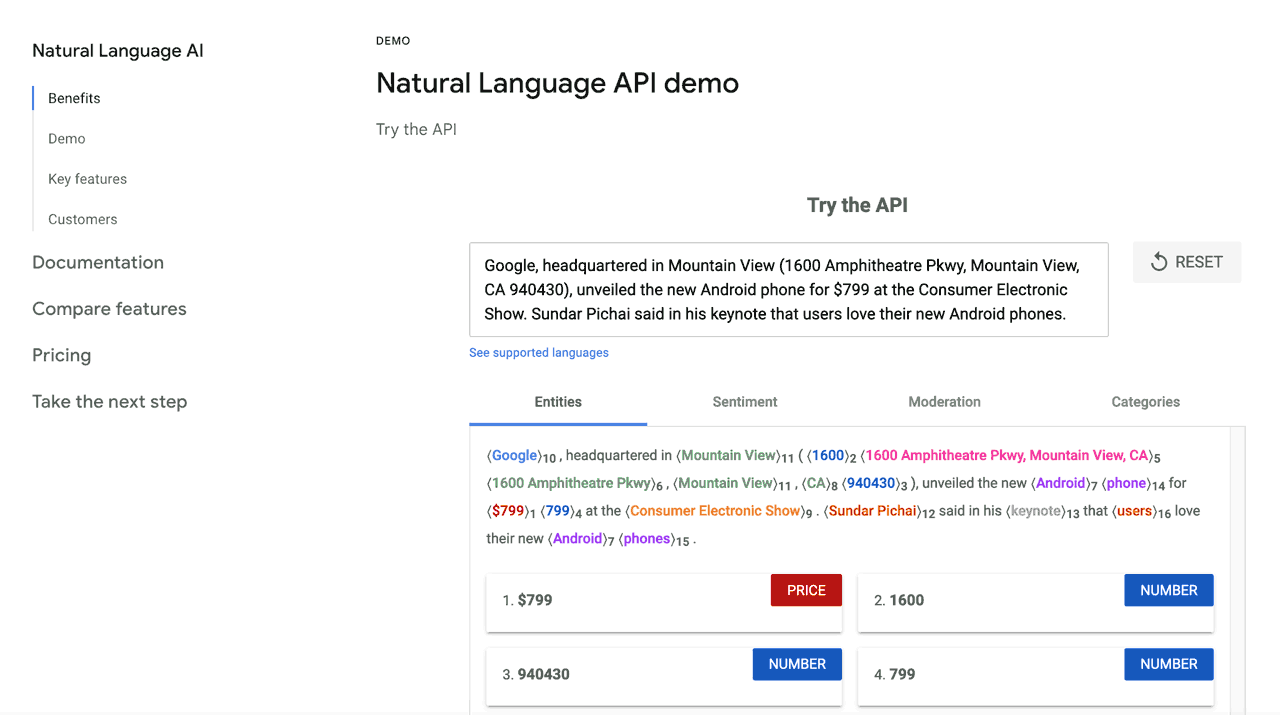

In order to extract entities from a webpage (or any text document), the text must first be parsed and processed through a natural language processing (NLP) system designed to extract entities. This is the process that most SEO tools use when they provide you with suggestions.

There are many different NLP systems available and you can even build your own.

Some popular entity extractors include:

- TextRazor

- Amazon Comprehend

- NLTK

- IBM Watson Natural Language

And of course...

Google's own Google Natural Language AI

Yes, you can actually use Google's own NLP AI to extract entities. This is one of the many AI engines that On-Page.ai uses to provide you with recommendations because it gives us the best understanding of what Google thinks about your page.
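If you want to experiment with Google's Natural Language API directly, its `documents:analyzeEntities` method returns JSON with an `entities` array in which each entity has a `name`, a `type`, a `salience` score, and optional `metadata` (such as a `wikipedia_url`). The sketch below only filters a response of that shape. The sample response is hand-written, not real API output; the skipped types and the 0.05 salience cutoff are arbitrary choices; and the authenticated request itself is left out.

```python
import json
from typing import List, Tuple

# Hand-written example shaped like an analyzeEntities response (not real output).
SAMPLE_RESPONSE = json.loads("""
{
  "entities": [
    {"name": "Apple", "type": "ORGANIZATION", "salience": 0.62,
     "metadata": {"wikipedia_url": "https://en.wikipedia.org/wiki/Apple_Inc."},
     "mentions": []},
    {"name": "iPhone", "type": "CONSUMER_GOOD", "salience": 0.25,
     "metadata": {}, "mentions": []},
    {"name": "2023", "type": "DATE", "salience": 0.0,
     "metadata": {}, "mentions": []}
  ],
  "language": "en"
}
""")

# Numeric-style types that rarely help as topical suggestions (an assumption).
SKIP_TYPES = {"DATE", "NUMBER", "PRICE", "PHONE_NUMBER", "ADDRESS"}


def salient_entities(response: dict, min_salience: float = 0.05) -> List[Tuple[str, str, float]]:
    """Return (name, type, salience) for concept-like entities, most salient first."""
    kept = [
        (e["name"], e["type"], e.get("salience", 0.0))
        for e in response.get("entities", [])
        if e["type"] not in SKIP_TYPES and e.get("salience", 0.0) >= min_salience
    ]
    return sorted(kept, key=lambda item: item[2], reverse=True)


print(salient_entities(SAMPLE_RESPONSE))
# [('Apple', 'ORGANIZATION', 0.62), ('iPhone', 'CONSUMER_GOOD', 0.25)]
```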

When we extract entities... we want a list of entities that Google recognizes, and that is exactly why we use Google's own NLP to extract entities. So why doesn't everyone use Google's NLP?

Mainly price.

Last time I checked, Google's NLP was approximately 100x more expensive than a solution like TextRazor, which is why many (if not all) of the cheap SEO tools use TextRazor or NLTK... or worse, fake it with ChatGPT.

Some trickier tools will claim to use Google's NLP but only do so partially, in limited quantities or hide it behind an option that isn't enabled by default.

Watch Out For Fake Entities

As entities became an SEO buzzword, many SEO tools started to claim they were providing lists of entities... without actually doing so.

First, let's understand entities. The word "party" can have multiple meanings, and each meaning is a separate entity.

You can have:

- "Let's go to the party!" (event)

- "Let's party!" (verb)

- "The political party" (organization)

- "We have a party of 5 waiting for a table" (group)

- "I'm at the party" (location)

While the word is the same, it carries a different meaning depending on the context. Machine learning algorithms interpret words based on their context, which is why Google knows whether you're searching for Apple the company or apple the fruit.
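To illustrate how one word can map to different entities, here is a deliberately simple, Lesk-style disambiguation sketch: pick the candidate whose cue words overlap most with the surrounding text. Real NLP systems (Google's included) rely on trained models rather than hand-written cue lists, and the entity IDs and cue words below are hypothetical.

```python
import re
from typing import Dict, Set

# Hypothetical entity IDs and cue words for the surface form "apple".
CANDIDATES: Dict[str, Set[str]] = {
    "ENTITY:apple_inc": {"iphone", "mac", "stock", "company", "ios", "store"},
    "ENTITY:apple_fruit": {"pie", "tree", "juice", "eat", "orchard", "recipe"},
}


def disambiguate(context: str, candidates: Dict[str, Set[str]]) -> str:
    """Return the candidate entity whose cue words overlap most with the context."""
    words = set(re.findall(r"\w+", context.lower()))
    return max(candidates, key=lambda entity: len(candidates[entity] & words))


print(disambiguate("Apple stock rose after the new iPhone launch", CANDIDATES))  # ENTITY:apple_inc
print(disambiguate("Grandma's apple pie recipe", CANDIDATES))  # ENTITY:apple_fruit
```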

Unfortunately, there are quite a few SEO tools providing 'fake entities'. These are just strings of words (sometimes long strings) that they found on many pages.

For example, "best lightweight running shoes" is NOT an entity.

That's a string of words...

If you ever see a long string of words that looks more like a long-tail keyword, run. That isn't an entity, and suggestions like that are more likely to lead you astray than anything else. Unfortunately, this is all too common within the SEO industry.

If you're interested in my personal ranking strategy, entities and want to learn more about On-Page.ai inner workings, then read on.

Extracting The Correct Entities From The Content

While we were developing On-Page.ai, we tested all the most popular NLP systems to see which ones provided the best results. After months of testing (and even developing our own custom solution), we determined that Google's own NLP kept on providing the most accurate list of entities and their classification.

When comparing entity solutions, it's not about having more words, it's about having the CORRECT words. This is one of the main ingredients and methods that we use at On-Page.ai in order to provide you with the best list of related entities.

I like to say "We pay Google and pass the results on to you." The base costs associated with this method are quite high; however, I believe it is worth it to get the absolute best results.

However... it's not enough to just use Google's NLP and call it a day.

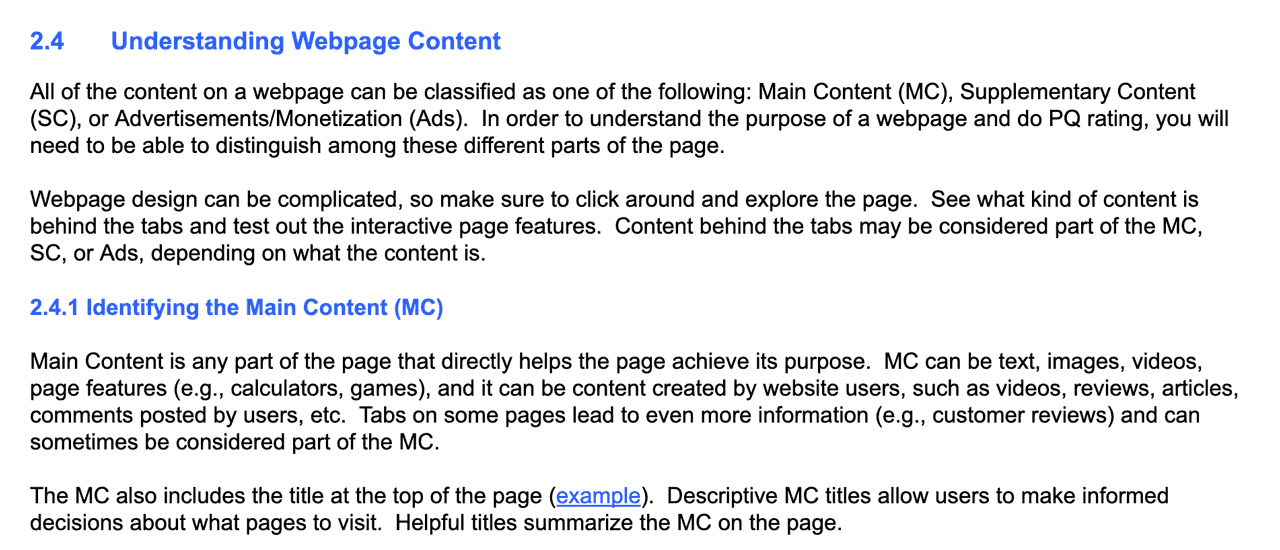

That's because when Google crawls a page, I believe it breaks the page down into multiple sections such as the header, footer, sidebar, main content and supplemental content (and according to the search quality evaluator guidelines, it does).

When Google is scoring a webpage, I believe it pays attention primarily to the main content of the page. That way, if you have a sidebar discussing different topics or a footer disclaimer, it won't accidentally rank the page for the wrong thing.

In order to provide the most accurate list of entities, we spent a year developing and refining On-Page.ai's own main content extraction system so it could break down pages like Google does.

Here's a visual representation of how On-Page.ai's main content extraction looks:

By identifying the header, sidebar, footer, advertisements and main content, we can better focus in on what really matters to Google.

This is a key distinction of On-Page and it is another reason why it provides the most accurate results.

Unfortunately, most competitors are processing words from the footer, sidebar and even advertisements!

(If you've ever seen weird suggestions from competitors such as "Add 48 H2 sub-headlines" or "Add 30 images", this is why. They are picking those up from the sidebar/header/footer.)
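A crude version of main content extraction can be built on HTML5 semantic tags: ignore everything inside `<header>`, `<footer>`, `<nav>` and `<aside>`, then count sub-headlines and images only in what remains. This sketch uses Python's standard `html.parser` and is a simplifying assumption, not how On-Page.ai or Google segments pages. Many sites don't use these tags, so a tag-based approach would miss a lot.

```python
from html.parser import HTMLParser
from typing import List

BOILERPLATE_TAGS = {"header", "footer", "nav", "aside"}


class MainContentParser(HTMLParser):
    """Collects text, H2 count, and image count outside boilerplate regions."""

    def __init__(self) -> None:
        super().__init__()
        self.skip_depth = 0  # > 0 while inside header/footer/nav/aside
        self.text_parts: List[str] = []
        self.h2_count = 0
        self.img_count = 0

    def handle_starttag(self, tag, attrs):
        if tag in BOILERPLATE_TAGS:
            self.skip_depth += 1
        elif self.skip_depth == 0:
            if tag == "h2":
                self.h2_count += 1
            elif tag == "img":
                self.img_count += 1

    def handle_endtag(self, tag):
        if tag in BOILERPLATE_TAGS and self.skip_depth > 0:
            self.skip_depth -= 1

    def handle_data(self, data):
        if self.skip_depth == 0 and data.strip():
            self.text_parts.append(data.strip())


page = """
<header><nav><h2>Menu</h2><img src="logo.png"></nav></header>
<main><h1>SEO Checklist</h1><h2>Google Checklist Recap</h2>
<p>Check your title tag.</p><img src="seo-checklist.jpg" alt="seo-checklist"></main>
<aside><h2>Popular posts</h2><h2>Newsletter</h2></aside>
<footer><p>Disclaimer</p></footer>
"""
parser = MainContentParser()
parser.feed(page)
parser.close()
print(parser.h2_count, parser.img_count)  # 1 1
print(" ".join(parser.text_parts))  # SEO Checklist Google Checklist Recap Check your title tag.
```

Counting the whole page instead would report 4 sub-headlines and 2 images, which is the kind of inflated suggestion described above.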

Being able to properly identify what is important on a page is a critical element to determining the most relevant entities for a term.

Going Above And Beyond

As far as I know, nearly every SEO tool out there is focused on merely matching what the top-ranking websites are doing... and I believe this is the wrong approach if you aim to beat the competition.

Think about it, if you are only copying the top ranking websites, then in the best case scenario, you're going to end up being a mediocre clone of the #1 ranking website. Not only is this bad SEO, it's also not great for your audience.

Instead, my philosophy at On-Page.ai has always been to go above and beyond the competition. That way, you can actually surpass and beat the competition, and have them trying to copy YOU.

This is why we spent years developing and perfecting our own custom system that returns "Highly Related Words".

These are entities that your competition may or may NOT be using within their content. So with On-Page.ai, you get multiple levels of optimization:

1. First, you optimize using the entities from the search landscape; this is the "Recommended Words" section. (It uses Google's NLP and our main content extraction.)

2. Then, we help you go above and beyond the competition, providing you with terms that the competition may have forgotten to use in their text, this is called the "Highly Related Words".

So while everyone else is trying to copy each other, you can be focused on creating better content than the competition.

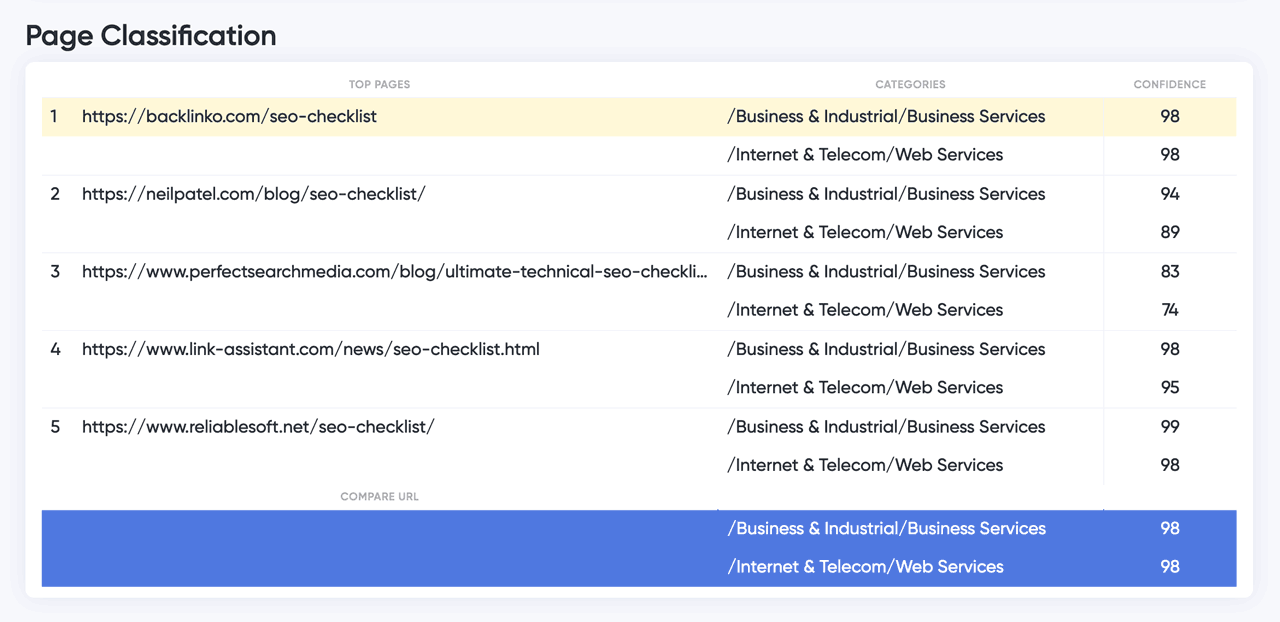

(Optional Categorization)

This isn't a ranking factor and isn't essential... however I did feel the need to clarify that we (On-Page.ai) actually help you optimize on 3 different levels.

The third and last way we help you optimize is by providing a list of how Google's NLP categorizes the top-ranking pages. While I need to emphasize that this is not a ranking factor, it can provide crucial clues as to how you stack up against the competition.

If all the top ranking pages are in a specific category, for example,

/Business & industrial/

And you notice that your page is classified in the

/Arts & Entertainment/

It may warrant a second look. We also developed a unique system that provides you with word suggestions to get into the top ranking category.

(It's really cool. Just like Google continuously learns from its users, we continuously learn from Google's search results.)

7. My Approach To Ranking

(Step by Step Method)

My approach to creating a top ranking page is quite simple:

I aim to have a higher related entity density than the competition while making my content captivating and engaging for users.

Having a high entity density provides me with a high initial ranking score, and then I maintain it (or even exceed it) by providing users with exactly what they want when they land on the page. (This is also the design philosophy of the Stealth Writer.)

Step 1. I identify the top-ranking entities by searching for the term using On-Page.ai.

Step 2. Then I write an article, making sure to include the word suggestions from the first 3 rows of entities in the "Recommended Words".

I try to repeat the entities that have an importance level of 8 or above (denoted by the number beside each recommended word) at least 3-5 times within my article.

I also include every single "Highly Related Word" within my content. Other than that, I focus on writing naturally while focusing on the user intent. I'm constantly asking myself: "What does the reader want to find on this page? What is the problem he is trying to solve?" and that guides me.
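The repetition rule above can be turned into a quick self-check. In this sketch, the importance scores stand in for the number shown beside each recommended word; the sample article, words, and scores are made up, while the threshold of 8 and the minimum of 3 uses come from the step above.

```python
import re
from typing import Dict


def count_phrase(text: str, phrase: str) -> int:
    """Case-insensitive, whole-word count of a (possibly multi-word) phrase."""
    pattern = r"\b" + r"\s+".join(map(re.escape, phrase.lower().split())) + r"\b"
    return len(re.findall(pattern, text.lower()))


def underused_entities(article: str, importance: Dict[str, int],
                       min_importance: int = 8, min_uses: int = 3) -> Dict[str, int]:
    """Map each high-importance entity used fewer than min_uses times to its count."""
    underused = {}
    for entity, score in importance.items():
        if score < min_importance:
            continue  # lower-importance words are not held to the repetition rule
        uses = count_phrase(article, entity)
        if uses < min_uses:
            underused[entity] = uses
    return underused


article = ("Dating me has pros. Dating me has cons. "
           "A relationship with me is fun. Dating me is an adventure.")
importance = {"dating": 9, "relationship": 8, "humor": 5}
print(underused_entities(article, importance))  # {'relationship': 1}
```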

Step 3. I review my article, making sure to use the top related entities within my title, main headline (H1) and sub-headlines (H2) of my article.

I want every sub-headline to include at least ONE relevant entity.

For example, if I'm trying to rank for the term "SEO Checklist", then I would avoid a sub-headline such as "Conclusion".

Instead, I would use a sub-headline such as "Google Checklist Recap", which includes 2 entities from my list: "Google" and "Checklist".
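Step 3's rule that every sub-headline should contain at least one relevant entity is easy to check automatically. A minimal sketch, assuming the headings and entity list are already available as plain strings and reusing the "SEO Checklist" example (the function name is hypothetical):

```python
import re
from typing import List


def headings_missing_entities(headings: List[str], entities: List[str]) -> List[str]:
    """Return the headings that contain none of the entities (whole-word, case-insensitive)."""
    patterns = [re.compile(r"\b" + re.escape(e) + r"\b", re.IGNORECASE) for e in entities]
    return [h for h in headings if not any(p.search(h) for p in patterns)]


print(headings_missing_entities(
    ["Google Checklist Recap", "Conclusion", "Local SEO Basics"],
    ["Google", "checklist", "local SEO"],
))  # ['Conclusion']
```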

Step 4. I then add multiple images to my article. (Usually 3-4 but sometimes many more as they help retain users on my page.)

I name ONE of the image files after my main keyword and set its alt text to my target keyword.

For example:

seo-checklist.jpg alt="seo-checklist"

The rest of the images are named after combinations of the top entities.

For example,

google.jpg alt="Google"

google-checklist.jpg alt="Google checklist"

local-seo.jpg alt="Local SEO"

I suspect that Google trusts the filename more than they do the alt-text so I name both the same.
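The naming scheme in Step 4 can be generated from the entity list: slugify each phrase for the filename and reuse the phrase as alt text. The helper names and the `.jpg` extension are assumptions; for the main keyword this uses the readable phrase as alt text rather than the slug shown in the first example; and whether Google trusts filenames more than alt text is the author's suspicion, not something this code tests.

```python
import html
import re
from typing import List, Tuple


def slugify(phrase: str) -> str:
    """Lowercase, hyphen-separated filename stem, e.g. 'Local SEO' -> 'local-seo'."""
    return re.sub(r"[^a-z0-9]+", "-", phrase.lower()).strip("-")


def image_names(main_keyword: str, entity_combos: List[str],
                ext: str = "jpg") -> List[Tuple[str, str]]:
    """(filename, alt text) pairs: the main keyword first, then each entity combination."""
    return [(f"{slugify(p)}.{ext}", p) for p in [main_keyword, *entity_combos]]


for filename, alt in image_names("SEO checklist", ["Google", "Google checklist", "Local SEO"]):
    print(f'<img src="{filename}" alt="{html.escape(alt)}">')
```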

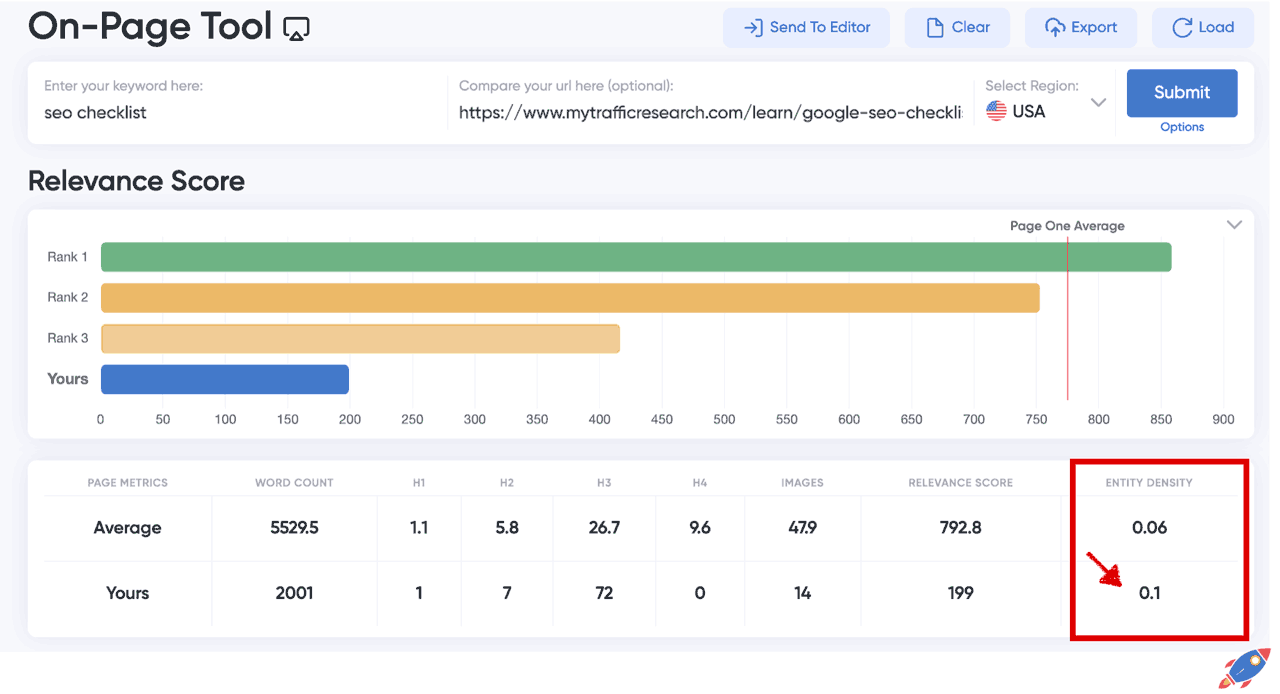

Step 5. When I'm confident in the SEO optimization and the engagement of the article, I publish it on my site. This gives me a publicly accessible URL that I can check immediately after publishing, before Google has a chance to crawl the page.

Step 6. I check the page with another On-Page.ai scan and insert my URL into the "Compare URL" section.

This gives me insights into the page's performance in comparison to the competition.

Specifically, I'm looking at my entity density. I want it to be higher than the average.

In the screenshot above, the score of 0.1 is higher than the average of 0.06 so this would be OK. That said, depending on the query, I might also want to improve the overall relevance score but that has less of an impact post-Helpful Content Update.

Step 7. When I confirm that I have a higher entity density than the competition, I share my page with the public and let Google start testing it with real users.

By sharing the page, I mean creating at least one link that Google can use to discover the page. It can be as simple as posting about it on Twitter, posting it on Reddit, sharing it on another website, etc.

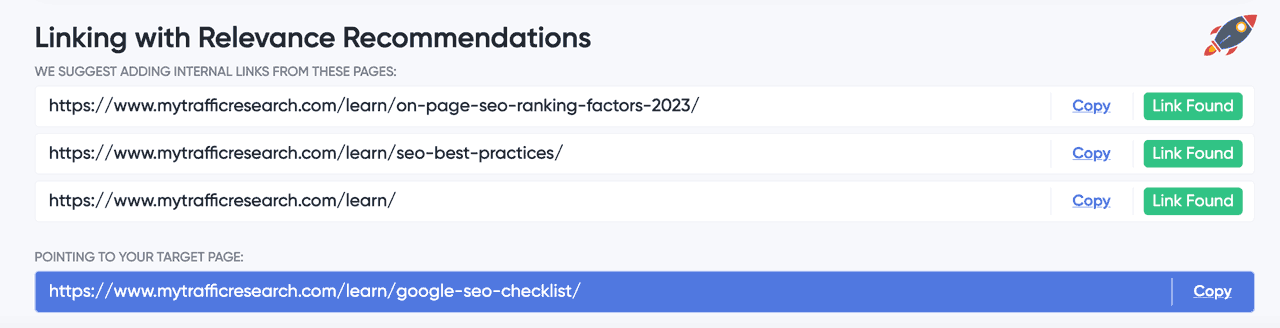

Bonus Step 8 (Optional)

Once the page is posted, I add 3 internal links from the most related pages, as recommended during the On-Page.ai scan. This funnels power to the page and helps avoid orphan pages, improving the odds that my page will rank for a long time.

8. Final Words On Entities

(Feedback & Limited Time Offer)

This project represents a significant time investment: over 80 man-hours over a 1-year period from my team and me. I'm sharing it publicly because I believe these insights may help you rank better and potentially even recover from a Google penalty (especially if your pages consistently fit the optimized-but-not-supported-by-people trend).

Ultimately, I believe that entity based ranking is the easiest way to increase rankings if done correctly. As long as the user experience can support it, I have seen incredible results in a short period of time.

If you wish to access the same source of entities used within this case study, try out On-Page.ai.

Should you find this resource valuable, feel free to share this page within your professional network, Facebook groups, Slack/Skype/Discord chats and forums.

And if you wish to contribute any discoveries, case studies or any corrections,

please email me at team@on-page.ai

Sincerely,

- Eric Lancheres

Presented By

Eric Lancheres

Eric Lancheres is one of the most respected SEO experts in the world. He has been featured as an authority at Traffic & Conversion Summit, SEO Rock Stars, SEO Video Show and countless other interviews and podcasts.

For 10 years, he researched SEO trends, coached business owners and agencies, conducted experiments, and published his findings.

Eric has poured all his accumulated knowledge, best-kept secrets, and proven processes into the development of On-Page.ai, making it the go-to tool for effective online ranking.

© Copyright 2023 On-Page. All Rights Reserved